
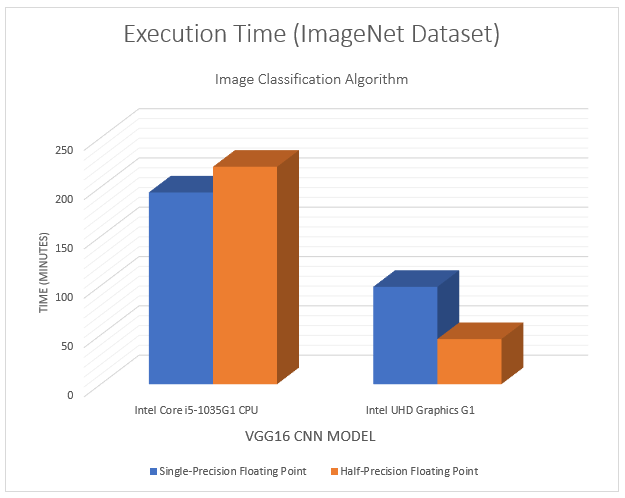

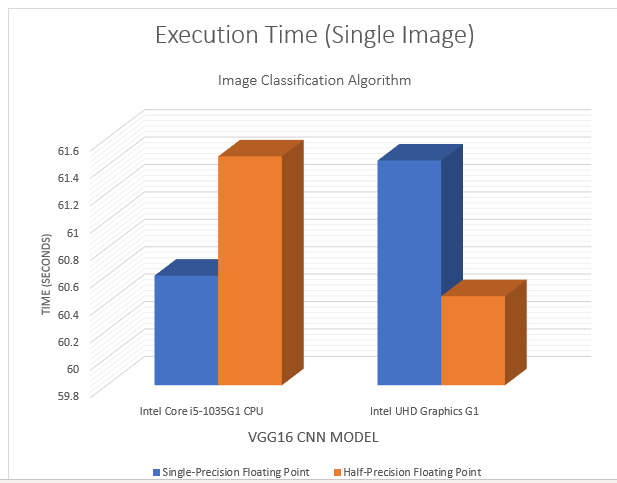

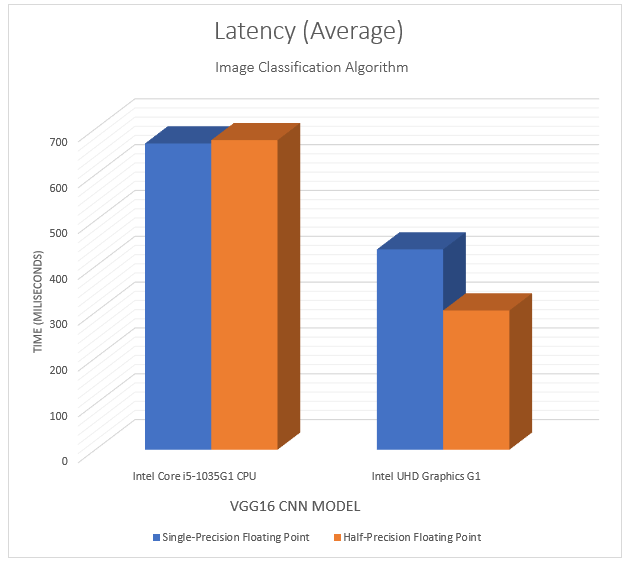

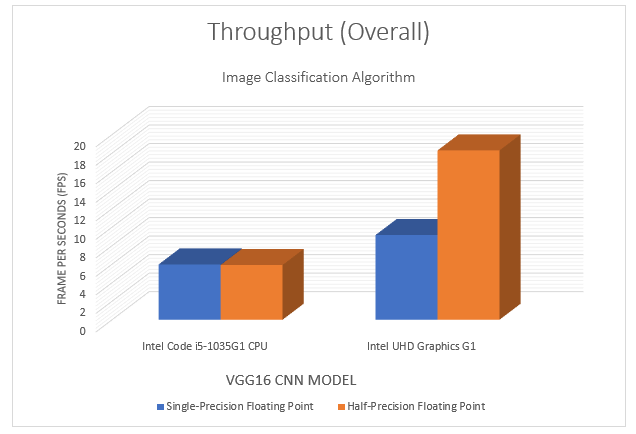

I am trying to understand the FP16 and FP32 results that I got. Shouldn't latency be lower and throughput higher for half-precision floating point (FP16) than for single-precision floating point (FP32)? The same goes for duration: the duration/execution time is higher for FP16 than for FP32. Shouldn't it be the opposite?

My understanding was that FP16 is less resource-intensive to compute than FP32, so FP16 should be faster, but the results show the opposite.
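A quick way to see the same effect outside OpenVINO is a small NumPy timing sketch. It is only an illustration under assumptions, not the benchmark that produced the results above: it assumes NumPy is installed, and on typical builds NumPy sends float32 matrix multiplication to an optimized BLAS library while float16 falls back to a generic loop that converts element by element, so FP16 usually comes out much slower on a CPU.

```python
import time

import numpy as np


def time_matmul(dtype, n=256, repeats=3):
    """Return the best wall-clock time (seconds) for an n x n matmul in `dtype`."""
    rng = np.random.default_rng(0)
    a = rng.standard_normal((n, n)).astype(dtype)
    b = rng.standard_normal((n, n)).astype(dtype)
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        _ = a @ b  # float32: BLAS on typical builds; float16: generic non-BLAS loop
        best = min(best, time.perf_counter() - start)
    return best


if __name__ == "__main__":
    for dt in (np.float32, np.float16):
        print(f"{np.dtype(dt).name}: {time_matmul(dt) * 1e3:.2f} ms")
```

Absolute numbers depend on the machine and the NumPy build, so only the relative gap is meaningful.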


After reading some search results on Google, I understand that Intel CPUs don't have a native FP16 type and call a function to do FP16 calculations. Is this correct?
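The behaviour described in that question can be sketched in NumPy. As far as I know, Intel client CPUs of this generation offer the F16C conversion instructions (VCVTPH2PS / VCVTPS2PH) but no native FP16 arithmetic (that arrived later with AVX512-FP16), so FP16 math is done by widening to FP32, computing, and narrowing back. The sketch assumes NumPy; `emulated_fp16_mul` is a hypothetical helper, not an OpenVINO or Intel API, and it simply mirrors that widen/compute/narrow pattern in software.

```python
import numpy as np


def emulated_fp16_mul(x, y):
    """Hypothetical helper: multiply FP16 values the way a CPU without FP16 ALUs
    would, by widening to FP32, multiplying, and rounding back to FP16."""
    x32 = np.asarray(x, dtype=np.float16).astype(np.float32)  # widen (cf. VCVTPH2PS)
    y32 = np.asarray(y, dtype=np.float16).astype(np.float32)
    return (x32 * y32).astype(np.float16)  # narrow back (cf. VCVTPS2PH)


rng = np.random.default_rng(1)
a = rng.standard_normal(1000).astype(np.float16)
b = rng.standard_normal(1000).astype(np.float16)

# The FP32 product of two FP16 values is exact (11-bit significands fit in 24 bits),
# so a single rounding back to FP16 reproduces NumPy's own float16 product bit for bit.
print(np.array_equal(emulated_fp16_mul(a, b), a * b))
```

The extra convert steps are pure overhead on such hardware, which is one reason FP16 need not be faster than FP32 on a CPU.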


Hi,

Since you are mentioning precisions and latency/throughput, I assume you are trying to assess your model's performance.

Indeed, FP32 is more accurate than lower precisions such as FP16 or INT8. However, performance also depends on the hardware: if a device prefers FP32 over FP16, running FP32 on it may result in faster inference than FP16.
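To make the accuracy part concrete, NumPy's `np.finfo` reports the machine epsilon and approximate decimal precision of each format, and a simple running sum shows how quickly FP16 rounding error builds up. This is a minimal sketch assuming NumPy; it says nothing about speed, only about precision.

```python
import numpy as np

for dt in (np.float16, np.float32):
    info = np.finfo(dt)
    # eps: gap between 1.0 and the next representable value; precision: ~decimal digits
    print(f"{info.dtype}: eps={info.eps}, ~{info.precision} decimal digits, max={info.max}")

# Add 0.01 ten thousand times (exact answer ~100) in each format.
total16 = np.float16(0)
total32 = np.float32(0)
for _ in range(10_000):
    total16 += np.float16(0.01)  # stays float16; stalls once 0.01 is below half the spacing
    total32 += np.float32(0.01)
print(total16, total32)
```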

You'll need to evaluate the hardware and use the precision that it prefers. You may refer to this supported formats section.

This Benchmark documentation might help to explain further.

Sincerely,

Iffa


How do I find out whether a particular CPU (Intel I5-10351G1 in this case) prefers FP32 over FP16? Is there a spec sheet, or do I look in the instruction set manual for this CPU? I tried Google but couldn't find anything.
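One practical check is to look at which instruction set extensions the CPU reports. On Linux these appear as flags in `/proc/cpuinfo`; on Windows, Intel's product specification pages list "Instruction Set Extensions". The sketch below assumes Linux, and the flag-to-meaning table is a simplified summary chosen for illustration: `f16c` alone means FP16 can only be converted, while `avx512_fp16` indicates native FP16 arithmetic.

```python
from pathlib import Path

# Linux /proc/cpuinfo flag names relevant to reduced-precision support (simplified summary)
RELEVANT_FLAGS = {
    "f16c": "FP16 <-> FP32 conversion instructions only",
    "avx2": "256-bit SIMD (FP32 vector math)",
    "avx512f": "512-bit SIMD foundation",
    "avx512_bf16": "BF16 dot-product instructions",
    "avx512_fp16": "native FP16 arithmetic",
}


def parse_flags(cpuinfo_text):
    """Return the set of CPU flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            return set(line.split(":", 1)[1].split())
    return set()


def summarize(flags):
    """Map each relevant flag to True/False depending on whether the CPU reports it."""
    return {name: name in flags for name in RELEVANT_FLAGS}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():  # Linux only
        for name, present in summarize(parse_flags(cpuinfo.read_text())).items():
            print(f"{name:12} {'yes' if present else 'no'}  {RELEVANT_FLAGS[name]}")
```

Having an extension does not by itself guarantee that a given OpenVINO version uses it, so the benchmark results remain the final word.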


Generally, according to the documentation that I shared previously, the CPU supports and prefers FP32 model format (precision).

OpenVINO CPU Plugin supports (latest):

- Intel Xeon with Intel® Advanced Vector Extensions 2 (Intel® AVX2), Intel® Advanced Vector Extensions 512 (Intel® AVX-512), and AVX512_BF16

- Intel Core Processors with Intel AVX2

- Intel Atom Processors with Intel® Streaming SIMD Extensions (Intel® SSE)

Refer to: Supported Devices
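OpenVINO itself can also report what its CPU plugin supports. The sketch below assumes the `openvino` Python package is installed and uses `Core.get_property` with the `FULL_DEVICE_NAME` and `OPTIMIZATION_CAPABILITIES` properties; it returns `None` when the package is missing. A precision appearing in the capabilities list means the plugin accepts it, not necessarily that it runs faster than FP32 on that CPU.

```python
def cpu_capabilities():
    """Return the CPU plugin's name and OPTIMIZATION_CAPABILITIES as reported by
    OpenVINO, or None when the openvino package is not installed."""
    try:
        import openvino as ov
    except ImportError:
        return None
    if hasattr(ov, "Core"):  # newer releases expose Core at the package top level
        core = ov.Core()
    else:  # older 2022.x releases keep it under openvino.runtime
        from openvino.runtime import Core
        core = Core()
    return {
        "name": core.get_property("CPU", "FULL_DEVICE_NAME"),
        "capabilities": list(core.get_property("CPU", "OPTIMIZATION_CAPABILITIES")),
    }


if __name__ == "__main__":
    info = cpu_capabilities()
    if info is None:
        print("openvino is not installed")
    else:
        print(info["name"])
        print(info["capabilities"])
```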

Your CPU should work well with FP32.

Sincerely,

Iffa


Greetings,

Intel will no longer monitor this thread since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Sincerely,

Iffa
