Please help me with the difficulties I am experiencing trying to convert a YOLOv4-tiny model to IR (I downloaded the three files classes.txt, yolov4-tiny.cfg and yolov4-tiny.weights somewhere from the Internet some time ago and the model works fine).

I am following this page (https://docs.openvino.ai/latest/workbench_docs_Workbench_DG_Tutorial_Import_YOLO.html). Below are the points I have difficulty understanding (I colored my questions red so you can find them easily):

Opening the Github (in the browser) for Yolov4-tiny (https://github.com/openvinotoolkit/open_model_zoo/tree/master/models/public/yolo-v4-tiny-tf), I opened the model.yml file and found these converter parameters:

model_optimizer_args:
  - --input_shape=[1,416,416,3]
  - --input=image_input
  - --scale_values=image_input[255]
  - --reverse_input_channels
  - --input_model=$conv_dir/yolo-v4-tiny.pb

I noticed that the arguments differ from those for YOLOv4: YOLOv4 has an argument that reads --saved_model_dir=$conv_dir/yolo-v4.savedmodel, while YOLOv4-tiny has an argument that reads --input_model=$conv_dir/yolo-v4-tiny.pb. Even so, I cannot understand what the YOLOv4 page means when it says, "Here you can see that the required format for the YOLOv4 model is SavedModel". What does it mean by "SavedModel"? Does it mean the converted filename has to end with the extension ".savedmodel"?

Opening the Github (in the browser) for Yolov4-tiny, I opened the pre-convert.py file and found the parameters required to use the converter:

subprocess.run([sys.executable, '--',
    str(args.input_dir / 'keras-YOLOv3-model-set/tools/model_converter/convert.py'),
    str(args.input_dir / 'keras-YOLOv3-model-set/cfg/yolov4-tiny.cfg'),
    str(args.input_dir / 'yolov4-tiny.weights'),
    str(args.output_dir / 'yolo-v4-tiny.h5'),
    '--yolo4_reorder',
], check=True)

I noticed that the script reads the "yolov4-tiny.weights" file from input_dir and writes "yolo-v4-tiny.h5" to output_dir. Shouldn't the output file extension be ".pb"? I ask because, for YOLOv4, both the model.yml conv_dir entry and the pre-convert.py output_dir entry use the same filename with the same extension, ".savedmodel".

I ran the git clone to download the Darknet-to-TensorFlow converter with no issues. I prepared and activated the virtual environment with no issues as well. I saw some warnings (or errors) the first time I installed ./keras-YOLOv3-model-set/requirements.txt, but when I ran the installation a second time I saw no warnings or errors.

Following the YOLOv4 page again, where I have to organize the folders and files, I understood I had to put the "yolov4-tiny.weights" file (in my case) in the root of the keras-YOLOv3-model-set folder and the "yolov4-tiny.cfg" file in the "cfg" subfolder. However, I could not understand why is it saying there has to be a file named "saved_model" (on the root of the keras-YOLOv3-model-set folder)? Where is this file? And in my case, what should I put instead of "saved_model"?

Finally, should the command to run the converter for my yolov4-tiny model read as follows?

python keras-YOLOv3-model-set/tools/model_converter/convert.py keras-YOLOv3-model-set/cfg/yolov4-tiny.cfg yolov4-tiny.weights yolo-v4-tiny.pb --yolo4_reorder

If the above script is wrong, can you please write to me how it should read?

Thank you in advance; I will be waiting for your reply.

Hello RGVGreatCoder,

Thank you for your question.

A SavedModel consists of a special directory with a .pb file and several subfolders: variables, assets, and assets.extra. For more information about the SavedModel directory, refer to the README file in the TensorFlow repository.
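To make the distinction concrete, here is a minimal sketch (not part of Model Optimizer) that inspects a path and reports which `mo` option would fit it. It assumes that a SavedModel directory contains a file named saved_model.pb, as in the layout described above, and that any other .pb file is a frozen graph; the function name `mo_input_option` is hypothetical.

```python
from pathlib import Path


def mo_input_option(path):
    """Return the mo option that matches a TensorFlow model path.

    Hypothetical helper for illustration; it only looks at the file system.
    """
    p = Path(path)
    # A SavedModel is a directory holding saved_model.pb (plus variables/, assets/).
    if p.is_dir() and (p / "saved_model.pb").is_file():
        return ["--saved_model_dir", str(p)]
    # A file named saved_model.pb belongs to a SavedModel directory:
    # pass its parent directory, not the file itself.
    if p.is_file() and p.name == "saved_model.pb":
        return ["--saved_model_dir", str(p.parent)]
    # Assumption: any other .pb file is a frozen graph.
    if p.is_file() and p.suffix == ".pb":
        return ["--input_model", str(p)]
    raise ValueError(f"{p} does not look like a SavedModel directory or a .pb file")
```

In other words, ".savedmodel" is not a required file extension; what matters is whether the converter produced a directory (SavedModel) or a single frozen .pb file.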

All YOLO models are originally implemented in the DarkNet framework and consist of two files:

- .cfg file with model configurations

- .weights file with model weights

In the pre-convert.py file you mentioned, the model is converted from .weights (the native YOLO format) to .h5 (Keras), and then from .h5 to .pb. You can refer to this code snippet from pre-convert.py:

subprocess.run([sys.executable, '--',
    str(args.input_dir / 'keras-YOLOv3-model-set/tools/model_converter/keras_to_tensorflow.py'),
    '--input_model={}'.format(args.output_dir / 'yolo-v4-tiny.h5'),
    '--output_model={}'.format(args.output_dir / 'yolo-v4-tiny.pb'),
], check=True)
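The two-stage chain (.weights to .h5, then .h5 to .pb) can be sketched as a pair of command lists. This is only an illustration assembled from the script names and options quoted in this thread; the function name and its directory arguments are hypothetical, and nothing is executed here.

```python
import sys
from pathlib import Path


def darknet_to_pb_commands(repo_dir, cfg, weights, out_dir):
    """Build the two conversion commands: Darknet -> Keras (.h5) -> TensorFlow (.pb).

    Hypothetical helper; repo_dir is a local clone of keras-YOLOv3-model-set.
    """
    tools = Path(repo_dir) / "tools" / "model_converter"
    h5 = Path(out_dir) / "yolo-v4-tiny.h5"
    pb = Path(out_dir) / "yolo-v4-tiny.pb"
    # Stage 1: Darknet .cfg/.weights to a Keras .h5 file.
    to_keras = [sys.executable, str(tools / "convert.py"),
                str(cfg), str(weights), str(h5), "--yolo4_reorder"]
    # Stage 2: Keras .h5 to a TensorFlow .pb file.
    to_pb = [sys.executable, str(tools / "keras_to_tensorflow.py"),
             f"--input_model={h5}", f"--output_model={pb}"]
    return [to_keras, to_pb]
```

Each list could then be passed to `subprocess.run(cmd, check=True)`, as pre-convert.py does. This explains why the first stage writes a .h5 file: the .pb file only appears after the second stage.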

This method applies if you are using the YOLO models from the Intel Open Model Zoo (OMZ) public models. These are the commands used to convert yolo-v4-tiny-tf from the OMZ public models to IR format:

- python3 downloader.py --name yolo-v4-tiny-tf

- python3 converter.py --name yolo-v4-tiny-tf

If you want to convert your custom YOLOv4 model to IR format, I suggest you refer to Converting YOLO Models to the Intermediate Representation (IR).

Sincerely,

Zulkifli


Oh my. Please excuse me if I wasn't clear. I meant to say right at the beginning of my message, I needed to convert to IR, a custom Yolov4-tiny model I own, downloaded from a site I cannot remember, which is not from the Open Model Zoo (OMZ). At the end of your response, it seems you are telling me that the page I was following does not apply to my need; therefore, your clarifications, although appreciated, in a way are pointless, since these only apply to Yolov4 models downloaded from OMZ. Am I correct?

It seems I have to follow another page instead to convert my custom Yolov4-tiny model. The page you mentioned is this one: (https://docs.openvino.ai/2021.4/openvino_docs_MO_DG_prepare_model_convert_model_tf_specific_Convert_YOLO_From_Tensorflow.html#convert-yolov4-model-to-ir). However, this page does not apply to me either, because it asks me to download and work on a yolov4.weights model from an existing hub. It starts off by saying, "This section explains how to convert the YOLOv4 Keras* model from the https://github.com/Ma-Dan/keras-yolo4" [github]. This page does not say it can work for a custom Yolov4 model.

Can you please provide me a step-by-step instruction on how to convert my own custom Yolov4-tiny model to IR?


I still have not received an answer to my last reply. I would greatly appreciate an answer.

Also, I should let you know that I installed the OpenVINO toolkit for Developer using PIP. Is there a command to preconvert a custom YoloV4-tiny model before running the omz_converter?

This issue is still not resolved. Please help.

Thank you in advance; I will be waiting for your reply ...


Hello RGVGreatCoder,

Sorry for the delayed response.

You can refer to Converting YOLO* Models to the Intermediate Representation (IR) to convert your custom trained YOLOv4 model to IR.

Sincerely,

Zulkifli


Okay. Almost there!

So I ran the git clone, then ran the convert.py and, it generated a file named "saved_model.pb". I think I got it right up to this point.

The page you shared, suggests running the PIP model optimizer "mo" script following this format:

mo --saved_model_dir yolov4 --output_dir models/IRs --input_shape [1,608,608,3] --model_name yolov4

So I provided my argument values (giving my best interpretation of the format):

mo --C:\openvino-models yolov4 --C:\openvino-models models/IRs --input_shape [1,608,608,3] --model_name yolov4

When I ran it, it threw the following error:

usage: main.py [options]

main.py: error: unrecognized arguments: --C:\openvino-models yolov4 --C:\openvino-models models/IRs

To learn more about the PIP model optimizer "mo", I found this page in your site: https://docs.openvino.ai/latest/openvino_docs_MO_DG_prepare_model_convert_model_Converting_Model.html

So I rewrote the "mo" script as follows:

mo -m C:\openvino-models\saved_model.pb -o C:\openvino-models\IR --input_shape [1,608,608,3] -n yolov4

And when I ran it, it displayed the following content that includes errors:

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: C:\openvino-models\saved_model.pb

- Path for generated IR: C:\openvino-models\IR

- IR output name: yolov4

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: [1,608,608,3]

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: None

- Reverse input channels: False

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: None

- Use the config file: None

- Inference Engine found in: c:\users\manue\appdata\local\programs\python\python38\lib\site-packages\openvino

Inference Engine version: 2021.4.2-3976-0943ed67223-refs/pull/539/head

Model Optimizer version: 2021.4.2-3976-0943ed67223-refs/pull/539/head

2022-02-11 10:06:25.853710: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'cudart64_110.dll'; dlerror: cudart64_110.dll not found

2022-02-11 10:06:25.853884: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

c:\users\manue\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\autograph\impl\api.py:22: DeprecationWarning: the imp module is deprecated in favour of importlib; see the module's documentation for alternative uses

import imp

[ FRAMEWORK ERROR ] Cannot load input model: TensorFlow cannot read the model file: "C:\openvino-models\saved_model.pb" is incorrect TensorFlow model file.

The file should contain one of the following TensorFlow graphs:

1. frozen graph in text or binary format

2. inference graph for freezing with checkpoint (--input_checkpoint) in text or binary format

3. meta graph

Make sure that --input_model_is_text is provided for a model in text format. By default, a model is interpreted in binary format. Framework error details: Error parsing message with type 'tensorflow.GraphDef'. For more information please refer to Model Optimizer FAQ, question #43. (https://docs.openvinotoolkit.org/latest/openvino_docs_MO_DG_prepare_model_Model_Optimizer_FAQ.html?question=43#question-43)

I noticed an error on the common parameter -Log level and another error where it says something about the [ FRAMEWORK ERROR ].

Questions:

- Why is my script getting those errors and, can you please help me fix them?

- Can you please write for me, How should the "mo" script exactly read with the arguments I provided above?

Again, thank you for all your help and will be waiting for your reply,

P.S. I am now developing on a Windows 11 machine with the following features:

-Windows 11 Home 21H2

-Python 3.8.10 (tensorflow 2.4.4, numpy 1.19.5, opencv 4.5.5)

-cmake 3.22.2

-Installed OpenVINO through PIP and its version is OpenVINO 2021.4.2


Hello, I tried today the following:

mo -m C:\openvino-models\saved_model.pb -o C:\openvino-models\IR --input_shape [1,608,608,3] --data_type=FP16 -n yolov4-tiny

And got the following result:

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: C:\Users\openvino-models\saved_model.pb

- Path for generated IR: C:\Users\openvino-models\IR

- IR output name: yolov4-tiny

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: [1,608,608,3]

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP16

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: None

- Reverse input channels: False

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: None

- Use the config file: None

- Inference Engine found in: c:\users\manue\appdata\local\programs\python\python38\lib\site-packages\openvino

Inference Engine version: 2021.4.2-3976-0943ed67223-refs/pull/539/head

Model Optimizer version: 2021.4.2-3976-0943ed67223-refs/pull/539/head

2022-02-14 13:27:15.649281: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'cudart64_110.dll'; dlerror: cudart64_110.dll not found

2022-02-14 13:27:15.649447: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

c:\users\manue\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\autograph\impl\api.py:22: DeprecationWarning: the imp module is deprecated in favour of importlib; see the module's documentation for alternative uses

import imp

[ FRAMEWORK ERROR ] Cannot load input model: TensorFlow cannot read the model file: "C:\openvino-models\saved_model.pb" is incorrect TensorFlow model file.

The file should contain one of the following TensorFlow graphs:

1. frozen graph in text or binary format

2. inference graph for freezing with checkpoint (--input_checkpoint) in text or binary format

3. meta graph

Make sure that --input_model_is_text is provided for a model in text format. By default, a model is interpreted in binary format. Framework error details: Error parsing message with type 'tensorflow.GraphDef'.

For more information please refer to Model Optimizer FAQ, question #43. (https://docs.openvinotoolkit.org/latest/openvino_docs_MO_DG_prepare_model_Model_Optimizer_FAQ.html?question=43#question-43)

I am attaching the three Yolov4-tiny model files in a compressed zip file, that I am trying to convert (I downloaded these files a few months ago from another github location and not from the zoo model).

Please let me know how I can convert these files. I will appreciate very much if you can help me.

-----------

Software Developer:

-C#, .Net Core, learning C++ and Python

Developing Workstation:

-Hardware:

CPU Intel(R) Core(TM) i5-9300H CPU @ 2.40GHz

GPU: 3071MB NVIDIA GeForce GTX 1050

RAM: 16GB

-OS: Windows 11 Home 21H2 64-bit

-Python 3.8.10 (tensorflow 2.4.4, numpy 1.19.5, opencv 4.5.5)

-cmake 3.22.2

-OpenVINO 2021.4.2 (installed using PIP)


Hello RGVGreatCoder,

The yolov4-tiny model was converted to SavedModel format. To convert it to OpenVINO IR format, pass the SavedModel directory (not the saved_model.pb file) to the --saved_model_dir parameter.

Modify your command as follows:

mo --saved_model_dir C:\openvino-models -o C:\openvino-models\IR --input_shape [1,608,608,3] --data_type=FP16 -n yolov4-tiny

[ SUCCESS ] Generated IR version 10 model.

[ SUCCESS ] XML file: C:\Users\yolo-v4-tiny\yolov4-tiny.xml

[ SUCCESS ] BIN file: C:\Users\yolo-v4-tiny\yolov4-tiny.bin

[ SUCCESS ] Total execution time: 26.04 seconds.
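As a rough illustration of how the command above is put together, the sketch below assembles the same `mo` arguments as a list. It uses only options that appear in this thread (`--saved_model_dir`, `--output_dir`, `--input_shape`, `--data_type`, `--model_name`); the function name `build_mo_command` and its parameters are hypothetical.

```python
def build_mo_command(saved_model_dir, output_dir, input_shape, model_name, data_type="FP16"):
    """Assemble a Model Optimizer command line for a TensorFlow SavedModel.

    Hypothetical helper for illustration; it does not run mo.
    """
    # mo expects the shape as a bracketed, comma-separated list without spaces.
    shape = "[" + ",".join(str(int(d)) for d in input_shape) + "]"
    return [
        "mo",
        "--saved_model_dir", str(saved_model_dir),  # the directory, not saved_model.pb
        "--output_dir", str(output_dir),
        "--input_shape", shape,
        "--data_type", data_type,
        "--model_name", model_name,
    ]
```

Every path follows a named option. In the earlier failed attempt, the option names themselves had been replaced by paths (`--C:\openvino-models`), which is why `mo` reported unrecognized arguments.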

Sincerely,

Zulkifli


Thank you for your immediate response! ...

Your script worked (after adding a second hyphen "-" before "-saved_model_dir" argument).

This time, I created a 244MB YoloV4 weights model (not a tiny version) from scratch (in Google Colab) and then ran the "convert.py" and "mo" and it generated the IR model with these files: yolov4.xml, yolov4.bin (of 121MB) and yolov4.mapping. Awesome.

Trouble is, it generated an IR model that crashes my Python Program when it uses it.

The generated IR model has the following details (I used Python to get and print these values):

- Inputs:

name: image_input

shape: [1, 3, 608, 608]

precision: FP32

- Outputs:

name: StatefulPartitionedCall/model/conv2d_101/BiasAdd/Add

shape: [1, 21, 38, 38]

layout: NCHW

precision: FP32

name: StatefulPartitionedCall/model/conv2d_109/BiasAdd/Add

shape: [1, 21, 19, 19]

layout: NCHW

precision: FP32

name: StatefulPartitionedCall/model/conv2d_93/BiasAdd/Add

shape: [1, 21, 76, 76]

layout: NCHW

precision: FP32

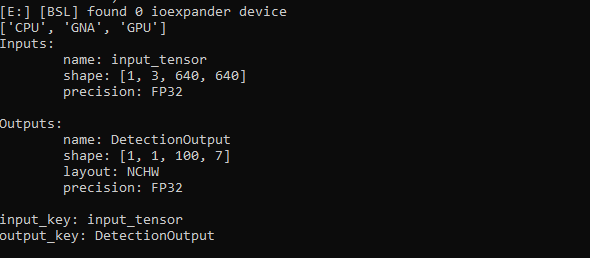

However, my Python program (running in my laptop) works well when using an IR model (specifically the "ssdlite_mobilenet_v2.xml" I downloaded from your site) which has the following details:

- Inputs:

name: image_tensor

shape: [1, 3, 300, 300]

precision: FP32

- Outputs:

name: DetectionOutput

shape: [1, 1, 100, 7]

layout: NCHW

precision: FP32

Following is a chunk of my Python program that when using my IR model, it crashes in line 27 with an error message that reads "too many values to unpack (expected 7)":

import cv2 as cv
import numpy as np
from openvino.inference_engine import IECore

...

device_name = 'GPU' # try this on WSL Ubuntu running in my laptop
ie = IECore()
net = ie.read_network(
    model=openvino_xml,
    weights=openvino_weights,
)
exec_net = ie.load_network(net, device_name)
input_key = list(exec_net.input_info)[0]
height, width = exec_net.input_info[input_key].tensor_desc.dims[2:]

...

def process_results(frame, results, thresh=0.6):
    # size of the original frame
    h, w = frame.shape[:2]
    # results is a tensor [1, 1, 100, 7]
    results = results[output_key][0][0]
    boxes = []
    labels = []
    scores = []
    for _, label, score, xmin, ymin, xmax, ymax in results: # <== CRASHES HERE !!!
        # create a box with pixels coordinates from the box with normalized coordinates [0,1]
        ...

while True:
    # resize image and change dims to fit neural network input
    resized_image = cv.resize(src=imageFrame, dsize=(width, height), interpolation=cv.INTER_AREA)
    # create batch of images (size = 1)
    input_img = resized_image.transpose(2, 0, 1)[np.newaxis, ...]
    result = exec_net.infer(inputs={input_key: input_img})
    # get poses from network results
    boxes = process_results(frame=imageFrame, results=result)
    ...

Questions:

- How can I generate an IR model with the details I need for my Python program to work?

- If I want to use my IR model as it is, what code should I add (or change) in my Python program?

Thank you again and will be waiting for your reply,


Hello RGVGreatCoder,

Please share with us the full Python code, YOLOv4 model, and any additional detail that can help us to investigate this issue further.

Sincerely,

Zulkifli


I created another model from scratch again, but this time a YoloV4-tiny model (I am attaching the zipped file). I then ran the converter and the "mo" script to generate the IR model (attached zipped file); however, when I tested the IR model it still crashed in line 87 of my program below with same error message "too many values to unpack (expected 7)".

These are the steps I ran which successfully converted the Yolov4-tiny model to IR:

python keras-YOLOv3-model-set/tools/model_converter/convert.py <directory_path>\yolov4-tiny-custom.cfg <directory_path>\yolov4-tiny-custom_last.weights <directory_path_pb_model>

mo --saved_model_dir <directory_path_pb_model> -o <directory_path_pb_model>\IR --input_shape [1,608,608,3] --data_type=FP16 -n yolov4-tiny

The "printOpenVino_InputOutputItems()" method (in line 60) printed the following values of the IR model that currently crashes my program in line 87:

Inputs:

name: image_input

shape: [1, 3, 608, 608]

precision: FP32

Outputs:

name: StatefulPartitionedCall/model/conv2d_17/BiasAdd/Add

shape: [1, 21, 19, 19]

layout: NCHW

precision: FP32

name: StatefulPartitionedCall/model/conv2d_20/BiasAdd/Add

shape: [1, 21, 38, 38]

layout: NCHW

precision: FP32

input_key: image_input

output_key: StatefulPartitionedCall/model/conv2d_17/BiasAdd/Add

As I mentioned on my previous post, the Input and Output values that my program works well with, are the following:

Inputs:

name: image_tensor

shape: [1, 3, 300, 300]

precision: FP32

Outputs:

name: DetectionOutput

shape: [1, 1, 100, 7]

layout: NCHW

precision: FP32

Finally, below is my Python program:

#############
# This program has code from the following pages:
# https://docs.openvino.ai/latest/notebooks/004-hello-detection-with-output.html#
# https://docs.openvino.ai/latest/notebooks/002-openvino-api-with-output.html
# https://docs.openvino.ai/latest/notebooks/401-object-detection-with-output.html
# To pre-convert and convert the YoloV4 files to OpenVINO IR (xml and bin) format, follow this link:
# https://github.com/openvinotoolkit/openvino/blob/0948a54f53d832e2517911114829e2b8785845b5/docs/MO_DG/prepare_model/convert_model/tf_specific/Convert_YOLO_From_Tensorflow.md
# The github repository mentioned on the above link is already cloned in: C:\Users\manue\source\repos\AI-Machine-Learning\keras-YOLOv3-model-set
###########

import collections
import cv2 as cv
import numpy as np
import time
from openvino.inference_engine import IECore

# PATHS
save_detection_path = 'detections/'
video_path = 'videos/'
openvino_model_path = 'openvino-models/chairs/'
picture_captured_path = 'picture-captured/chairs/'

# MODELS
openvino_xml = openvino_model_path + 'yolov4-tiny.xml'
openvino_weights = openvino_model_path + 'yolov4-tiny.bin'

#device_name = 'MYRIAD' # try this on the Rpi4
#device_name = 'CPU' # Use CPU of my laptop
device_name = 'GPU' # Use GPU of my laptop

ie = IECore()
net = ie.read_network(
    model=openvino_xml,
    weights=openvino_weights,
)
exec_net = ie.load_network(net, device_name)
input_layer_ir = next(iter(exec_net.input_info))
output_layer_ir = next(iter(exec_net.outputs))
print(ie.available_devices)

# get input and output names of nodes
input_key = list(exec_net.input_info)[0]
output_key = list(exec_net.outputs.keys())[0]

# get input size
height, width = exec_net.input_info[input_key].tensor_desc.dims[2:]

classes = ['office-chair', 'dining-chair']

# colors for above classes (Rainbow Color Map)
colors = cv.applyColorMap(
    src=np.arange(0, 255, 255 / len(classes), dtype=np.float32).astype(np.uint8),
    colormap=cv.COLORMAP_RAINBOW
).squeeze()

def printOpenVino_InputOutputItems():
    print("Inputs:")
    for name, info in exec_net.input_info.items():
        print("\tname: {}".format(name))
        print("\tshape: {}".format(info.tensor_desc.dims))
        # print("\tlayout: {}".format(info.layout))
        print("\tprecision: {}\n".format(info.precision))
    print("Outputs:")
    for name, info in exec_net.outputs.items():
        print("\tname: {}".format(name))
        print("\tshape: {}".format(info.shape))
        print("\tlayout: {}".format(info.layout))
        print("\tprecision: {}\n".format(info.precision))
    print("input_key: " + input_key)
    print("output_key: " + output_key)

def process_results(frame, results, thresh=0.6):
    # size of the original frame
    h, w = frame.shape[:2]
    # results is a tensor [1, 1, 100, 7]
    results = results[output_key][0][0]
    boxes = []
    labels = []
    scores = []
    for _, label, score, xmin, ymin, xmax, ymax in results:
        # create a box with pixels coordinates from the box with normalized coordinates [0,1]
        boxes.append(tuple(map(int, (xmin * w, ymin * h, xmax * w, ymax * h))))
        labels.append(int(label))
        scores.append(float(score))
    # apply non-maximum suppression to get rid of many overlapping entities
    # see https://paperswithcode.com/method/non-maximum-suppression
    # this algorithm returns indices of objects to keep
    indices = cv.dnn.NMSBoxes(bboxes=boxes, scores=scores, score_threshold=thresh, nms_threshold=0.6)
    # if there are no boxes
    if len(indices) == 0:
        return []
    # filter detected objects
    return [(labels[idx], scores[idx], boxes[idx]) for idx in indices.flatten()]

def draw_boxes(frame, boxes):
    for label, score, box in boxes:
        # choose color for the label
        color = tuple(map(int, colors[label]))
        # draw box
        cv.rectangle(img=frame, pt1=box[:2], pt2=box[2:], color=color, thickness=2)
        # draw label name inside the box
        cv.putText(img=frame, text=f"{classes[label]}", org=(box[0] + 10, box[1] + 30),
                   fontFace=cv.FONT_HERSHEY_COMPLEX, fontScale=frame.shape[1] / 1000, color=color,
                   thickness=1, lineType=cv.LINE_AA)
    return frame

def detectVehicle(media_source_type, video, picture, count_axles):
    video = video_path + video
    picture = picture_captured_path + picture
    frame_counter = 0
    if media_source_type == 'V':
        # cap = cv.VideoCapture(0) # 0 =capture video from first camera
        cap = cv.VideoCapture(video)
    processing_times = collections.deque()
    while ((media_source_type == 'V' and True) or (media_source_type == 'P' and frame_counter < 1)):
        if media_source_type == 'V':
            # reads frames from a video
            ret, imageFrame = cap.read()
        else:
            imageFrame = cv.imread(picture)
        if (type(imageFrame) == type(None) or (media_source_type == 'V' and ret == False)):
            break
        # resize image and change dims to fit neural network input
        resized_image = cv.resize(src=imageFrame, dsize=(width, height), interpolation=cv.INTER_AREA)
        # create batch of images (size = 1)
        input_img = resized_image.transpose(2, 0, 1)[np.newaxis, ...]
        frame_counter += 1
        # measure processing time
        start_time = time.time()
        # get results
        result = exec_net.infer(inputs={input_key: input_img})
        stop_time = time.time()
        # get poses from network results
        boxes = process_results(frame=imageFrame, results=result)
        # draw boxes on a frame
        imageFrame = draw_boxes(frame=imageFrame, boxes=boxes)
        processing_times.append(stop_time - start_time)
        # use processing times from last 200 frames
        if len(processing_times) > 200:
            processing_times.popleft()
        _, f_width = imageFrame.shape[:2]
        # mean processing time [ms]
        processing_time = np.mean(processing_times) * 1000
        fps = 1000 / processing_time
        cv.putText(img=imageFrame, text=f"Inference time: {processing_time:.1f}ms ({fps:.1f} FPS)", org=(20, 40),
                   fontFace=cv.FONT_HERSHEY_COMPLEX, fontScale=f_width / 1000,
                   color=(0, 0, 255), thickness=1, lineType=cv.LINE_AA)
        cv.imshow('detected image', imageFrame)
        if cv.waitKey(1) & 0xFF == ord('q'):
            break
    if media_source_type == 'V':
        cap.release()
    if media_source_type == 'P':
        cv.waitKey(0)

def main():
    printOpenVino_InputOutputItems()
    detectVehicle(media_source_type='P',video='', picture='1644965910719.JPEG', count_axles=True)
    #detectVehicle(media_source_type='V',video='chairs1.mp4',picture='', count_axles=False)

main()
cv.destroyAllWindows()

Questions:

- Did I use the right conversion value for "--input_shape" argument in the "mo" script, considering that the 32 pictures I used to create the Yolov4-tiny model were of 312 x 416?

- Do I need to set values to additional arguments in the "mo" script?

- Do I rather need to adjust something in my Python program to read and interpret correctly the Input/Output values of the new IR model?

- Am I missing something?

Thank you for all of your time and effort. I will be waiting for your reply.


Hello RGVGreatCoder,

Thank you for sharing the code. Please send the Google Drive link to this email:

zulkiflix.bin.abdul.halim@intel.com

Sincerely,

Zulkifli


Good news is, I created a YoloV4-tiny model (in a compressed zipped file) small enough to attach it on my previous post. Notice I edited my post last night. I also attached the IR (.xml and .bin) model (in a compressed zipped file) too.

I still sent you a Google Drive share invitation to a folder with those compressed zipped files anyway.

Let me know if you need anything else.

Thank you for all your support and will be waiting for your reply.


Hello RGVGreatCoder

The input shape passed to Model Optimizer must match the shape used for training. For YOLO models, the input width and height must each be a multiple of 32. A standard resolution to choose is 416x416.
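The arithmetic behind this rule can be sketched as follows. The sketch assumes that the two output layers of a YOLOv4-tiny network use strides of 32 and 16, which is consistent with the output shapes reported in this thread (19 and 38 for a 608 input); the function names are hypothetical.

```python
def round_up_to_multiple_of_32(size):
    """Smallest multiple of 32 that is greater than or equal to size."""
    return -(-int(size) // 32) * 32


def grid_sizes(input_size, strides=(32, 16)):
    """Grid width (and height) of each output layer for a square input.

    Assumption: strides 32 and 16, as the output shapes in this thread suggest.
    """
    if input_size % 32 != 0:
        raise ValueError("YOLO input resolution must be a multiple of 32")
    return [input_size // s for s in strides]
```

For example, `grid_sizes(608)` gives `[19, 38]` and `grid_sizes(416)` gives `[13, 26]`, while a 312-pixel side would have to be rounded up to 320 before it could be used as a network dimension.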

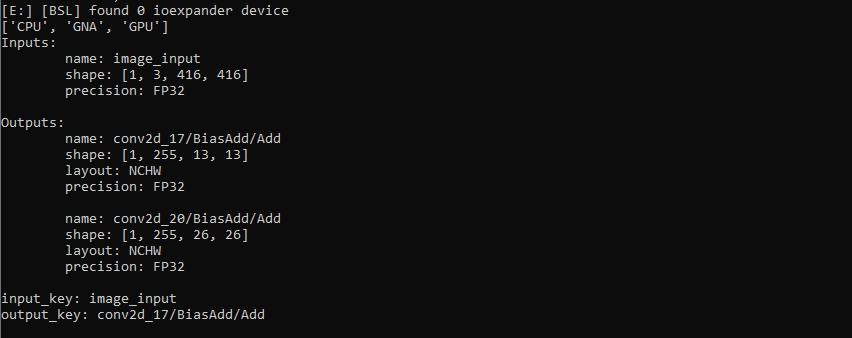

I tested your Python code with Intel OMZ model yolo-v4-tf and ssd_mobilenet_v1, I'm sharing with you the result:

yolo-v4-tf:

ssd_resnet50:

Sincerely,

Zulkifli


I ran the "mo" script with "--input_shape [1,416,416,3]" as you suggested and generated a new IR model (.xml/.bin/.mapping files are attached in the compressed yolov4-tiny-416.zip file). And when I ran my Python program it displayed the following values (similar to the ones you posted):

Inputs:

name: image_input

shape: [1, 3, 416, 416]

precision: FP32

Outputs:

name: StatefulPartitionedCall/model/conv2d_17/BiasAdd/Add

shape: [1, 21, 13, 13]

layout: NCHW

precision: FP32

name: StatefulPartitionedCall/model/conv2d_20/BiasAdd/Add

shape: [1, 21, 26, 26]

layout: NCHW

precision: FP32

input_key: image_input

output_key: StatefulPartitionedCall/model/conv2d_17/BiasAdd/Add

However, my program is still crashing in the same place with the same error, "too many values to unpack (expected 7)", in line 87, which reads:

for _, label, score, xmin, ymin, xmax, ymax in results:

As you probably realize by now, the goal of my program is to draw boxes on the detected objects before displaying them on the screen, but it still crashes in the same place with the new IR model.

As I wrote in my post above, my program is using code from this page on your site (https://docs.openvino.ai/latest/notebooks/401-object-detection-with-output.html)

Questions:

- Why is the new IR model still displaying two outputs when the "ssdlite_mobilenet_v2.xml" only displays one output?

- Can you please suggest me some code I should try in my program to make it work?

Thank you and will be waiting for your reply,


Hello RGVGreatCoder,

The two outputs are expected because yolo-v4-tiny-tf model has 2 output layers:

- conv2d_20/BiasAdd

- conv2d_17/BiasAdd

conv2d_20/BiasAdd: the array of detection summary info, shape 1, 26, 26, 255. The anchor values are 23,27, 37,58, 81,82.

conv2d_17/BiasAdd: the array of detection summary info, shape 1, 13, 13, 255. The anchor values are 81,82, 135,169, 344,319.

Please refer to Output for more info.
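To make the output description more concrete, here is a minimal NumPy sketch of how one raw YOLO output grid could be decoded into boxes. It is not the Open Model Zoo demo's implementation. It assumes an NCHW output whose channels are grouped per anchor as (x, y, w, h, objectness, class scores), that sigmoid has not yet been applied, and it leaves out the `scale_x_y` factor and non-maximum suppression. The 21 channels reported for the custom model would correspond to 3 anchors × (5 + 2 classes), if that model has two classes. The function name `decode_yolo_output` and the synthetic tensor are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def decode_yolo_output(output, anchors, input_size=416, threshold=0.5):
    """Turn one raw NCHW output into [xmin, ymin, xmax, ymax, score, class_id] rows.

    Coordinates are normalized to the 0..1 range.
    """
    _, channels, grid_h, grid_w = output.shape
    num_anchors = len(anchors)
    num_classes = channels // num_anchors - 5
    # [anchors * (5 + classes), H, W] -> [anchors, H, W, 5 + classes]
    pred = output[0].reshape(num_anchors, 5 + num_classes, grid_h, grid_w)
    pred = pred.transpose(0, 2, 3, 1)

    detections = []
    for a, (anchor_w, anchor_h) in enumerate(anchors):
        for row in range(grid_h):
            for col in range(grid_w):
                tx, ty, tw, th, obj = pred[a, row, col, :5]
                class_probs = sigmoid(pred[a, row, col, 5:])
                class_id = int(np.argmax(class_probs))
                score = float(sigmoid(obj) * class_probs[class_id])
                if score < threshold:
                    continue
                # Box center is an offset inside the grid cell; size scales the anchor.
                cx = (sigmoid(tx) + col) / grid_w
                cy = (sigmoid(ty) + row) / grid_h
                w = np.exp(tw) * anchor_w / input_size
                h = np.exp(th) * anchor_h / input_size
                detections.append(
                    [cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2, score, class_id]
                )
    return detections


# Synthetic 13x13 output with two classes: everything "off" except one cell.
output = np.full((1, 21, 13, 13), -10.0)
output[0, 7:11, 6, 6] = 0.0   # anchor 1: tx, ty, tw, th
output[0, 11, 6, 6] = 10.0    # anchor 1: objectness
output[0, 13, 6, 6] = 10.0    # anchor 1: class 1 score

anchors_13 = [(81, 82), (135, 169), (344, 319)]
boxes = decode_yolo_output(output, anchors_13)
print(boxes)
```

Each output layer would be decoded with its own anchor set, and the combined rows then filtered with non-maximum suppression before drawing.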

You can use Object Detection Python Demo to run with the yolo-v4-tiny model. Note that Open Model Zoo demos expect input with BGR channels order. If you trained your model to work with RGB order, you need to manually rearrange the default channels order in the demo application or reconvert your model using the Model Optimizer tool with the --reverse_input_channels argument specified.
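As a small illustration of what "rearranging the channel order" means on the application side, the sketch below reverses the last axis of an HWC image array. The two-pixel image is made up for the example; in a real program the array would come from the image loader.

```python
import numpy as np

# A tiny 1x2 "image" in HWC layout with BGR channel order (as OpenCV loads it).
bgr_image = np.array([[[255, 0, 0], [0, 128, 64]]], dtype=np.uint8)

# Reversing the last axis swaps B and R and leaves G in place.
rgb_image = bgr_image[:, :, ::-1]

print(rgb_image[0, 0].tolist())
print(rgb_image[0, 1].tolist())
```

Doing the swap once at conversion time with --reverse_input_channels avoids having to do it for every frame in the application.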

Sincerely,

Zulkifli

I am sorry to say this, but I beg to speak or chat with someone at your end to speed up this particular case. We have been going back and forth through this portal for a month already, when all I need is to have OpenVINO run a custom YOLOv4-tiny model (which I created using Darknet in Google Colab). I understand now that it needs to be pre-converted before it is converted to an IR model. Yet I still cannot find a clear and simple solution on your site for how to make use of the generated IR model. I am sorry. I am sorry. I am very frustrated; I have spent so many hours on this, and I may even lose my job for not being able to come up with a solution to this case. :'(

You now point me to this page: https://github.com/openvinotoolkit/open_model_zoo/tree/2021.4.2/demos/object_detection_demo/python. Nowhere on this page are there instructions on how to download the object_detection_demo.py program. Luckily, I found some instructions on this other page: https://github.com/openvinotoolkit/open_model_zoo, from which I downloaded the entire folder with all the demos, including object_detection_demo.py, which apparently has the code I need in my program to box and label a detected image before displaying it on the screen (this is all I need).

As I navigate to the demo folder: https://github.com/openvinotoolkit/open_model_zoo/tree/master/demos, I realize there are some instructions to follow before running the demo I need, as follows:

<INSTALL_DIR>/extras/scripts/download_opencv.sh

source <INSTALL_DIR>/setupvars.sh

Except, a month ago (when I had barely started playing with this toolkit), I installed OpenVINO through pip. So I don't have an "<INSTALL_DIR>" directory, and I cannot run the script lines above, including "source... setupvars.sh".

Then I find that this page has this note:

NOTE: If you plan to use Python* demos only, you can install the OpenVINO Python* package.

pip install openvino

Now, I had mentioned before that I installed OpenVINO using pip, but the developer version. So the instructions after this note no longer seem to apply to me. At this point, I am lost, guys. I really need some serious help.

Please. I need a faster response from you. I am sorry to even ask for this, but I have no other way to say it. I really do. How can we speed up our communication? I am so lost that I don't even know what to ask from here on. All you need to remember is this: I need to run a program that can draw a labeled box on an image before displaying it on the screen, using my custom YOLOv4-tiny model converted to IR.

Please, please, help. Let me know how we can do this. I feel like I am getting close, but I need more help from you. How can we interact much faster?

Sorry. Will be waiting for your reply.

Hello RGVGreatCoder,

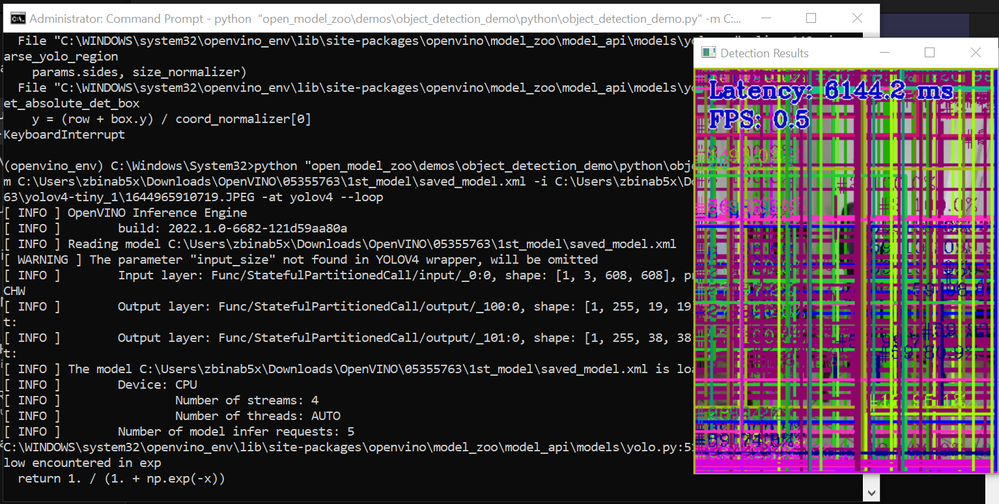

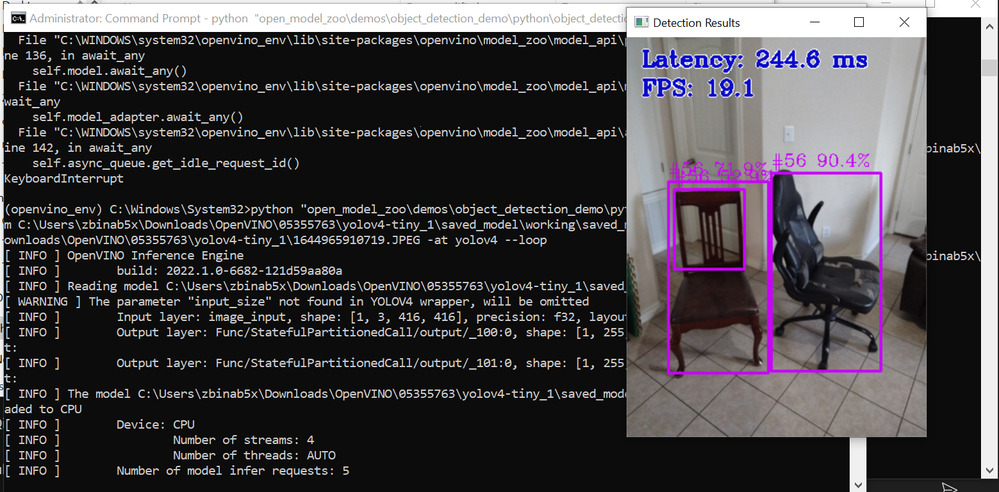

I managed to run your model with Object Detection Python Demo from Open Model Zoo (OMZ) demos.

Here is the comparison result between your models and Intel OMZ model:

Using your 1st yolo-v4-tiny:

Using your 2nd yolov4-tiny model:

Using Intel OMZ yolo-v4-tiny-tf model:

To use the OMZ demos here are the steps to follow:

1. Convert the yolov4-tiny model using this conversion command:

mo --saved_model_dir <directory_path_pb_model> -o <directory_path_pb_model>\IR --input_shape [1,416,416,3] --reverse_input_channels --data_type=FP16 -n yolov4-tiny

2. Clone the Open Model Zoo repository:

git clone https://github.com/openvinotoolkit/open_model_zoo.git

3. Configure the Python model API installation.

4. Once completed, go to the Object Detection Python demo:

cd open_model_zoo\demos\object_detection_demo\python

5. Run the demo using this command:

python object_detection_demo.py -m <model_directory>\yolov4-tiny.xml -i <input_directory>\1644965910719.JPEG -at yolov4 --loop

Sincerely

Zulkifli

Thank you for your prompt response.

Step 1, worked well and generated new .xml, .bin, .mapping files.

Step 2, worked well, generating a folder with all its contents as follows:

>git clone https://github.com/openvinotoolkit/open_model_zoo.git

Cloning into 'open_model_zoo'...

remote: Enumerating objects: 92030, done.

remote: Counting objects: 100% (71/71), done.

remote: Compressing objects: 100% (68/68), done.

remote: Total 92030 (delta 13), reused 16 (delta 3), pack-reused 91959

Receiving objects: 100% (92030/92030), 279.80 MiB | 6.61 MiB/s, done.

Resolving deltas: 100% (62565/62565), done.

Updating files: 100% (2586/2586), done.

Step 3.1, the pip installation went well as follows:

>pip install setuptools

Requirement already satisfied: setuptools in c:\users\manue\appdata\local\programs\python\python38\lib\site-packages (56.0.0)

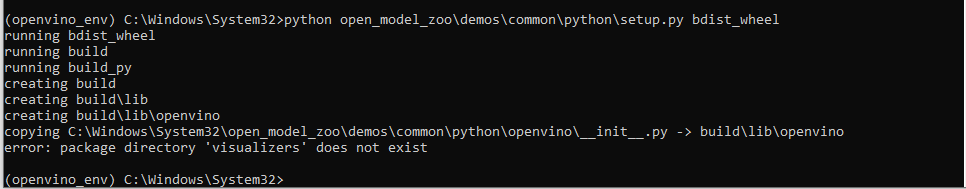

But Step 3.2, failed as follows:

>python open_model_zoo/demos/common/python/setup.py bdist_wheel

running bdist_wheel

running build

running build_py

copying C:\Users\manue\source\repos\AI-Machine-Learning\open_model_zoo\demos\common\python\openvino\__init__.py -> build\lib\openvino

error: package directory 'visualizers' does not exist

Interestingly enough, I found a folder named "visualizers" inside "open_model_zoo\demos\common\python\". Could the setup program be looking for that folder in some other location?

Please let me know how to fix this. Thank you for your patience and will be waiting for your reply,

Hello RGVGreatCoder,

I encountered the same error when using this command:

python open_model_zoo\demos\common\python\setup.py bdist_wheel

To avoid this error, please run this command:

cd open_model_zoo\demos\common\python

python setup.py bdist_wheel
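A likely explanation for the difference, assuming setup.py refers to its package directories by relative path: those paths are resolved against the current working directory, not against the location of setup.py. The sketch below illustrates the effect with a temporary directory tree that mimics the repository layout (the tree is hypothetical and created only for the demonstration).

```python
import os
import tempfile

# Hypothetical layout mimicking the repository: <root>/demos/common/python/visualizers
with tempfile.TemporaryDirectory() as repo_root:
    setup_dir = os.path.join(repo_root, "demos", "common", "python")
    os.makedirs(os.path.join(setup_dir, "visualizers"))

    original_cwd = os.getcwd()
    try:
        # Running from the top-level folder: "visualizers" is looked up there.
        os.chdir(repo_root)
        found_from_root = os.path.isdir("visualizers")

        # Running from the folder that contains setup.py: the lookup succeeds.
        os.chdir(setup_dir)
        found_from_setup_dir = os.path.isdir("visualizers")
    finally:
        # Restore the working directory before the temporary tree is removed.
        os.chdir(original_cwd)

print(found_from_root, found_from_setup_dir)
```

This is why changing into open_model_zoo\demos\common\python first makes the "package directory 'visualizers' does not exist" error go away.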

Sincerely,

Zulkifli

Thank you for your prompt response.

I successfully built the wheel with bdist_wheel as follows (pasting only the first and last lines to save space):

cd open_model_zoo\demos\common\python

python setup.py bdist_wheel

running bdist_wheel

running build

running build_py

...

adding 'openmodelzoo_modelapi-0.0.0.dist-info/WHEEL'

adding 'openmodelzoo_modelapi-0.0.0.dist-info/top_level.txt'

adding 'openmodelzoo_modelapi-0.0.0.dist-info/RECORD'

removing build\bdist.win-amd64\wheel

I installed "openmodelzoo_modelapi-0.0.0-py3-none-any.whl" as follows:

>cd dist

>python -m pip install openmodelzoo_modelapi-0.0.0-py3-none-any.whl --force-reinstall

Processing c:\users\manue\source\repos\ai-machine-learning\open_model_zoo\demos\common\python\dist\openmodelzoo_modelapi-0.0.0-py3-none-any.whl

Collecting scipy~=1.5.4

Using cached scipy-1.5.4-cp38-cp38-win_amd64.whl (31.4 MB)

Collecting opencv-python==4.5.*

Using cached opencv_python-4.5.5.62-cp36-abi3-win_amd64.whl (35.4 MB)

Collecting numpy<=1.21,>=1.16.6

Downloading numpy-1.21.0-cp38-cp38-win_amd64.whl (14.0 MB)

---------------------------------------- 14.0/14.0 MB 13.4 MB/s eta 0:00:00

Installing collected packages: numpy, scipy, opencv-python, openmodelzoo-modelapi

Attempting uninstall: numpy

Found existing installation: numpy 1.19.5

Uninstalling numpy-1.19.5:

Successfully uninstalled numpy-1.19.5

Attempting uninstall: scipy

Found existing installation: scipy 1.5.4

Uninstalling scipy-1.5.4:

Successfully uninstalled scipy-1.5.4

Attempting uninstall: opencv-python

Found existing installation: opencv-python 4.5.5.62

Uninstalling opencv-python-4.5.5.62:

Successfully uninstalled opencv-python-4.5.5.62

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

tensorflow 2.4.4 requires numpy~=1.19.2, but you have numpy 1.21.0 which is incompatible.

openvino 2021.4.2 requires numpy<1.20,>=1.16.6, but you have numpy 1.21.0 which is incompatible.

openvino-dev 2021.4.2 requires numpy<1.20,>=1.16.6, but you have numpy 1.21.0 which is incompatible.

Successfully installed numpy-1.21.0 opencv-python-4.5.5.62 openmodelzoo-modelapi-0.0.0 scipy-1.5.4

It installed numpy-1.21.0, opencv-python-4.5.5.62, openmodelzoo-modelapi-0.0.0 and scipy-1.5.4. However, I noticed there are some incompatibility issues with numpy-1.21.0 so I uninstalled it and installed numpy-1.19.5 as follows:

>pip uninstall numpy

Found existing installation: numpy 1.21.0

Uninstalling numpy-1.21.0:

Would remove:

c:\users\manue\appdata\local\programs\python\python38\lib\site-packages\numpy-1.21.0.dist-info\*

c:\users\manue\appdata\local\programs\python\python38\lib\site-packages\numpy\*

c:\users\manue\appdata\local\programs\python\python38\scripts\f2py.exe

Proceed (Y/n)? y

Successfully uninstalled numpy-1.21.0

>pip install numpy==1.19.5

Collecting numpy==1.19.5

Using cached numpy-1.19.5-cp38-cp38-win_amd64.whl (13.3 MB)

Installing collected packages: numpy

Successfully installed numpy-1.19.5
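The conflict messages from pip can be double-checked by comparing version numbers by hand. The sketch below is a simplified stand-in for pip's resolver: it only handles plain dotted versions and a "minimum <= version < upper bound" range, and the helper names `parse_version` and `in_range` are hypothetical. The ranges are taken from the pip output above, with tensorflow's `numpy~=1.19.2` read as ">=1.19.2, <1.20".

```python
def parse_version(text):
    """Convert '1.21.0' into (1, 21, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def in_range(version, minimum, below):
    """True if minimum <= version < below."""
    return parse_version(minimum) <= parse_version(version) < parse_version(below)


# Ranges reported by pip: (package, minimum, exclusive upper bound)
requirements = [
    ("openvino / openvino-dev 2021.4.2", "1.16.6", "1.20"),
    ("tensorflow 2.4.4", "1.19.2", "1.20"),
]

results = {}
for candidate in ("1.21.0", "1.19.5"):
    results[candidate] = all(
        in_range(candidate, minimum, below) for _, minimum, below in requirements
    )
    print(candidate, results[candidate])
```

Under these assumptions numpy 1.21.0 falls outside both ranges and 1.19.5 falls inside them, which is consistent with reinstalling 1.19.5.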

I checked if the model API was successfully installed (seeing no errors when importing the model_api) as follows:

>python

Python 3.8.10 (tags/v3.8.10:3d8993a, May 3 2021, 11:48:03) [MSC v.1928 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> from openvino.model_zoo import model_api

>>> quit()

Now, I assumed that after installing "openmodelzoo_modelapi-0.0.0-py3-none-any.whl", I did not need to install anything else or run any further steps from the page you referred me to ("Python model API installation"). Please let me know if there are more steps to execute from that page.

Having said that, I thought it was time to try the demo, but it failed as follows:

>python object_detection_demo.py -at yolov4 -d GPU --loop -m <model_directory>\yolov4-tiny-416.xml -i <input_directory>\1644965910719.JPEG --labels <path>\classes.txt

Traceback (most recent call last):

File "object_detection_demo.py", line 28, in <module>

from openvino.model_zoo.model_api.models import DetectionModel, DetectionWithLandmarks, RESIZE_TYPES, OutputTransform

File "C:\Users\manue\AppData\Local\Programs\Python\Python38\lib\site-packages\openvino\model_zoo\model_api\models\__init__.py", line 32, in <module>

from .open_pose import OpenPose

File "C:\Users\manue\AppData\Local\Programs\Python\Python38\lib\site-packages\openvino\model_zoo\model_api\models\open_pose.py", line 23, in <module>

import openvino.runtime.opset8 as opset8

ModuleNotFoundError: No module named 'openvino.runtime'

Please let me know how to fix this issue.

Thank you and will be waiting for your reply.
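One thing worth checking here: as far as I know, the `openvino.runtime` namespace only exists in OpenVINO 2022.1 and later, while the pip log above shows openvino 2021.4.2, so a demo cloned from the master branch may simply be newer than the installed runtime. The sketch below is a quick way to test whether a module can be located; the helper name `has_module` is hypothetical.

```python
import importlib.util


def has_module(name):
    """Return True if `name` can be located (parent packages are imported in the process)."""
    try:
        return importlib.util.find_spec(name) is not None
    except ImportError:
        # Raised when a parent package (here: `openvino`) is missing or fails to import.
        return False


if has_module("openvino.runtime"):
    print("openvino.runtime is available")
else:
    print("openvino.runtime is missing; the installed openvino package may predate it")
```

If the module is missing, the two options would be to check out the Open Model Zoo tag that matches the installed OpenVINO version or to upgrade OpenVINO to match the demos.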
