I converted this YOLOv7 PyTorch model to ONNX with the idea of trying to use it in the OpenVINO toolkit. After converting the model to OpenVINO format, I load it like this:

```python
import cv2
import numpy as np
import matplotlib.pyplot as plt
from openvino.runtime import Core

# Path to the OpenVINO IR model (the matching .bin file sits next to the .xml)
model_path = "./yolov7.xml"

ie_core = Core()


def model_init(model_path):
    # Read the IR model and compile it for the CPU plugin
    model = ie_core.read_model(model=model_path)
    compiled_model = ie_core.compile_model(model=model, device_name="CPU")
    # First input port and first output port of the compiled model
    input_keys = compiled_model.input(0)
    output_keys = compiled_model.output(0)
    return input_keys, output_keys, compiled_model


input_key, output_keys, compiled_model = model_init(model_path)
print(compiled_model)
```

Printing `compiled_model` shows:

```
<CompiledModel:
inputs[
<ConstOutput: names[input.1] shape{1,3,640,640} type: f32>
]
outputs[
<ConstOutput: names[812] shape{1,25200,85} type: f32>,
<ConstOutput: names[588] shape{1,3,80,80,85} type: f32>,
<ConstOutput: names[669] shape{1,3,40,40,85} type: f32>,
<ConstOutput: names[750] shape{1,3,20,20,85} type: f32>
]>
```
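The input listed above is `[1, 3, 640, 640]`. A minimal preprocessing sketch, assuming the same convention as YOLOv7's `detect.py` (letterbox to 640x640 with gray padding value 114, BGR to RGB, scale to [0, 1]). The function name `letterbox_to_tensor` is hypothetical, and the resize is done in plain NumPy only to keep the example dependency-free; `cv2.resize` would be the usual choice.

```python
import numpy as np


def letterbox_to_tensor(image_bgr, size=640, pad_value=114):
    """Letterbox an HxWx3 BGR uint8 image into a [1, 3, size, size] float32 tensor.

    Returns the tensor plus the resize scale and the (left, top) padding,
    which are needed later to map boxes back onto the original image.
    """
    h, w = image_bgr.shape[:2]
    scale = min(size / h, size / w)
    new_h, new_w = int(round(h * scale)), int(round(w * scale))

    # Nearest-neighbour resize in pure NumPy (cv2.resize would normally be used).
    rows = np.minimum((np.arange(new_h) / scale).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / scale).astype(int), w - 1)
    resized = image_bgr[rows[:, None], cols[None, :]]

    # Pad the shorter side so the result is size x size, centred.
    canvas = np.full((size, size, 3), pad_value, dtype=np.uint8)
    top = (size - new_h) // 2
    left = (size - new_w) // 2
    canvas[top:top + new_h, left:left + new_w] = resized

    # BGR -> RGB, HWC -> CHW, float32 in [0, 1], then add the batch dimension.
    chw = np.ascontiguousarray(canvas[:, :, ::-1].transpose(2, 0, 1), dtype=np.float32)
    tensor = chw / 255.0
    return tensor[None, ...], scale, (left, top)
```

With the objects from the loading code, inference would then look like `result = compiled_model([tensor])[output_keys]`, which gives the `[1, 25200, 85]` array. Keep `scale` and the padding so detections can be mapped back onto the original frame.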

But how do I use this? The YOLOv7 repo contains a detect.py script and some directions on how to use it (plus an .ipynb notebook), but not a lot of information. Is that enough to be able to use this model in the OpenVINO toolkit?
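A minimal post-processing sketch for the first output (`[1, 25200, 85]`), assuming the standard YOLOv7 export in which each row is `[cx, cy, w, h, objectness, 80 COCO class scores]`, already passed through a sigmoid and expressed in 640x640 input pixels, and that "person" is class 0 as in COCO. The other three outputs are the raw per-scale feature maps and are not needed for this. `decode_people` and `nms` are hypothetical helpers: a simplified NumPy version of the confidence filtering and non-maximum suppression that `detect.py` performs.

```python
import numpy as np


def nms(boxes, scores, iou_threshold=0.45):
    """Greedy non-maximum suppression; boxes are [N, 4] as x1, y1, x2, y2."""
    order = scores.argsort()[::-1]
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # Intersection of the current best box with every remaining box.
        x1 = np.maximum(boxes[i, 0], boxes[rest, 0])
        y1 = np.maximum(boxes[i, 1], boxes[rest, 1])
        x2 = np.minimum(boxes[i, 2], boxes[rest, 2])
        y2 = np.minimum(boxes[i, 3], boxes[rest, 3])
        inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
        area_i = (boxes[i, 2] - boxes[i, 0]) * (boxes[i, 3] - boxes[i, 1])
        area_rest = (boxes[rest, 2] - boxes[rest, 0]) * (boxes[rest, 3] - boxes[rest, 1])
        iou = inter / (area_i + area_rest - inter + 1e-9)
        # Drop boxes that overlap the chosen one too much.
        order = rest[iou <= iou_threshold]
    return keep


def decode_people(prediction, conf_threshold=0.25, iou_threshold=0.45, person_class=0):
    """Turn a [1, N, 85] YOLOv7 output into person boxes (x1, y1, x2, y2) and scores."""
    pred = prediction[0]
    # Final score = objectness * probability of the "person" class.
    scores = pred[:, 4] * pred[:, 5 + person_class]
    # Keep rows where "person" is the best class and the score is high enough.
    is_person = pred[:, 5:].argmax(axis=1) == person_class
    mask = (scores >= conf_threshold) & is_person
    pred, scores = pred[mask], scores[mask]
    if pred.shape[0] == 0:
        return np.zeros((0, 4), dtype=np.float32), np.zeros((0,), dtype=np.float32)
    # Convert centre/size boxes to corner boxes.
    boxes = np.empty((pred.shape[0], 4), dtype=np.float32)
    boxes[:, 0] = pred[:, 0] - pred[:, 2] / 2
    boxes[:, 1] = pred[:, 1] - pred[:, 3] / 2
    boxes[:, 2] = pred[:, 0] + pred[:, 2] / 2
    boxes[:, 3] = pred[:, 1] + pred[:, 3] / 2
    keep = nms(boxes, scores, iou_threshold)
    return boxes[keep], scores[keep]
```

The boxes come out in 640x640 network-input coordinates, so for a letterboxed frame the padding still has to be subtracted and the resize undone. `len(boxes)` then gives a rough people count per frame. The 0.25 confidence and 0.45 IoU thresholds are common YOLO starting points and would need tuning on conference-room footage.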

I want to use this as a people detector, and from my testing with detect.py it works really well. I'm just curious whether I could do this in the OpenVINO toolkit.

Thanks for your time in responding.

Hi Bartlino,

Thanks for reaching out.

For your information, YOLOv7 is currently neither an officially supported topology in the OpenVINO toolkit nor validated by us.

For sharing purposes, you can check out this GitHub repository (this is an external link and is not maintained by Intel), which demonstrates how to deploy a pre-trained YOLOv7 model with the OpenVINO Runtime API.

Alternatively, you may use any of the Intel pre-trained models for OpenVINO that fit your people detector application. Below are a few models that might be relevant to you.

- Person-detection-0106

- Person-detection-0200

- Person-detection-0201

- Person-detection-0202

- Person-detection-0203

- Person-detection-0301

- Person-detection-0302

- Person-detection-0303

- Person-detection-retail-0002

- Person-detection-retail-0013
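Several of these models (person-detection-retail-0013, for example) document an SSD-style `[1, 1, N, 7]` output in which each row is `[image_id, label, conf, x_min, y_min, x_max, y_max]` with coordinates normalized to [0, 1]; other models in the list may use a different output layout, so check each model's page. A small hypothetical helper, `count_people`, that turns such an output into pixel boxes and a count, assuming that layout:

```python
import numpy as np


def count_people(detection_out, frame_width, frame_height, conf_threshold=0.5):
    """Parse a [1, 1, N, 7] SSD-style output into pixel boxes and a count.

    Each row is assumed to be [image_id, label, conf, x_min, y_min, x_max, y_max]
    with coordinates normalized to [0, 1].
    """
    boxes = []
    for image_id, _label, conf, x_min, y_min, x_max, y_max in detection_out[0, 0]:
        if image_id < 0:  # image_id == -1 marks the end of valid detections
            break
        if conf < conf_threshold:
            continue
        # Scale normalized coordinates back to the original frame size.
        boxes.append((
            int(x_min * frame_width),
            int(y_min * frame_height),
            int(x_max * frame_width),
            int(y_max * frame_height),
        ))
    return len(boxes), boxes
```

The raw `detection_out` array would come from `compiled_model([input_tensor])[compiled_model.output(0)]` after resizing the frame to the model's documented input size.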

Regards,

Aznie

Okay, good to know that YOLOv7 isn't officially supported in OpenVINO.

One thing to note: I have tested all the pre-trained models you listed, but the results are not that good in a conference room setting in a building. See this Microsoft Word document; it's my write-up for the Udacity/bootcamp course on AI for edge devices.

Would there be any other solutions I could use within the Open Model Zoo pre-trained library? Sorry if this sounds like a silly question, but is it possible to take an Open Model Zoo pre-trained model and enhance or optimize it for people detection? If so, is there a beginner tutorial?

Thank you for your time in responding!

Hi Bartlino,

The Intel pre-trained models are used by the Open Model Zoo demos to show their capabilities. To run them in a custom application, you need to retrain the models based on your application's requirements. You may retrain the models using OpenVINO™ Training Extensions.

Below are the steps to train a person detection model using OpenVINO™ Training Extensions.

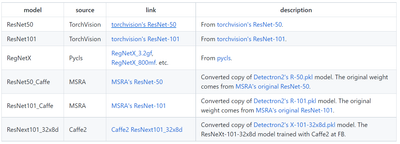

1. Choose an object detection model from the mmdetection model zoo.

2. Train the selected model as a person detection model using OpenVINO™ Training Extensions.

Refer to the Quick Start Guide.

Regards,

Aznie

Among the mmdetection models, could you recommend one that would work best for my application of counting people in a conference room of a building? I like that some Open Model Zoo models state they work well at different light levels, which is definitely something I am up against as well, since the daylight from outdoors is constantly changing.

For retraining the model with my own image dataset, how many images of people would you recommend having in my dataset?

Thank you for your time in responding.

Hi,

Could someone give me a tip on whether I am on track at all in understanding the Quick Start? For example, these arguments:

```shell
ote optimize ./external/mmdetection/configs/ote/custom-object-detection/gen3_mobilenetV2_ATSS/template.yaml \
  --load-weights openvino.xml \
  --save-model-to ./pot_output \
  --save-performance ./pot_output/performance.json \
  --train-ann-file ./data/car_tree_bug/annotations/instances_default.json \
  --train-data-roots ./data/car_tree_bug/images \
  --val-ann-file ./data/car_tree_bug/annotations/instances_default.json \
  --val-data-roots ./data/car_tree_bug/images
```

This is for optimizing an existing gen3_mobilenetV2_ATSS model with new input data coming from the ./data/car_tree_bug/images directory.
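The `--train-ann-file` and `--val-ann-file` arguments point to COCO-style JSON (`instances_default.json` is the file name CVAT gives its COCO export). Annotations are normally drawn in a labelling tool, but to show the structure a person-only dataset would need, here is a minimal sketch with a hypothetical `build_coco_annotations` helper. The field names follow the COCO detection format; whether a particular OTE template expects extra fields is an assumption to check against the Quick Start Guide.

```python
import json


def build_coco_annotations(images, person_boxes, path=None):
    """Build a minimal COCO-style detection dict with a single "person" category.

    images: list of (file_name, width, height)
    person_boxes: dict mapping file_name -> list of [x, y, w, h] boxes in pixels
    """
    coco = {
        "images": [],
        "annotations": [],
        "categories": [{"id": 1, "name": "person"}],
    }
    ann_id = 1
    for img_id, (file_name, width, height) in enumerate(images, start=1):
        coco["images"].append(
            {"id": img_id, "file_name": file_name, "width": width, "height": height}
        )
        for x, y, w, h in person_boxes.get(file_name, []):
            coco["annotations"].append({
                "id": ann_id,
                "image_id": img_id,
                "category_id": 1,
                "bbox": [x, y, w, h],  # COCO boxes are [x_min, y_min, width, height]
                "area": w * h,
                "iscrowd": 0,
            })
            ann_id += 1
    # Optionally write the file that --train-ann-file / --val-ann-file would point to.
    if path is not None:
        with open(path, "w") as f:
            json.dump(coco, f, indent=2)
    return coco
```

The images themselves would go in the directory passed to `--train-data-roots`, with `file_name` relative to it.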

I am looking to do something similar, but with images of people in conference rooms instead of cars, trees, and bugs. Any beginner tips are appreciated; I don't have a lot of experience here. Is it just trial and error to get the best results when picking between the available options?

Also, what's the difference between ImageNet Pretrained Models and Baselines? Where's a good place to start?

Hi Bartlino,

Regarding your previous questions, there is no exact minimum number of custom images required to train an object detection model. You may decide based on the model accuracy you need. However, a small number of training images can reduce the model's accuracy.
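Deciding "based on the model accuracy required" means measuring accuracy on images the model never trained on. The Quick Start command passes the same `instances_default.json` to both `--train-ann-file` and `--val-ann-file`, so for a real dataset a held-out split is worth adding. A minimal sketch, assuming COCO-style annotations; `split_coco` is a hypothetical helper that splits by image so no image ends up in both sets.

```python
import random


def split_coco(coco, val_fraction=0.2, seed=0):
    """Split a COCO-style dict into (train, val) dicts by image id."""
    image_ids = [img["id"] for img in coco["images"]]
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(image_ids)
    n_val = max(1, int(len(image_ids) * val_fraction))
    val_ids = set(image_ids[:n_val])

    def subset(keep):
        # Keep images and their annotations together; categories are shared.
        return {
            "images": [im for im in coco["images"] if keep(im["id"])],
            "annotations": [a for a in coco["annotations"] if keep(a["image_id"])],
            "categories": coco["categories"],
        }

    return subset(lambda i: i not in val_ids), subset(lambda i: i in val_ids)
```

Each returned dict can be written with `json.dump` and passed to `--train-ann-file` and `--val-ann-file` respectively.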

As for selecting a model that works well with your application, we cannot suggest a specific model, since the pre-trained models are based on the OMZ demos' requirements. You might try a person detection model, since your main objective is to detect people.

Next, yes, you are right about the OpenVINO Training Extensions (OTE) command. The Post-training Optimization Tool (POT) accelerates the inference speed of your Intermediate Representation (IR) model by applying post-training quantization. This helps your model run faster and use less memory. In some cases, it causes a slight reduction in accuracy.
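To make "post-training quantization" concrete: the core idea is to map FP32 values onto 8-bit integers through a scale factor. The sketch below shows symmetric per-tensor INT8 quantization of a random weight matrix in NumPy. It illustrates the general principle only and is not POT's algorithm, which (for example in DefaultQuantization) also calibrates activation ranges on a dataset.

```python
import numpy as np


def quantize_int8(x):
    """Symmetric per-tensor INT8 quantization: x ~= scale * q, with q in [-127, 127]."""
    scale = max(float(np.abs(x).max()), 1e-12) / 127.0  # guard against all-zero input
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Map INT8 values back to approximate FP32 values."""
    return q.astype(np.float32) * scale


weights = np.random.default_rng(0).normal(size=(64, 64)).astype(np.float32)
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print("bytes fp32:", weights.nbytes, "bytes int8:", q.nbytes)  # 16384 vs 4096
print("max abs error:", np.abs(weights - restored).max())      # at most about scale / 2
```

The 4x smaller storage is where the memory and speed gains come from, and the rounding error (bounded by half the scale per value) is the source of the slight accuracy drop.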

NNCF (Neural Network Compression Framework) integrates with PyTorch and TensorFlow to quantize and compress your model during or after training, increasing model speed while maintaining accuracy and keeping the model in the original framework's format. Refer to the OTE Developer Guide for more details.

Steps to start training a custom person detection model:

- Follow Steps 1 to 5 in the training_extensions documentation.

- Deactivate the virtual environment once completed.

- Follow Steps 1 to 5 in the person detection documentation.

Apart from that, the ImageNet Pretrained Models are models that have been trained with respect to the OMZ demos' requirements, while the Baselines are the algorithm and topology of the model network.

Here is a video about OpenVINO Training Extensions. Please note that this video is outdated, but the concepts of OTE might still help you.

Regards,

Aznie

Thank you so much, especially for helping junior-level developers leverage this platform.

I think I have enough info to get started. If I run into another technical snag, I'll post another comment asking for Training Extensions help.

Hi Bartlino,

I am glad to help you.

Thank you for your question. Since we have provided all the information, this thread will no longer be monitored. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
