
Hi,

What I'm trying to do is convert an ONNX model to IR.

My command:

python /opt/intel/openvino_2021/deployment_tools/model_optimizer/mo.py --input_model "model.onnx" --output_dir "cur_folder/"

Here is a summary of the error:

[ ERROR ] The ExpandDims node Unsqueeze_583 has more than 1 input

[ ERROR ] Cannot infer shapes or values for node "Slice_4".

[ ERROR ] Output shape: [0 3 0 0] of node "Slice_4" contains non-positive values

[ ERROR ]

[ ERROR ] It can happen due to bug in custom shape infer function <function Slice.infer at 0x7fda43996f70>.

[ ERROR ] Or because the node inputs have incorrect values/shapes.

[ ERROR ] Or because input shapes are incorrect (embedded to the model or passed via --input_shape).

Please see the attachment for more details.


Hi TonyWong,

I have successfully converted your model into Intermediate Representation (IR). Your YOLOv5 model has 3 output nodes; you can use Netron to visualize the YOLOv5 ONNX model and find them. Use the command below to get the IR files.

Model Optimizer command: python mo.py --input_model model.onnx --output Conv_410,Conv_322,Conv_498 --input_shape [1,3,512,512]

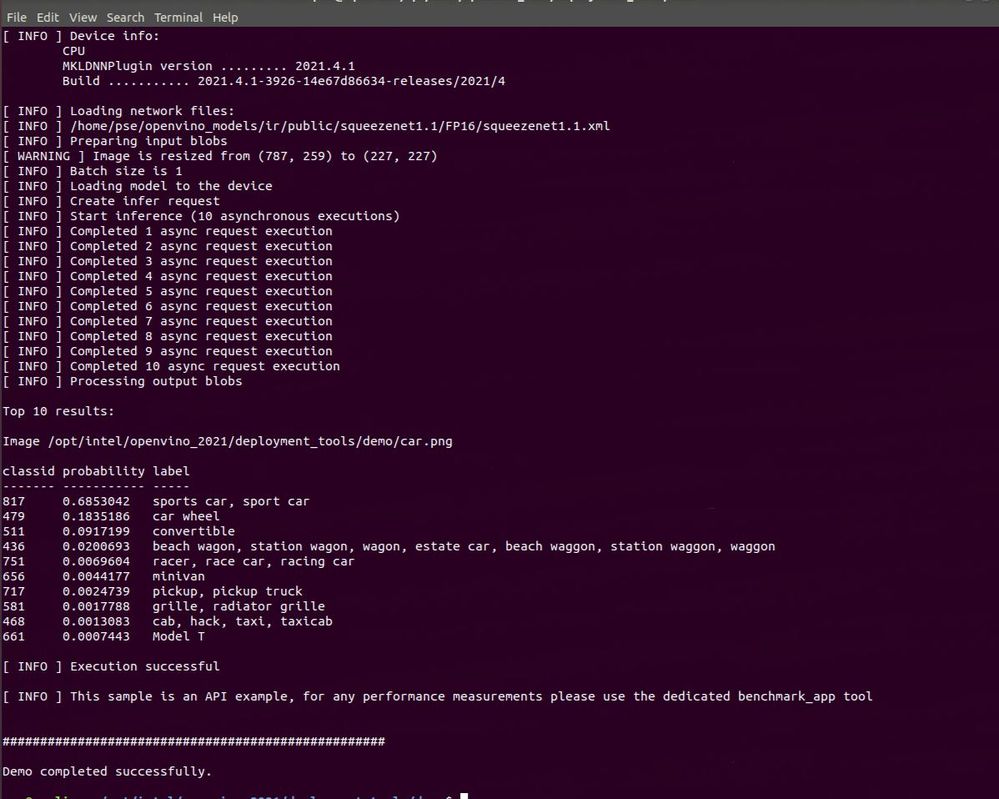

I attached a screenshot of the conversion above.
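For anyone scripting this conversion, the following is a minimal Python sketch that assembles the same Model Optimizer call as an argument list. The helper `build_mo_command` is hypothetical; the flags (`--input_model`, `--output`, `--input_shape`, `--output_dir`) are the ones used in this thread, and the `mo.py` path assumes the default OpenVINO 2021 install location.

```python
import shlex
import sys
from typing import List, Optional, Sequence


def build_mo_command(
    mo_script: str,
    input_model: str,
    input_shape: Optional[Sequence[int]] = None,
    outputs: Optional[Sequence[str]] = None,
    output_dir: Optional[str] = None,
) -> List[str]:
    """Assemble an argv list for the Model Optimizer script (mo.py)."""
    cmd = [sys.executable, mo_script, "--input_model", input_model]
    if outputs:
        # Cut the graph at these nodes, e.g. the three head convolutions.
        cmd += ["--output", ",".join(outputs)]
    if input_shape:
        # Freeze dynamic dims such as [batch, 3, height, width] to concrete values.
        cmd += ["--input_shape", "[" + ",".join(str(d) for d in input_shape) + "]"]
    if output_dir:
        cmd += ["--output_dir", output_dir]
    return cmd


if __name__ == "__main__":
    cmd = build_mo_command(
        "/opt/intel/openvino_2021/deployment_tools/model_optimizer/mo.py",
        "model.onnx",
        input_shape=[1, 3, 512, 512],
        outputs=["Conv_410", "Conv_322", "Conv_498"],
        output_dir="cur_folder/",
    )
    # Print a shell-safe version of the command; to run it instead:
    # subprocess.run(cmd, check=True)
    print(shlex.join(cmd))
```

Passing the list straight to `subprocess.run` avoids shell quoting problems with the brackets in the `--input_shape` value.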

Regards,

Aznie


Hi TonyWong,

Thanks for reaching out.

The error message shows that Model Optimizer cannot infer shapes or values for the specified node. This can happen because of a bug in the custom shape inference function, because the node inputs have incorrect values or shapes, or because the input shapes are incorrect. You need to specify the input shapes of your model.
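To illustrate why the unknown dimensions matter, here is a rough Python sketch of Slice shape inference for positive steps. It assumes, based on the `[0 3 0 0]` in the error, that the unknown batch, height and width were treated as 0, and it assumes, hypothetically, that `Slice_4` takes every second row or column (`x[..., ::2, ::2]`), as the Focus layer in earlier YOLOv5 releases does. `slice_output_shape` is an illustrative helper, not Model Optimizer's own implementation.

```python
from typing import List, Sequence


def slice_output_shape(
    in_shape: Sequence[int],
    axes: Sequence[int],
    starts: Sequence[int],
    ends: Sequence[int],
    steps: Sequence[int],
) -> List[int]:
    """Approximate ONNX Slice shape inference (positive steps only).

    Slicing a Python range clamps start/end to [0, dim] and wraps negative
    indices, which matches ONNX Slice semantics for positive steps.
    """
    out = list(in_shape)
    for axis, start, end, step in zip(axes, starts, ends, steps):
        out[axis] = len(range(in_shape[axis])[start:end:step])
    return out


INT64_MAX = 2**63 - 1  # exporters often encode "slice to the end" this way

# Unknown spatial dims treated as 0: the result stays non-positive.
print(slice_output_shape([0, 3, 0, 0], [2, 3], [0, 0], [INT64_MAX, INT64_MAX], [2, 2]))
# [0, 3, 0, 0]

# With a concrete --input_shape the same slice is valid.
print(slice_output_shape([1, 3, 512, 512], [2, 3], [0, 0], [INT64_MAX, INT64_MAX], [2, 2]))
# [1, 3, 256, 256]
```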

You can share your model with us so we can investigate further. Meanwhile, please refer to the model-specific parameters described in Converting an ONNX* Model.

Regards,

Aznie


Aznie,

Many thanks for your reply.

I've read the link you shared.

I've also tried to specify the shape (though the only requirement is 3 color channels).

Here is the input shape shown in Netron: float32[batch, 3, height, width]

I've tried specifying the input shape, with no luck.

As the model is 95 MB, here is a Google Drive link.

Many thanks for your kind reply and help.

Tony


Oh, one more thing I want to mention:

unlike all the existing samples, the input dimensions do not specify the batch, height, and width of the image.

So I do not know what values to specify.
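One way to handle a shape like `float32[batch, 3, height, width]` is to choose concrete values for the named dimensions and pass the result through `--input_shape`. The sketch below does that substitution; `freeze_shape` is a hypothetical helper, and the values 1 and 512 simply mirror the `--input_shape [1,3,512,512]` used in this thread's Model Optimizer command.

```python
from typing import Dict, Sequence, Union

Dim = Union[int, str]  # an int for fixed dims, a name for dynamic ones


def freeze_shape(dims: Sequence[Dim], values: Dict[str, int]) -> str:
    """Replace named (dynamic) dims with concrete ints and format the result
    the way --input_shape expects, e.g. "[1,3,512,512]"."""
    concrete = []
    for d in dims:
        if isinstance(d, str):
            if d not in values:
                raise KeyError(f"no value given for dynamic dimension {d!r}")
            concrete.append(values[d])
        else:
            concrete.append(d)
    if any(v <= 0 for v in concrete):
        raise ValueError(f"all dimensions must be positive, got {concrete}")
    return "[" + ",".join(str(v) for v in concrete) + "]"


# Shape as displayed by Netron for this model: float32[batch, 3, height, width]
print(freeze_shape(["batch", 3, "height", "width"], {"batch": 1, "height": 512, "width": 512}))
# [1,3,512,512]
```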


It's something like a dynamic input shape (and it looks like OpenVINO does not support that yet).


Wow. Many thanks.

Quick question: may I ask why the width and height are 512?


Hi TonyWong,

The 512 value is the standard size used for Model Optimizer; it is not the input shape of your model. If you do not specify the input shape, Model Optimizer uses the input shape of the original model. If you specify 512 for the height and width, the generated IR will have a fixed 512×512 input.
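Because an IR generated this way expects exactly 1×3×512×512 input, every image has to be brought to that size before inference. A common approach for YOLO-style detectors is letterboxing: scale while preserving the aspect ratio, then pad. The sketch below only computes the scale and padding; `letterbox_params` is hypothetical, and whether this matches how this particular model was trained is an assumption.

```python
from typing import Tuple


def letterbox_params(
    src_w: int, src_h: int, dst_w: int = 512, dst_h: int = 512
) -> Tuple[float, Tuple[int, int], Tuple[int, int, int, int]]:
    """Return (scale, (new_w, new_h), (left, right, top, bottom)) needed to fit
    a src_w x src_h image into dst_w x dst_h without distorting it."""
    scale = min(dst_w / src_w, dst_h / src_h)
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    pad_w, pad_h = dst_w - new_w, dst_h - new_h
    # Split padding evenly; any odd pixel goes to the right/bottom.
    left, top = pad_w // 2, pad_h // 2
    right, bottom = pad_w - left, pad_h - top
    return scale, (new_w, new_h), (left, right, top, bottom)


print(letterbox_params(1280, 720))
# (0.4, (512, 288), (0, 0, 112, 112))
```

The actual resize and padding can then be done with the image library of your choice, followed by reordering the HWC image into the NCHW layout the model expects.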

Regards,

Aznie


Thank you, Aznie.

One thing I want to mention: although your 512 parameter does make mo.py pass, here is my modification that actually produces results.

FYI, your documentation has a lot of problems.

For example, this tutorial is badly outdated.

Also, your official documentation, even the first page:

https://docs.openvino.ai/latest/openvino_docs_get_started_get_started_linux.html

has problems. When I ran this command:

./demo_squeezenet_download_convert_run.sh

I got the following errors (I'm using Ubuntu 20.04 and OpenVINO 2021.4, the latest so far):

CMake Error at object_detection_sample_ssd/config/InferenceEngineConfig.cmake:36 (message):

File or directory

/opt/intel/openvino_2021/deployment_tools/inference_engine/samples/cpp/object_detection_sample_ssd/external/tbb/cmake

referenced by variable _tbb_dir does not exist !

Call Stack (most recent call first):

object_detection_sample_ssd/config/InferenceEngineConfig.cmake:114 (set_and_check)

CMakeLists.txt:237 (find_package)

object_detection_sample_ssd/CMakeLists.txt:5 (ie_add_sample)

I hope this feedback helps.


FYI, the error mentioned in my last post can be fixed by changing line 31 of the /opt/intel/openvino_2021/inference_engine/samples/cpp/object_detection_sample_ssd/config/InferenceEngineConfig.cmake file to:

get_filename_component(PACKAGE_PREFIX_DIR "${CMAKE_CURRENT_LIST_DIR}/../../../../" ABSOLUTE)
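To see where the modified line points, here is a small Python sketch that mimics the lexical `..` collapsing that `get_filename_component(... ABSOLUTE)` performs on an absolute base directory (an approximation: it does not resolve symlinks). The config directory is assumed from the tbb path in the error output above, and `resolve_prefix` is a hypothetical helper.

```python
import posixpath


def resolve_prefix(cmake_list_dir: str, rel: str = "../../../../") -> str:
    """Join rel onto an absolute directory and collapse '..' lexically."""
    return posixpath.normpath(posixpath.join(cmake_list_dir, rel))


# Assumed location of InferenceEngineConfig.cmake, derived from the error output.
config_dir = (
    "/opt/intel/openvino_2021/deployment_tools/inference_engine"
    "/samples/cpp/object_detection_sample_ssd/config"
)
prefix = resolve_prefix(config_dir)
print(prefix)
# /opt/intel/openvino_2021/deployment_tools/inference_engine
print(posixpath.join(prefix, "external/tbb/cmake"))
# /opt/intel/openvino_2021/deployment_tools/inference_engine/external/tbb/cmake
```

Going up four levels moves PACKAGE_PREFIX_DIR from the sample's own folder to the inference_engine root, which is why the tbb lookup starts succeeding.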

But immediately another error appears:

set_target_properties Can not find target to add properties to:

IE::inference_engine

These errors in the tutorial are really torture for engineers who want to use OpenVINO. crying~~~~~~~~~~~


Sigh~~~~~~. I managed to fix all these path parameter issues,

and also changed line 133:

include("${CMAKE_CURRENT_LIST_DIR}/InferenceEngineTargets.cmake")

But I've searched everywhere on my local machine and still cannot find InferenceEngineTargets.cmake.

Can you help?

(Yes, I've already skipped this and moved on, but similar issues keep happening.)
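Since the include on line 133 expects InferenceEngineTargets.cmake in the same directory as InferenceEngineConfig.cmake, it may help to confirm whether that file exists anywhere in the install tree. The sketch below is roughly equivalent to `find /opt/intel -name InferenceEngineTargets.cmake`; `find_files` is a hypothetical helper and `/opt/intel` is just the default install prefix seen in this thread.

```python
from pathlib import Path
from typing import Iterable, List


def find_files(name: str, roots: Iterable[str]) -> List[Path]:
    """Recursively search each root directory for files called `name`."""
    hits: List[Path] = []
    for root in roots:
        base = Path(root)
        if not base.is_dir():
            continue  # skip roots that do not exist on this machine
        try:
            hits.extend(p for p in base.rglob(name) if p.is_file())
        except PermissionError:
            pass  # unreadable subtree; results may be incomplete
    return sorted(hits)


if __name__ == "__main__":
    for path in find_files("InferenceEngineTargets.cmake", ["/opt/intel"]):
        print(path)
```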


Hi TonyWong,

Are you unable to get the IR files using the command? What do you mean by the result not being as expected? Please share the error you get when running Model Optimizer.

As for the demo_squeezenet_download_convert_run.sh failure, it might be related to nGraph. This demo verifies that your installation is successful. Could you confirm that you completed all the steps in Install Intel® Distribution of OpenVINO™ toolkit for Linux*?

Please make sure you install all OpenVINO packages and follow the installation steps carefully. You may have forgotten to install the external dependencies; please refer to the following link to install them.

Meanwhile, I can run demo_squeezenet_download_convert_run.sh successfully, as shown below:

Regards,

Aznie


Hi Aznie,

I'm not blaming anyone.

I know you are here trying to help me.

And I do appreciate it.

My company is trying to use OpenVINO across all the ML inference machines in our cloud infrastructure, which could potentially save a lot.

Firstly, the 512, 512 parameter you provided works when producing the IR files (but when running inference, it complains about a dimension error, so I changed it into

This is just FYI. In Netron, the output format is no longer the same as in the ONNX model.

Maybe that's by design (can you confirm that?).

At least it produces something.
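One plausible explanation, offered as an assumption rather than a confirmed answer: cutting the graph with `--output Conv_410,Conv_322,Conv_498` drops the YOLOv5 Detect layer's post-processing (reshape, sigmoid, grid and anchor decoding), so the IR returns three raw head feature maps instead of the single decoded output of the ONNX model. Assuming the standard YOLOv5 head (strides 8/16/32, 3 anchors per scale, 4 box values + 1 objectness + one score per class), the sketch below computes the shapes you would expect. `raw_head_shapes` is hypothetical, `num_classes=80` is only a placeholder (the COCO class count), and which Conv node maps to which stride is not known from this thread.

```python
from typing import List, Sequence, Tuple


def raw_head_shapes(
    img_size: int,
    num_classes: int,
    strides: Sequence[int] = (8, 16, 32),
    anchors_per_scale: int = 3,
    batch: int = 1,
) -> List[Tuple[int, int, int, int]]:
    """Expected NCHW shapes of the raw (undecoded) detection-head convolutions."""
    # Per anchor: 4 box terms + 1 objectness + num_classes class scores.
    channels = anchors_per_scale * (num_classes + 5)
    return [(batch, channels, img_size // s, img_size // s) for s in strides]


print(raw_head_shapes(512, num_classes=80))
# [(1, 255, 64, 64), (1, 255, 32, 32), (1, 255, 16, 16)]
```

If the IR outputs match shapes like these, the decoding step that the cut removed would have to be reimplemented in the application code.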

Also, there's a time that I have

And yes, I have followed this link:

to install everything.

As you can see, the build errors I mentioned above are path issues, and I can fix them by changing the paths.

As for your screenshot above: yes, at one point I ran that script and got the same result as you.

But not the last time, when I hit some path configuration errors.

Best wishes.

I will create a new Ubuntu 20.04 VM and start from scratch. Hopefully there will be no silly errors this time.

Let's close this question; I will post other questions separately.

Again, thank you, Aznie.

Best wishes.


Hi TonyWong,

This thread will no longer be monitored since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
