I have a TensorFlow 2 model which is based on https://www.tensorflow.org/api_docs/python/tf/keras/applications/inception_v3/InceptionV3

but with an input of 160,160,3 and a final softmax dense layer with 12 outputs.

I convert the model to an IR model with:

mo --saved_model_dir . --input_shape [1,160,160,3] --output_dir ir --framework 'tf' --layout 'input(NHWC)' --reverse_input_channels --mean_values [127.5,127.5,127.7] --scale_values [127.5,127.5,127.5]

When I load the IR model and set the device to CPU, I get the correct results, the same as with the TF2 frozen model. But if I set the device to MYRIAD, I always get a predicted class of 9.

I'm not sure why. Is it a precision difference between the MYRIAD and CPU devices? I am running it on a Neural Compute Stick 2.

Using:

OpenVINO runtime version: 2022.1.0-7019-cdb9bec7210-releases/2022/1 and

Model Optimizer version: 2022.1.0-7019-cdb9bec7210-releases/2022/1

Any help or advice is appreciated, thanks
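One way to narrow this down is to run the same input through the IR on both devices and compare the raw softmax vectors rather than only the predicted class. The sketch below assumes the OpenVINO 2022.1 Python API (`openvino.runtime.Core`) and an IR at a path such as `ir/saved_model.xml` (an assumed file name, based on the `--output_dir` above). `compare_outputs`, `infer_on_device`, and `compare_devices` are hypothetical helper names, not part of OpenVINO.

```python
from typing import Sequence


def compare_outputs(cpu_probs: Sequence[float], myriad_probs: Sequence[float]) -> dict:
    """Summarise how two softmax vectors for the same input differ."""
    if len(cpu_probs) != len(myriad_probs):
        raise ValueError("outputs must have the same number of classes")
    cpu_top = max(range(len(cpu_probs)), key=lambda i: cpu_probs[i])
    myriad_top = max(range(len(myriad_probs)), key=lambda i: myriad_probs[i])
    max_diff = max(abs(a - b) for a, b in zip(cpu_probs, myriad_probs))
    return {
        "cpu_top1": cpu_top,
        "myriad_top1": myriad_top,
        "same_top1": cpu_top == myriad_top,
        "max_abs_diff": max_diff,
    }


def infer_on_device(model_xml: str, device: str, batch) -> list:
    """Run one inference and return the first output as a flat list.

    `batch` is assumed to be a float32 NumPy array of shape (1, 160, 160, 3).
    """
    from openvino.runtime import Core  # lazy import: requires OpenVINO

    core = Core()
    model = core.read_model(model_xml)
    compiled = core.compile_model(model, device)
    results = compiled.infer_new_request({0: batch})
    return results[compiled.output(0)].ravel().tolist()


def compare_devices(model_xml: str, batch, devices=("CPU", "MYRIAD")) -> dict:
    """Run the same batch on two devices and compare the outputs."""
    first = infer_on_device(model_xml, devices[0], batch)
    second = infer_on_device(model_xml, devices[1], batch)
    return compare_outputs(first, second)
```

A call such as `compare_devices("ir/saved_model.xml", batch)` returns both top-1 classes and the largest per-class difference. Because the mean and scale values were passed to `mo`, the IR presumably expects raw 0-255 pixel values rather than pre-normalised ones, so the same raw input should be used on both devices.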


Hi,

Can you share your model files so that we can validate this on our side?

Did you use any custom layers or operations in this model?

Sincerely,

Iffa


No custom layers or operations. Here is my frozen TF model:

https://drive.google.com/file/d/1rLFy7-py3tSL8xGw-1Qe94csbb_jvEVF/view?usp=sharing

This is the model creation code:

import tensorflow as tf

# InceptionV3 backbone with ImageNet weights, without the classification head
incModel = tf.keras.applications.InceptionV3(
    weights="imagenet",
    include_top=False,
    input_shape=(160, 160, 3),
)

# New 12-class softmax head; the backbone is called with training=False
input = tf.keras.Input(shape=(160, 160, 3), name="input")
x = incModel(input, training=False)
x = tf.keras.layers.GlobalAveragePooling2D()(x)
x = tf.keras.layers.Dense(12, activation="softmax", name="prediction")(x)
incModel = tf.keras.models.Model(input, outputs=x)

Thanks


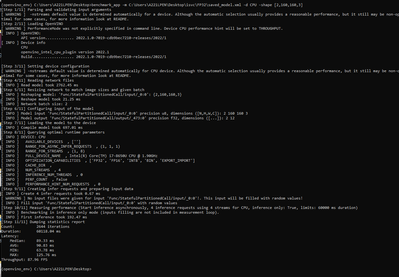

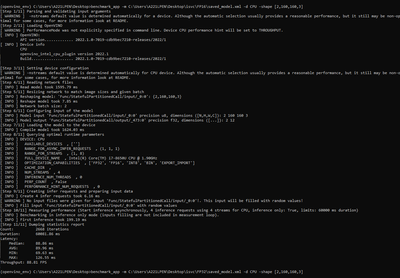

I checked your model and here are my findings for FP32, FP16 on CPU, and FP16 on MYRIAD. In short:

| Metric | FP32 | FP16 CPU | FP16 MYRIAD |
|---|---|---|---|
| Count (iterations) | 2644 | 2668 | 2860 |
| Duration (ms) | 60118.04 | 60081.86 | 60099.45 |
| Latency, median (ms) | 89.33 | 88.86 | 83.52 |
| Latency, average (ms) | 90.83 | 89.96 | 83.82 |
| Latency, min (ms) | 63.78 | 69.63 | 48.40 |
| Latency, max (ms) | 125.76 | 126.55 | 94.76 |
| Throughput (FPS) | 87.96 | 88.81 | 95.18 |

Based on the results, FP32 and FP16 on CPU perform almost identically, with throughput differing by only 0.85 FPS. FP16 on MYRIAD has the highest throughput of the three, which is expected.

However, bear in mind that compressing a model from full precision (FP32) to a smaller format (FP16/INT8) trades accuracy for size and speed. If your use case demands high accuracy, such as clinical results, shrinking the model is not recommended because predictions become less accurate. If your use case needs fast results and can tolerate less accurate predictions, a smaller format is suitable.
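To make the precision trade-off concrete, here is a minimal sketch using only the Python standard library, whose `struct` module supports IEEE 754 half precision through the `e` format. It illustrates general FP16 behaviour (rounding and a limited range); it is not a diagnosis of the model in this thread, and `to_fp16` and `fits_fp16` are hypothetical helper names.

```python
import struct


def to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def fits_fp16(value: float) -> bool:
    """Return True if `value` can be stored as a finite FP16 number."""
    try:
        struct.pack("<e", value)
        return True
    except OverflowError:
        return False


print(to_fp16(0.1))        # close to, but not exactly, 0.1
print(to_fp16(2049.0))     # not every integer above 2048 is representable
print(fits_fp16(65504.0))  # 65504 is the largest finite FP16 value
print(fits_fp16(70000.0))  # larger magnitudes do not fit in FP16
```

Small rounding errors like these usually leave a softmax ranking unchanged, while weights or intermediate values outside the FP16 range are a more plausible way for a conversion to change the predicted class.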

This might help you to understand better.

Sincerely,

Iffa


Sorry, I think you misunderstood the problem.

The problem is that when I run the model on a MYRIAD device I always get an incorrect prediction (predicted class 9), but when I run the same model on the CPU I get the correct class.

Thanks,

Giampaolo


Hi,

What I meant by less accurate is this: a model that runs on MYRIAD needs to be in FP16 format, which is compressed from the full-precision model. This means the model running on MYRIAD (FP16) will give less accurate results than on CPU (if you are using FP32 on CPU, which you didn't specify in the first place).

Another possibility is that your result is due to the hardware's computing capabilities, or that it is caused by your custom inference code itself. (The VPU, or MYRIAD device, definitely has less computing power than the CPU.)

I checked your model's performance with FP16 precision on both CPU and MYRIAD, and FP16 on MYRIAD serves its purpose because it does infer faster. You can certainly run FP16 on CPU; however, it is not the most appropriate precision for that device.

Check the compatible precisions for target hardware devices (focus on CPU and VPU (MYRIAD)).

To sum up, those are the possible reasons why your MYRIAD and CPU results differ.
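The precisions a device reports can also be queried from the runtime itself. The sketch below assumes the OpenVINO 2022.1 Python API and its `OPTIMIZATION_CAPABILITIES` device property; `supports_precision`, `device_capabilities`, and `report` are hypothetical helper names, and the exact strings returned depend on the installed plugins.

```python
from typing import Iterable, List


def supports_precision(capabilities: Iterable[str], precision: str) -> bool:
    """Return True if `precision` (e.g. "FP16") appears in a capability list."""
    wanted = precision.strip().upper()
    return any(str(cap).strip().upper() == wanted for cap in capabilities)


def device_capabilities(device: str) -> List[str]:
    """Ask the OpenVINO runtime which optimisation capabilities a device reports."""
    from openvino.runtime import Core  # lazy import: requires OpenVINO

    return list(Core().get_property(device, "OPTIMIZATION_CAPABILITIES"))


def report(devices: Iterable[str] = ("CPU", "MYRIAD")) -> None:
    """Print each device's capabilities and whether FP16 is among them."""
    for device in devices:
        caps = device_capabilities(device)
        print(device, caps, "FP16:", supports_precision(caps, "FP16"))
```

Calling `report()` on a machine with the stick attached prints one line per device, which can be checked against the published compatibility table.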

Sincerely,

Iffa


OK, I did not realise that the Intel Neural Compute Stick 2 requires FP16. When I convert using --data_type FP16 I get the same incorrect results on CPU and on MYRIAD, which makes sense.

So my next question is: what can I do to convert my model to FP16 and still get correct predictions?

Do I need to retrain the TensorFlow model using mixed precision?

Thanks,

Giampaolo


It is recommended to use a consistent precision during training and inference, including before and after the use of OpenVINO.

Sincerely,

Iffa


Hi,

Intel will no longer monitor this thread since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Sincerely,

Iffa
