Hi there,

I downloaded Intel's pre-trained UNet model (unet-camvid-onnx-0001) from the OpenVINO Open Model Zoo GitHub repo and used it without any modifications.

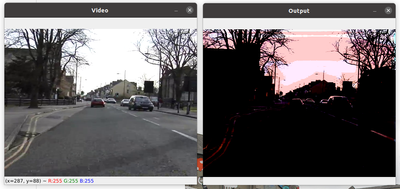

But it does not seem to work. Please have a look at the prediction on the right side of the image.

I expected to see a segmentation overlay with a different color for each class, but it just shows a darker image.

Here is my code. Please let me know if you find anything wrong in it; otherwise, the model may need to be updated:

```python
import cv2
import numpy as np
from openvino.inference_engine import IECore


class ColorMap:
    SKY = [28, 51, 71]
    BUILDING = [28, 28, 28]
    POLE = [60, 60, 60]
    ROAD = [50, 25, 50]
    PAVEMENT = [95, 14, 91]
    FENCE = [74, 60, 60]
    VEHICLE = [0, 0, 56]
    PEDESTRIAN = [86, 8, 23]
    BIKE = [47, 5, 13]
    UNLABELED = [17, 18, 21]
    TREE = [40, 40, 61]
    SIGNSYMBOL = [86, 86, 0]

    COLORS = []
    COLORS_BGR = []
    COLOR_MAP = {}

    # The order of colors in this array matters, as it maps to the prediction classes
    COLORS.append(SKY)
    COLORS.append(BUILDING)
    COLORS.append(POLE)
    COLORS.append(ROAD)
    COLORS.append(PAVEMENT)
    COLORS.append(TREE)
    COLORS.append(SIGNSYMBOL)
    COLORS.append(FENCE)
    COLORS.append(VEHICLE)
    COLORS.append(PEDESTRIAN)
    COLORS.append(BIKE)
    COLORS.append(UNLABELED)

    for color in COLORS:
        np_color = np.array(color)
        COLORS_BGR.append(np_color[[2, 1, 0]])


def crop_to_square(frame):
    height = frame.shape[0]
    width = frame.shape[1]
    delta = int((width - height) / 2)
    return frame[0:height, delta:width - delta]


model_xml = 'unet-camvid-onnx-0001.xml'
model_bin = 'unet-camvid-onnx-0001.bin'
shape = (480, 368)  # (width, height), the order cv2.resize expects

ie = IECore()
print("Available devices:", ie.available_devices)
net = ie.read_network(model=model_xml, weights=model_bin)
input_blob = next(iter(net.input_info))

# Use device_name='MYRIAD' to run on a MYRIAD (VPU) device instead of the CPU
# exec_net = ie.load_network(network=net, device_name='MYRIAD')
exec_net = ie.load_network(network=net, device_name='CPU')

# Read frames from a video file
cap = cv2.VideoCapture('/media/winstonfan/Workspace/Learning/Github/depthai/videos/CamVid.mp4')

while cap.isOpened():
    ret, raw_image = cap.read()
    if not ret:
        break  # end of video (or read error); `continue` would loop forever

    image = crop_to_square(raw_image)
    image = cv2.resize(image, shape)
    cv2.imshow('Video ', image)

    image = image.astype(np.float32)
    image = np.expand_dims(image, axis=0)
    image = image.transpose((0, 3, 1, 2))  # NHWC -> NCHW
    image = image / 127.5 - 1.0

    # Run inference on the loaded device
    output = exec_net.infer(inputs={input_blob: image})
    output = np.squeeze(output['206'])
    data = np.argmax(output, axis=0)

    if data.shape != (368, 480):
        raise ValueError('unexpected shape of data from decode() method in handler.py')

    class_colors = np.asarray(ColorMap.COLORS, dtype=np.uint8)
    output_colors = np.take(class_colors, data, axis=0)
    max_value = output_colors.max()
    output_colors = (output_colors / (max_value / 2.0) - 1.0).astype(np.float32)

    sqz_img = np.moveaxis(np.squeeze(image), 0, 2)
    overlayed = cv2.addWeighted(sqz_img, 1, output_colors, 0.2, 0)
    cv2.imshow('Output', overlayed)

    if cv2.waitKey(1) == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```

Thanks,

Winston

Hi Winston,

Thanks for reaching out.

We get the same output as you. I will investigate this issue with our developers and update you soon.

Meanwhile, note that the output of unet-camvid-onnx-0001 is, for each input pixel, a probability for each of the 12 classes of the CamVid dataset. You can find the RGB value of each class in this directory:

`INSTALL_DIR\deployment_tools\open_model_zoo\data\palettes`
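Turning that output into a color mask comes down to an argmax over the class axis followed by a palette lookup. Below is a minimal NumPy sketch, assuming the output has shape (1, 12, H, W), as the code in the first post implies, and that the palette is an array with one RGB row per class. `probabilities_to_color_mask` is a hypothetical helper name, and the random palette is a placeholder, not the contents of the palettes directory.

```python
import numpy as np


def probabilities_to_color_mask(probs, palette):
    """Convert per-class scores into an RGB color mask.

    probs:   (1, C, H, W) or (C, H, W) array, one score per class per pixel.
    palette: (C, 3) array, row i is the RGB color of class i.
    """
    scores = np.squeeze(probs, axis=0) if probs.ndim == 4 else probs
    class_map = np.argmax(scores, axis=0)          # (H, W) class indices
    palette = np.asarray(palette, dtype=np.uint8)
    return palette[class_map]                      # (H, W, 3) uint8 colors


# Placeholder palette: 12 arbitrary colors, NOT the real CamVid palette
rng = np.random.default_rng(0)
palette = rng.integers(0, 256, size=(12, 3), dtype=np.uint8)

probs = rng.random((1, 12, 368, 480), dtype=np.float32)
mask = probabilities_to_color_mask(probs, palette)
print(mask.shape, mask.dtype)  # (368, 480, 3) uint8
```

Indexing the palette with the class map is equivalent to the `np.take(class_colors, data, axis=0)` call in the original code.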

Regards,

Aznie

Thank you for the update.

I look forward to hearing back from you.

I am actually looking for a fast segmentation model that runs on a Raspberry Pi 4 (4GB).

After some digging, I found that DeepLabV3Plus-MobileNetV2 or DeepLabV3Plus-MobileNetV3 (timm-mobilenetv3_small_075) could be a better fit.

Is it possible for Intel to provide one of these models?

Since they target portable devices, I think they would be very popular.

Best regards,

Winston

Hi Winston,

OpenVINO supports deeplabv3, but not the Plus version of the model. Currently, we cannot provide any model that has not yet been validated with the OpenVINO toolkit.

Regards,

Aznie

Thanks for the update.

Do you have a model built with DeepLabV3 (not Plus) as the head and MobileNetV3_Small_x75 as the backbone?

Or an SFNet + MobileNetV3_Small_x75 model?

I need one of these two models to run on my edge device, just for road segmentation.

Could you please share links to these two models if they exist?

Many thanks,

Winston

Hi Winston,

Thank you for your patience. I have tested the unet-camvid-onnx-0001 model with our Open Model Zoo segmentation demo, and the result is not as expected. I will escalate this to our development team for further clarification; this might take some time. I will share the latest updates once they are available.

I also tried running the segmentation demo with other supported models (icnet-camvid-ava-0001, icnet-camvid-ava-sparse-30-0001, icnet-camvid-ava-sparse-60-0001), and the results are slightly better than with the UNet model. I suggest trying these models in your custom code to see whether the results meet your requirements; you may need to adapt the code.

Regarding the other models, we do not have a DeepLabV3 + MobileNetV3_Small_x75 (backbone) model or an SFNet + MobileNetV3_Small_x75 model. I suggest using the Open Model Zoo models road-segmentation-adas-0001 and semantic-segmentation-adas-0001, which have been validated and tested for road segmentation.

Regards,

Aznie

Thanks @IntelSupport for the update and confirmation.

For my use case, I only need one segmentation class, road, and the input size should be small, e.g. 224x224.

I would like to train it myself, since I don't think I'd be able to find any model like this.

Could you please provide a tutorial on how to train a segmentation model with PyTorch?

Hello, @franva.

If you are interested in road segmentation, I suggest you use the following models:

- road-segmentation-adas-0001 (classifies each pixel into four classes: BG, road, curb, mark)

- semantic-segmentation-adas-0001

- deeplabv3

Of the available models, these are the lightest and fastest.

I would also recommend using segmentation_demo from Open Model Zoo as a reference, because it implements the correct pre- and postprocessing:

- Preprocessing: only resize the image to the model's input shape. Cropping is not needed, and normalization is done automatically inside the model during inference.

- Postprocessing: build a color map from the output and blend it with the original (input) image. Your postprocessing is fine, but the alpha and beta values in cv2.addWeighted are unusual; they should sum to 1 (alpha + beta = 1).

So your code would look like this:

```python
import cv2
import numpy as np
from openvino.inference_engine import IECore


class ColorMap:
    SKY = (0, 0, 0)
    BUILDING = (128, 0, 0)
    POLE = (0, 128, 0)
    ROAD = (128, 128, 0)
    PAVEMENT = (0, 0, 128)
    FENCE = (128, 0, 128)
    VEHICLE = (0, 128, 128)
    PEDESTRIAN = (128, 128, 128)
    BIKE = (64, 0, 0)
    UNLABELED = (192, 0, 0)
    TREE = (64, 128, 0)
    SIGNSYMBOL = (192, 128, 0)

    COLORS = []
    COLORS_BGR = []
    COLOR_MAP = {}

    # The order of colors in this array matters, as it maps to the prediction classes
    COLORS.append(SKY)
    COLORS.append(BUILDING)
    COLORS.append(POLE)
    COLORS.append(ROAD)
    COLORS.append(PAVEMENT)
    COLORS.append(TREE)
    COLORS.append(SIGNSYMBOL)
    COLORS.append(FENCE)
    COLORS.append(VEHICLE)
    COLORS.append(PEDESTRIAN)
    COLORS.append(BIKE)
    COLORS.append(UNLABELED)

    for color in COLORS:
        np_color = np.array(color)
        COLORS_BGR.append(np_color[[2, 1, 0]])


def crop_to_square(frame):
    # Kept from the original code; not used below, since cropping is not needed
    height = frame.shape[0]
    width = frame.shape[1]
    delta = int((width - height) / 2)
    return frame[0:height, delta:width - delta]


model_xml = '<seg_model>.xml'
model_bin = '<seg_model>.bin'

ie = IECore()
print("Available devices:", ie.available_devices)
net = ie.read_network(model=model_xml, weights=model_bin)
input_blob = next(iter(net.input_info))
output_blob = next(iter(net.outputs))

# Read the input size from the model (NCHW) instead of hard-coding it
_, _, input_h, input_w = net.input_info[input_blob].input_data.shape
shape = (input_w, input_h)  # cv2.resize expects (width, height)

# Use device_name='MYRIAD' to run on a MYRIAD (VPU) device instead of the CPU
# exec_net = ie.load_network(network=net, device_name='MYRIAD')
exec_net = ie.load_network(network=net, device_name='CPU')

# Read frames from a video file
cap = cv2.VideoCapture('video.mp4')

while cap.isOpened():
    ret, raw_image = cap.read()
    if not ret:
        break  # end of video (or read error)

    # Preprocessing: resize only; no crop, no normalization
    resized = cv2.resize(raw_image, shape)
    cv2.imshow('Video ', resized)
    image = np.expand_dims(resized, axis=0).transpose((0, 3, 1, 2))  # NHWC -> NCHW

    output = exec_net.infer(inputs={input_blob: image})
    output = np.squeeze(output[output_blob])
    # Per-class scores (C, H, W) need an argmax; models that already
    # output class indices (H, W) do not
    if len(output.shape) > 2:
        output = np.argmax(output, axis=0)

    # The palette must have at least as many colors as the model has classes
    class_colors = np.asarray(ColorMap.COLORS, dtype=np.uint8)
    output_colors = np.take(class_colors, output.astype(np.int64), axis=0)

    # Postprocessing: blend with weights that sum to 1
    overlayed = cv2.addWeighted(resized, 0.5, output_colors, 0.5, 0)
    cv2.imshow('Output', overlayed)

    if cv2.waitKey(1) == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```
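The two pre/postprocessing points can be checked in isolation. This is a minimal NumPy sketch with hypothetical helper names: `to_nchw` only reorders HWC to NCHW (no normalization, assuming the model normalizes internally), and `blend` approximates `cv2.addWeighted` with weights alpha and 1 - alpha by rounding and clipping the weighted sum to [0, 255]. For comparison, the first post scaled the image to float32 in [-1, 1] before blending; `cv2.imshow` displays float images by mapping [0, 1] to [0, 255], so negative values show as black, which is consistent with the darker output.

```python
import numpy as np


def to_nchw(image_hwc):
    """HWC image -> 1xCxHxW batch; values are left untouched (no normalization)."""
    return np.expand_dims(image_hwc, axis=0).transpose((0, 3, 1, 2))


def blend(image, color_mask, alpha=0.5):
    """Overlay a color mask on a uint8 image with weights alpha and 1 - alpha.

    Approximates cv2.addWeighted(image, alpha, color_mask, 1 - alpha, 0).
    """
    beta = 1.0 - alpha  # alpha + beta = 1 keeps the result in the input range
    mixed = alpha * image.astype(np.float32) + beta * color_mask.astype(np.float32)
    return np.clip(np.rint(mixed), 0, 255).astype(np.uint8)


frame = np.full((4, 4, 3), 200, dtype=np.uint8)
mask = np.full((4, 4, 3), 100, dtype=np.uint8)
print(to_nchw(frame).shape)      # (1, 3, 4, 4)
print(blend(frame, mask)[0, 0])  # [150 150 150]
```

With alpha + beta = 1, the overlay is a convex mix of the two images, so it can never be darker than the darker of the two inputs.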

Hi @Aleksei, thanks for sharing.

Yep, I have tried it and it works.

But I'm on a resource-limited edge device (OAK-D), so I'd prefer to retrain the model myself with a smaller input size, e.g. 224x224, and only 2 classes: road and everything else.

Is it possible?

Thanks.
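Whatever training framework is used, a two-class road/background model at 224x224 needs its labels converted accordingly. Below is a minimal NumPy sketch, assuming the labels are (H, W) class-index maps in the 12-class CamVid order used in the code above (ROAD is index 3). Color label images would first need mapping to indices. `to_road_mask`, `resize_nearest`, and `ROAD_INDEX` are hypothetical names. Label maps are resized with nearest-neighbor sampling, because bilinear interpolation would produce values that are not valid classes.

```python
import numpy as np

ROAD_INDEX = 3  # assumption: index of ROAD in the 12-class order used in this thread


def to_road_mask(label_map, road_index=ROAD_INDEX):
    """(H, W) class-index map -> (H, W) uint8 mask: 1 = road, 0 = everything else."""
    return (label_map == road_index).astype(np.uint8)


def resize_nearest(label_map, out_h, out_w):
    """Nearest-neighbor resize that only ever copies existing label values."""
    in_h, in_w = label_map.shape
    rows = (np.arange(out_h) * in_h) // out_h
    cols = (np.arange(out_w) * in_w) // out_w
    return label_map[rows[:, None], cols[None, :]]


# Synthetic 360x480 label map: building in the middle band, road at the bottom
labels = np.zeros((360, 480), dtype=np.int64)
labels[100:200, :] = 1
labels[200:, :] = ROAD_INDEX
target = resize_nearest(to_road_mask(labels), 224, 224)
print(target.shape, np.unique(target))  # (224, 224) [0 1]
```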

Hi Winston,

OpenVINO Training Extensions includes trainable models that show how to train a segmentation model. You could start from its instance segmentation models.

Regards,

Aznie

Hi Winston,

This thread will no longer be monitored since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
