
Hi, thank you for providing these useful tools. I'm currently working on INT8 quantization with both NNCF and POT. The POT model runs faster than the FP32 model, which makes sense. However, the NNCF model is slower not only than the POT model but also than the original FP32 model. I have followed the suggestions in the NNCF GitHub repository. The benchmark tool results are as follows:

Original FP32:

[Step 1/11] Parsing and validating input arguments

[Step 2/11] Loading Inference Engine

[ INFO ] InferenceEngine:

API version............. 2.1.2020.4.0-359-21e092122f4-releases/2020/4

[ INFO ] Device info

CPU

MKLDNNPlugin............ version 2.1

Build................... 2020.4.0-359-21e092122f4-releases/2020/4

[Step 3/11] Setting device configuration

[Step 4/11] Reading the Intermediate Representation network

[ INFO ] Read network took 57.23 ms

[Step 5/11] Resizing network to match image sizes and given batch

[ INFO ] Network batch size: 1

[Step 6/11] Configuring input of the model

[Step 7/11] Loading the model to the device

[ INFO ] Load network took 294.24 ms

[Step 8/11] Setting optimal runtime parameters

[Step 9/11] Creating infer requests and filling input blobs with images

[ INFO ] Network input 'input0' precision U8, dimensions (NCHW): 1 3 640 640

/opt/intel/openvino_2020.4.287/python/python3.6/openvino/tools/benchmark/utils/inputs_filling.py:71: DeprecationWarning: The 'warn' method is deprecated, use 'warning' instead

logger.warn("No input files were given: all inputs will be filled with random values!")

[ WARNING ] No input files were given: all inputs will be filled with random values!

[ INFO ] Infer Request 0 filling

[ INFO ] Fill input 'input0' with random values (image is expected)

[Step 10/11] Measuring performance (Start inference asyncronously, 1 inference requests using 1 streams for CPU, limits: 60000 ms duration)

[Step 11/11] Dumping statistics report

Count: 1842 iterations

Duration: 60044.23 ms

Latency: 32.15 ms

Throughput: 30.68 FPS

========================================================================

POT INT8:

[Step 1/11] Parsing and validating input arguments

[Step 2/11] Loading Inference Engine

[ INFO ] InferenceEngine:

API version............. 2.1.2020.4.0-359-21e092122f4-releases/2020/4

[ INFO ] Device info

CPU

MKLDNNPlugin............ version 2.1

Build................... 2020.4.0-359-21e092122f4-releases/2020/4

[Step 3/11] Setting device configuration

[Step 4/11] Reading the Intermediate Representation network

[ INFO ] Read network took 86.67 ms

[Step 5/11] Resizing network to match image sizes and given batch

[ INFO ] Network batch size: 1

[Step 6/11] Configuring input of the model

[Step 7/11] Loading the model to the device

[ INFO ] Load network took 411.73 ms

[Step 8/11] Setting optimal runtime parameters

[Step 9/11] Creating infer requests and filling input blobs with images

[ INFO ] Network input 'input0' precision U8, dimensions (NCHW): 1 3 640 640

/opt/intel/openvino_2020.4.287/python/python3.6/openvino/tools/benchmark/utils/inputs_filling.py:71: DeprecationWarning: The 'warn' method is deprecated, use 'warning' instead

logger.warn("No input files were given: all inputs will be filled with random values!")

[ WARNING ] No input files were given: all inputs will be filled with random values!

[ INFO ] Infer Request 0 filling

[ INFO ] Fill input 'input0' with random values (image is expected)

[Step 10/11] Measuring performance (Start inference asyncronously, 1 inference requests using 1 streams for CPU, limits: 60000 ms duration)

[Step 11/11] Dumping statistics report

Count: 3245 iterations

Duration: 60032.85 ms

Latency: 18.25 ms

Throughput: 54.05 FPS

===========================================================================

NNCF INT8:

[Step 1/11] Parsing and validating input arguments

[Step 2/11] Loading Inference Engine

[ INFO ] InferenceEngine:

API version............. 2.1.2020.4.0-359-21e092122f4-releases/2020/4

[ INFO ] Device info

CPU

MKLDNNPlugin............ version 2.1

Build................... 2020.4.0-359-21e092122f4-releases/2020/4

[Step 3/11] Setting device configuration

[Step 4/11] Reading the Intermediate Representation network

[ INFO ] Read network took 114.43 ms

[Step 5/11] Resizing network to match image sizes and given batch

[ INFO ] Network batch size: 1

[Step 6/11] Configuring input of the model

[Step 7/11] Loading the model to the device

[ INFO ] Load network took 599.62 ms

[Step 8/11] Setting optimal runtime parameters

[Step 9/11] Creating infer requests and filling input blobs with images

[ INFO ] Network input 'result.1' precision U8, dimensions (NCHW): 1 3 640 640

/opt/intel/openvino_2020.4.287/python/python3.6/openvino/tools/benchmark/utils/inputs_filling.py:71: DeprecationWarning: The 'warn' method is deprecated, use 'warning' instead

logger.warn("No input files were given: all inputs will be filled with random values!")

[ WARNING ] No input files were given: all inputs will be filled with random values!

[ INFO ] Infer Request 0 filling

[ INFO ] Fill input 'result.1' with random values (image is expected)

[Step 10/11] Measuring performance (Start inference asyncronously, 1 inference requests using 1 streams for CPU, limits: 60000 ms duration)

[Step 11/11] Dumping statistics report

Count: 1291 iterations

Duration: 60082.78 ms

Latency: 46.00 ms

Throughput: 21.49 FPS

===========================================================================

These results were obtained on an Intel(R) Xeon(R) Gold 5218 CPU @ 2.30GHz.
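To put the three reports side by side, the short sketch below takes the numbers printed by the benchmark tool above and computes each model's throughput relative to FP32. It also cross-checks that, in these particular reports, the printed throughput matches the iteration count divided by the run duration. The dictionary layout and the helper names (`speedup_vs_fp32`, `measured_fps`) are illustrative only; the figures are copied verbatim from the logs.

```python
# Figures copied from the benchmark reports above
# (batch 1, 1 infer request, 1 stream, 60 s run on CPU).
results = {
    "FP32":      {"count": 1842, "duration_ms": 60044.23, "latency_ms": 32.15, "throughput_fps": 30.68},
    "POT INT8":  {"count": 3245, "duration_ms": 60032.85, "latency_ms": 18.25, "throughput_fps": 54.05},
    "NNCF INT8": {"count": 1291, "duration_ms": 60082.78, "latency_ms": 46.00, "throughput_fps": 21.49},
}


def speedup_vs_fp32(name: str) -> float:
    """Reported throughput of `name` divided by FP32 throughput (>1.0 means faster)."""
    return results[name]["throughput_fps"] / results["FP32"]["throughput_fps"]


def measured_fps(name: str) -> float:
    """Iterations divided by run duration in seconds, to cross-check the reported FPS."""
    r = results[name]
    return r["count"] / (r["duration_ms"] / 1000.0)


for name, r in results.items():
    print(
        f"{name:10s} latency {r['latency_ms']:6.2f} ms  "
        f"throughput {r['throughput_fps']:6.2f} FPS  "
        f"(count/duration {measured_fps(name):6.2f})  "
        f"vs FP32 {speedup_vs_fp32(name):.2f}x"
    )
```

With these numbers the POT model delivers roughly 1.76x the FP32 throughput, while the NNCF model delivers only about 0.70x, so the NNCF INT8 model is about 30% slower than FP32 rather than faster.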

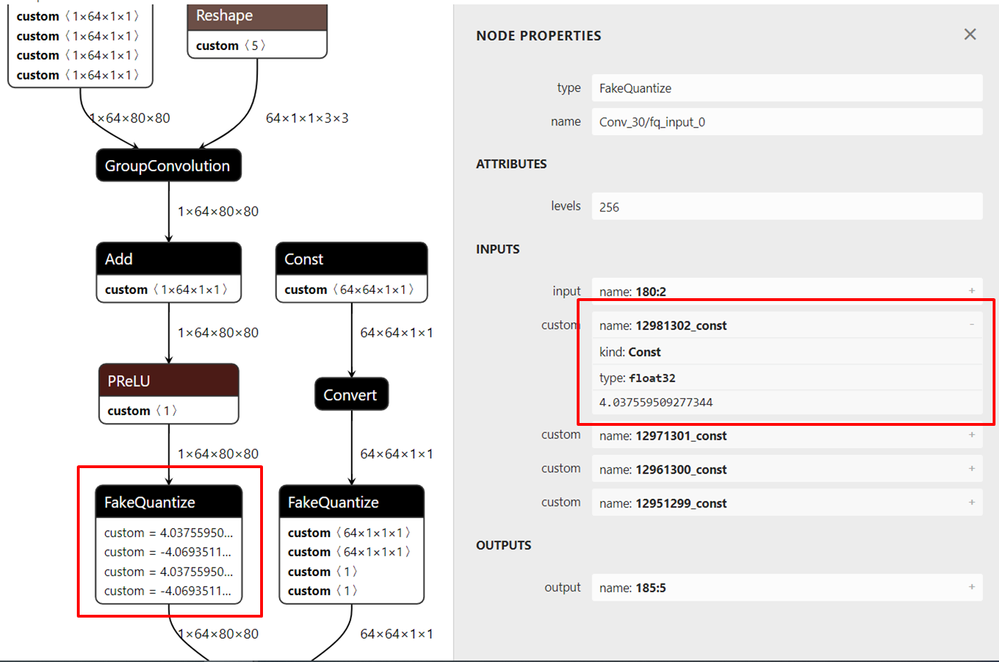

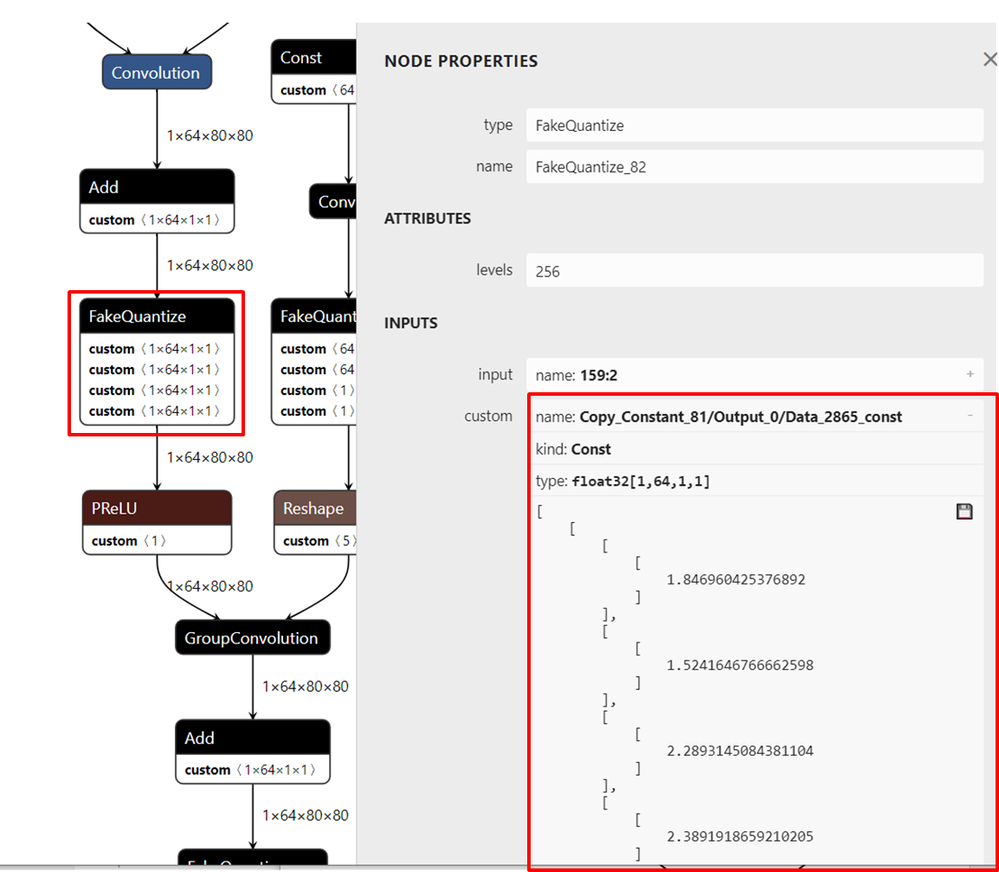

We found a difference between the NNCF and POT IR models: the FakeQuantize layer and the activation function appear in opposite order, which results in more parameters in the FakeQuantize layers of the NNCF model. The Netron visualizations are shown below:

POT INT8:

NNCF INT8:
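One way to confirm the ordering difference across the whole network, rather than one layer at a time in Netron, is to count which layer types feed each FakeQuantize. The sketch below is a minimal illustration under stated assumptions: it assumes the IR `.xml` lists `<layer id=... type=...>` elements and `<edge from-layer=... to-layer=... to-port=...>` elements, and that port 0 of a FakeQuantize is its data input (ports 1 to 4 carrying the range constants). `fq_producer_types` and the toy `SAMPLE` network are hypothetical, and layers nested inside sub-graph bodies are not handled.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def fq_producer_types(ir_xml: str) -> Counter:
    """Count the types of layers feeding the data input of each FakeQuantize.

    Assumption: port 0 of a FakeQuantize is the data input; the other input
    ports carry the quantization range constants and are ignored here.
    """
    root = ET.fromstring(ir_xml)
    # Map layer id -> layer type (e.g. "Convolution", "ReLU", "FakeQuantize").
    layer_type = {layer.get("id"): layer.get("type") for layer in root.iter("layer")}
    counts = Counter()
    for edge in root.iter("edge"):
        if layer_type.get(edge.get("to-layer")) == "FakeQuantize" and edge.get("to-port") == "0":
            counts[layer_type.get(edge.get("from-layer"))] += 1
    return counts


# Hypothetical toy IR: Convolution -> ReLU -> FakeQuantize (activation quantized after ReLU).
SAMPLE = """<net name="toy" version="10"><layers>
<layer id="0" name="conv" type="Convolution"/>
<layer id="1" name="relu" type="ReLU"/>
<layer id="2" name="fq" type="FakeQuantize"/>
<layer id="3" name="low" type="Const"/>
</layers><edges>
<edge from-layer="0" from-port="2" to-layer="1" to-port="0"/>
<edge from-layer="1" from-port="1" to-layer="2" to-port="0"/>
<edge from-layer="3" from-port="1" to-layer="2" to-port="1"/>
</edges></net>"""

print(fq_producer_types(SAMPLE))  # → Counter({'ReLU': 1})
```

Running the same function on the text of each real IR file (for example `fq_producer_types(open("model.xml").read())`, with a hypothetical file name) would show whether the FakeQuantize layers mostly follow the activations in one model and the convolutions in the other.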


Hi there Summer,

It seems that the Neural Network Compression Framework (NNCF) is not working as intended in your case.

Both quantization tools you used should improve inference efficiency.

We may need to look into your case further.

Are you using a model from the validated models list, or a custom model?

If possible, could you share your NNCF configuration, your POT configuration, and the IR files for this model?

Regards,

Rizal


Hi Summer,

It has been a while.

Intel will no longer monitor this thread since we have provided a solution. If you need any additional information from Intel, please submit a new question.
