
Hello, I asked a related question before:

https://community.intel.com/t5/Intel-Distribution-of-OpenVINO/Some-questions-about-OpenVINO-Post-Training-Optimization-Tool/m-p/1275708#M23585

I am very happy that the problem above was solved, but I have since run into a new one.

My goal is to quantize a YOLOv4 FP32/FP16 model that takes a single-channel (grayscale) input with a rectangular input size (height x width: 320 x 544).

When I quantized the YOLOv4 FP32/FP16 model with 3 channels and a 416x416 input, the result was normal and the mAP was calculated correctly.

Terminal:

```sh
pot -c yolov4-tiny-3l-license_plate_prune_0.46_keep_0.01_re416x416_ptz.json --output-dir backup -e
```

Output:

```
INFO:app.run:map : 1.0
INFO:app.run:AP@0.5 : 1.0
INFO:app.run:AP@0.5:0.05:95 : 0.8820987695866987
```

But when I quantized the YOLOv4 FP32/FP16 model with 1 channel and a 320x544 input, the quantization itself succeeded, but the mAP was abnormal. It should be 0.99 or 1.0 (I'm fairly sure).

Terminal:

```sh
pot -c yolov4-tiny-3l-gray-license_plate_prune_0.46_keep_0.01_320x544_qtz.json --output-dir backup -e
```

Output:

```
INFO:app.run:map : 0.47562541279744447
INFO:app.run:AP@0.5 : 0.0
INFO:app.run:AP@0.5:0.05:95 : 0.0
```

The same happens even with a 3-channel 320x544 model:

Terminal:

```sh
pot -c yolov4-tiny-3l-license_plate_prune_0.46_keep_0.01_320x544_qtz.json --output-dir backup -e
```

Output:

```
INFO:app.run:map : 0.5875964602840454
INFO:app.run:AP@0.5 : 0.0
INFO:app.run:AP@0.5:0.05:95 : 0.0
```

If the mAP of the INT8-quantized model is wrong, I cannot reliably use certain quantization features, such as deciding how far to quantize based on mAP.
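As a rough illustration of why an incorrect mAP undermines accuracy-driven decisions, the sketch below shows a simple accuracy gate that accepts an INT8 model only if its mAP stays within a maximum drop of a reference value. The function names, the 0.01 threshold and the absolute-drop rule are assumptions for illustration only; this is not how POT implements it. The reference value 1.0 is the mAP the poster expects, and 0.47562541279744447 is the value reported above for the grayscale 320x544 model.

```python
def accuracy_drop(reference_map: float, quantized_map: float) -> float:
    """Absolute mAP drop of the quantized model relative to the reference (hypothetical)."""
    return reference_map - quantized_map


def is_acceptable(reference_map: float, quantized_map: float, max_drop: float = 0.01) -> bool:
    """Hypothetical gate: accept the quantized model if its drop is within max_drop."""
    return accuracy_drop(reference_map, quantized_map) <= max_drop


# 1.0 is the expected mAP; the second argument is a quantized-model mAP.
print(is_acceptable(1.0, 0.995))                # True
print(is_acceptable(1.0, 0.47562541279744447))  # False
```

If the metric itself is unreliable for rectangular inputs, a gate like this rejects or accepts models for the wrong reason, which is the concern raised above.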

Maybe my quantization JSON file is wrong; please check it for me. Based on my tests, I suspect a "resize" problem.

When I resize to 416x416, the result is normal:

```json
"datasets": [
    {
        "name": "license_plate",
        "preprocessing": [
            {
                "type": "resize",
                "dst_width": 416,
                "dst_height": 416
            },
            {
                "type": "bgr_to_rgb"
            }
        ],
```

But when I resize to 320x544, the result is abnormal:

```json
"datasets": [
    {
        "name": "license_plate",
        "preprocessing": [
            {
                "type": "resize",
                "dst_width": 544,
                "dst_height": 320
            },
            {
                "type": "bgr_to_rgb"
            }
        ],
```
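The two configs differ only in the resize target. When moving from square to rectangular input, one thing worth checking is that width and height are not swapped somewhere in the pipeline: the config lists `dst_width` (544) before `dst_height` (320), image arrays are usually indexed as (height, width, channels), and OpenCV's `cv2.resize` takes its size argument as (width, height). The minimal pure-Python sketch below derives the expected array shape from the config values and flags a transposed shape. The helper names are hypothetical and not part of OpenVINO or the Accuracy Checker.

```python
import json

# Preprocessing steps copied from the 320x544 config above; the full POT
# config contains more fields, which are omitted here.
PREPROCESSING_JSON = """
[
  {"type": "resize", "dst_width": 544, "dst_height": 320},
  {"type": "bgr_to_rgb"}
]
"""


def expected_hwc_shape(preprocessing, channels):
    """Hypothetical helper: (height, width, channels) shape after the resize step.

    Image arrays are usually laid out as H x W x C, so dst_height comes
    first here even though the config lists dst_width first.
    """
    for step in preprocessing:
        if step["type"] == "resize":
            return (step["dst_height"], step["dst_width"], channels)
    raise ValueError("no resize step in preprocessing")


def diagnose_shape(actual_shape, expected_shape):
    """Hypothetical helper: compare an observed array shape with the expected one."""
    actual = tuple(actual_shape)
    if actual == tuple(expected_shape):
        return "ok"
    height, width, channels = expected_shape
    if actual == (width, height, channels):
        return "width and height are swapped"
    return "unexpected shape"


steps = json.loads(PREPROCESSING_JSON)
expected = expected_hwc_shape(steps, channels=1)  # single-channel (grayscale) model
print(expected)                                   # (320, 544, 1)
print(diagnose_shape((320, 544, 1), expected))    # ok
print(diagnose_shape((544, 320, 1), expected))    # width and height are swapped
```

With a square size such as 416x416, a swapped pair produces the same shape, so a bug of this kind would go unnoticed there. That makes it one possible, unconfirmed, reason why only rectangular sizes misbehave.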

My files are attached. Please help, thank you.

---

Hello Peng Chang-Jan,

Greetings to you.

We will look into this issue and get back to you soon.

Regards,

Zulkifli

---

Hi Intel,

Thank you for your assistance. If you find any solution, please let me know. Thank you!

---

Hello Peng Chang-Jan,

Sorry for the delay.

We ran the accuracy check on your models and indeed did not obtain the expected mAP for the 544x320 shape. We also took your previous models, changed the input size, and obtained similar results.

Based on our findings, this result is expected given the definition of mAP itself, i.e. the rule used to compare a model's inference results with reference values. Mean average precision (mAP) is calculated by first summing the average precisions of all classes and then dividing the sum by the number of classes [Configure Accuracy Settings - OpenVINO™ Toolkit (openvinotoolkit.org)].
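The definition quoted above can be written out directly. This minimal sketch averages per-class AP values; the function name, class names and AP numbers are illustrative and are not results from this thread. With a single class (for example, a detector that only finds license plates), mAP is simply that class's AP.

```python
from typing import Dict


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """mAP: sum of the per-class average precisions divided by the number of classes."""
    if not ap_per_class:
        raise ValueError("at least one class is required")
    return sum(ap_per_class.values()) / len(ap_per_class)


print(mean_average_precision({"license_plate": 1.0}))            # 1.0
print(mean_average_precision({"class_a": 1.0, "class_b": 0.5}))  # 0.75
```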

Another reason you did not obtain an mAP of 1.00 for your quantized model with a different input_shape is that our OpenVINO models were tested and validated with YOLOv3 at 416x416 and YOLOv4 at 608x608, which are the default network sizes in the common template configuration files used across the industry [https://www.ccoderun.ca/programming/2020-09-25_Darknet_FAQ/#square_network].

Therefore, using a size other than the validated ones may cause the mAP to be less than 1.0, which is normal because it is the mean of the average precision values.

Also, your .json file is correct, as we were able to run it on our end; it is just that the mAP result is not what you expected.

We hope this helps.

Sincerely,

Zulkifli

---

I am glad to receive your reply. I understand that this kind of bug is normal.

Because Intel OpenVINO is so heavily packaged, it is difficult for ordinary users to modify the source code themselves.

May I ask when Intel OpenVINO will provide correct quantized mAP for rectangular inputs (for example, 320x544)?

Although the industry standard is square, such as 416x416 or 608x608, I have also experimented with other square sizes (320x320 and 288x288), and their mAP output is normal.

But as soon as the input is resized to a rectangle, problems appear. This is clearly a detail Intel OpenVINO has not handled yet, and I hope future versions will fix it.

We need rectangular input because all of our training data is 1080x1920 (9:16).

In our experiments, rectangular input gives better results than square input (for our solution).

If it is possible for me to modify the source code myself, please tell me how. Thank you very much!!!

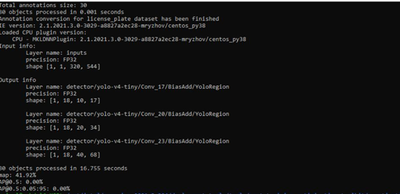

By the way, I have run into another problem: quantizing ssd-mobilenet-v2 does not give the correct mAP. The log is below; please see the attached link for details.

```sh
pot -c ssd_mobilenet_v2_license_plate_new_raw_pos_300x300_0525_qtz.json --output-dir backup -e
```

```
INFO:compression.algorithms.quantization.default.algorithm:Computing statistics finished
INFO:compression.algorithms.quantization.default.algorithm:Start computing statistics for algorithms : MinMaxQuantization,FastBiasCorrection
INFO:compression.algorithms.quantization.default.algorithm:Computing statistics finished
INFO:compression.pipeline.pipeline:Finished: DefaultQuantization
===========================================================================
INFO:compression.pipeline.pipeline:Evaluation of generated model
INFO:compression.engines.ac_engine:Start inference on the whole dataset
Total dataset size: 30
30 objects processed in 0.488 seconds
INFO:compression.engines.ac_engine:Inference finished
INFO:app.run:map : 0.0
INFO:app.run:AP@0.5 : 0.0
INFO:app.run:AP@0.5:0.05:95 : 0.0
```

Please help me test this and show me how to solve it. Maybe my .json is wrong. Thank you for your careful help!!!

---

Hello Peng Chang-Jan,

Firstly, thank you for sharing the resizing issue. There is also a GitHub thread discussing it; we hope it helps.

Secondly, for the ssd_mobilenet_v2 model, we would appreciate it if you could open a new thread for this issue. That will help us serve you better.

Regards,

Zulkifli

---

Hello Peng Chang-Jan,

This thread will no longer be monitored since we have provided a solution.

Regards,

Zulkifli
