Hi everyone,

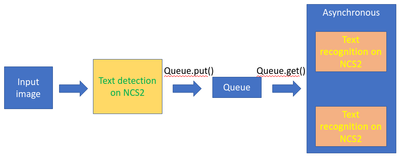

I am working on a project that detects text boxes in an image and then prints the text in those boxes. For this task I use two models: text detection (pixel link) and text recognition (text-recognition-0012). Because inference for the recognition task takes too long, I run it on two NCS2s. The image above describes the program's pipeline.

As shown in the image above, the text detection and text recognition models are loaded on three NCS2s. The program then processes input images number 0, 1, 2, and so on. Each input image is passed through the text detection model, and the results are put in a queue. The data is then taken from the queue with Python's queue.get() method and fed to the two recognition NCS2s, which run asynchronously. When the queue is empty, the program ends.
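A minimal sketch of this detection, queue and two-worker flow in plain Python, so it runs without hardware. `detect_boxes` and `recognize` are hypothetical stand-ins for the pixel link and text-recognition-0012 models (no OpenVINO calls), and each worker thread plays the role of one NCS2. Tagging every queue item with its (image, box) index and calling `Queue.join()`, which blocks until `task_done()` has been called for every queued item, before moving to the next image means a result can only land in the image it belongs to.

```python
import queue
import threading


def detect_boxes(image):
    # Hypothetical stand-in for the text detection model:
    # here an "image" is already a list of text crops.
    return list(image)


def recognize(crop):
    # Hypothetical stand-in for the text recognition model on one NCS2.
    return crop.upper()


def worker(jobs, results, lock):
    # One worker per NCS2: take a crop, recognize it, store it by index.
    while True:
        item = jobs.get()
        if item is None:  # sentinel: shut down
            jobs.task_done()
            return
        image_id, box_id, crop = item
        text = recognize(crop)
        with lock:
            results[image_id][box_id] = text
        jobs.task_done()


def run_pipeline(images, num_workers=2):
    jobs = queue.Queue()
    results = {i: {} for i in range(len(images))}
    lock = threading.Lock()
    threads = [threading.Thread(target=worker, args=(jobs, results, lock))
               for _ in range(num_workers)]
    for t in threads:
        t.start()
    try:
        for image_id, image in enumerate(images):
            boxes = detect_boxes(image)
            for box_id, crop in enumerate(boxes):
                jobs.put((image_id, box_id, crop))
            jobs.join()  # every box of this image is recognized before moving on
            # Both model outputs must have the same length.
            if len(results[image_id]) != len(boxes):
                raise RuntimeError(f"image {image_id}: {len(boxes)} boxes but "
                                   f"{len(results[image_id])} strings")
    finally:
        for _ in threads:
            jobs.put(None)  # one sentinel per worker
        for t in threads:
            t.join()
    # Strings in box order, one list per image.
    return [[results[i][b] for b in sorted(results[i])] for i in range(len(images))]
```

For example, `run_pipeline([["a", "b", "c"], ["d", "e"]])` returns `[["A", "B", "C"], ["D", "E"]]`.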

Image number 0: number of text detection boxes: 96; number of text recognition strings: 94. (The two model outputs should have the same length.)

This is the string output of image number 0:

['XALibrary(Level4)', 'PB', 'Errod', 'Production', 'i', 'Nu', 'SL60328P.N_V6', 'Wires', '559', 'Aligns', '3', 'Unit', '1', '1', 'R', 'c', '1', 'LFINE', '10', '9', 'OUTS', 'Program', 'AUTO', 'Management', 'MHS(WH)', 'Teach', 'DFM', 'CHirOff', 'CH2:Off', 'Edit', 'Bond', 'Program', 'PRE', 'BND', 'POST', 'ZTC', 'HAC', 'MTM', '1600', 'OFE', 'OfF', '360', '228.9', '748', 'PROGRAM', 'Bond', 'Assistant', 'Setup', 'Null', 'Chess', 'LaT', 'Null', 'Bond', 'Base', 'Parameters', 'Parameters', 'Loop', 'CONFIGURE', 'Assistant', 'Kit', 'Control', 'Loop', 'Copper', 'MAINTENANCE', 'Setup', 'UTILITY', 'XY', '1x', 'tl', 'Mojo', 'SYSTEM', 'Safelyremovelise', 'Masss', 'Device', 'I', 'p6asZ', 'Storage', 'PM', 'F14564', '18940.72um', 'XXI', 'ZI', '7210', 'Arrow', '2lumyt', '50um', 'Key', 'XI', 'Chess', 'Record', 'IControl', '2:44', 'Record', 'EN']

Image number 1: number of text detection boxes: 90; number of text recognition strings: 90.

This is the output of image number 1:

['26Nov20', 'JPM', 'XALibrary(Level4)', 'PB', 'Nu', 'Production', 'i', 'Errod', 'Wires', 'SL60328P.N_V6', '559', 'Aligns', '3', 'Unit', 'I', 'R', 'I', '1', '1', '9', 'OUTS', 'LFINE', 'Auto', 'Bond', '7', 'AUTO', 'Configuration', 'Bonder', 'Capillary', 'Usage', 'DFM', 'CH2:Off', 'CHirOff', 'Bonder', 'PRE', 'Wire', 'BND', 'Usage', 'POST', 'HAC', 'ZTC', 'OFE', '1600', '360', 'MTM', 'ZAZ', 'OfF', 'PROGRAM', 'Management', 'Nulls', '26', 'Chess', 'LaT', 'Statistics', 'Null', 'MHS(WH)', 'Configuration', 'Parameters', 'Sub', 'Selection', 'System', 'CONFIGURE', 'Factory', 'Auto', 'Automation', 'Wire', 'MAINTENANCE', 'Rethread', 'UTILITY', '1x', 'XY', 'SYSTEM', 'Z', 'MOzOs', 'Mojo', 'AMI', 'F164723Bumy', 'XI', 'ZI', '29810.99um', '7210.54um', 'Key', 'AoArow', 'XI', 'Chess', 'Record', 'IControl', 'Record', '2:44', 'JPM']

The recognition output has 90 strings only because two strings, ['26Nov20', 'JPM'], were added to it; these strings actually belong to the results of image number 0. Image number 1's own result is still missing two strings, and those are likewise added to the next image's result.

I use the following function to recognize the text in an image:

    # out_blob, string_list and decodeText are defined elsewhere in the program (not shown)
    def predict_async(self, image):
        input_blob = next(iter(self._net.input_info))
        # log.info("Start Async")
        self._exec_net.start_async(request_id=self.next_request_id, inputs={input_blob: image})
        # Wait for the inference task to finish
        if self._exec_net.requests[self.current_request_id].wait(-1) == 0:
            res = self._exec_net.requests[self.current_request_id].outputs[out_blob]
            # Append the decoded text to the result list
            string_list.append(decodeText(res))

This function runs on two threads to speed up the text recognition task.
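One explanation consistent with the counts above: the function starts `next_request_id` but reads `current_request_id`. Assuming, as in the OpenVINO demos this pattern usually comes from, that the two ids are swapped after every call (the swap is not shown in the post), each call returns the result of the *previous* crop. The first call of each thread then reads a request that was never started, and the last crop of an image is only read on the first call for the next image. With two threads that would give two missing strings (96 vs 94) and two carried-over strings. The sketch below reproduces this with hypothetical `FakeExecNet`/`FakeRequest` stand-ins that mimic only `start_async`, `requests[i].wait()` and `outputs`; they are not the OpenVINO classes.

```python
class FakeRequest:
    """Hypothetical stand-in for one asynchronous inference request."""

    def __init__(self):
        self.outputs = None
        self.started = False

    def wait(self, timeout=-1):
        # 0 = result available; -11 = never started (assumed for this stand-in).
        return 0 if self.started else -11


class FakeExecNet:
    """Hypothetical stand-in whose 'inference' upper-cases the input string."""

    def __init__(self, num_requests=2):
        self.requests = [FakeRequest() for _ in range(num_requests)]

    def start_async(self, request_id, inputs):
        req = self.requests[request_id]
        req.outputs = {"out": inputs["in"].upper()}
        req.started = True


class PipelinedRecognizer:
    """Same shape as predict_async above: start NEXT, read CURRENT, swap."""

    def __init__(self, exec_net):
        self.exec_net = exec_net
        self.current_request_id, self.next_request_id = 0, 1
        self.string_list = []

    def predict_async(self, image):
        self.exec_net.start_async(request_id=self.next_request_id, inputs={"in": image})
        req = self.exec_net.requests[self.current_request_id]
        if req.wait(-1) == 0:
            # This is the output of the PREVIOUS call, not of `image`.
            self.string_list.append(req.outputs["out"])
        self.current_request_id, self.next_request_id = (
            self.next_request_id, self.current_request_id)


class BlockingRecognizer:
    """Start and wait on the SAME request, so each call yields its own result."""

    def __init__(self, exec_net, request_id=0):
        self.exec_net = exec_net
        self.request_id = request_id
        self.string_list = []

    def predict(self, image):
        self.exec_net.start_async(request_id=self.request_id, inputs={"in": image})
        req = self.exec_net.requests[self.request_id]
        if req.wait(-1) == 0:
            self.string_list.append(req.outputs["out"])


if __name__ == "__main__":
    lagging = PipelinedRecognizer(FakeExecNet())
    for crop in ["a", "b", "c"]:      # image 0
        lagging.predict_async(crop)
    print(lagging.string_list)        # ['A', 'B']  ("C" is still pending)
    lagging.string_list = []
    for crop in ["d", "e"]:           # image 1
        lagging.predict_async(crop)
    print(lagging.string_list)        # ['C', 'D']  ("C" leaked in)

    blocking = BlockingRecognizer(FakeExecNet())
    for crop in ["a", "b", "c"]:
        blocking.predict(crop)
    print(blocking.string_list)       # ['A', 'B', 'C']
```

Waiting on the same request gives up the overlap inside one thread, but with one thread per NCS2 the two sticks still run in parallel.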

I can work around the two missing strings by adding two empty elements to the queue before making predictions, but the two strings are still added to the next result, and I have not fixed that yet. When I instead reload the text recognition model on the two NCS2s for every image (load -> predict -> load -> predict -> and so on), the bug disappears completely. It seems that results are still held in exec_net even after the prediction for an image is done.

How can I reset exec_net after the work on an image is done? Or, how can I fix the bug where two strings are added to the next result when using two NCS2s?
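Instead of resetting `exec_net`, one option (a sketch, not a documented OpenVINO feature) is to keep the start-next/read-current overlap but drain the single outstanding request after the last crop of each image. The `pending` flag, `flush()` and `recognize_image()` are hypothetical additions, and the fake classes again stand in for the real executable network.

```python
class FakeRequest:
    """Hypothetical stand-in for one asynchronous inference request."""

    def __init__(self):
        self.outputs = None

    def wait(self, timeout=-1):
        return 0  # the stand-in finishes instantly


class FakeExecNet:
    """Hypothetical stand-in whose 'inference' upper-cases the input string."""

    def __init__(self, num_requests=2):
        self.requests = [FakeRequest() for _ in range(num_requests)]

    def start_async(self, request_id, inputs):
        self.requests[request_id].outputs = {"out": inputs["in"].upper()}


class DrainingRecognizer:
    def __init__(self, exec_net):
        self.exec_net = exec_net
        self.current_request_id, self.next_request_id = 0, 1
        self.pending = False  # does current_request_id hold an unread result?
        self.string_list = []

    def _collect(self, request_id):
        req = self.exec_net.requests[request_id]
        if req.wait(-1) == 0:
            self.string_list.append(req.outputs["out"])

    def predict_async(self, image):
        # Start the new crop, then read the previous crop's request (if any);
        # on real hardware the two would overlap.
        self.exec_net.start_async(request_id=self.next_request_id, inputs={"in": image})
        if self.pending:
            self._collect(self.current_request_id)
        self.current_request_id, self.next_request_id = (
            self.next_request_id, self.current_request_id)
        self.pending = True

    def flush(self):
        # Call once after the last crop of an image: read the outstanding
        # request so nothing is left over for the next image.
        if self.pending:
            self._collect(self.current_request_id)
            self.pending = False

    def recognize_image(self, crops):
        self.string_list = []
        for crop in crops:
            self.predict_async(crop)
        self.flush()
        return list(self.string_list)
```

With one such object per NCS2 thread, each thread would call `flush()` after its last crop, so the number of strings per image matches the number of boxes.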

Greetings,

I recommend trying the Multi-Device plugin.

This is the full documentation of the Multi-Device plugin: https://docs.openvinotoolkit.org/latest/openvino_docs_IE_DG_supported_plugins_MULTI.html

And this video shows how to use it correctly, including the things you need to check: https://www.youtube.com/watch?v=xbORYFEmrqU
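As a small illustration of the device string the Multi-Device documentation describes (`MULTI:` followed by a comma-separated device list), here is a helper that builds it from the NCS2 entries in a list of device names. The helper names are hypothetical. With the 2021-era OpenVINO Python API the list would typically come from `IECore().available_devices` and the string would be passed as `device_name` to `load_network`; that part stays in comments so the sketch runs without OpenVINO installed.

```python
def myriad_devices(available):
    """Return the MYRIAD (NCS2) entries from a list of device names."""
    return [name for name in available if name.startswith("MYRIAD")]


def multi_device_name(devices):
    """Build a Multi-Device string such as 'MULTI:MYRIAD.1.3-ma2480,MYRIAD.1.2-ma2480'."""
    devices = [name.strip() for name in devices if name.strip()]
    if not devices:
        raise ValueError("MULTI needs at least one device")
    return "MULTI:" + ",".join(devices)


# Possible use with the 2021-era OpenVINO Python API (not executed here;
# the model paths and num_requests=4 are arbitrary example values):
#   from openvino.inference_engine import IECore
#   ie = IECore()
#   net = ie.read_network(model="model.xml", weights="model.bin")
#   name = multi_device_name(myriad_devices(ie.available_devices))
#   exec_net = ie.load_network(network=net, device_name=name, num_requests=4)
```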

Sincerely,

Iffa

Hi Iffa,

Do you have any sample code for Multi-Device with asynchronous inference in Python?

Thanks.

Hi Iffa,

I tried the Multi-Device mode, loading the model with the command below and running inference asynchronously.

    exec_net = plugin.load_network(network=net, device_name="MULTI:MYRIAD.1.3-ma2480,MYRIAD.1.2-ma2480", num_requests=num_requests)

The code for inference is:

    # active_slots, started_images, completed_images, job_queue, bboxes,
    # input_blob_tr and decodeText are set up earlier (not shown)
    for slot in active_slots:
        exec_net_tr.start_async(request_id=slot, inputs={input_blob_tr: job_queue.get()})

    while completed_images < len(bboxes):
        # Iterate over a copy, because finished slots are removed from active_slots
        for slot in list(active_slots):
            status = exec_net_tr.requests[slot].wait(timeout=0)
            if status == 0:
                # print("Start Async")
                res = exec_net_tr.requests[slot].outputs['Transpose_190'].copy()
                if started_images < len(bboxes):
                    exec_net_tr.start_async(request_id=slot,
                                            inputs={input_blob_tr: job_queue.get()})
                    started_images += 1
                else:
                    active_slots.remove(slot)
                # preprocessResult(res)
                string_list.append(decodeText(res))
                completed_images += 1
            elif status != -9:  # -9: result not ready yet
                raise Exception("One of the inference errored out")
        if job_queue.empty():
            break  # Ensure that we have at least one image in queue

Although two NCS2s are used, the inference time of my task is 4.2 s, the same as when I use only one NCS2. However, if I load the network like this:

    exec_net = plugin.load_network(network=net, device_name="MULTI:MYRIAD.1.3-ma2480,CPU", num_requests=num_requests)

Then the inference time is only 1.8 s.
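Independently of which devices are combined, a polling loop of this kind has two easy-to-miss failure modes: removing entries from a list while iterating over that same list skips the next entry, and leaving the loop as soon as the input queue is empty abandons requests that are still running. Below is a sketch of a loop that avoids both by tracking in-flight requests in a dict and stopping only when that dict is empty; results are stored by job index because requests can finish out of order. `FakeExecNet`/`FakeRequest` and `run_all` are hypothetical, and -9 is used for "not ready yet" as in the loop in this post.

```python
class FakeRequest:
    """Hypothetical stand-in: finishes after a given number of polls."""

    def __init__(self):
        self.outputs = None
        self._polls_left = None  # None = never started

    def start(self, data, polls):
        self.outputs = {"out": data.upper()}
        self._polls_left = polls

    def wait(self, timeout=0):
        if self._polls_left is None:
            return -11  # never started (assumed code for this stand-in)
        if self._polls_left > 0:
            self._polls_left -= 1
            return -9   # not ready yet
        return 0


class FakeExecNet:
    """Hypothetical stand-in with varying latency, so requests finish out of order."""

    def __init__(self, num_requests):
        self.requests = [FakeRequest() for _ in range(num_requests)]
        self._started = 0

    def start_async(self, request_id, inputs):
        self._started += 1
        self.requests[request_id].start(inputs["in"], polls=self._started % 3)


def run_all(exec_net, jobs):
    """Run every input in `jobs` and return the outputs in input order."""
    results = [None] * len(jobs)
    in_flight = {}  # request id -> job index
    next_job = 0

    def launch(slot):
        nonlocal next_job
        exec_net.start_async(request_id=slot, inputs={"in": jobs[next_job]})
        in_flight[slot] = next_job
        next_job += 1

    # Fill each request slot once (fewer if there are fewer jobs).
    for slot in range(min(len(exec_net.requests), len(jobs))):
        launch(slot)

    # Keep polling until every started request has been collected.
    while in_flight:
        for slot in list(in_flight):  # copy: in_flight changes inside the loop
            status = exec_net.requests[slot].wait(timeout=0)
            if status == -9:
                continue              # still running
            if status != 0:
                raise RuntimeError(f"request {slot} failed with status {status}")
            results[in_flight.pop(slot)] = exec_net.requests[slot].outputs["out"]
            if next_job < len(jobs):  # reuse the free slot
                launch(slot)
    return results
```

With real devices, polling with `wait(timeout=0)` in a tight loop keeps a CPU core busy, so a small positive timeout is gentler. Whether two MYRIAD devices under MULTI beat one also depends on keeping enough requests in flight; the Multi-Device documentation describes querying the `OPTIMAL_NUMBER_OF_INFER_REQUESTS` metric for this.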

So, does the Multi-Device mode only work with different device types?

Thanks.

Greetings,

The Multi-Device plugin is actually meant for different devices; however, it can also be used the way you tried. See this similar case: https://stackoverflow.com/questions/64345884/multiple-ncs2-devices-work-on-an-inference

In addition, here is more information on using multiple NCS2s in parallel: https://community.intel.com/t5/Intel-Distribution-of-OpenVINO/How-to-use-Multi-Stick-NCS2-Parallelization-of-reasoning/td-p/1181316

Lower performance of the NCS2 compared with other devices is expected: https://docs.openvinotoolkit.org/latest/openvino_docs_performance_benchmarks.html

If you have already solved your problem, I'm glad to hear it.

Sincerely,

Iffa

Greetings,

Intel will no longer monitor this thread since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Sincerely,

Iffa
