I'm facing an issue while trying to run a model with dynamic batching enabled.

I prepared the model following this tutorial: https://docs.openvinotoolkit.org/latest/openvino_docs_IE_DG_DynamicBatching.html (I specified the maximum batch size with CNNNetwork::setBatchSize and passed the config to LoadNetwork). Inference on a batch of images whose size equals the maximum batch size works fine, but inference on a smaller batch fails with the following error: "Input blob size is not equal network input size (<blob size with small batch>!=<blob size with max batch>)".

For example, when running the model with batch size = 1 and max_batch = 2, I get "Input blob size is not equal network input size (65536!=131072)" (since the input size is 256x256). Here is the part of the code used for inference:

auto input_det = cv::dnn::blobFromImages(frames, scale, size, cv::Scalar(0), false, false);

auto imgBlob = wrapMat2Blob(input_det,size.height,size.width, static_cast<int>(frames.size()));

auto infer_request = net.CreateInferRequest();

infer_request.SetBlob("data", imgBlob);

infer_request.Infer();//batch size 2; works fine

std::vector<cv::Mat> new_frames = {frames[0]};

infer_request.SetBatch(new_frames.size());//setting new batch size

input_det = cv::dnn::blobFromImages(new_frames, scale, size, cv::Scalar(0), false, false);

imgBlob = wrapMat2Blob(input_det,size.height,size.width, static_cast<int>(new_frames.size()));

infer_request.SetBlob("data", imgBlob);

infer_request.Infer();//batch size 1, fails

Here frames is a std::vector<cv::Mat> of size 2. The first call of Infer() works fine, and the second one fails. Here is the code used for preparing the model:

network = ie.ReadNetwork(filename, filename.substr(0, filename.size() - 4) + WEIGHTS_EXT);

const std::map<std::string, std::string> dyn_config =

{ { InferenceEngine::PluginConfigParams::KEY_DYN_BATCH_ENABLED, InferenceEngine::PluginConfigParams::YES } };

network.setBatchSize(batch_limit);

input_info = network.getInputsInfo().begin()->second;

input_name = network.getInputsInfo().begin()->first;

input_info->setLayout(InferenceEngine::Layout::NCHW);

input_info->setPrecision(InferenceEngine::Precision::FP32);

auto output = network.getOutputsInfo();

auto cl_output = output_names[0];

auto cl_output_info = output[cl_output];

auto rg_output = output_names[1];

auto rg_output_info = output[rg_output];

rg_output_info->setPrecision(InferenceEngine::Precision::FP32);

cl_output_info->setPrecision(InferenceEngine::Precision::FP32);

auto net = ie.LoadNetwork(network, DEVICE, dyn_config);

return net;

Is there a mistake in my code that causes this issue? Is there a demo I could use to test models or to compare against my code? Thanks in advance for any help!

- Tags:

- dynamic_batch

Thanks for your patience.

You can refer to the Dynamic Batch Python* Demo included in 2019R1, which demonstrates how to set the batch size dynamically for specific infer requests.

Link for the demo code - dynamic_batch_demo.py

The demo was deprecated in 2019R2, but it still shows how dynamic batching is used. Unfortunately, we don't have a C++ version of the demo.

This demo is not optimized for the latest OpenVINO 2021.1; however, you can use the following snippets as a reference:

# Configure plugin to support dynamic batch size

ie.set_config(config={"DYN_BATCH_ENABLED": "YES"}, device_name=args.device)

infer_time = []

# Set max batch size

inputs_count = len(args.input)

if args.max_batch < inputs_count:

    log.warning("Defined max_batch size {} less than input images count {}."

                "\n\t\t\tInput images count will be used as max batch size".format(args.max_batch, inputs_count))

net.batch_size = max(args.max_batch, inputs_count)

# Create numpy array for the max_batch size images

n, c, h, w = net.input_info[input_blob].input_data.shape

images = np.zeros(shape=(n, c, h, w))

log.info("Network input shape: [{},{},{},{}]".format(n,c,h,w))

# Set batch size dynamically for the infer request and start sync inference

infer_time = []

exec_net.requests[0].set_batch(inputs_count)

log.info("Starting inference with dynamically defined batch {} for the 2nd infer request ({} iterations)".format(

    inputs_count, args.number_iter))

infer()

Looking at the code you provided, everything appears to be set up correctly for dynamic batching. The likely issue is that you change the batch size of the input blob itself, which is not necessary.

My suggestion for your case is based on https://docs.openvinotoolkit.org/latest/openvino_docs_IE_DG_Intro_to_Performance.html, which states: "running multiple independent inference requests in parallel often gives much better performance, than using a batch only.

This allows you to simplify the app-logic, as you don't need to combine multiple inputs into a batch to achieve good CPU performance. Instead, it is possible to keep a separate infer request per camera or another source of input and process the requests in parallel using Async API".

To summarize, changing the input blob's batch size is not necessary; dynamically setting the batch size for the infer request is enough.
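One way to read this advice, assuming the Inference Engine expects the input blob to keep the maximum batch dimension while SetBatch() / set_batch() only limits how many leading items are processed (an interpretation that is consistent with the "1 != 2 (expected by network)" error reported in this thread), is to always allocate the input for the maximum batch and fill only the first slots. Below is a minimal Python sketch of that packing step, using plain lists instead of real blobs. The names `pack_batch` and `frame_size` and the sample data are hypothetical and not part of any OpenVINO API.

```python
def pack_batch(frames, max_batch, frame_size):
    """Copy up to max_batch frames into a flat buffer sized for max_batch.

    Returns the buffer and the number of valid frames; unused slots stay zero.
    """
    if len(frames) > max_batch:
        raise ValueError("more frames than the maximum batch size")
    # The buffer always has room for max_batch frames, whatever len(frames) is.
    buffer = [0.0] * (max_batch * frame_size)
    for i, frame in enumerate(frames):
        if len(frame) != frame_size:
            raise ValueError("frame has unexpected size")
        buffer[i * frame_size:(i + 1) * frame_size] = frame
    return buffer, len(frames)


# Hypothetical data: one tiny 2x2 single-channel frame, maximum batch of 2.
frame_size = 4
buffer, valid = pack_batch([[1.0, 2.0, 3.0, 4.0]], max_batch=2, frame_size=frame_size)

print(len(buffer))  # 8
print(valid)        # 1
```

In an application, `buffer` would back the max-batch input blob and `valid` would be the value passed to SetBatch() (or set_batch() in Python) before calling Infer().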

Hope this information helps.

Thank you.

Hi Abramov_Alexey,

Thanks for reaching out. That error might arise from a mismatch with the input shape defined in your .xml file. You need to resize your input image and make sure the input blob has the same shape.

Check out this OpenVINO Sample Deep Dive - Hello Classification C++ to see how to load the inputs and prepare the output blobs.

The following page has more information regarding the blob in OpenVINO Toolkit.

https://docs.openvinotoolkit.org/latest/ie_c_api/group__Blob.html

Regards,

Aznie

Thanks for the response!

I've checked the dimensions of the input blobs after each call of wrapMat2Blob with:

auto dims = imgBlob->getTensorDesc().getDims();

std::cout << dims[0] << " " << dims[1] << " " << dims[2] << " " << dims[3] << std::endl;

and got [2,1,256,256] and [1,1,256,256] respectively. I've also checked the linked classification sample and added

input_info->getPreProcess().setResizeAlgorithm(InferenceEngine::RESIZE_BILINEAR);

to make sure that the input image is resized to the corresponding shape. However, now I get the following error: "Input blob batch size is invalid: (input blob) 1 != 2 (expected by network)", although I called infer_request.SetBatch(1) before the second inference.

Is there any way to check whether calling SetBatch() really changed the input shape of the network? I could not find a suitable method in InferenceEngine::InferRequest. Could this be caused by the older version of the Model Optimizer used for generating the IR (I used the one from OpenVINO 2020.2)?
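The dimensions printed above account for the two numbers in the original error message: each one is the element count of the corresponding blob. Below is a minimal Python sketch of that arithmetic. `element_count` is a hypothetical helper, not part of the Inference Engine API.

```python
from math import prod


def element_count(dims):
    """Number of elements in a dense tensor with the given dimensions."""
    return prod(dims)


# NCHW shapes reported in the post above.
small_batch = (1, 1, 256, 256)
max_batch = (2, 1, 256, 256)

print(element_count(small_batch))  # 65536
print(element_count(max_batch))    # 131072
```

The batch-1 blob therefore holds exactly half the elements of the blob the network was loaded to expect.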

Hi Abramov_Alexey,

Which model are you running? The error might be due to the older version of the OpenVINO Toolkit. Could you try updating to the latest version, 2021.2?

Meanwhile, the InferenceEngine::InferRequest class reference can be found here:

https://docs.openvinotoolkit.org/latest/classInferenceEngine_1_1InferRequest.html

Also, here is how to integrate the Inference Engine with your application.

Regards,

Aznie

Hi!

I'm running a custom detector with the latest version of OpenVINO (2021.2.185). The Inference Engine is already successfully integrated into the application and was used without any issues before I enabled dynamic batch support.

I've also converted the model into IR with the latest version of the Model Optimizer and faced the same issue. This is still confusing to me, since I only added the corresponding config option and infer_request.SetBatch(batchSize) from here:

https://docs.openvinotoolkit.org/latest/openvino_docs_IE_DG_DynamicBatching.html

Hi Abramov_Alexey,

Could you share your model and any related files with us so that we can have a look and test it on our side?

Regards,

Aznie

Hi!

I've attached an archive with the IR (.xml, .bin, and .mapping files). It also contains the original ONNX model, in case that is useful as well.

Hi Abramov_Alexey,

Thank you for providing your model so that we could debug the issue.

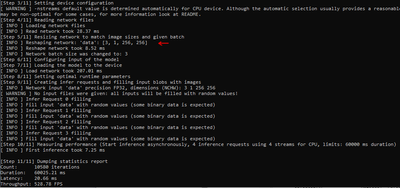

I tested your model with benchmark_app and specified the batch size as follows:

benchmark_app.exe -m detector.xml -b 3

As a result, the network batch size changes as specified, so there is no problem with your model.

Regarding the error in your code, you might want to refer to the benchmark_app sample code, which is available at <OpenVINO installation path>\openvino_2021.2.185\inference_engine\samples\cpp\benchmark_app\main.cpp

Refer to line 342 to see how the batch size is set.

Alternatively, you can share your full code with us for further debugging if you are still facing the problem.

Regards,

Hari Chand

Hi!

Thanks for testing my model! I've checked the source code of benchmark_app and noticed that the model inputs are reshaped before the model is loaded to the device. This is a bit different from my case, since I'm trying to change the input shape dynamically from the infer request after loading the model to the device, according to the tutorial here:

https://docs.openvinotoolkit.org/latest/openvino_docs_IE_DG_DynamicBatching.html

Running the model with any fixed batch size works fine in my project.

I've created a small sample that reproduces this issue and added a few comments to make it clearer. OpenCV 4.5.1 and OpenVINO 2021.2.185 were used for building it. The model is expected to be next to the executable file.

Thank you for your patience and for your code as well. We are still investigating the issue you're facing and will get back to you shortly.

Thanks

This thread will no longer be monitored since this issue has been resolved. If you need any additional information from Intel, please submit a new question.
