Hi all,

I have trained a YOLOv5 model to detect the digits on seven-segment displays, and I was able to convert it to Intel's IR format as well. I used the following steps for the conversion:

python.exe yolov5\export.py --weights yolov5s640x.pt --include onnx --data customdata.yaml

python.exe mo.py --input_model yolov5s640x.onnx --model_name seven-segment-yolov5 --reverse_input_channels -s 255 --output /model.24/m.0/Conv,/model.24/m.1/Conv,/model.24/m.2/Conv
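For anyone unfamiliar with the two preprocessing flags in the mo.py command: `--reverse_input_channels` swaps the channel order of the input and `-s 255` divides the input by 255, and both end up inside the IR. A minimal plain-Python sketch of the per-pixel effect follows; the function name is illustrative only and not part of any OpenVINO API.

```python
def ir_embedded_preprocess(pixel_bgr, scale=255.0):
    """Mimic what the converted IR does to a single pixel.

    Illustrative helper only: it models --reverse_input_channels and
    -s 255 for one (B, G, R) triple.
    """
    b, g, r = pixel_bgr
    # --reverse_input_channels: swap the channel order (BGR -> RGB)
    rgb = (r, g, b)
    # -s 255: divide every channel by the scale value
    return tuple(c / scale for c in rgb)


if __name__ == "__main__":
    print(ir_embedded_preprocess((255, 0, 51)))  # (0.2, 0.0, 1.0)
```

Since these steps already live in the IR, the application feeding the model presumably should not normalize the frames a second time.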

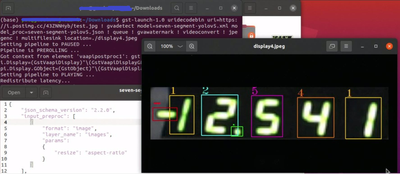

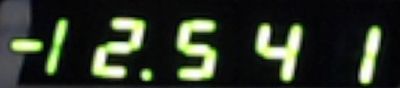

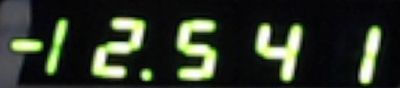

I ran the Python-based demo at https://github.com/violet17/yolov5_demo (using OpenVINO 2021.4) to test the IR model, and its outputs match those of the ONNX model. I am attaching the output (good) for reference.

However, when I ran the same IR model through DL Streamer, the detections were poor. I am attaching the output (bad) for reference.

I have also tested with the OpenVINO 2022 based DL Streamer, but the results are the same.

I have used the YOLOv5 + OpenVINO combination before and it all worked fine.

I suspect that either the YOLO region parsing has a bug or I am missing something. I am attaching the model-proc file I used.

Any help would be welcome. Thank you.

Warm Regards,

Karthick

Hi Karthick,

Support for YOLOv5 was added in the DL Streamer 2022.2 release:

https://github.com/dlstreamer/dlstreamer/releases/tag/2022.2-release

You can find a model-proc file now as part of the samples, here:

Please try changing the following parameters in your model-proc file:

"converter": "yolo_v5",

"do_cls_softmax": true,
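If it helps, these two changes can be applied to an existing model-proc file with a few lines of Python. This is only a sketch: it assumes the file has an "output_postproc" list whose first entry holds the converter settings, and the helper name and the sample dictionary are made up for illustration.

```python
import json


def use_yolo_v5_converter(model_proc: dict) -> dict:
    """Return a copy of a model-proc dict with the YOLOv5 settings applied.

    Assumes an "output_postproc" list whose first entry describes the
    detection converter; adjust if your file is organised differently.
    """
    patched = json.loads(json.dumps(model_proc))  # simple deep copy
    postproc = patched.setdefault("output_postproc", [])
    if not postproc:
        postproc.append({})
    postproc[0]["converter"] = "yolo_v5"
    postproc[0]["do_cls_softmax"] = True
    return patched


if __name__ == "__main__":
    # Hypothetical starting point, not the file attached in this thread.
    old = {"output_postproc": [{"converter": "yolo_v3", "classes": 10}]}
    print(json.dumps(use_yolo_v5_converter(old), indent=2))
```

The original dictionary is left untouched, so the old and new settings can be compared before writing anything back to disk.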

Regards,

Peh

Hi Peh, @Peh_Intel ,

Thank you for the response.

One thing I forgot to mention is that I had already tried the same model with the 2022 version, as you suggested, and the results were the same (the detection was terrible).

However, the Python code (the link I provided above) works without issue.

I see the problem only with this model. I tried an IR model converted from the COCO yolov5s weights and that works fine.

Warm Regards,

Karthick

Hi Karthick,

In that case, could you share your model (yolov5s640x.onnx) with us for further investigation?

Please also share how you run the IR model through DL Streamer so that we can reproduce the issue more easily.

Regards,

Peh

Hi @Peh_Intel ,

These are the commands I used to export from PyTorch to ONNX, convert to IR, and run the model through DL Streamer.

yolov5\export.py --weights yolov5s640x.pt --include onnx --data data.yaml --batch-size 1 --nms

mo.py --input_model yolov5s640x.onnx --model_name seven-segment-yolov5 --reverse_input_channels -s 255 --output /model.24/m.0/Conv,/model.24/m.1/Conv,/model.24/m.2/Conv

gst-launch-1.0 uridecodebin uri=file:/dsp/Dec-2022/TextDetection/inputMultimeter/n2.jpg ! queue ! gvadetect model=./seven-segment-yolov5.xml model-proc=./seven-segment-yolov5.json threshold=0.2 ! gvawatermark ! videoconvert ! jpegenc ! multifilesink location=./display4Orig1.jpeg

Please rename the files as below:

seven-segment-yolov5.txt ==> seven-segment-yolov5.json

yolov5s640x_Onnx.zip ==> yolov5s640x.onnx

yolov5s640x_pt.zip ==> yolov5s640x.pt

Warm Regards,

Karthick

Hi Karthick,

Thanks for sharing. Let me try to reproduce it on my side first.

In the meantime, could you also share the test image?

Regards,

Peh

Hi Karthick,

Yes, I was able to reproduce the issue and got the same bad inference result.

The result can be improved by resizing the image while preserving its aspect ratio. This is done by defining "input_preproc" in the model-proc file.

Add the following "input_preproc" section:

    "input_preproc": [
        {
            "format": "image",
            "layer_name": "images",
            "params": {
                "resize": "aspect-ratio"
            }
        }
    ],

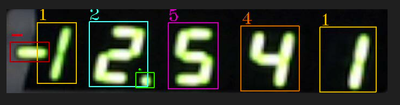

The inference result looks nice now.

I attach the model-proc file as well.
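To illustrate why this setting matters, here is a small sketch of the geometry behind an aspect-ratio-preserving (letterbox-style) resize compared with a plain stretch to the 640x640 network input. The function names and the 1920x1080 example size are assumptions for illustration; the actual size of the test image and the exact placement of the padding inside DL Streamer are not stated in this thread.

```python
def letterbox_geometry(src_w, src_h, dst_w=640, dst_h=640):
    """Scale and padding for an aspect-ratio-preserving resize.

    Returns (scale, new_w, new_h, pad_x, pad_y), where pad_x and pad_y
    are the total padding in pixels along each axis.
    """
    scale = min(dst_w / src_w, dst_h / src_h)
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    return scale, new_w, new_h, dst_w - new_w, dst_h - new_h


def stretch_factors(src_w, src_h, dst_w=640, dst_h=640):
    """Per-axis scale factors of a plain resize that ignores aspect ratio."""
    return dst_w / src_w, dst_h / src_h


if __name__ == "__main__":
    # Hypothetical 1920x1080 input image.
    scale, new_w, new_h, pad_x, pad_y = letterbox_geometry(1920, 1080)
    print(f"letterbox: {new_w}x{new_h}, padding {pad_x}x{pad_y}")
    sx, sy = stretch_factors(1920, 1080)
    print(f"stretch: x scaled by {sx:.2f}, y scaled by {sy:.2f}")
```

With a plain stretch the two axes are scaled by different factors, so the digits are distorted relative to the letterboxed images YOLOv5 normally sees, which is a plausible reason for the poor detections without "resize": "aspect-ratio".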

Regards,

Peh

Hi @Peh_Intel ,

Thank you very much. This has really helped us get the proper detection results.

The only difference I see is that the confidence of the detected objects is 2% lower than in the Python OpenVINO output, but I guess that is expected?

Thanks again for the quick responses.

My result:

Warm Regards,

Karthick

Hi Karthick,

I am glad that I was able to help.

Yes, a small difference is expected. By the way, you can adjust the threshold value to get the best results.

Regards,

Peh

Hi @Peh_Intel ,

Do you mean the input_preproc parameters? I will adjust those parameters and see if I can improve the performance.

Warm Regards,

Karthick

Hi Karthick,

I actually meant adjusting the threshold value for gvadetect, as I noticed that you set only threshold=0.2 in your previous gst-launch-1.0 command.
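As a rough illustration of what tuning the threshold does, the sketch below counts how many detections survive at different threshold values. The confidence scores are made up, and it assumes that detections at or above the threshold are kept.

```python
def count_detections(confidences, thresholds):
    """Map each threshold to the number of detections that survive it."""
    return {t: sum(c >= t for c in confidences) for t in thresholds}


if __name__ == "__main__":
    # Made-up confidence scores, purely for illustration.
    scores = [0.91, 0.88, 0.47, 0.31, 0.22]
    print(count_detections(scores, [0.2, 0.5, 0.8]))
    # {0.2: 5, 0.5: 2, 0.8: 2}
```

A low value such as 0.2 keeps weak detections too, while a higher value trades recall for fewer false positives.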

Regards,

Peh

Hi @Peh_Intel ,

I kept threshold=0.2 just to see if there was any detection. I have already fine-tuned the threshold. Thanks for all the help.

Warm Regards,

Karthick

Hi Karthick,

This thread will no longer be monitored since this issue has been resolved. If you need any additional information from Intel, please submit a new question.

Regards,

Peh
