
Hi,

I'm trying to use the heterogeneous plugin to test manual layer affinity, following this tutorial:

https://docs.openvinotoolkit.org/latest/openvino_docs_IE_DG_supported_plugins_HETERO.html

But when I try to assign a specific layer to a specific device, as in the following code, I run into two issues:

```cpp
res.supportedLayersMap["layerName"] = "CPU";

// set affinities to network
for (auto && layer : res.supportedLayersMap) {
    network.getLayerByName(layer.first.c_str())->affinity = layer.second;
}
```

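The first one is a deprecation warning when compiling this code:

```
INFERENCE_ENGINE_DEPRECATED("Migrate to IR v10 and work with ngraph::Function directly. The method will be removed in 2020.3")
```

It points to working with `ngraph::Function` directly, as in this sample:

https://github.com/openvinotoolkit/openvino/tree/master/inference-engine/samples/ngraph_function_creation_sample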

The second one is that after I modify the affinity of a specific layer and query the network again with

```cpp
InferenceEngine::QueryNetworkResult res1 = core.QueryNetwork(cnnNetwork, device, { });
```

the layer affinity has not changed.

So my questions are:

- When a network in IR v7 is loaded with `ReadNetwork`, is it read-only? Can I only modify layer affinities if I build the graph with the ngraph graph builder?
- Are there other samples that use manual affinity with the heterogeneous plugin?

Thanks in advance.


Hi @Max_L_Intel,

Your suggestion definitely works in my test.

I took the OpenVINO benchmark app sample and ran two tests:

1. I forced all layers onto the CPU in the heterogeneous plugin:

   ```cpp
   for (auto && layer : res.supportedLayersMap) {
       cnnNetwork.getLayerByName(layer.first.c_str())->affinity = "CPU";
   }
   ```

2. I forced all layers onto MYRIAD in the heterogeneous plugin:

   ```cpp
   for (auto && layer : res.supportedLayersMap) {
       cnnNetwork.getLayerByName(layer.first.c_str())->affinity = "MYRIAD";
   }
   ```

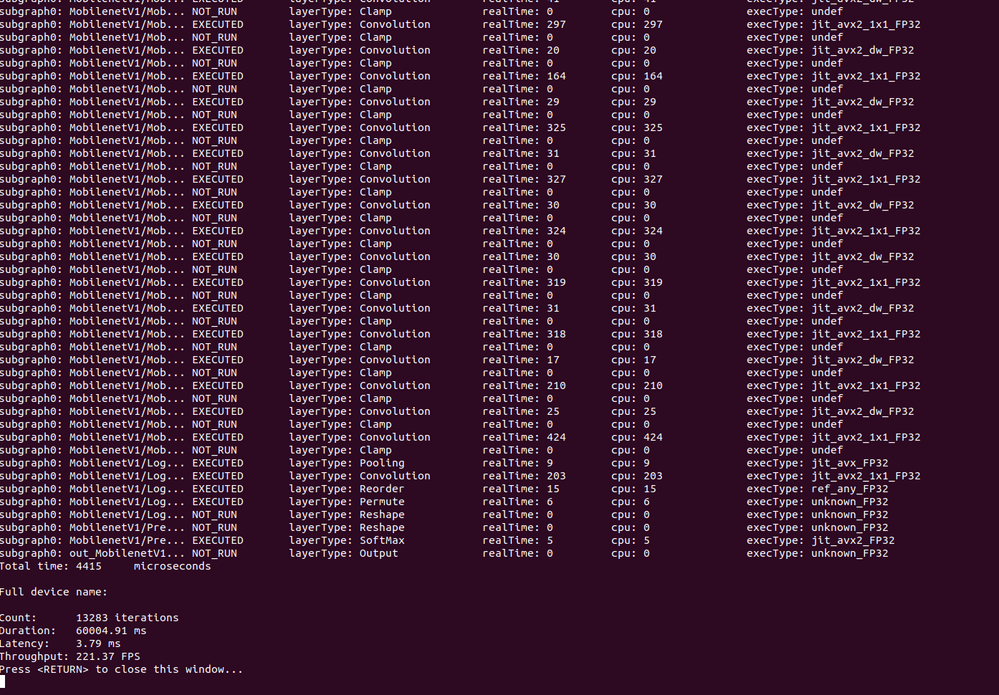

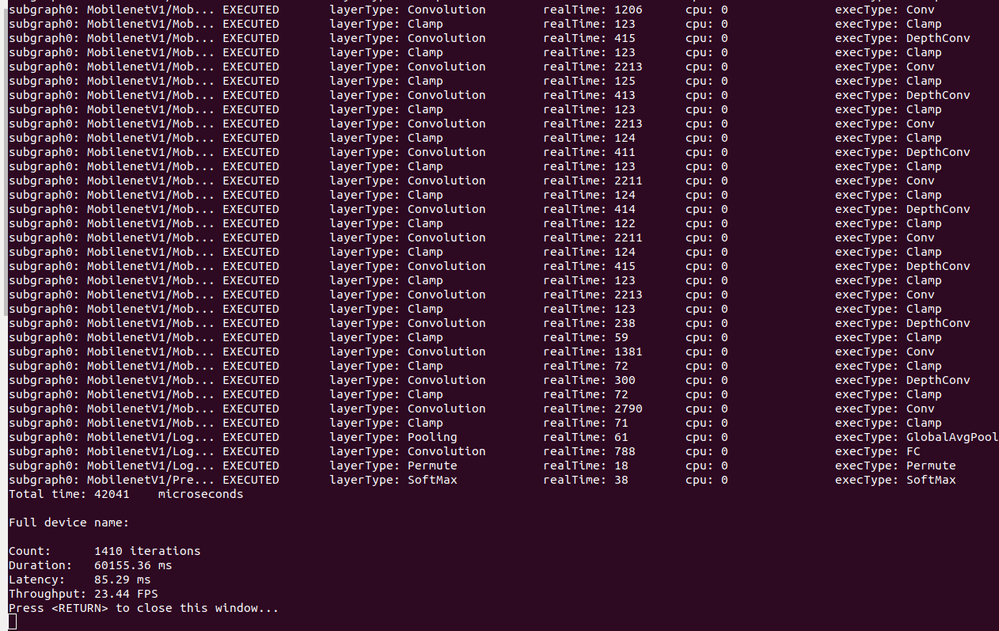

I also added the -pc flag to see the performance counts with this result:

in case of the CPU results i see that the peformance counts exec type is jit_avx2_1x1_FP32 style and in the myriad the output is more like the operation itself( such as Conv, CLamp, DpethConv).

The performance results indicate that this forcing is working:

CPU results:

latency: 3.79 ms

Throughput: 221.37FPS

MYRIAD RESULTS:

latency: 85.29ms

Throughput: 23.44 FPS.

below, the results in images.

CPU results:

MYRIAD RESULTS:

So I assume that this heterogeneus plugin is working fine.

¿Would you need any more details about test?

Thanks @Max_L_Intel, for your support.


Hi @uelor

We are still looking into your issue. Meanwhile, could you please let us know which OpenVINO version you are using?

Also, according to the Release Notes for the latest OpenVINO releases:

>IRv7 is deprecated and support for this version will be removed in the next major version (v.2021.1). It means IRv7 and older cannot be read using Core::ReadNetwork. Users are recommended to migrate to IRv10 to prevent compatibility issues in the next release.

Since manual affinity configuration is applied to the network obtained with core.ReadNetwork, this method might not work for an IRv7 model.

Have you tried manual affinity configuration with an IRv10 model?


Hi, my OpenVINO version is 2020.3, so I assume the default IR version is IRv10.

I tried my model with both IRv7 and IRv10, but the result is the same in both cases.

After manually assigning the affinity of a specific layer I get no error, but when I query the network again with the new affinities using the QueryNetwork function, the affinity of that layer has not changed.


Hi @uelor

Yes, by default in OpenVINO 2020.3 you should produce IRv10 models.

If manual affinity configuration doesn't work for IRv10, could you please attach reproducer code and all the related data files that would help us replicate this behavior on our end (model, sample code, all the files you modified)?

Thank you.


Thank you for the answer, @Max_L_Intel.

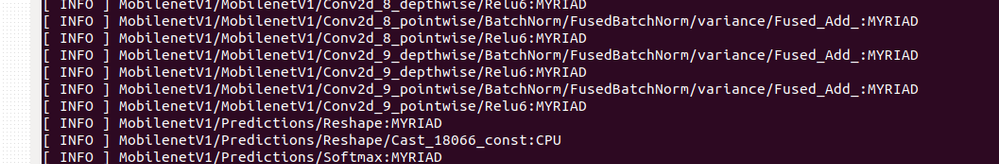

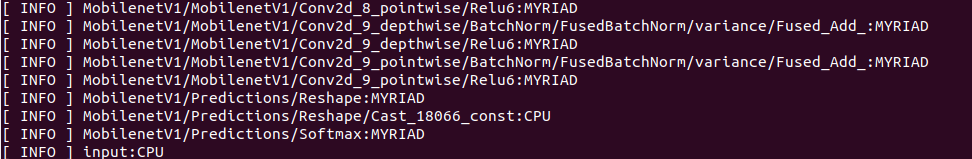

I have created a simple test with HETERO:MYRIAD,CPU.

The model is mobilenet_v1_224x224.xml, converted to IR v10 with FP16 precision using the Model Optimizer.

I tried to change the affinity of two layers:

```cpp
res.supportedLayersMap["MobilenetV1/MobilenetV1/Conv2d_9_pointwise/Relu6"] = "CPU";
res.supportedLayersMap["MobilenetV1/Predictions/Softmax"] = "CPU";
```

I have attached the model and the source code to reproduce the issue.

My biggest concern is that this line does not change the model structure:

```cpp
network.getLayerByName(layer.first.c_str())->affinity = layer.second.c_str();
```

```cpp
#include <inference_engine.hpp>
#include <samples/slog.hpp>

#include <map>
#include <string>

using namespace InferenceEngine;

int main(int argc, char* argv[]) {
    InferenceEngine::Core core;
    std::string model_path = "/home/VICOMTECH/uelordi/projects/smacs/heterogeneus_execution/models/mobilenet_v1_FP16.xml";
    auto network = core.ReadNetwork(model_path);

    // This example demonstrates how to perform default affinity initialization and then
    // correct affinity manually for some layers
    const std::string device = "HETERO:MYRIAD,CPU";

    // QueryNetworkResult object contains map layer -> device
    InferenceEngine::QueryNetworkResult res = core.QueryNetwork(network, device, { });
    for (const auto& entry : res.supportedLayersMap) {
        slog::info << entry.first << ':' << entry.second << slog::endl;
    }

    // Update default affinities: these layers were assigned to MYRIAD and should now go to CPU
    res.supportedLayersMap["MobilenetV1/MobilenetV1/Conv2d_9_pointwise/Relu6"] = "CPU";
    res.supportedLayersMap["MobilenetV1/Predictions/Softmax"] = "CPU";

    // Set affinities on the network
    for (auto&& layer : res.supportedLayersMap) {
        network.getLayerByName(layer.first.c_str())->affinity = layer.second;
    }

    // Query the network again to check the new affinities
    InferenceEngine::QueryNetworkResult res1 = core.QueryNetwork(network, device, { });
    for (const auto& entry : res1.supportedLayersMap) {
        slog::info << entry.first << ':' << entry.second << slog::endl;
    }

    // Load the network with the affinities set above
    auto executable_network = core.LoadNetwork(network, device);
    return 0;
}
```

When I print the new affinities, both lists are identical; the changes have had no effect.

The outputs of the first and second queries were attached as screenshots.

Thanks in advance.


Hi @uelor

Thanks for providing all the additional details and reproducer files. We are investigating this.


Hi @uelor

We have to point out that QueryNetwork does not take affinities into account. It only reports which layers are supported by which plugin. Manual affinities are used only by LoadNetwork, when the network is loaded with HETERO:MYRIAD,CPU.

In your example, QueryNetwork is called twice, but the second call is not needed, and its result does not reflect the user-set affinities.

Could you please try LoadNetwork and check whether the affinities are used?

We need to improve our documentation to state explicitly that affinities are used only in LoadNetwork.


Hi @uelor

We are glad that our suggestions helped you resolve this. Thank you for reporting back to the OpenVINO community!

We are also submitting the documentation change for QueryNetwork.


This thread will no longer be monitored since this issue has been resolved. If you need any additional information from Intel, please submit a new question.
