When I run 'omz_converter', the segmentation model, hrnet-v2-c1-segmentation.xml, is created.

But the translation model, cocosnet.xml, is not.

The documentation says:

-m_trn TRANSLATION_MODEL : required

-m_seg SEGMENTATION_MODEL : optional

Running the demo without the translation model produces the following error:

ipykernel_launcher.py: error: the following arguments are required: -m_trn/--translation_model, -o/--output_dir
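For context, this message is the standard argparse complaint when required options are missing. The sketch below is not the demo's actual parser. It is a minimal, hypothetical one that reuses only the option names quoted above (-m_trn, -m_seg, -o) to reproduce the behavior. The ipykernel_launcher.py prefix suggests the script was run inside a Jupyter kernel, since argparse takes the program name from sys.argv[0] by default.

```python
import argparse


def build_parser():
    # Hypothetical parser: only the option names come from the post above.
    parser = argparse.ArgumentParser(description="argparse sketch, not the real demo")
    parser.add_argument("-m_trn", "--translation_model", required=True,
                        help="path to the translation model IR (.xml)")
    parser.add_argument("-m_seg", "--segmentation_model", required=False,
                        help="path to the segmentation model IR (.xml)")
    parser.add_argument("-o", "--output_dir", required=True,
                        help="directory for the generated images")
    return parser


def missing_required(argv):
    """Return True if argparse rejects argv (it prints the error and exits)."""
    try:
        build_parser().parse_args(argv)
    except SystemExit:
        return True
    return False


if __name__ == "__main__":
    # Only the optional segmentation model is given, so parsing fails.
    print(missing_required(["-m_seg", "hrnet-v2-c1-segmentation.xml"]))
```

So the demo cannot start until cocosnet.xml exists and is passed with -m_trn.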

gb8

Hi gb8,

There are two ways to run this demo. You can refer to the commands here.

In addition, there are some fixes to the preprocessing for the Segmentation and Translation models.

Please modify these two files (models.py and preprocessing.py) in the <omz>/demos/image_translation_demo/python/image_translation_demo directory.

Here are the changes that need to be made.

Regards,

Peh

@gb8 what was the issue with using omz_converter?

Note: when you want to fetch the models for a particular demo, it can be convenient to pass the models.lst file from the demo folder as a parameter to the omz_downloader or omz_converter tools.

I've just tried this in an OpenVINO 2022.1 Python environment:

omz_downloader --list <omz_dir>/demos/image_translation_demo/python/models.lst -o <out_dir>

omz_converter --list <omz_dir>/demos/image_translation_demo/python/models.lst -o <out_dir> -d <out_dir> --precisions FP16

and the models listed in the models.lst file were downloaded and converted.
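If you drive these tools from a script or notebook, a small wrapper that stops on a non-zero return code makes a failed conversion harder to miss. This is only a sketch: the function names and the omz_dir/out_dir arguments are placeholders of mine, and the only command-line flags used are the ones shown in the two commands above.

```python
import subprocess
from pathlib import Path


def build_commands(omz_dir, out_dir, precisions="FP16"):
    """Build the downloader and converter command lines for the demo's models.lst."""
    models_lst = (Path(omz_dir) / "demos" / "image_translation_demo"
                  / "python" / "models.lst")
    download = ["omz_downloader", "--list", str(models_lst), "-o", str(out_dir)]
    convert = ["omz_converter", "--list", str(models_lst), "-o", str(out_dir),
               "-d", str(out_dir), "--precisions", precisions]
    return download, convert


def run_all(omz_dir, out_dir):
    """Run both tools in order and raise as soon as one reports failure."""
    for command in build_commands(omz_dir, out_dir):
        completed = subprocess.run(command)
        if completed.returncode != 0:
            raise RuntimeError(
                f"{command[0]} exited with code {completed.returncode}")


if __name__ == "__main__":
    # Placeholder paths: replace with your own <omz_dir> and <out_dir>.
    for command in build_commands("<omz_dir>", "<out_dir>"):
        print(" ".join(command))
```

Calling run_all() requires that omz_downloader and omz_converter are on PATH, which is the case in an environment where openvino-dev is installed.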

Remember, when you install openvino-dev into the Python environment where you plan to convert models to IR, you also need to specify the extra dependencies for the frameworks you are going to use (or simply list all frameworks supported by OpenVINO).

You can do so by running a command like this:

pip install openvino==2022.1 openvino-dev[caffe,onnx,tensorflow2,pytorch,mxnet,paddle,kaldi]==2022.1

This will install the OpenVINO runtime and tools, along with all required dependencies at the proper versions, so that conversion to IR works for all of these frameworks.

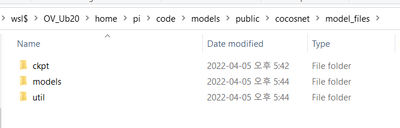

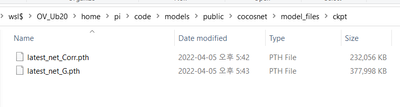

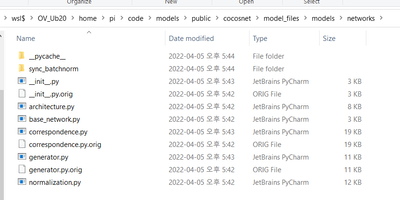

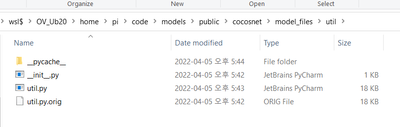

After running the model downloader command, the folder structure is as follows:

So the model download seems to have completed normally.

The download command in VS Code is:

I converted the models with the following command in VS Code:

Note: the convert command above produces '256'.
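One possible reading of that '256', assuming the command was launched with os.system() from a notebook on Linux (the post does not say how it was run): on Unix, os.system() returns a wait status rather than the plain exit code, and a status of 256 corresponds to exit code 1, which means the command failed. The helper below is a hypothetical name of mine and only sketches the conventional Linux encoding.

```python
def decode_wait_status(status):
    """Split a Unix wait status into (exit_code, signal_number).

    Conventional Linux encoding: the low 7 bits hold the terminating
    signal (0 if the process exited normally) and bits 8-15 hold the
    exit code.
    """
    signal_number = status & 0x7F
    exit_code = (status >> 8) & 0xFF
    return exit_code, signal_number


if __name__ == "__main__":
    exit_code, signal_number = decode_wait_status(256)
    print(f"exit code {exit_code}, signal {signal_number}")
```

A non-zero exit code from the converter is a cue to scroll through its output for the actual error.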

I am very sorry.

I overlooked the output message of downloader and converter because it is so long and always did well for other examples.

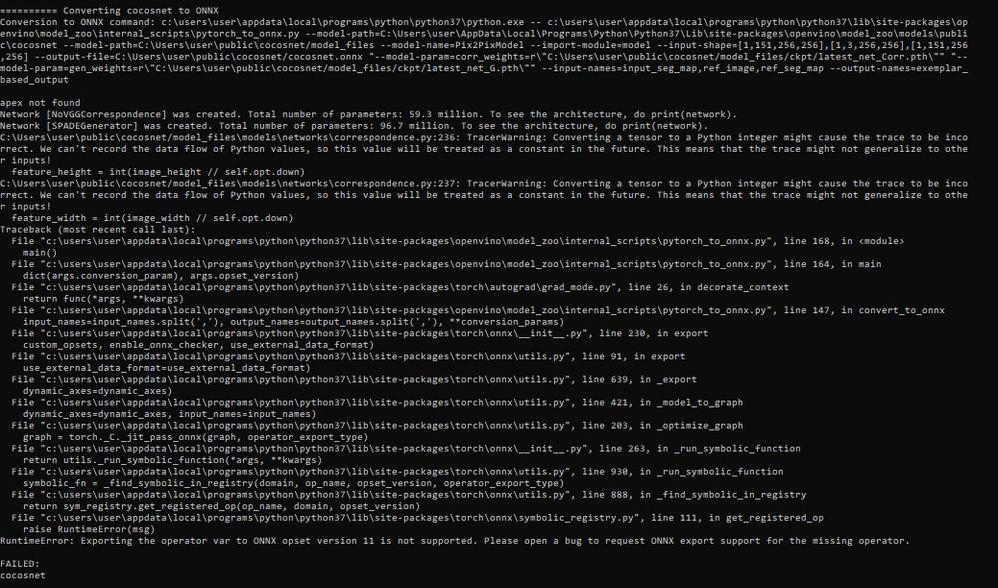

There is converting error for cocosnet as follows:

============================================

################|| Downloading cocosnet ||################
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/util/__init__.py from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/util/util.py from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/models/networks/__init__.py from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/models/networks/architecture.py from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/models/networks/base_network.py from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/models/networks/correspondence.py from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/models/networks/generator.py from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/models/networks/normalization.py from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/models/__init__.py from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/models/networks/sync_batchnorm/__init__.py from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/models/networks/sync_batchnorm/batchnorm.py from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/models/networks/sync_batchnorm/replicate.py from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/models/networks/sync_batchnorm/comm.py from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/ckpt/latest_net_Corr.pth from the cache
========== Retrieving /home/pi/code/models/public/cocosnet/model_files/ckpt/latest_net_G.pth from the cache
========== Replacing text in /home/pi/code/models/public/cocosnet/model_files/models/networks/__init__.py
========== Replacing text in /home/pi/code/models/public/cocosnet/model_files/models/networks/correspondence.py
========== Replacing text in /home/pi/code/models/public/cocosnet/model_files/models/networks/correspondence.py
========== Replacing text in /home/pi/code/models/public/cocosnet/model_files/models/networks/generator.py
========== Replacing text in /home/pi/code/models/public/cocosnet/model_files/util/util.py
################|| Downloading hrnet-v2-c1-segmentation ||################
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/lib/__init__.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/lib/nn/__init__.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/lib/nn/parallel/__init__.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/lib/nn/parallel/data_parallel.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/lib/nn/modules/batchnorm.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/lib/nn/modules/comm.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/lib/nn/modules/__init__.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/lib/nn/modules/replicate.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/models/__init__.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/models/hrnet.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/models/mobilenet.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/models/models.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/models/resnet.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/models/resnext.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/models/utils.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/mit_semseg/__init__.py from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/ckpt/decoder_epoch_30.pth from the cache
========== Retrieving /home/pi/code/models/public/hrnet-v2-c1-segmentation/ckpt/encoder_epoch_30.pth from the cache
========== Converting cocosnet to ONNX
Conversion to ONNX command: /home/pi/miniconda3/envs/ov/bin/python3.8 -- /home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/openvino/model_zoo/internal_scripts/pytorch_to_onnx.py --model-path=/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/openvino/model_zoo/models/public/cocosnet --model-path=/home/pi/code/models/public/cocosnet/model_files --model-name=Pix2PixModel --import-module=model '--input-shape=[1,151,256,256],[1,3,256,256],[1,151,256,256]' --output-file=/home/pi/code/models/public/cocosnet/cocosnet.onnx '--model-param=corr_weights=r"/home/pi/code/models/public/cocosnet/model_files/ckpt/latest_net_Corr.pth"' '--model-param=gen_weights=r"/home/pi/code/models/public/cocosnet/model_files/ckpt/latest_net_G.pth"' --input-names=input_seg_map,ref_image,ref_seg_map --output-names=exemplar_based_output
apex not found
Network [NoVGGCorrespondence] was created. Total number of parameters: 59.3 million. To see the architecture, do print(network).
Network [SPADEGenerator] was created. Total number of parameters: 96.7 million. To see the architecture, do print(network).

/home/pi/code/models/public/cocosnet/model_files/models/networks/correspondence.py:236: TracerWarning: Converting a tensor to a Python integer might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!

feature_height = int(image_height // self.opt.down)

/home/pi/code/models/public/cocosnet/model_files/models/networks/correspondence.py:237: TracerWarning: Converting a tensor to a Python integer might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!

feature_width = int(image_width // self.opt.down)

Traceback (most recent call last):

File "/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/openvino/model_zoo/internal_scripts/pytorch_to_onnx.py", line 168, in <module>

main()

File "/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/openvino/model_zoo/internal_scripts/pytorch_to_onnx.py", line 163, in main

convert_to_onnx(model, args.input_shapes, args.output_file, args.input_names, args.output_names, args.inputs_dtype,

File "/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/torch/autograd/grad_mode.py", line 26, in decorate_context

return func(*args, **kwargs)

File "/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/openvino/model_zoo/internal_scripts/pytorch_to_onnx.py", line 146, in convert_to_onnx

torch.onnx.export(model, dummy_inputs, str(output_file), verbose=False, opset_version=opset_version,

File "/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/torch/onnx/__init__.py", line 225, in export

return utils.export(model, args, f, export_params, verbose, training,

File "/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/torch/onnx/utils.py", line 85, in export

_export(model, args, f, export_params, verbose, training, input_names, output_names,

File "/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/torch/onnx/utils.py", line 632, in _export

_model_to_graph(model, args, verbose, input_names,

File "/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/torch/onnx/utils.py", line 417, in _model_to_graph

graph = _optimize_graph(graph, operator_export_type,

File "/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/torch/onnx/utils.py", line 203, in _optimize_graph

graph = torch._C._jit_pass_onnx(graph, operator_export_type)

File "/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/torch/onnx/__init__.py", line 263, in _run_symbolic_function

return utils._run_symbolic_function(*args, **kwargs)

File "/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/torch/onnx/utils.py", line 930, in _run_symbolic_function

symbolic_fn = _find_symbolic_in_registry(domain, op_name, opset_version, operator_export_type)

File "/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/torch/onnx/utils.py", line 888, in _find_symbolic_in_registry

return sym_registry.get_registered_op(op_name, domain, opset_version)

File "/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/torch/onnx/symbolic_registry.py", line 111, in get_registered_op

raise RuntimeError(msg)

RuntimeError: Exporting the operator var to ONNX opset version 11 is not supported. Please open a bug to request ONNX export support for the missing operator.

========== Converting hrnet-v2-c1-segmentation to ONNX
Conversion to ONNX command: /home/pi/miniconda3/envs/ov/bin/python3.8 -- /home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/openvino/model_zoo/internal_scripts/pytorch_to_onnx.py --model-path=/home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/openvino/model_zoo/models/public/hrnet-v2-c1-segmentation --model-path=/home/pi/code/models/public/hrnet-v2-c1-segmentation --model-name=HrnetV2C1 --import-module=model --input-shape=1,3,320,320 --output-file=/home/pi/code/models/public/hrnet-v2-c1-segmentation/hrnet-v2-c1-segmentation.onnx '--model-param=encoder_weights=r"/home/pi/code/models/public/hrnet-v2-c1-segmentation/ckpt/encoder_epoch_30.pth"' '--model-param=decoder_weights=r"/home/pi/code/models/public/hrnet-v2-c1-segmentation/ckpt/decoder_epoch_30.pth"' --input-names=data --output-names=prob
Loading weights for net_encoder
Loading weights for net_decoder
ONNX check passed successfully.
========== Converting hrnet-v2-c1-segmentation to IR (FP16)
Conversion command: /home/pi/miniconda3/envs/ov/bin/python3.8 -- /home/pi/miniconda3/envs/ov/bin/mo --framework=onnx --data_type=FP16 --output_dir=/home/pi/code/models/public/hrnet-v2-c1-segmentation/FP16 --model_name=hrnet-v2-c1-segmentation --input=data --reverse_input_channels '--mean_values=data[123.675,116.28,103.53]' '--scale_values=data[58.395,57.12,57.375]' --output=prob --input_model=/home/pi/code/models/public/hrnet-v2-c1-segmentation/hrnet-v2-c1-segmentation.onnx '--layout=data(NCHW)' '--input_shape=[1, 3, 320, 320]'
Model Optimizer arguments:
Common parameters:
- Path to the Input Model: /home/pi/code/models/public/hrnet-v2-c1-segmentation/hrnet-v2-c1-segmentation.onnx
- Path for generated IR: /home/pi/code/models/public/hrnet-v2-c1-segmentation/FP16
- IR output name: hrnet-v2-c1-segmentation
- Log level: ERROR
- Batch: Not specified, inherited from the model
- Input layers: data
- Output layers: prob
- Input shapes: [1, 3, 320, 320]
- Source layout: Not specified
- Target layout: Not specified
- Layout: data(NCHW)
- Mean values: data[123.675,116.28,103.53]
- Scale values: data[58.395,57.12,57.375]
- Scale factor: Not specified
- Precision of IR: FP16
- Enable fusing: True
- User transformations: Not specified
- Reverse input channels: True
- Enable IR generation for fixed input shape: False
- Use the transformations config file: None
Advanced parameters:
- Force the usage of legacy Frontend of Model Optimizer for model conversion into IR: False
- Force the usage of new Frontend of Model Optimizer for model conversion into IR: False
OpenVINO runtime found in: /home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/openvino
OpenVINO runtime version: 2022.1.0-7019-cdb9bec7210-releases/2022/1
Model Optimizer version: 2022.1.0-7019-cdb9bec7210-releases/2022/1
[ SUCCESS ] Generated IR version 11 model.
[ SUCCESS ] XML file: /home/pi/code/models/public/hrnet-v2-c1-segmentation/FP16/hrnet-v2-c1-segmentation.xml
[ SUCCESS ] BIN file: /home/pi/code/models/public/hrnet-v2-c1-segmentation/FP16/hrnet-v2-c1-segmentation.bin
[ SUCCESS ] Total execution time: 1.95 seconds.
[ SUCCESS ] Memory consumed: 595 MB.
[ INFO ] The model was converted to IR v11, the latest model format that corresponds to the source DL framework input/output format. While IR v11 is backwards compatible with OpenVINO Inference Engine API v1.0, please use API v2.0 (as of 2022.1) to take advantage of the latest improvements in IR v11.
Find more information about API v2.0 and IR v11 at https://docs.openvino.ai
========== Converting hrnet-v2-c1-segmentation to IR (FP32)
Conversion command: /home/pi/miniconda3/envs/ov/bin/python3.8 -- /home/pi/miniconda3/envs/ov/bin/mo --framework=onnx --data_type=FP32 --output_dir=/home/pi/code/models/public/hrnet-v2-c1-segmentation/FP32 --model_name=hrnet-v2-c1-segmentation --input=data --reverse_input_channels '--mean_values=data[123.675,116.28,103.53]' '--scale_values=data[58.395,57.12,57.375]' --output=prob --input_model=/home/pi/code/models/public/hrnet-v2-c1-segmentation/hrnet-v2-c1-segmentation.onnx '--layout=data(NCHW)' '--input_shape=[1, 3, 320, 320]' '--layout=data(NCHW)' '--input_shape=[1, 3, 320, 320]'
Model Optimizer arguments:
Common parameters:
- Path to the Input Model: /home/pi/code/models/public/hrnet-v2-c1-segmentation/hrnet-v2-c1-segmentation.onnx
- Path for generated IR: /home/pi/code/models/public/hrnet-v2-c1-segmentation/FP32
- IR output name: hrnet-v2-c1-segmentation
- Log level: ERROR
- Batch: Not specified, inherited from the model
- Input layers: data
- Output layers: prob
- Input shapes: [1, 3, 320, 320]
- Source layout: Not specified
- Target layout: Not specified
- Layout: data(NCHW)
- Mean values: data[123.675,116.28,103.53]
- Scale values: data[58.395,57.12,57.375]
- Scale factor: Not specified
- Precision of IR: FP32
- Enable fusing: True
- User transformations: Not specified
- Reverse input channels: True
- Enable IR generation for fixed input shape: False
- Use the transformations config file: None
Advanced parameters:
- Force the usage of legacy Frontend of Model Optimizer for model conversion into IR: False
- Force the usage of new Frontend of Model Optimizer for model conversion into IR: False
OpenVINO runtime found in: /home/pi/miniconda3/envs/ov/lib/python3.8/site-packages/openvino
OpenVINO runtime version: 2022.1.0-7019-cdb9bec7210-releases/2022/1
Model Optimizer version: 2022.1.0-7019-cdb9bec7210-releases/2022/1
[ SUCCESS ] Generated IR version 11 model.
[ SUCCESS ] XML file: /home/pi/code/models/public/hrnet-v2-c1-segmentation/FP32/hrnet-v2-c1-segmentation.xml
[ SUCCESS ] BIN file: /home/pi/code/models/public/hrnet-v2-c1-segmentation/FP32/hrnet-v2-c1-segmentation.bin
[ SUCCESS ] Total execution time: 1.35 seconds.
[ SUCCESS ] Memory consumed: 599 MB.
[ INFO ] The model was converted to IR v11, the latest model format that corresponds to the source DL framework input/output format. While IR v11 is backwards compatible with OpenVINO Inference Engine API v1.0, please use API v2.0 (as of 2022.1) to take advantage of the latest improvements in IR v11.
Find more information about API v2.0 and IR v11 at https://docs.openvino.ai
FAILED: cocosnet

======================================================================
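Because the failure summary sits at the very end of a long log, it is easy to overlook. A small helper can pull the failed model names out of captured converter output. This is a sketch under one assumption taken from the log above: the summary is introduced by the text "FAILED:" and is the last thing printed. The function name is a placeholder of mine.

```python
def failed_models(converter_output):
    """Return the model names listed after the last 'FAILED:' marker.

    Assumes the failure summary is the final part of the output, as in
    the log above. Names may follow on the same line or on later lines.
    """
    marker = "FAILED:"
    index = converter_output.rfind(marker)
    if index == -1:
        return []
    return converter_output[index + len(marker):].split()


if __name__ == "__main__":
    sample = "[ SUCCESS ] Memory consumed: 599 MB.\nFAILED: cocosnet\n"
    print(failed_models(sample))
```

The output text could be captured with subprocess.run(..., capture_output=True, text=True) and passed to this function.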

gb8



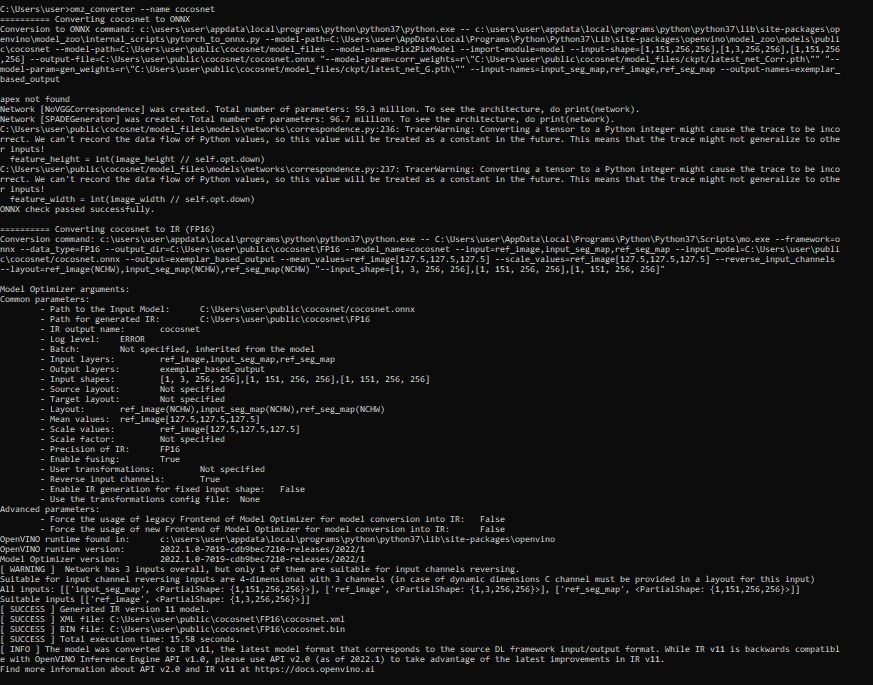

Hi gb8,

Below are the results of converting the CocosNet model from the Command Prompt. As you can see, the result messages (success, failure, and info) are shown.

I tried converting the CocosNet model without installing the dependencies, and the conversion failed.

Please make sure you run the command below to install the dependencies:

pip install openvino-dev[pytorch]==2022.1
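A quick way to check whether the environment has the framework packages before running omz_converter is sketched below. It assumes that converting cocosnet needs the `torch` and `onnx` packages to be importable (this list is an assumption based on the `[pytorch]` extra mentioned above, not something confirmed in this thread), and `missing_modules` is a hypothetical helper name.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names from `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumption: these are the packages the PyTorch conversion path relies on.
    required = ["torch", "onnx"]
    missing = missing_modules(required)
    if missing:
        print("Missing packages:", ", ".join(missing))
        print("Try: pip install openvino-dev[pytorch]==2022.1")
    else:
        print("All listed packages are importable.")
```

If anything is reported missing, reinstalling with the pip command above in the same Python environment is the first thing to try.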

Regards,

Peh

Hi Peh.

Your suggestion worked.

Previously, some days ago, I had installed with the following command:

- pip install 'openvino-dev[caffe, kaldi, mxnet, onnx, pytorch, tensorflow2]'

Now, using the command 'pip install openvino-dev[pytorch]==2022.1', it succeeded.

Is there a difference between the two pip commands?

Thanks a lot.

gb8

Hi gb8,

Thanks for confirming that it is working now.

Both pip commands are essentially the same; the only difference is that the second one specifies the version. If you install OpenVINO™ from PyPI without specifying a version, pip installs the latest version available at that moment.
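To see which version pip actually installed in a given environment, a small check like the following can help. This is only a sketch using the standard `importlib.metadata` module (Python 3.8+); `installed_version` is a hypothetical helper name, and `openvino-dev` is the distribution name used in the pip commands in this thread.

```python
from importlib import metadata


def installed_version(dist_name):
    """Return the installed version string of a distribution, or None if absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version("openvino-dev")
    if version is None:
        print("openvino-dev is not installed in this environment.")
    else:
        print("openvino-dev version:", version)
```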

Regards,

Peh

Peh.

Thank you for the detailed explanation.

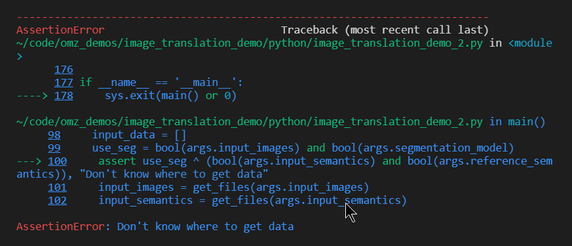

After the installation succeeded, I ran the demo following the documentation, which says that only the '-ri' (reference images) argument is required and the other image arguments are optional.

Running with only that argument produces the following error:

=====================================

==========================================

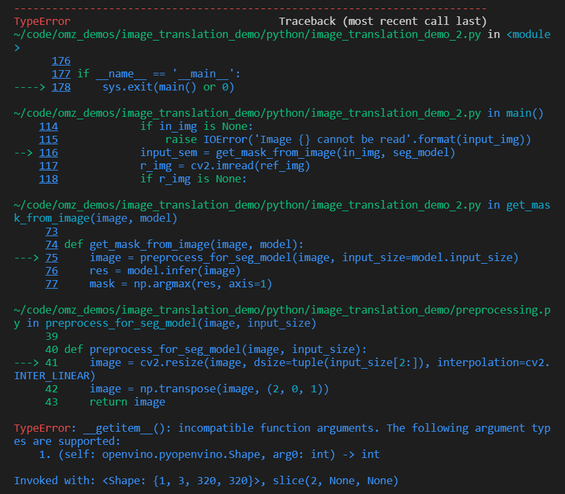

So I changed the arguments to '-ii imagefile -ri imagefile' (the same image file for both, as a test), and then got the following error message:

I am not familiar with image translation; I just want to introduce this model to people.

If possible, could I get suitable images for this demonstration to work?

gb8

Hi gb8,

There are two ways to run with this demo. You can refer to commands here.

Besides, there are also some fixes to the preprocessing for Segmentation and Translation models.

Please modify these two files (models.py and preprocessing.py) in the

<omz>/demos/image_translation_demo/python/image_translation_demo directory.

Here are the changes that need to be made.

Regards,

Peh

OK.

I see.

As I mentioned before, I am not familiar with image translation, so I would rather postpone the test until everything is fixed.

I respect your hard work on this.

Thanks a lot.

gb8

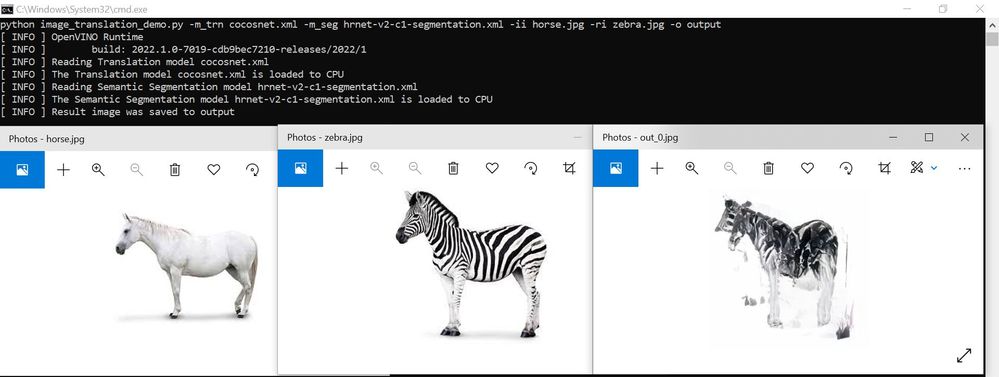

Hi gb8,

I’ve validated that the demo works fine on my side after modifying these two files (models.py and preprocessing.py).

Command used:

python image_translation_demo.py -m_trn cocosnet.xml -m_seg hrnet-v2-c1-segmentation.xml -ii horse.jpg -ri zebra.jpg -o output
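For anyone scripting this, the command above could be assembled in Python roughly as follows. This is a sketch only: `build_demo_command` is a hypothetical helper, and the split between required arguments (`-m_trn`, `-o`) and optional ones (`-m_seg`, `-ii`, `-ri`) is taken from the error message and documentation notes quoted earlier in this thread, not verified against the demo source.

```python
import shlex
import sys


def build_demo_command(translation_model, output_dir, input_image=None,
                       reference_image=None, segmentation_model=None,
                       script="image_translation_demo.py"):
    """Build the argument list for the image translation demo."""
    cmd = [sys.executable, script, "-m_trn", translation_model]
    if segmentation_model:
        cmd += ["-m_seg", segmentation_model]
    if input_image:
        cmd += ["-ii", input_image]
    if reference_image:
        cmd += ["-ri", reference_image]
    cmd += ["-o", output_dir]
    return cmd


if __name__ == "__main__":
    cmd = build_demo_command(
        "cocosnet.xml", "output",
        input_image="horse.jpg",
        reference_image="zebra.jpg",
        segmentation_model="hrnet-v2-c1-segmentation.xml",
    )
    # Print the command; pass `cmd` to subprocess.run(cmd, check=True) to execute it.
    print(shlex.join(cmd))
```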

I have also attached the two modified files. You can use them to replace the existing files in the directory below:

<omz>/demos/image_translation_demo/python/image_translation_demo

Regards,

Peh

Yes. I tested it and everything is OK.

Thank you.

gb8

Hi gb8,

This thread will no longer be monitored since this issue has been resolved. If you need any additional information from Intel, please submit a new question.

Regards,

Peh
