Hello,

I have been using the OpenVINO toolkit with my NCS2 for a while now and I have learned a lot.

But I still have not met my intended goals. Every time I get close, a major obstacle or redirection arises. I am careful about posting for help, so please forgive me if the info I am seeking is posted already. I am asking for direction.

In a nutshell, many months ago I knew nothing about AI or ML whatsoever, but I had a bunch of cameras, a few Raspberry Pis, and my interest. With only vague knowledge, I impulse-bought the Neural Compute Stick 2 on Amazon one day. I figured I would like to monitor the activity of my cats while I am away. If that works, then train voice commands, or something.

I am using a Dell Inspiron 5558 (Windows 10, i5, no NVIDIA GPU) and I own 2x Neural Compute Stick 2 to work with. I also have a project set up on Google Colab if I need a GPU or CUDA.

I am under the impression that the NCS2 and OpenVINO are mostly designed for inference. It was tough to figure out anything from level 0, but I did get to the point where I could install OpenVINO + DL Streamer and run inference on my NCS2 from my CCTV camera. I am working in Python. I know PHP, and I can read and modify Python. I also compiled OpenVINO on the rpi4b+. I messed with the Open Model Zoo and went through the notebooks. I installed Docker and somehow got the Workbench up and running. Overall, I'm now just using OpenVINO in Python on Windows.

In an ideal world, there would be some way to take footage from my Blue Iris software, run object detection on it through OpenVINO, and save the results to a MySQL database that I can check periodically. OpenVINO does have training extensions for custom datasets, just as Blue Iris uses DeepStack (or something) for vision recognition. Blue Iris's DeepStack AI will not run on the NCS2, and the guides on OpenVINO Training Extensions use Ubuntu, no NCS2, and are confusing to the casual techie. I'm trying.

So far I have managed to create a dataset of about 900 training images and 300 validation images, and ran them through YOLOv7. I set up a Google Colab and produced my *.pt weights file.

I can run yolo inference and get accurate object detection on my cpu, just slow.

I have been trying a few days now to get the conversion from *.pt over to a working openvino IR file set.

I followed the notebook : Convert a PyTorch Model to ONNX and OpenVINO™ IR — OpenVINO™ documentation — Version(latest)

and it works on the specific fastseg model, but not my *.pt model. I have checked input and output shapes. At some point it gets too deep for me.

same thing.

I seem to be able to convert my *.pt models to *.onnx, and then use the OpenVINO Model Optimizer to convert them into IR format. I can also read out the input and output shapes. But running inference on my own conversions always outputs some form of crap. I reshape, reconvert, re-encode the data, and I get a new form of mismatch. A mismatch which might require an I.T. college degree to interpret. I'm trying.

Sorry for not being specific about the errors; I am not listing bugs (yet).

My question: What would be the best way to train on a custom dataset and get the result running on my NCS2?

I started with YOLO because it seemed the most user friendly, but I cannot get it converted over to IR. I'm open to using TensorFlow, PyTorch, or Open Model Zoo + OTE. I just need a working guide with working demos which are slightly flexible on the variables and data. I want to train models to recognize specific individuals, and run them on my NCS2 sticks. Am I on the right path?

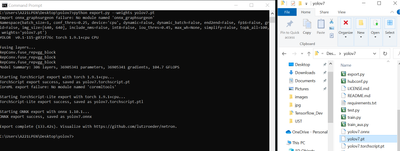

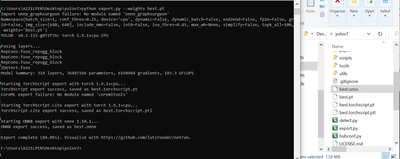

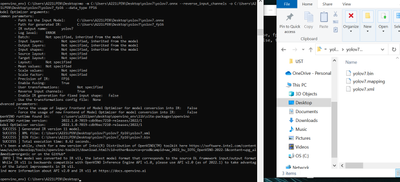

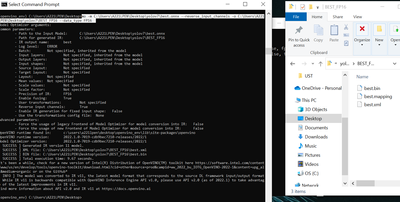

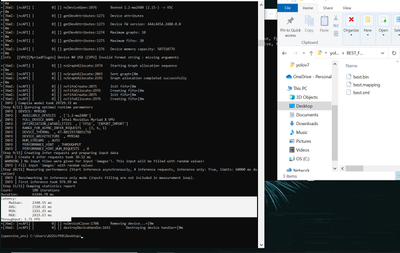

From my side, I don't see any issue converting and running inference with both the original YOLOv7 model and your model (the model named best).

Conversion to ONNX using the script export.py

YOLOV7

BEST

Infer the ONNX model using the OpenVINO benchmark_app (running without a device specification means I'm using the Intel CPU by default)

YOLOV7

BEST

Convert ONNX into OpenVINO IR with FP16 precision

YOLOV7

BEST

Infer the IR with NCS2/MYRIAD

YOLOV7

BEST

Therefore, from OpenVINO perspective, I don't see any issue with the model.
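For anyone following along, the four steps above can be written down as command lines. This is only a sketch under assumptions: the exact flags Iffa used are not shown in the thread, so `--grid` and `--img-size` for YOLOv7's `export.py`, the `ir` output folder, and `--data_type FP16` (as accepted by the 2022.2 Model Optimizer seen later in this thread) are my guesses at a reasonable setup, and the `pipeline` helper is hypothetical.

```python
import shlex


def yolov7_export_cmd(weights, img_size=640):
    """Command for the YOLOv7 repo's export.py (run from the repo root).

    The flag choice is an assumption; the thread does not show the real one.
    """
    return ["python", "export.py", "--weights", weights,
            "--grid", "--img-size", str(img_size), str(img_size)]


def mo_fp16_cmd(onnx_path, output_dir):
    """Model Optimizer command that writes an FP16 IR (.xml + .bin)."""
    return ["mo", "--input_model", onnx_path,
            "--data_type", "FP16", "--output_dir", output_dir]


def benchmark_cmd(model_path, device="CPU"):
    """benchmark_app accepts ONNX or IR files; -d selects the device."""
    return ["benchmark_app", "-m", model_path, "-d", device]


def pipeline(name="best", ir_dir="ir"):
    """Return the four steps in order: export, check ONNX, convert, check IR."""
    onnx_path = f"{name}.onnx"          # export.py writes it next to the .pt
    xml_path = f"{ir_dir}/{name}.xml"   # mo names the IR after the input model
    return [
        yolov7_export_cmd(f"{name}.pt"),
        benchmark_cmd(onnx_path, "CPU"),
        mo_fp16_cmd(onnx_path, ir_dir),
        benchmark_cmd(xml_path, "MYRIAD"),
    ]


if __name__ == "__main__":
    for cmd in pipeline():
        print(shlex.join(cmd))
```

Printing the commands instead of running them keeps the sketch harmless; each line can be pasted into the activated environment one at a time, so a failure points at a single step.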

For implementing those YOLO models (training, inference, etc.) in Google Colab, you'll need to consult the Google Colab documentation. Colab has functionality that lets you import your own script and use it.

This part is beyond OpenVINO usage, so it is outside the scope of this forum.

Note: one of the main purposes of using Intel OpenVINO is to enable inferencing on Intel GPUs.

If you want to use CUDA/NVIDIA, you might as well use other deep learning platforms, e.g. TensorFlow and OpenCV.

Sincerely,

Iffa

Hi,

From an OpenVINO perspective, the best way to retrain a model is by using the OpenVINO Training Extensions.

You may refer to:

- OpenVINO™ Training Extensions video tutorial

- OpenVINO™ Training Extensions official documentation

- GitHub repo: OpenVINO training_extension

Note: You'll need to have the same folder hierarchy as indicated in the video, or you can take any of these data samples and adapt them to your use case.

Although the video was recorded on Linux (Ubuntu), the same concept can be applied to Windows. You just need to adjust some commands, for example: wget --> download the file directly; tar xf --> right-click and unzip.

For converting the model into IR, you could:

- Convert the model into ONNX (OpenVINO supports native ONNX format inferencing) --as you mentioned above

- Convert the TensorFlow frozen model into IR (XML and BIN) --I usually use this method; you can choose the conversion guide for your model type from the list on the left side of the documentation

Make sure you convert the model into FP16 format since you intend to use NCS2.

If you are using the PyPI installation of OpenVINO (pip install), you need to use the mo command instead of omz_converter.

Flow in AI:

First, the general Neural Network phase :

Data --> model --> fit model -->evaluate --> adjust model parameters --> repeat until satisfied (to get your objective)

Once completed, you'll have a trained model (.pt, .h5, etc)

Next, the general inferencing phase (related to OpenVINO):

Trained model -->convert to IR --> infer --> evaluate --> re-train model --> repeat until satisfied

Sincerely,

Iffa

In most cases I would dig right in, building the environment and running the commands.

I have learned that some of these environments can take a while to build and configure, and some of these commands can take a while to complete. So I decided to read more on ML until time allowed for me to try this.

I followed and read the links posted.

I am going to try the OTE now based on this guide :

training_extensions/QUICK_START_GUIDE.md at develop · openvinotoolkit/training_extensions · GitHub

Please forgive me if some of the tech should be obvious & I just don't get it.

This is the end point where I no longer believe I can figure this out, so I reach out to others who may possibly share their expertise.

I am learning to recognize different windows CMD, python, and bash shell commands. Sometimes it is not obvious to the inexperienced reader. In most cases I create a python environment, install the various components (requirements.txt) and then start more configurations and functions. As I understand, this process is possible on windows I just need to switch out some commands.

I tried :

cd c:/ai/

git clone https://github.com/openvinotoolkit/training_extensions.git

cd training_extensions

git checkout develop

git submodule update --init --recursive

Python 3.9.13; pip 22.0.4 from c:\ai\venv_mpa\lib\site-packages\pip (python 3.9); virtualenv 20.16.7 from C:\Users\*****\AppData\Local\Programs\Python\Python39\lib\site-packages\virtualenv\__init__.py

find external/ -name init_venv.sh

FIND: Parameter format not correct

dir c:\ai\training_extensions\external /s /b | find "init_venv.sh"

c:\ai\training_extensions\external\anomaly\init_venv.sh

c:\ai\training_extensions\external\deep-object-reid\init_venv.sh

c:\ai\training_extensions\external\deep-object-reid\submodule\init_venv.sh

c:\ai\training_extensions\external\mmdetection\init_venv.sh

c:\ai\training_extensions\external\mmdetection\submodule\init_venv.sh

c:\ai\training_extensions\external\mmsegmentation\init_venv.sh

c:\ai\training_extensions\external\mmsegmentation\submodule\init_venv.sh

c:\ai\training_extensions\external\model-preparation-algorithm\init_venv.sh

external/model-preparation-algorithm/init_venv.sh .venv_mpa

'external' is not recognized as an internal or external command,

operable program or batch file.

edit: running

C:\ai\training_extensions\external\model-preparation-algorithm\init_venv.sh .venv_mpa

would just open a separate command window, rapidly flash text, and close. I tried variations with a few flags. I couldn't get the command to work, so I made my own Python environment.

python -m venv c:\ai\venv_mpa

c:/ai/venv_mpa/scripts/activate

(venv_mpa) cd c:/ai/training_extensions

(venv_mpa) pip3 install -e ote_cli/ -c c:\ai\training_extensions\external\model-preparation-algorithm\constraints.txt

(venv_mpa) ote find --help

Traceback (most recent call last):

File "c:\ai\venv_mpa\Scripts\ote-script.py", line 33, in <module>

sys.exit(load_entry_point('ote-cli', 'console_scripts', 'ote')())

File "c:\ai\venv_mpa\Scripts\ote-script.py", line 25, in importlib_load_entry_point

return next(matches).load()

File "C:\Users\surfreedo\AppData\Local\Programs\Python\Python39\lib\importlib\metadata.py", line 86, in load

module = import_module(match.group('module'))

File "C:\Users\surfreedo\AppData\Local\Programs\Python\Python39\lib\importlib\__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "<frozen importlib._bootstrap>", line 1030, in _gcd_import

File "<frozen importlib._bootstrap>", line 1007, in _find_and_load

File "<frozen importlib._bootstrap>", line 986, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 680, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 850, in exec_module

File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed

File "c:\ai\training_extensions\ote_cli\ote_cli\tools\ote.py", line 22, in <module>

from .demo import main as ote_demo

File "c:\ai\training_extensions\ote_cli\ote_cli\tools\demo.py", line 25, in <module>

from ote_sdk.configuration.helper import create

ModuleNotFoundError: No module named 'ote_sdk'

(venv_mpa) ote --help

Traceback (most recent call last):

File "c:\ai\venv_mpa\Scripts\ote-script.py", line 33, in <module>

sys.exit(load_entry_point('ote-cli', 'console_scripts', 'ote')())

File "c:\ai\venv_mpa\Scripts\ote-script.py", line 25, in importlib_load_entry_point

return next(matches).load()

File "C:\Users\surfreedo\AppData\Local\Programs\Python\Python39\lib\importlib\metadata.py", line 86, in load

module = import_module(match.group('module'))

File "C:\Users\surfreedo\AppData\Local\Programs\Python\Python39\lib\importlib\__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "<frozen importlib._bootstrap>", line 1030, in _gcd_import

File "<frozen importlib._bootstrap>", line 1007, in _find_and_load

File "<frozen importlib._bootstrap>", line 986, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 680, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 850, in exec_module

File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed

File "c:\ai\training_extensions\ote_cli\ote_cli\tools\ote.py", line 22, in <module>

from .demo import main as ote_demo

File "c:\ai\training_extensions\ote_cli\ote_cli\tools\demo.py", line 25, in <module>

from ote_sdk.configuration.helper import create

ModuleNotFoundError: No module named 'ote_sdk'

I feel like I missed a step or two. I do not believe I should try to proceed forth with further operations until this is resolved. I have run out of hours to investigate on my own so I must request help. The OpenVINO video tutorials show a different line of commands than the Quick Start Guide.
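A quick way to narrow this kind of error down is to ask the active interpreter which packages it can actually see, before running the `ote` command again. The sketch below is only an illustration: `missing_modules` is a hypothetical helper, and the module names are the ones taken from the traceback above. It uses the standard library only, so it runs in a bare virtual environment.

```python
import importlib.util
import sys


def missing_modules(names):
    """Return the module names that the active interpreter cannot locate."""
    missing = []
    for name in names:
        try:
            found = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            # Raised for dotted names with a missing parent, or broken specs.
            found = False
        if not found:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Shows which python is running, which matters when several venvs exist.
    print("interpreter:", sys.executable)
    print("missing:", missing_modules(["ote_cli", "ote_sdk"]))
```

If `ote_sdk` shows up as missing while the interpreter path points into the expected venv, the package was never installed there; if the interpreter path is a different Python, the environment was not activated.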

Is it possible to run training on the NCS2? Or should I keep my Google Colab account open? I'm running an old i-5 processor. Training models overnight wouldn't be so bad, if I didn't get errors or non-working results after hours of computations.

If I can actually complete this, I will seriously consider making a video tutorial on the process.

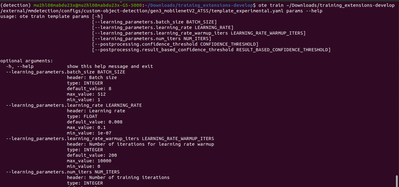

I believe I have found the issue. We are installing something from nothing.

I ran :

cd c:\ai\training_extensions\ote_sdk

python setup.py install

pip install openvino-dev[pytorch,onnx]

pip install torch torchvision torchaudio

ote find --help

usage: ote find [-h] [--root ROOT]

[--task_type {classification,detection,segmentation,instance_segmantation,rotated_detection,anomaly_classification,anomaly_detection,anomaly_segmentation}]

[--experimental]

optional arguments:

-h, --help show this help message and exit

--root ROOT A root dir where templates should be searched.

--task_type {classification,detection,segmentation,instance_segmantation,rotated_detection,anomaly_classification,anomaly_detection,anomaly_segmentation}

--experimental

So far so good. I will continue to try this and report back.

It seems as if many of the required demo files already exist inside the installation. I went back to the YouTube video.

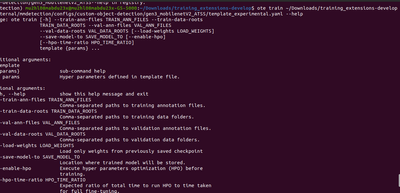

I believe the command for a demo run is on the github quick start guide :

ote train c:\ai\training_extensions\external\model-preparation-algorithm\configs\detection\mobilenetv2_ssd_cls_incr\template.yaml --train-ann-file c:\ai\training_extensions\data\airport\annotation_person_train.json --train-data-roots c:\ai\training_extensions\data\airport\train\ --val-ann-files c:\ai\training_extensions\data\airport\annotation_person_val.json --val-data-roots c:\ai\training_extensions\data\airport\val\ --save-model-to outputs

and the error I got was :

Traceback (most recent call last):

File "c:\ai\venv_mpa\Scripts\ote-script.py", line 33, in <module>

sys.exit(load_entry_point('ote-cli', 'console_scripts', 'ote')())

File "c:\ai\training_extensions\ote_cli\ote_cli\tools\ote.py", line 67, in main

globals()[f"ote_{name}"]()

File "c:\ai\training_extensions\ote_cli\ote_cli\tools\train.py", line 140, in main

task_class = get_impl_class(template.entrypoints.base)

File "c:\ai\training_extensions\ote_cli\ote_cli\utils\importing.py", line 27, in get_impl_class

task_impl_module = importlib.import_module(task_impl_module_name)

File "C:\Users\surfreedo\AppData\Local\Programs\Python\Python39\lib\importlib\__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "<frozen importlib._bootstrap>", line 1030, in _gcd_import

File "<frozen importlib._bootstrap>", line 1007, in _find_and_load

File "<frozen importlib._bootstrap>", line 972, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed

File "<frozen importlib._bootstrap>", line 1030, in _gcd_import

File "<frozen importlib._bootstrap>", line 1007, in _find_and_load

File "<frozen importlib._bootstrap>", line 972, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed

File "<frozen importlib._bootstrap>", line 1030, in _gcd_import

File "<frozen importlib._bootstrap>", line 1007, in _find_and_load

File "<frozen importlib._bootstrap>", line 984, in _find_and_load_unlocked

ModuleNotFoundError: No module named 'mpa_tasks'

I feel like I am making slow progress towards getting a custom-trained model running on my NCS2. When I attempt to install mpa_tasks, pip gives me: ERROR: No matching distribution found for mpa_tasks. I googled openvino "mpa_tasks" and got nothing.

Is there something I skipped downloading? In the video the narrator does a wget to grab the dataset. I believe the dataset already exists in my OTE data folder. I am unfamiliar with mpa_tasks and cannot find a reference. Help?

Thanks for reading.

Hi,

Thank you for your patience. After checking, this training extension is validated to run on Ubuntu 18.04 / 20.04 only. (I tried it on both Windows 10 and Ubuntu 20.04, and only Ubuntu works.)

As of now, there is no plan to enable this on Windows 10.

Hope you are aware of these prerequisites (which are stated in the official doc):

- Ubuntu 18.04 / 20.04

- Python 3.8+

- CUDA Toolkit 11.1 - for training on GPU

In order to get started with OpenVINO™ Training Extensions click here.

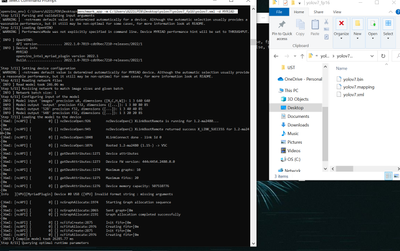

As I mentioned above, I managed to run OTE on my Ubuntu 20.04 machine:

I just followed the official documentation or the GitHub quick start page.

You could try the older version if you want to (the same one as in the video), but you need to have the same older environment.

Prerequisites

- Ubuntu 18.04 / 20.04

- Python 3.6+

- OpenVINO™ - for exporting and running models

- CUDA Toolkit 10.2 - for training on GPU

- Tensorflow 1.13.1

Older training_extension

Older training_extension_models

If your main objective is just to run a custom model on NCS2, I suggest you train your model in your native platform (e.g. TensorFlow) and then convert that trained model into IR with FP16 model precision.

Run that trained model using the OpenVINO benchmark_app with the parameter -d MYRIAD and see whether it can infer.

I believe this would be easier for you compared to re-training your model using the OpenVINO Training Extension since you are already familiar with your native development platform.
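The same "does it run on MYRIAD" check can also be done from Python rather than benchmark_app. What follows is a rough sketch, assuming the OpenVINO 2022.x Python API (`openvino.runtime.Core`); `pick_device` and `compile_on_best_device` are hypothetical helper names, and the `MYRIAD.<something>` naming for multiple sticks is my understanding of how the plugin lists them, not something confirmed in this thread.

```python
def pick_device(available, preferred=("MYRIAD", "CPU")):
    """Return the first preferred plugin name present in `available`.

    Entries such as "MYRIAD.1.2-ma2480" (assumed format when several
    sticks are attached) count as a match for "MYRIAD".
    """
    for want in preferred:
        for dev in available:
            if dev == want or dev.startswith(want + "."):
                return want
    raise RuntimeError(f"none of {preferred} found in {list(available)}")


def compile_on_best_device(xml_path):
    """Compile an IR on the NCS2 if one is plugged in, otherwise on the CPU."""
    # Imported here so pick_device stays usable without OpenVINO installed.
    from openvino.runtime import Core

    core = Core()
    device = pick_device(core.available_devices)
    compiled = core.compile_model(core.read_model(xml_path), device)
    return compiled, device


if __name__ == "__main__":
    print(pick_device(["CPU", "GNA", "MYRIAD"]))
```

Falling back to the CPU keeps the script usable while the stick is unplugged, and printing the chosen device makes it obvious which one actually ran.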

Sincerely,

Iffa

Thank you for following along with me so far. Thank you for your confidence in that I am familiar with a development platform. I'm trying.

Believe it or not, I wrote my own annotation program in PHP because none of the available software was quite right. I do know server-side admin and web development, but I don't know machine learning or AI. So far my capabilities have kept me able to hold a message board conversation with someone who does know ML and AI.

When I run the demos using the specific datasets, models, and parameters, my attempts always succeed. When I try to modify them, or drop in my own models, I get errors which require a better understanding than I have. I have exhausted all my time and chances and I am still trying.

For the purposes of this project, a few weeks back I did create a dataset and ran it through YOLOv7. Training is too slow on my old Intel i5 Inspiron PC, so I set up a Google Colab, which has a GPU (paid subscription). It produced a *.pt file. This is the command I used to run training in YOLOv7:

!python train.py --device 0 --batch-size 16 --epochs 300 --img 640 640 --data data/coco.yaml --hyp data/hyp.scratch.custom.yaml --cfg cfg/training/yolov7.yaml --weights yolov7.pt --name myTestModel

Which produced a best.pt model in the /yolov7/runs/myTestModel/ folder.

I copied this to my local machine (C:/ai/my/mymodels/best.pt) and tried to convert it to IR format for use with my NCS2 sticks.

On my local machine I have openvino[onnx,pytorch] installed and running in a Python environment on the Windows command line.

I used the PyTorch-to-ONNX docs to put this code together. I do not know how it works, and that is possibly my problem.

Convert a PyTorch Model to ONNX and OpenVINO™ IR — OpenVINO™ documentation — Version(latest)

Following the instructions in this guide allowed me to convert and use the specific fastseg model used in the guide. When I tried to modify the code to use my own best.pt file, I got errors. I've tried various changes to the code and I can't seem to wrap my head around it, and I do not see any (recent) video demos of this process from start to finish which explain the details.

At this time I am just trying to get working operations. After I get working operations, I will run them specifically on my NCS2. I understand the NCS2 will only run an FP16 format, but my Intel i5 CPU will run both. So, in this case, I am just trying to get ANYTHING operational. I specified the data type FP16 in my mo_command, but the printed input and output precisions show FP32. Since I am able to use my CPU, I dunno if this should be corrected or not.
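On the FP16 versus FP32 question: as far as I understand it, the precision printed for `model.input(0)` and `model.output(0)` describes the data going in and out of the model, not how the weights are stored in the IR, so the two can differ. One way to check what the Model Optimizer actually wrote is to look at the constant layers in the .xml file. This is a sketch under that assumption; `const_precisions` is a hypothetical helper, and it relies on the IR storing weights as `Const` layers whose `data` element carries an `element_type` attribute.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def const_precisions(xml_text):
    """Count the element types (e.g. f16, f32, i64) of Const layers in an IR."""
    root = ET.fromstring(xml_text)
    counts = Counter()
    for layer in root.iter("layer"):
        if layer.get("type") == "Const":
            data = layer.find("data")
            if data is not None:
                counts[data.get("element_type", "unknown")] += 1
    return counts


if __name__ == "__main__":
    # Tiny hand-written stand-in for an IR file, just to show the output shape.
    sample = """<net name="demo" version="11"><layers>
      <layer id="0" name="w" type="Const" version="opset1">
        <data element_type="f16" shape="8,3,3,3" offset="0" size="432"/>
      </layer>
    </layers></net>"""
    print(dict(const_precisions(sample)))
```

On a real model this would be called as `const_precisions(Path("mymodels/best.xml").read_text())`. Mostly `f16` entries would suggest the weights were stored in half precision even though the inputs report float32.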

I'm sorry if I do not know what I am doing.

Here's what I assembled:

import collections

import os

import sys

import time

from pathlib import Path

import cv2

import numpy as np

import torch

from IPython.display import Markdown, display

from openvino.runtime import Core

from models.experimental import attempt_load

import argparse

import random

device = 'cpu'

ie_core = Core()

sys.path.append("./utils")

import notebook_utils as utils

# from notebook_utils import CityScapesSegmentation, segmentation_map_to_image, viz_result_image # pip install nbutils

# settings :

IMAGE_WIDTH = 640 # Suggested values: 2048, 1024 or 512. The minimum width is 512.

# Set IMAGE_HEIGHT manually for custom input sizes. Minimum height is 512.

IMAGE_HEIGHT = 640 # if IMAGE_WIDTH == 2048 else 512

DIRECTORY_NAME = "mymodels"

BASE_MODEL_NAME = DIRECTORY_NAME + f"/best"

# Paths where PyTorch, ONNX and OpenVINO IR models will be stored.

model_path = Path(BASE_MODEL_NAME).with_suffix(".pth")

print(f"modelpath: {model_path}")

onnx_path = model_path.with_suffix(".onnx")

ir_path = model_path.with_suffix(".xml")

model = attempt_load( model_path.with_suffix(".pt"), map_location=device) # load model

model.train(False)

# #----------------------------------------------------------------------

# Convert PyTorch model to ONNX

if not onnx_path.exists():
    x = torch.randn(1, 3, IMAGE_HEIGHT, IMAGE_WIDTH, requires_grad=True)
    # For the Fastseg model, setting do_constant_folding to False is required
    # for PyTorch>1.5.1
    torch.onnx.export(
        model,
        x,
        onnx_path,
        opset_version=11
        # do_constant_folding=False,
    )
    print(f"ONNX model exported to {onnx_path}.")
else:
    print(f"ONNX model {onnx_path} already exists.")

#

#

# Convert ONNX Model to OpenVINO IR Format

# Construct the command for Model Optimizer.

mo_command = f"""mo
                 --input_model "{onnx_path}"
                 --input_shape "[1,3, {IMAGE_HEIGHT}, {IMAGE_WIDTH}]"
                 --mean_values="[123.675, 116.28 , 103.53]"
                 --scale_values="[58.395, 57.12 , 57.375]"
                 --data_type FP16
                 --output_dir "{model_path.parent}"
                 """
mo_command = " ".join(mo_command.split())
print("Model Optimizer command to convert the ONNX model to OpenVINO:")
display(Markdown(f"`{mo_command}`"))

if not ir_path.exists():
    print("Exporting ONNX model to IR... This may take a few minutes.")
    # mo_result = %sx $mo_command
    mo_result = os.system(mo_command)
    print("created onnx model")
else:
    print(f"IR model {ir_path} already exists.")

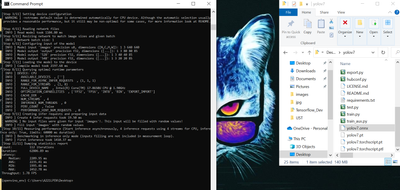

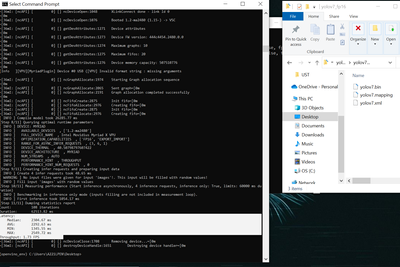

modelpath: mymodels\best.pth

Fusing layers...

RepConv.fuse_repvgg_block

RepConv.fuse_repvgg_block

RepConv.fuse_repvgg_block

IDetect.fuse

c:\ai\my\models\yolo.py:150: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!

if self.grid[i].shape[2:4] != x[i].shape[2:4]:

ONNX model exported to mymodels\best.onnx.

Model Optimizer command to convert the ONNX model to OpenVINO:

<IPython.core.display.Markdown object>

Exporting ONNX model to IR... This may take a few minutes.

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: c:\ai\my\mymodels\best.onnx

- Path for generated IR: c:\ai\my\mymodels

- IR output name: best

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: [1,3, 640, 640]

- Source layout: Not specified

- Target layout: Not specified

- Layout: Not specified

- Mean values: [123.675, 116.28 , 103.53]

- Scale values: [58.395, 57.12 , 57.375]

- Scale factor: Not specified

- Precision of IR: FP16

- Enable fusing: True

- User transformations: Not specified

- Reverse input channels: False

- Enable IR generation for fixed input shape: False

- Use the transformations config file: None

Advanced parameters:

- Force the usage of legacy Frontend of Model Optimizer for model conversion into IR: False

- Force the usage of new Frontend of Model Optimizer for model conversion into IR: False

OpenVINO runtime found in: c:\ai\00\env00\lib\site-packages\openvino

OpenVINO runtime version: 2022.2.0-7713-af16ea1d79a-releases/2022/2

Model Optimizer version: 2022.2.0-7713-af16ea1d79a-releases/2022/2

[ SUCCESS ] Generated IR version 11 model.

[ SUCCESS ] XML file: c:\ai\my\mymodels\best.xml

[ SUCCESS ] BIN file: c:\ai\my\mymodels\best.bin

[ SUCCESS ] Total execution time: 4.48 seconds.

[ INFO ] The model was converted to IR v11, the latest model format that corresponds to the source DL framework input/output format. While IR v11 is backwards compatible with OpenVINO Inference Engine API v1.0, please use API v2.0 (as of 2022.1) to take advantage of the latest improvements in IR v11.

Find more information about API v2.0 and IR v11 at https://docs.openvino.ai

created onnx model
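One thing that stands out in the log above: the mean and scale values appear to be the ImageNet normalization constants carried over from the fastseg notebook, whereas YOLOv7's own detect.py simply divides pixel values by 255. The Model Optimizer bakes `(pixel - mean) / scale` into the IR, so a quick bit of arithmetic shows what value range the network would see in each case. This is a hedged illustration, not a confirmed diagnosis; `normalized_range` is a hypothetical helper.

```python
def normalized_range(mean, scale, lo=0.0, hi=255.0):
    """Smallest and largest value produced by (pixel - mean) / scale
    over all channels, for pixel values between lo and hi."""
    mins = [(lo - m) / s for m, s in zip(mean, scale)]
    maxs = [(hi - m) / s for m, s in zip(mean, scale)]
    return min(mins), max(maxs)


# Values from the mo command in this thread (fastseg / ImageNet style).
FASTSEG_STYLE = normalized_range([123.675, 116.28, 103.53],
                                 [58.395, 57.12, 57.375])

# What a plain divide-by-255 gives, which is what YOLOv7 trains with.
DIVIDE_BY_255 = normalized_range([0.0, 0.0, 0.0], [255.0, 255.0, 255.0])

if __name__ == "__main__":
    print("fastseg-style range:", FASTSEG_STYLE)
    print("divide-by-255 range:", DIVIDE_BY_255)
```

A network trained on inputs in the 0 to 1 range but fed values from roughly -2 to 2.6 could well produce nonsense detections. If that is the cause here, dropping the mean/scale flags and dividing by 255 on the host, or using `--scale 255` together with `--reverse_input_channels` for BGR images from OpenCV, might be worth trying.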

Then I try to run inference and show the result. In most cases I get an error. Just now I messed with it enough that I am no longer getting a conversion or inference error; the error I have here seems to be with my Python code, which comes next.

I got this code from OpenVINO Jupyter notebook 004:

openvino_notebooks/004-hello-detection.ipynb at main · openvinotoolkit/openvino_notebooks · GitHub

and tried to modify it to fit this application. I'm not good at Python. I can mostly read it until I get to a procedure that is very language specific (line 56). It would be ideal for me to use Python for basic operations and pipe results into PHP so I can be useful to this effort. This is why I tried asking for general directions instead of displaying my poor Python coding. Is any of this accurate, routine procedure? How can I do better?

# from openvino.runtime import Core

#

ie = Core()

classification_model_xml = ir_path

model = ie.read_model(model=classification_model_xml)

print(f"model any_name : {model.input(0).any_name}")

input_layer = model.input(0)

print(f"input precision: {input_layer.element_type}")

print(f"input shape: {input_layer.shape}")

output_layer = model.output(0)

print(f"output precision: {output_layer.element_type}")

print(f"output shape: {output_layer.shape}")

compiled_model = ie.compile_model(model=model, device_name="CPU")

input_layer = compiled_model.input(0)

output_layer = compiled_model.output(0)

image_filename = "assets/demoimage.jpg"

image = cv2.imread(image_filename)

print(f"image shape {image.shape}")

N, C, H, W = input_layer.shape

resized_image = cv2.resize(src=image, dsize=(W, H))

print(f"resized image shape : {resized_image.shape}")

input_data = np.expand_dims(np.transpose(resized_image, (2, 0, 1)), 0).astype(np.float32)

print(f"input data shape : {input_data.shape}")

result = compiled_model([input_data])[output_layer]

result_index = np.argmax(result)

print(f"result index : {result_index}")

boxes = result

boxes = boxes[~np.all(boxes == 0, axis=2)]

# For each detection, the description is in the [x_min, y_min, x_max, y_max, conf] format:

# The image passed here is in BGR format with changed width and height. To display it in colors expected by matplotlib, use cvtColor function

def convert_result_to_image(bgr_image, resized_image, boxes, threshold=0.3, conf_labels=True):
    # Define colors for boxes and descriptions.
    colors = {"red": (255, 0, 0), "green": (0, 255, 0)}

    # Fetch the image shapes to calculate a ratio.
    (real_y, real_x), (resized_y, resized_x) = bgr_image.shape[:2], resized_image.shape[:2]
    ratio_x, ratio_y = real_x / resized_x, real_y / resized_y

    # Convert the base image from BGR to RGB format.
    rgb_image = cv2.cvtColor(bgr_image, cv2.COLOR_BGR2RGB)

    # Iterate through non-zero boxes.
    for box in boxes:
        # Pick a confidence factor from the last place in an array.
        conf = box[-1]
        if conf > threshold:
            # Convert float to int and multiply corner position of each box by x and y ratio.
            # If the bounding box is found at the top of the image,
            # position the upper box bar little lower to make it visible on the image.
            (x_min, y_min, x_max, y_max) = [
                int(max(corner_position * ratio_y, 10)) if idx % 2
                else int(corner_position * ratio_x)
                for idx, corner_position in enumerate(box[:-1])
            ]

            # Draw a box based on the position, parameters in rectangle function are: image, start_point, end_point, color, thickness.
            rgb_image = cv2.rectangle(rgb_image, (x_min, y_min), (x_max, y_max), colors["green"], 3)

            # Add text to the image based on position and confidence.
            # Parameters in text function are: image, text, bottom-left_corner_textfield, font, font_scale, color, thickness, line_type.
            if conf_labels:
                rgb_image = cv2.putText(
                    rgb_image,
                    f"{conf:.2f}",
                    (x_min, y_min - 10),
                    cv2.FONT_HERSHEY_SIMPLEX,
                    0.8,
                    colors["red"],
                    1,
                    cv2.LINE_AA,
                )

    return rgb_image

cv2.imshow("image", convert_result_to_image(image, resized_image, boxes))
cv2.waitKey(0)

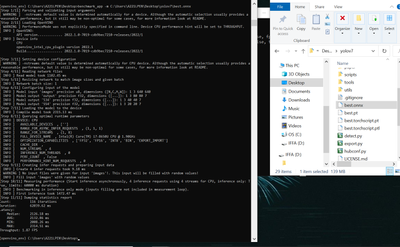

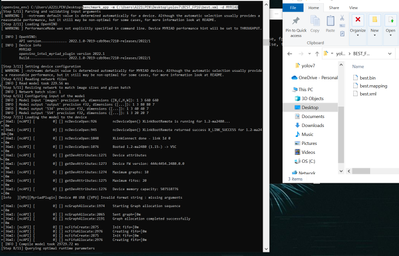

cv2.destroyAllWindows()

modelpath: mymodels\best.pth

Fusing layers...

RepConv.fuse_repvgg_block

RepConv.fuse_repvgg_block

RepConv.fuse_repvgg_block

IDetect.fuse

ONNX model mymodels\best.onnx already exists.

Model Optimizer command to convert the ONNX model to OpenVINO:

<IPython.core.display.Markdown object>

IR model mymodels\best.xml already exists.

model any_name : input.1

input precision: <Type: 'float32'>

input shape: {1, 3, 640, 640}

output precision: <Type: 'float32'>

output shape: {1, 25200, 7}

image shape (479, 299, 3)

resized image shape : (640, 640, 3)

input data shape : (1, 3, 640, 640)

result index : 174799

Traceback (most recent call last):

File "c:\ai\my\current.py", line 164, in <module>

cv2.imshow("image",convert_result_to_image(image, resized_image, boxes));

File "c:\ai\my\current.py", line 139, in convert_result_to_image

(x_min, y_min, x_max, y_max) = [

ValueError: too many values to unpack (expected 4)

I am familiar with JavaScript and PHP loops, and I can reasonably work with Python. I fear that when I figure out this specific language block, it will only expose an underlying bug which caused the code block to malfunction. I still do not know whether my conversion was accurate, and trying to get results is confusing. Can anyone see where I messed up?
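A note on the ValueError itself: the drawing function was written for the notebook's detection model, whose rows are `[x_min, y_min, x_max, y_max, conf]`, so `box[:-1]` has exactly four values. The output here has shape (1, 25200, 7), so each row has seven values and `box[:-1]` has six, which cannot be unpacked into four names. Assuming the usual YOLO layout of `[cx, cy, w, h, objectness, class scores...]` (an assumption; the thread does not confirm it), a minimal pure-Python sketch of turning such rows into corner boxes with a simple greedy non-maximum suppression could look like this. The function names and thresholds are illustrative only.

```python
def decode_yolo_rows(rows, conf_threshold=0.3):
    """Convert rows of [cx, cy, w, h, objectness, class scores...] into
    (x_min, y_min, x_max, y_max, confidence, class_id) tuples."""
    detections = []
    for row in rows:
        cx, cy, w, h, obj = row[:5]
        class_scores = row[5:]
        class_id = max(range(len(class_scores)), key=lambda i: class_scores[i])
        conf = obj * class_scores[class_id]
        if conf > conf_threshold:
            detections.append((cx - w / 2, cy - h / 2,
                               cx + w / 2, cy + h / 2, conf, class_id))
    return detections


def iou(a, b):
    """Intersection over union of two corner-format boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def nms(detections, iou_threshold=0.45):
    """Greedy NMS: keep the most confident box, drop same-class overlaps."""
    kept = []
    for det in sorted(detections, key=lambda d: d[4], reverse=True):
        if all(k[5] != det[5] or iou(k, det) <= iou_threshold for k in kept):
            kept.append(det)
    return kept
```

With the code above it would be used roughly as `kept = nms(decode_yolo_rows(result[0].tolist()))`. The coordinates would be in pixels of the 640x640 network input, so they still need scaling by `ratio_x` and `ratio_y` before drawing on the original image. For 25200 rows a NumPy version would be much faster; this version favors readability.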

My replies were not posting, so I retried several times. Voila, about an hour later they all posted the same message.

A .pt file is usually the saved format for models trained with PyTorch.

Could you share your relevant model files and the conversion commands that you used? I'll validate from my side.

Sincerely,

Iffa

Here is a link to the weights file:

https://drive.google.com/file/d/100BIULHH_FTV4y6WPNmJGE03EwHpZYy8/view?usp=share_link

The weights file works fine in YOLOv7 and gives me the output I am seeking. The problem is that YOLOv7 only runs on my CPU, and it is slow. I have 2x NCS2 sticks and would like to get a custom model running on them.
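For context on targeting the sticks: in OpenVINO releases that still ship the NCS2 plugin, the stick is addressed with the device name `MYRIAD`, and several devices can be combined through the `MULTI:` prefix. The helper below is a hypothetical sketch (the name `pick_device` is not an OpenVINO API) that chooses a device string from whatever list the runtime reports. It assumes the converted IR contains only layers the MYRIAD plugin supports, which is not guaranteed for every model.

```python
def pick_device(available_devices):
    """Prefer NCS2 (MYRIAD) devices; fall back to CPU when none is plugged in."""
    myriads = [name for name in available_devices if name.startswith("MYRIAD")]
    if len(myriads) > 1:
        # Spread inference requests across all sticks via the MULTI plugin.
        return "MULTI:" + ",".join(myriads)
    if myriads:
        return myriads[0]
    return "CPU"


# Intended usage (requires OpenVINO, so it is left as a comment here):
#   from openvino.runtime import Core
#   core = Core()
#   device = pick_device(core.available_devices)
#   compiled_model = core.compile_model(model=model, device_name=device)
```

The inference script later in this thread hard-codes `device_name="CPU"`, so swapping in a device string chosen this way would be the point where the NCS2 comes into play.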

Here is a demo image that the model should run detection on:

I'm not sure what additional info I can post.

Here is the YOLOv7 command I used to generate the weights file "best.pt" in Google Colab:

!python train.py --device 0 --batch-size 16 --epochs 300 --img 640 640 --data data/coco.yaml --hyp data/hyp.scratch.custom.yaml --cfg cfg/training/yolov7.yaml --weights yolov7.pt --name goodies

The Python code I used to convert the model was lifted and modified from this reference:

Convert a PyTorch Model to ONNX and OpenVINO™ IR — OpenVINO™ documentation — Version(latest)

Here is the conversion code I used:

# Imports this snippet relies on. They are not shown in the original post;
# attempt_load is presumed to come from the YOLOv7 repository (models/experimental.py).
import os
import sys
from pathlib import Path

import torch
from IPython.display import Markdown, display
from openvino.runtime import Core
from models.experimental import attempt_load

device = 'cpu'
ie_core = Core()

sys.path.append("./utils")
import notebook_utils as utils
# from notebook_utils import CityScapesSegmentation, segmentation_map_to_image, viz_result_image # pip install nbutils

# settings :
IMAGE_WIDTH = 640  # Suggested values: 2048, 1024 or 512. The minimum width is 512.
# Set IMAGE_HEIGHT manually for custom input sizes. Minimum height is 512.
IMAGE_HEIGHT = 640  # if IMAGE_WIDTH == 2048 else 512
DIRECTORY_NAME = "mymodels"
BASE_MODEL_NAME = DIRECTORY_NAME + "/best"

# Paths where PyTorch, ONNX and OpenVINO IR models will be stored.
# Note: model_path ends in .pth (as printed), but the weights are loaded from best.pt below.
model_path = Path(BASE_MODEL_NAME).with_suffix(".pth")
print(f"modelpath: {model_path}")
onnx_path = model_path.with_suffix(".onnx")
ir_path = model_path.with_suffix(".xml")

model = attempt_load(model_path.with_suffix(".pt"), map_location=device)  # load model
model.train(False)

# ----------------------------------------------------------------------
# Convert PyTorch model to ONNX
if not onnx_path.exists():
    x = torch.randn(1, 3, IMAGE_HEIGHT, IMAGE_WIDTH, requires_grad=True)
    # For the Fastseg model, setting do_constant_folding to False is required
    # for PyTorch>1.5.1
    torch.onnx.export(
        model,
        x,
        onnx_path,
        opset_version=11
        # do_constant_folding=False,
    )
    print(f"ONNX model exported to {onnx_path}.")
else:
    print(f"ONNX model {onnx_path} already exists.")

# Convert ONNX Model to OpenVINO IR Format
# Construct the command for Model Optimizer.
mo_command = f"""mo
                 --input_model "{onnx_path}"
                 --input_shape "[1,3, {IMAGE_HEIGHT}, {IMAGE_WIDTH}]"
                 --mean_values="[123.675, 116.28 , 103.53]"
                 --scale_values="[58.395, 57.12 , 57.375]"
                 --data_type FP16
                 --output_dir "{model_path.parent}"
                 """
mo_command = " ".join(mo_command.split())
print("Model Optimizer command to convert the ONNX model to OpenVINO:")
display(Markdown(f"`{mo_command}`"))

if not ir_path.exists():
    print("Exporting ONNX model to IR... This may take a few minutes.")
    # mo_result = %sx $mo_command
    mo_result = os.system(mo_command)
    print("created IR model")
else:
    print(f"IR model {ir_path} already exists.")

From here I have my best.bin and best.xml files, but I do not know whether they are working properly. To attempt detection and classification, I use code lifted and modified from this page, but the code fails: openvino_notebooks/notebooks/004-hello-detection at main · openvinotoolkit/openvino_notebooks · GitHub

I'm not good at Python. Should I also re-post the code I used to attempt inference detection and classification?

Here it is:

# Imports needed by this part of the script (not shown in the original post).
import cv2
import numpy as np

ie = Core()
classification_model_xml = ir_path
model = ie.read_model(model=classification_model_xml)
print(f"model any_name : {model.input(0).any_name}")

input_layer = model.input(0)
print(f"input precision: {input_layer.element_type}")
print(f"input shape: {input_layer.shape}")

output_layer = model.output(0)
print(f"output precision: {output_layer.element_type}")
print(f"output shape: {output_layer.shape}")

compiled_model = ie.compile_model(model=model, device_name="CPU")
input_layer = compiled_model.input(0)
output_layer = compiled_model.output(0)

image_filename = "assets/demoimage.jpg"
image = cv2.imread(image_filename)
print(f"image shape {image.shape}")

N, C, H, W = input_layer.shape
resized_image = cv2.resize(src=image, dsize=(W, H))
print(f"resized image shape : {resized_image.shape}")

input_data = np.expand_dims(np.transpose(resized_image, (2, 0, 1)), 0).astype(np.float32)
print(f"input data shape : {input_data.shape}")

result = compiled_model([input_data])[output_layer]
result_index = np.argmax(result)
print(f"result index : {result_index}")

boxes = result
boxes = boxes[~np.all(boxes == 0, axis=2)]

# For each detection, the description is in the [x_min, y_min, x_max, y_max, conf] format:
# (that is the notebook model's format; this model's output has 7 values per row, see the log above)
# The image passed here is in BGR format with changed width and height. To display it in colors expected by matplotlib, use cvtColor function
def convert_result_to_image(bgr_image, resized_image, boxes, threshold=0.3, conf_labels=True):
    # Define colors for boxes and descriptions.
    colors = {"red": (255, 0, 0), "green": (0, 255, 0)}

    # Fetch the image shapes to calculate a ratio.
    (real_y, real_x), (resized_y, resized_x) = bgr_image.shape[:2], resized_image.shape[:2]
    ratio_x, ratio_y = real_x / resized_x, real_y / resized_y

    # Convert the base image from BGR to RGB format.
    rgb_image = cv2.cvtColor(bgr_image, cv2.COLOR_BGR2RGB)

    # Iterate through non-zero boxes.
    # for box in boxes:
    #     # Pick a confidence factor from the last place in an array.
    #     conf = box[-1]
    #     if conf > threshold:
    #         # Convert float to int and multiply corner position of each box by x and y ratio.
    #         # If the bounding box is found at the top of the image,
    #         # position the upper box bar little lower to make it visible on the image.
    #         (x_min, y_min, x_max, y_max) = [
    #             int(max(corner_position * ratio_y, 10)) if idx % 2
    #             else int(corner_position * ratio_x)
    #             for idx, corner_position in enumerate(box[:-1])
    #         ]
    #
    #         # Draw a box based on the position, parameters in rectangle function are: image, start_point, end_point, color, thickness.
    #         rgb_image = cv2.rectangle(rgb_image, (x_min, y_min), (x_max, y_max), colors["green"], 3)
    #
    #         # Add text to the image based on position and confidence.
    #         # Parameters in text function are: image, text, bottom-left_corner_textfield, font, font_scale, color, thickness, line_type.
    #         if conf_labels:
    #             rgb_image = cv2.putText(
    #                 rgb_image,
    #                 f"{conf:.2f}",
    #                 (x_min, y_min - 10),
    #                 cv2.FONT_HERSHEY_SIMPLEX,
    #                 0.8,
    #                 colors["red"],
    #                 1,
    #                 cv2.LINE_AA,
    #             )

    return rgb_image

cv2.imshow("image", convert_result_to_image(image, resized_image, boxes))
cv2.waitKey(0)
cv2.destroyAllWindows()
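One thing that may be worth checking in the conversion step earlier in this post: the `--mean_values` and `--scale_values` passed to Model Optimizer are the ImageNet statistics carried over from the segmentation notebook, whereas YOLOv7's own inference script feeds RGB images scaled to the 0-1 range. The sketch below assembles an alternative command under that assumption, using `--scale 255` and `--reverse_input_channels` so the IR can accept the 0-255 BGR frames that `cv2.imread` returns. The helper name `build_mo_command` is hypothetical, and whether this normalization matches a given training run should be verified against the training code.

```python
def build_mo_command(onnx_path, output_dir, height=640, width=640):
    """Assemble a Model Optimizer command line for a YOLO-style ONNX export.

    Assumes the network was trained on RGB input scaled to [0, 1].
    """
    args = [
        "mo",
        "--input_model", f'"{onnx_path}"',
        "--input_shape", f'"[1,3,{height},{width}]"',
        "--scale", "255",             # divide every input value by 255
        "--reverse_input_channels",   # let the IR take BGR frames from OpenCV
        "--data_type", "FP16",        # same precision flag as in the post
        "--output_dir", f'"{output_dir}"',
    ]
    return " ".join(args)


mo_command = build_mo_command("mymodels/best.onnx", "mymodels")
print(mo_command)
```

Since the posted script skips conversion when best.xml already exists, the old IR files would need to be deleted before a changed command takes effect.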

I am trying. I am running into all the pitfalls.

As stated before, I am just trying to get the process going from start to finish, preferably finishing on my NCS2.

So I am trying the OpenVINO Training Extensions on my Google Colab account.

If I had known it would be best to start with an i7 PC with NVIDIA CUDA, I would have started with a better PC.

...Is it possible to create and run a Python virtual environment in Google Colab?

!git clone https://github.com/openvinotoolkit/training_extensions.git

%cd /content/training_extensions/

!git checkout develop

!git submodule update --init --recursive

!sudo apt-get install python3-pip python3-venv

!cd ote_sdk

%cd /content/training_extensions/ote_sdk/

!python setup.py install

%cd /content/training_extensions/

!pip install --upgrade virtualenv

!python3 --version; pip3 --version; virtualenv --version

!find external/ -name init_venv.sh

%cd /content/training_extensions/external/model-preparation-algorithm/

#this line outputs code, i don't think it does anything

!sh init_venv.sh .venv_mpa

%cd /content/training_extensions/

!pip3 install -e ote_cli/ -c external/model-preparation-algorithm/constraints.txt

!ote --help

and that is where this effort throws an error.

Traceback (most recent call last):

File "/usr/local/bin/ote", line 33, in <module>

sys.exit(load_entry_point('ote-cli', 'console_scripts', 'ote')())

File "/usr/local/bin/ote", line 25, in importlib_load_entry_point

return next(matches).load()

File "/usr/local/lib/python3.7/dist-packages/importlib_metadata/__init__.py", line 207, in load

module = import_module(match.group('module'))

File "/usr/lib/python3.7/importlib/__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "<frozen importlib._bootstrap>", line 1006, in _gcd_import

File "<frozen importlib._bootstrap>", line 983, in _find_and_load

File "<frozen importlib._bootstrap>", line 967, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 677, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 728, in exec_module

File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed

File "/content/training_extensions/ote_cli/ote_cli/tools/ote.py", line 22, in <module>

from .demo import main as ote_demo

File "<fstring>", line 1

(frame_index=)

^

SyntaxError: invalid syntax

I cannot see where I went wrong.
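A likely reading of this traceback: the paths show the Colab runtime using Python 3.7, and `(frame_index=)` inside `<fstring>` is how Python 3.7 reports the `=` specifier in an f-string such as `f"{frame_index=}"`, which was only added in Python 3.8. If so, the install steps are not at fault; the `ote_cli` code simply needs a newer interpreter. The snippet below is a small illustration of the difference; the variable and function names are made up for the example.

```python
import sys

frame_index = 12

# Python 3.8+ only: the "=" specifier prints the expression and its value.
# On Python 3.7 the line below is a SyntaxError when the module is imported.
# message = f"{frame_index=}"

# Equivalent spelling that also parses on Python 3.7:
message = f"frame_index={frame_index!r}"


def supports_fstring_equals(version_info=sys.version_info):
    """Return True when the interpreter understands f"{name=}" (3.8 or newer)."""
    return tuple(version_info[:2]) >= (3, 8)


print(message)
print("f-string '=' supported:", supports_fstring_equals())
```

Running `!python3 --version` in the notebook, as the commands above already do, shows which interpreter the `ote` entry point will use.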

I am going to try again with YOLOv5 and train another model today.

While trying to wrap my head around the problem, I came across this guide, which I will try next.

GitHub - bethusaisampath/YOLOs_OpenVINO: Latest YOLO models inferencing using Intel Openvino toolkit
