
Colleagues,

We have a radiative transfer iterative solver that is threaded. Calculation points are placed on all the surfaces involved; small surfaces with a few points, large surfaces with many. The result is a large collection of points at which flux from all surfaces in their current state is calculated.

The calculation loop is threaded and when it's finished the flux onto all the surfaces is updated (points are grouped and their aggregate effect determined on their 'mother surface'). This cycle is repeated 3 times. But this structure requires that the loop threading setup is performed (again) for each cycle, even though its structure does not change. The time it takes OpenMP to set up the (big) loop is considerable.

To avoid this unnecessary work, we thought of the following:

- Triple the loop length (three passes over all points).

- Have all threads stop when the last point on the last surface has been calculated.

- Update the state of all surfaces.

- Resume loop execution to process the second third of the total points, and so on.

Even if "monotonic" is added to the schedule so the points are processed in order, it isn't clear that the above process is possible in OpenMP.

Any suggestions? Or is such a process not possible?
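For reference, the "stop, update, resume" scheme described above can be sketched in OpenMP without tripling the loop: open the parallel region once, outside the cycle loop, and put a worksharing `!$omp do` inside it. The implicit barrier at `!$omp end do` is the "all threads stop" point, and a `!$omp single` block performs the surface update while the other threads wait at its implicit barrier. This is a minimal sketch under stated assumptions: the module, `run_cycles`, `point_flux`, `point_surface` and the toy flux formula are hypothetical stand-ins, not the poster's code.

```fortran
module cycle_sketch
  implicit none
contains
  ! Hypothetical stand-in for the per-point "heavy lifting":
  ! the flux at a point depends on the current state of its surface.
  pure function point_flux(state) result(f)
    real(8), intent(in) :: state
    real(8) :: f
    f = 0.5d0 * state + 1.0d0
  end function point_flux

  subroutine run_cycles(ncycles, npoints, nsurf, point_surface, surf_state)
    integer, intent(in) :: ncycles, npoints, nsurf
    integer, intent(in) :: point_surface(npoints)   ! "mother surface" of each point
    real(8), intent(inout) :: surf_state(nsurf)
    real(8) :: flux(npoints), surf_sum(nsurf)       ! shared scratch arrays
    integer :: icycle, ip, is

    ! One parallel region for all cycles: the team is entered only once.
    !$omp parallel default(shared) private(icycle, ip, is)
    do icycle = 1, ncycles
       ! Worksharing loop: the points are split among the threads.
       !$omp do schedule(static)
       do ip = 1, npoints
          flux(ip) = point_flux(surf_state(point_surface(ip)))
       end do
       !$omp end do
       ! Implicit barrier above: every point of this cycle is finished.

       ! One thread aggregates point results onto their surfaces;
       ! the others wait at the implicit barrier of END SINGLE.
       !$omp single
       surf_sum = 0.0d0
       do ip = 1, npoints
          surf_sum(point_surface(ip)) = surf_sum(point_surface(ip)) + flux(ip)
       end do
       do is = 1, nsurf
          surf_state(is) = surf_sum(is)
       end do
       !$omp end single
    end do
    !$omp end parallel
  end subroutine run_cycles
end module cycle_sketch
```

Every thread executes the `do icycle` loop itself, so all threads encounter the same sequence of `!$omp do` and `!$omp single` constructs, as OpenMP requires. Arrays listed as `private` or `firstprivate` on the outer `!$omp parallel` would be set up once per run rather than once per cycle.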


This issue is likely more complicated (subtle?) than the simplicity of your explanation.

What do you mean by "loop threading setup"?

To me (us), your description sketches out as:

```fortran
...
do rep=1,3
  ...
  !$omp parallel do
  do vertex=1,nVerticies
    ...
  end do
  !$omp end parallel do
  ...
end do
...
```

In that case, the loop threading setup (creation of the OpenMP thread team) occurs only once, not three times. Note: if the run time between the "!$omp end parallel do" and the "!$omp parallel do" of the next iteration is less than the blocktime (200 ms by default), there will be no thread suspend/wake-up overhead. If the time between successive entries into the parallel region is longer than the blocktime, you will incur the latency of resuming the paused (suspended) threads, but not the overhead of thread creation. Even when threads must be woken up, the added latency is potentially less than 1 ms.
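One way to check this on your own machine is to time repeated entries into an otherwise empty parallel region: the first entry includes creating the thread team, later entries only reuse (or wake) it. This is a minimal sketch; `region_entry_times` is a hypothetical helper, and it uses the `system_clock` intrinsic rather than `omp_get_wtime` so that it also compiles without OpenMP. With the Intel runtime, the spin-wait period mentioned above is controlled by the `KMP_BLOCKTIME` environment variable (in milliseconds).

```fortran
module region_timing
  use, intrinsic :: iso_fortran_env, only: int64
  implicit none
contains
  ! Hypothetical helper: wall-clock seconds for each of nrep
  ! back-to-back entries into an empty parallel region.
  subroutine region_entry_times(nrep, t)
    integer, intent(in) :: nrep
    real(8), intent(out) :: t(nrep)
    integer(int64) :: c0, c1, rate
    integer :: k
    call system_clock(count_rate=rate)
    do k = 1, nrep
       call system_clock(c0)
       !$omp parallel
       !$omp end parallel
       call system_clock(c1)
       t(k) = real(c1 - c0, 8) / real(rate, 8)
    end do
  end subroutine region_entry_times
end module region_timing
```

On most systems `t(1)` is expected to be noticeably larger than the later entries, which stay small as long as the gap between regions is shorter than the blocktime. Inserting a sleep between entries that exceeds the blocktime would expose the wake-up latency instead.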

Something else is going on.

A closer inspection of your code would be necessary to provide constructive advice.

Jim Dempsey


Jim,

Here are the relevant code sections. The firstprivate variables are arrays and need only be initialized at the start of the first cycle.

CycleLoop: &

do Icycle = 1,NumberOfCycles

...

!$OMP parallel do default(private),&

!$omp & firstprivate(RayWeight, LastRTGroup, LastBlockerGroup), &

!$omp & shared(

... 55 shared scalar, vector, and array variables listed here

!$omp & ), &

!$omp & reduction(+:SurfaceEo), &

!$omp & schedule(auto)

PointLoop: &

do NumPoint = 1,NumOfCalcPointsToProcessPerPass

... heavy lifting done here

end do PointLoop

!$OMP end parallel do

... point data after each cycle is aggregated here

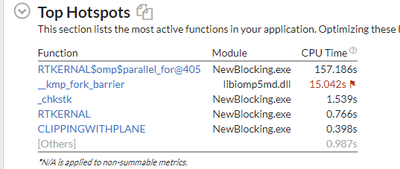

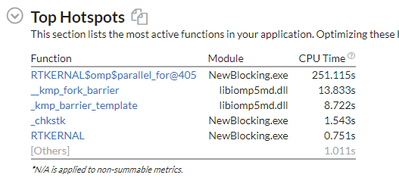

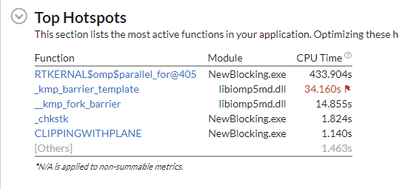

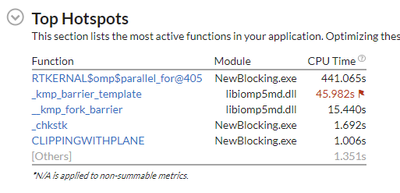

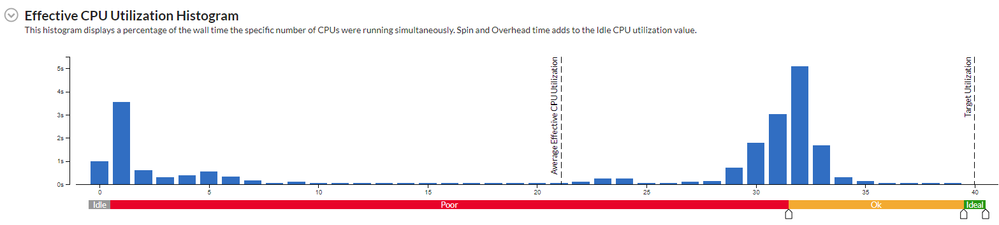

end do CycleLoopUsing a reduced point count (just for demonstration), I ran the code with NumberOfCycles = 1,2,5,10. Here is what VTune reports for these four cycle counts. 1 and 2 cycles in the first row, 5 and10 cycles in the 2nd row:

The machine has 20 physical cores and 20 virtual. The time spent in the point loop is not growing (as much) with the cycle count, since the number of point in these example runs are relatively small.

The CPU usage looks like this and changes only slightly with number of cycles:


| nCyc | NewBlocking | Barrier |
|---:|---:|---:|
| 1 | 157.186 | 15.042 |
| 2 | 251.115 | 13.833 |
| 5 | 433.904 | 34.160 |
| 10 | 441.982 | 45.982 |

The barrier time for 1 cycle indicates that the workload (execution time) per thread within NewBlocking is not evenly balanced.

In this test, there are likely two implicit barriers: one immediately after the copy-in phase of the firstprivate variables, and a second at the close of the parallel region. The implicit barrier after the first iteration's firstprivate copy-in will likely also see the latency of establishing the thread pool (assuming it has not already been created). Creating the 39 additional threads is cheap in itself (the thread context is small, almost negligible), but if your firstprivate variables are very large arrays, copying them may induce large numbers of page faults while unmapped virtual addresses are assigned to pagefile pages and physical RAM.

The second cycle (in the 2-cycle run) will experience neither the thread creation nor the mapping of pagefile and RAM. The fact that the barrier time went down from 1 to 2 cycles is odd, and indicates that factors other than the computation are affecting the runtime behavior of your program.

I suggest you use the "Bottom Up" view. Open the procedure containing CycleLoop (?RTKERNEL), then observe the line-by-line timing both for all threads and for each thread.

You can also expand suspected lines to disassembly to see if there are unexpected bottlenecks.

Jim Dempsey


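Additionally, looking at the histogram, it appears your loop may be structured like this:

```fortran
do NumPoint = 1,NumOfCalcPointsToProcessPerPass
  ...
  do aPoint = NumPoint+1,NumOfCalcPointsToProcessPerPass
    ...
  end do
  ...
end do
```

IOW, the workload is inherently unbalanced: early outer iterations execute many more inner iterations than later ones.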

If that is the case, you will need to rework your algorithm to produce a balanced workload.
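To see why a loop shaped like this is unbalanced, count the inner iterations each thread receives: outer iteration `i` does `n - i` inner iterations. The sketch below models two splits under stated assumptions: `block_load` assumes `schedule(static)` hands each thread one contiguous block of `ceiling(n/nthr)` iterations (a common implementation; the OpenMP specification only requires approximately equal chunks), and `cyclic_load` models `schedule(static, chunk)`, where chunks are dealt round-robin. Both routine names are hypothetical.

```fortran
module triangle_load
  implicit none
contains
  ! Model of schedule(static) for "do i = 1,n; do j = i+1,n":
  ! contiguous blocks of ceiling(n/nthr) outer iterations per thread.
  subroutine block_load(n, nthr, work)
    integer, intent(in) :: n, nthr
    integer, intent(out) :: work(0:nthr-1)   ! inner iterations per thread
    integer :: i, b
    b = (n + nthr - 1) / nthr
    work = 0
    do i = 1, n
       work((i - 1) / b) = work((i - 1) / b) + (n - i)
    end do
  end subroutine block_load

  ! Model of schedule(static, chunk): chunks dealt round-robin.
  subroutine cyclic_load(n, nthr, chunk, work)
    integer, intent(in) :: n, nthr, chunk
    integer, intent(out) :: work(0:nthr-1)
    integer :: i, t
    work = 0
    do i = 1, n
       t = mod((i - 1) / chunk, nthr)
       work(t) = work(t) + (n - i)
    end do
  end subroutine cyclic_load
end module triangle_load
```

For `n = 8` on 4 threads, the block split gives per-thread work 13, 9, 5, 1 (the last thread spends most of its time at the barrier), while round-robin chunks of 1 give 10, 8, 6, 4. For a strictly triangular loop, another option is to pair outer iteration `i` with `n + 1 - i`, since each pair does the same `n - 1` inner iterations.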

Jim Dempsey


An additional note:

You are using schedule(auto). I suggest you change this to: schedule(static, 1).

If this improves the workload distribution, then experiment with increasing the chunk size from 1 to 2, 3, 4, ...

With auto, the compiler/runtime may have chosen a non-optimal scheduling method.
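Rather than recompiling for each chunk size, one option is to declare the loop `schedule(runtime)` and select the schedule before entering it with `omp_set_schedule` (or the `OMP_SCHEDULE` environment variable, e.g. `OMP_SCHEDULE="static,4"`). This minimal sketch wraps a hypothetical triangular workload (`triangle_sum` is made up for illustration) in a function that takes the chunk size as an argument, so a driver can call it for chunk = 1, 2, 4, ... and time each call. The `!$` sentinel lines are compiled only when OpenMP is enabled, so the code also builds serially.

```fortran
module chunk_sweep
  use, intrinsic :: iso_fortran_env, only: int64
  !$ use omp_lib
  implicit none
contains
  ! Hypothetical triangular workload: outer iteration i does n - i
  ! inner iterations. Returns the total inner-iteration count.
  function triangle_sum(n, chunk) result(total)
    integer, intent(in) :: n, chunk
    integer(int64) :: total
    integer(int64) :: acc
    integer :: i, j
    ! Static scheduling with the requested chunk size, applied to
    ! subsequent loops declared schedule(runtime).
    !$ call omp_set_schedule(omp_sched_static, chunk)
    acc = 0
    !$omp parallel do schedule(runtime) reduction(+:acc) private(j)
    do i = 1, n
       do j = i + 1, n
          acc = acc + 1
       end do
    end do
    !$omp end parallel do
    total = acc
  end function triangle_sum
end module chunk_sweep
```

The result must not depend on the chunk size, only the run time should. Note that `omp_set_schedule` changes the calling thread's runtime schedule for every later `schedule(runtime)` loop, not just this one.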

Jim Dempsey
