We have a production code that shows significantly worse performance with recent versions of ifort (including oneAPI 2022.1 and ifort version 19.0) than with ifort version 16.0.

I have used VTune to identify a single subroutine responsible for this performance slowdown in a particular test problem.

When the code is built with ifort version 16.0 this routine takes just under 4 seconds.

When the code is built with oneAPI 2022 (ifort) this routine takes more than 21 seconds. This additional CPU time is almost entirely spent in for_alloc_assign_v2 (9.7 sec) and for_alloc_copy (6.2 sec). I don't see any time spent in these routines at all in the ifort 16.0 case.

The subroutine in question is a complicated piece of legacy code and I have not yet managed to reproduce the same behaviour in a minimal example. It may be possible to refactor the code to avoid repeated allocation and deallocation, but first I want to understand why the same code built with different ifort versions shows such different behaviour and performance.

Thanks.

Plus an additional 1.6 seconds in for_dealloc_all_nocheck in the oneAPI case.

Are these routines your code or part of the Intel Fortran Runtime System?

If your code, can you please post?

Also, a VTune screenshot of each routine for each compiler might be insightful.

Jim Dempsey

for_alloc_assign_v2, for_alloc_copy and for_dealloc_all_nocheck are (I assume) part of the Intel Fortran Runtime system. They all appear as children of a routine which is part of our code when compiled with ifort 2022 but not when compiled with ifort 2016.

I would have to redact some parts of the VTune screenshot but it might be possible.

I notice that ifort 2016 is statically linking with -lifcore but ifort 2022 is statically linking with -lifcoremt (multithreaded I assume). Could this be part of the story? Is there an option to tell it not to use multithreaded libraries?

Just to avoid ambiguity: the routine which calls the for_alloc* routines is always part of our code regardless of which compiler we use. But those for_alloc* routines do not appear to be called (or at least take significant time) when we compile with ifort 2016.

>>I notice that ifort 2016 is statically linking with -lifcore but ifort 2022 is statically linking with -lifcoremt (multithreaded I assume)

If your application is multi-threaded you should not link with the single threaded libraries.

Are you compiling as multi-threaded? Are you multi-threaded?

Jim Dempsey

I inadvertently deleted some text.

The ...alloc... routines are presumably heap-related routines. Multi-threaded applications require (internal runtime library) critical sections when managing the heap whereas single-threaded applications do not.

Keep in mind that there are other places in the mt library that require (internal runtime library) critical sections.

IOW if your program is multi-threaded - do not link with the single threaded library. Adverse interactions (aka race conditions) might not show up in testing but can (and usually do) show up in production code.

Jim Dempsey

It’s complicated. The code I’m looking at is not multithreaded, it is MPI parallel. But it is part of a shared codebase with another code which is multithreaded using OpenMP.

Those routines were not used in version 16, which is why you don't see them. Instead, inline code performed the action, but it couldn't implement the full semantics the standard required so a run-time routine was created. IIRC, I wrote the code in for_alloc_assign_v2.

That’s interesting. Is there a reason why the run-time routine would perform badly compared with the previous inline code?

>>The code I’m looking at is not multithreaded

>>But it is part of a shared codebase with another code which is multithreaded using OpenMP.

If the two are used together within the same application, then the application is multi-threaded.

(MPI is not multi-threaded; it is multi-process.)

IIF the two are used separately, .AND. you are making a library, then consider making two libraries: single-threaded and multi-threaded.

(.OR. supply the source of those procedures making the calls such that they can be compiled with and without mt).

Steve>>I wrote the code in for_alloc_assign_v2

For mt compile, do you explicitly use a critical section .OR. do you rely on the mt version of the CRTL heap manager (malloc/free) to perform the critical section?

Jim Dempsey

Simon,

Should Steve reply that the critical section is not in for_alloc_... but rather in the mt version of malloc/free, then consider replacing malloc/free... with the TBB scalable allocator.

Note, I haven't done this recently, so you may have a little bit of experimentation to do (e.g. messing with library load order).

Intel, I've noticed that in some (at least) earlier versions of ifort that OpenMP !$omp task was using parts of TBB (inclusive of the scalable allocator). If this is still the case, then malloc/free could be quite easily overloaded as well and potentially be available as a compiler option (if not now, then later).

Jim Dempsey

It's been years (at least six!) but the routine I wrote called another Fortran library routine to do the actual allocations, never malloc directly. It is thread-safe.

>>the routine I wrote called another Fortran library routine to do the actual allocations, never malloc directly

Then, would you know if this other Fortran library routine manages its own heap or calls malloc/free?

The point isn't whether your code is thread-safe, it is where (how) the thread-safety is implemented (e.g. mutex).

a) If the thread-safe Fortran-supplied routine contains its own heap manager, then not much can be done by the user.

b) If the Fortran-supplied routine calls upon the thread-safe (mt) version of the CRTL malloc/free, then the user may potentially be able to substitute malloc/free with the TBB scalable allocator.

For those not in the know, the TBB scalable allocator can be thought of as a filter function for malloc/free (new/delete) that buffers allocations/deallocations within threadprivate areas without locks and a common pool with locks. This drastically reduces the frequency of critical section (mutex) entries and exits.

Jim Dempsey

The code which shows the problem is not multithreaded.

I only raised the question of the multithreaded library in case it's doing a lot of extra work to ensure thread safety and causing reduced performance in single-threaded applications as a result.

I realize that there is not much to work with without example code, but I cannot share the production code and have not yet managed to produce a minimum example.

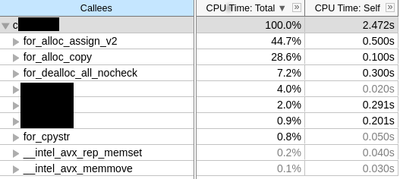

Here are the "Callees" from VTune for the problem routine (redacted name beginning with "c") compiled with oneapi 2022 (ifort). All the redacted names are in our production code.

This routine took 21.7 seconds (26.1%) of the total CPU time of 83.1 seconds. Most of that time is spent in for_alloc_assign_v2, for_alloc_copy and for_dealloc_all_nocheck rather than in the application code.

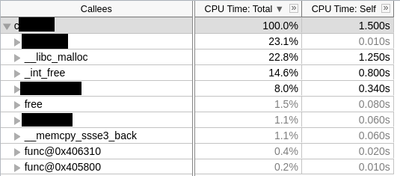

For comparison, the equivalent result from version 16 is

In this case the "c" routine takes 3.9 seconds (6.7%) of the total CPU time of 58.33 seconds.

Finally this is the result with gfortran 11.2

Here the "c" routine takes 5.5 seconds (7.9%) of the total CPU time of 69.3 seconds.

One of the changes to the defaults made between the older Intel Fortran versions and the newer ones is that the newer versions default to -realloc_lhs, whereas the older versions did not (or did not even have the ability to reallocate the LHS).

In some (possibly many) cases one may argue that the reallocation is unnecessary. There are options to modify this behavior:

/assume:<keyword>

specify assumptions made by the optimizer and code generator

keywords: ..., [no]realloc_lhs, ...

/standard-semantics

explicitly sets assume keywords to conform to the semantics of the Fortran standard. May result in performance loss.

assume keywords set by /standard-semantics: ..., realloc_lhs, ...

/[no]standard-realloc-lhs

explicitly sets assume keyword realloc_lhs to conform to the standard, or to override the default. Sets /assume:[no]realloc_lhs

Assuming that the bulk of your code was written prior to this change, you might experiment with adding the option

/nostandard-realloc-lhs

Note: should you have a few newer pieces of code that rely on this, you can omit this option for those source files.

And, if you have a source with a blend of usages you can elide unnecessary reallocations by changing:

array = ...

to

array(:) = ...

Jim Dempsey
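
For anyone following along, here is a minimal sketch of the difference between the two assignment forms. It is not taken from the code under discussion; the program and variable names are made up for illustration. Under the standard (realloc_lhs) semantics, a whole-array assignment to an allocatable array checks the shape of the left-hand side and reallocates it if it differs from the right-hand side. An array-section assignment never reallocates, so the shapes must already conform.

```fortran
program realloc_lhs_demo
  implicit none
  real, allocatable :: a(:)   ! illustrative names, not from the thread
  real :: b(5)

  b = 1.0
  allocate(a(3))

  ! Whole-array assignment: with realloc_lhs semantics the compiler
  ! checks the shape of a and reallocates it to match b.
  a = b
  print *, 'size after a = b:     ', size(a)

  ! Array-section assignment: a is not reallocated, so a must already
  ! be allocated with a shape that conforms to the right-hand side.
  a(:) = 2.0 * b
  print *, 'size after a(:) = ...:', size(a)
end program realloc_lhs_demo
```

Note that the first assignment relies on the reallocation, so this sketch is only valid when compiled with the standard semantics (that is, without /assume:norealloc_lhs or /nostandard-realloc-lhs).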

Thanks for the suggestion @jimdempseyatthecove but I’ve already considered the possibility that this might have been caused by that change.

I tried using -norealloc_lhs in version 2022 and that did not help. I also tried using -realloc_lhs in version 16 and didn’t see the performance degradation I see in 2022.

Memory tracing in the NAG Fortran compiler shows that the offending routine does have deallocates and allocates on some pointer assignments. These assignments are to quite complicated chains of derived types with allocatable components, some of which are pointers to other derived types. This complexity is why I’m having difficulty in reproducing the problem in a simple example, and may also be related to the performance problem itself. But ifort version 16 and gfortran version 11.2 seem to manage well.
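
To make the kind of assignment described above concrete, here is a minimal sketch. It is not the production code; the types `inner_t` and `outer_t` are hypothetical. It shows why a single assignment to a derived type with nested allocatable components can involve several deallocations and allocations: intrinsic assignment copies allocatable components deeply, whereas `move_alloc` transfers the existing allocation without copying. Whether this is what the runtime routines in the profile are doing in the real code is an assumption.

```fortran
program deep_copy_demo
  implicit none

  ! Hypothetical types, for illustration only.
  type :: inner_t
     real, allocatable :: values(:)
  end type inner_t

  type :: outer_t
     type(inner_t), allocatable :: items(:)
  end type outer_t

  type(outer_t) :: src, dst
  integer :: i

  allocate(src%items(3))
  do i = 1, 3
     allocate(src%items(i)%values(1000))
     src%items(i)%values = real(i)
  end do

  ! Intrinsic assignment of a derived type copies every allocatable
  ! component at every nesting level. Storage that dst already owns
  ! is released and new storage is allocated to match src.
  dst = src
  print *, 'deep copy:  ', size(dst%items), size(dst%items(1)%values)

  ! move_alloc transfers ownership of the allocation instead of
  ! copying it. Afterwards src%items is unallocated.
  call move_alloc(from=src%items, to=dst%items)
  print *, 'after move: ', allocated(src%items), size(dst%items)
end program deep_copy_demo
```

In code where the source object is no longer needed after the assignment, `move_alloc` is one way to avoid the copy; where both objects must stay valid, the deep copy is required by the language.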

Good tip on explicitly adding (:) to avoid unnecessary reallocations though.

@Simon_Richards1 wrote:

Good tip on explicitly adding (:) to avoid unnecessary reallocations though.

See this from @Steve_Lionel : https://stevelionel.com/drfortran/2008/03/31/doctor-it-hurts-when-i-do-this/

As the doctor writes, "(:)" can hurt too and badly, proceed with caution.

>>These assignments are to quite complicated chains of derived types with allocatable components, some of which are pointers to other derived types. This complexity is why I’m having difficulty in reproducing the problem in a simple, example, and may also be related to the performance problem itself. But ifort version 16 and gfortran version 11.2 seem to manage well.

Have you considered compiling the "adverse" procedure(s) using the older or other compiler into a static library (provided it produces bug-free results)?

I suspect there is an excessive number of temporary UDT's created for member allocatable objects then either alloc&copied&freed or alloc&movealloc. The listed for_... routines seem to indicate this.

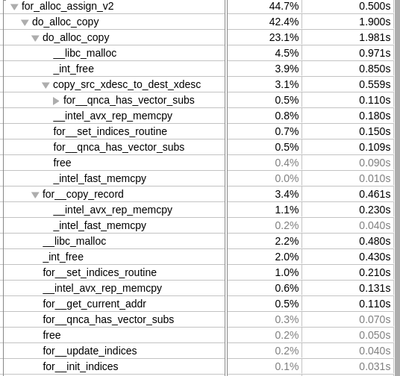

Could you expand, expand, ... for_alloc_assign_v2 to narrow the issue further?

This may lead into functions without debug information, which is OK

Getting the names may be insightful as to if/where a mutex might be located or if this is mostly memory reference overhead.

Note, the functions with very high times can be opened in a Disassembly window for further inspection.

Jim Dempsey

Here is the expansion of for_alloc_assign_v2 callees:
