- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We have a production code which shows significantly worse performance with recent versions of ifort (including oneapi 2022.1 and ifort version 19.0) compared to ifort version 16.0.

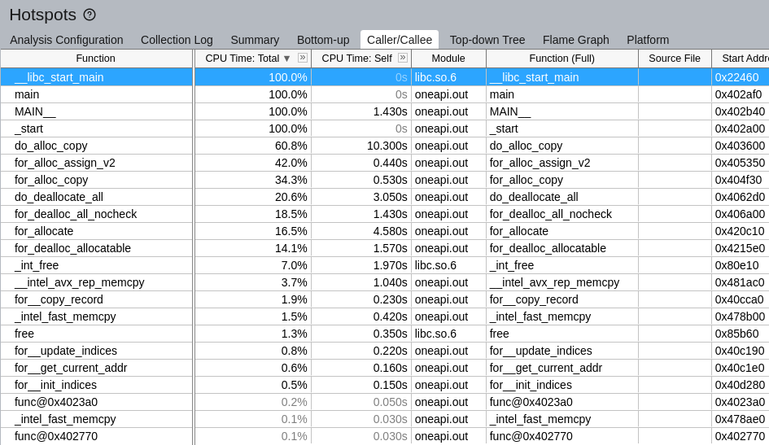

I have used VTune to identify a single subroutine responsible for this performance slowdown in a particular test problem.

When the code is built with ifort version 16.0 this routine takes just under 4 seconds.

When the code is built with oneAPI 2022 (ifort) this routine takes more than 21 seconds. This additional CPU time is almost entirely spent in for_alloc_assign_v2 (9.7 sec) and for_alloc_copy (6.2 sec) . I don't see any time spent in these routines at all in the ifort 16.0 case.

The subroutine in question is a complicated piece of legacy code and I have not yet managed to reproduce the same behaviour in a minimum example. It may be possible to refactor the code to avoid repeated allocation and deallocation but first I want to understand why the same code built with different ifort version shows such different behaviour and performance.

Thanks.

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The ___libc_malloc and _int_free (as well as free would be the CRTL libraries (assumed to be thread safe). These consume ~8.5% of the total runtime (for the application under test). This attributes approximately 1/5 the time spent in for_alloc_assign_v2, it therefore seems counter-productive to trying to address this via the TBB scalable allocator (unless it simple to insert this, but the payback would be minimal).

Without seeing the code, it is hard to make suggestions as to how to improve performance. Making a blind guess, this seems symptomatic of a push to oop.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I wouldn’t say this part of the code was particularly oop. It uses derived types heavily but not type-bound procedures.

Elsewhere in the code we are far more OO, and we have definitely run into performance issues there associated with passing non-polymorphic variables to polymorphic dummy arguments. Steve has written a useful blog post on this: https://stevelionel.com/drfortran/2020/06/30/doctor-fortran-in-not-my-type/

Intel Fortran seems to handle this particularly badly - gfortran seems to do a lot better. We solve this problem by making the dummy arguments non-polymorphic by declaring them as TYPE rather than CLASS. Then instead of the syntactic sugar of:

CALL object%method()

we have to use the far clumsier:

CALL object%method(object)

It would be really nice if Intel could fix the performance of this oop construct, but that is not really relevant to the current problem.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Simon_Richards1 wrote:

I wouldn’t say this part of the code was particularly oop. It uses derived types heavily but not type-bound procedures.

Elsewhere in the code we are far more OO, and we have definitely run into performance issues there associated with passing non-polymorphic variables to polymorphic dummy arguments. Steve has written a useful blog post on this: https://stevelionel.com/drfortran/2020/06/30/doctor-fortran-in-not-my-type/

Intel Fortran seems to handle this particularly badly - gfortran seems to do a lot better. We solve this problem by making the dummy arguments non-polymorphic by declaring them as TYPE rather than CLASS. Then instead of the syntactic sugar of:CALL object%method()

we have to use the far clumsier:

CALL object%method(object)

It would be really nice if Intel could fix the performance of this oop construct, but that is not really relevant to the current problem.

Why won't you instead simply do the following?

CALL method( object )

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes we could do that. But we like to make it clear that the method belongs to the object.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Simon_Richards1 wrote:

Yes we could do that. But we like to make it clear that the method belongs to the object.

You may want to review things a bit further with Fortran, the method does not "belong" to the object by any means. Additionally what you write re: the use of "CALL object%method(object)" does not conform to the language:

module m

type t

contains

procedure sub

end type

contains

subroutine sub( me )

class(t), intent(in) :: me

end subroutine

end module

use m

type(t) :: x

call x%sub( x )

end

C:\temp>ifx /c /standard-semantics p.f90

Intel(R) Fortran Compiler for applications running on Intel(R) 64, Version 2022.2.0 Build 20220730

Copyright (C) 1985-2022 Intel Corporation. All rights reserved.

p.f90(13): error #6784: The number of actual arguments cannot be greater than the number of dummy arguments. [SUB]

call x%sub( x )

----------^

compilation aborted for p.f90 (code 1)

C:\temp>You may want to look into whether this is indicative of a larger issue with what you are experiencing, perhaps misunderstandings or erroneous/past assumptions re: the language and/or the Intel Fortran compiler and its optimizations are coming to the fore with the newer version?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry, but you have misunderstood what I meant.

Your example is not correct Fortran, but that is not what I meant. In order to avoid the problem of passing non-polymorphic variables to polymorphic dummy arguments you have to declare 'me' as TYPE rather than CLASS (i.e you have to declare it to be non-polymorphic). And you use the NOPASS attribute when including the procedure in the derived type. I agree that this is not what you should be doing, and we only do this in particular situations when performance is an issue when using the standard approach.

Anyway this is a completely separate issue from the issue discussed in this thread, and the affected subroutine does not use this approach, or indeed any type bound procedures.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

On Windows, the Fortran library uses the Windows API routine VirtualAlloc. On Linux/Mac, it uses malloc in a thread-safe manner.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That's on Linux, right?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, this is on Linux.

We do build and deploy on Windows too, but prefer to work on Linux, so I haven’t yet checked if we see the same problem on Windows. I’ll look into that when I get back into the office next week.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have now managed to distil the code down to a minimum representative example:

MODULE data_mod

IMPLICIT NONE

TYPE :: type_law

REAL, ALLOCATABLE :: a(:)

END TYPE type_law

TYPE :: type_law_p

TYPE (type_law), POINTER :: p => null()

END TYPE type_law_p

TYPE :: type_secondary

TYPE (type_law_p), ALLOCATABLE :: b(:)

END TYPE type_secondary

TYPE :: type_secondary_p

TYPE (type_secondary), POINTER :: p => null()

END TYPE type_secondary_p

TYPE :: type_range

TYPE (type_secondary_p), ALLOCATABLE :: secondaries(:)

END TYPE type_range

TYPE :: type_range_p

TYPE (type_range), POINTER :: p => null()

END TYPE type_range_p

TYPE (type_range), POINTER :: nc_range => null()

TYPE (type_range), TARGET, ALLOCATABLE :: nc_ranges(:)

TYPE (type_secondary), TARGET, ALLOCATABLE :: secondaries(:)

TYPE (type_law), TARGET, ALLOCATABLE :: laws(:)

END MODULE data_mod

PROGRAM mwe

USE data_mod

IMPLICIT NONE

INTEGER :: i,j

ALLOCATE(laws(1))

ALLOCATE(laws(1)%a(1))

ALLOCATE(secondaries(1))

ALLOCATE(secondaries(1)%b(1))

secondaries(1)%b(1)%p => laws(1)

ALLOCATE(nc_ranges(1))

ALLOCATE(nc_ranges(1)%secondaries(1))

nc_ranges(1)%secondaries(1)%p=>secondaries(1)

nc_range => nc_ranges(1)

DO i=1,10000

DO j=1,10000

CALL sub()

END DO

END DO

CONTAINS

SUBROUTINE sub()

TYPE(type_range), TARGET, SAVE :: jxrange(2)

jxrange(1) = nc_range

END SUBROUTINE sub

END PROGRAM mwe

Note that the code as shown here is unlikely to make much sense, as it has been substantially cut down from the original code. But the important thing here is the significant performance regression seen with recent Intel compilers. Here are some run times for this example, built with various compilers, on a Linux workstation (Intel Core i7-4770 CPU @ 3.4 GHz):

| Compiler | Compiler options | Elapsed time (seconds) |

| ifort 16.0.8 | -O2 | 0.57 |

| ifort 2021.6.0 | -O2 | 28.08 |

| ifx 2022.1.0 | -O2 | 47.21 |

| GNU Fortran 11.2.0 | -O2 -ftree-vectorize | 2.14 |

| NAG Fortran 7.0 | -O2 | 0.39 |

It may well be that the original code, and hence this minimum example, are doing something inefficient, but we are seeing a hugely significant performance degradation with recent Intel compilers, compared to non-Intel compilers and older Intel compilers.

Here is the VTune hotspot analysis for the ifort 2021.6.0 run:

I hope there is enough information here to give some insight.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I should point out that type_range_p is unused in this example, so should be deleted. I obviously missed that one when I was cutting the example down to a minimum form.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you have or can procure support subscription to oneAPI, I suggest you do so and use that to drive the Intel Software team to look at your reproducer closely and give you feedback.

@Ron_Green , @Barbara_P_Intel , @Devorah_H_Intel ,

OP provides a nice, small case above. Can one or more of you please take a close at it and attempt to figure out the changes in Intel Fortran that affect the performance with the data structure layout and memory access, etc. that lead to such a change in apparent performance to OP? Here's a somewhat modified reproducer also which you can consider:

MODULE data_mod

IMPLICIT NONE

TYPE :: type_law

REAL, ALLOCATABLE :: a(:)

END TYPE type_law

TYPE :: type_law_p

TYPE (type_law), POINTER :: p => null()

END TYPE type_law_p

TYPE :: type_secondary

TYPE (type_law_p), ALLOCATABLE :: b(:)

END TYPE type_secondary

TYPE :: type_secondary_p

TYPE (type_secondary), POINTER :: p => null()

END TYPE type_secondary_p

TYPE :: type_range

TYPE (type_secondary_p), ALLOCATABLE :: secondaries(:)

END TYPE type_range

TYPE :: type_range_p

TYPE (type_range), POINTER :: p => null()

END TYPE type_range_p

TYPE (type_range), POINTER :: nc_range => null()

TYPE (type_range), TARGET, ALLOCATABLE :: nc_ranges(:)

TYPE (type_secondary), TARGET, ALLOCATABLE :: secondaries(:)

TYPE (type_law), TARGET, ALLOCATABLE :: laws(:)

END MODULE data_mod

PROGRAM mwe

USE data_mod

IMPLICIT NONE

TYPE(type_range), TARGET, SAVE :: jxrange(2)

integer, parameter :: R8 = selected_real_kind( p=12 )

real(R8) :: t1, t2

INTEGER :: i,j

ALLOCATE(laws(1))

ALLOCATE(laws(1)%a(1))

laws(1)%a(1) = 0.0

ALLOCATE(secondaries(1))

ALLOCATE(secondaries(1)%b(1))

secondaries(1)%b(1)%p => laws(1)

ALLOCATE(nc_ranges(1))

ALLOCATE(nc_ranges(1)%secondaries(1))

nc_ranges(1)%secondaries(1)%p=>secondaries(1)

nc_range => nc_ranges(1)

call cpu_t( t1 )

DO i=1,10000

DO j=1,10000

CALL sub()

END DO

END DO

call cpu_t( t2 )

print *, "jxrange(1)%secondaries(1)%p%b(1)%p%a(1) = ", jxrange(1)%secondaries(1)%p%b(1)%p%a(1)

print "(g0,g0.2,g0)", "CPU Time per system_clock: ", (t2-t1), " seconds."

CONTAINS

SUBROUTINE sub()

laws(1)%a(1) = laws(1)%a(1) + 1.0

jxrange(1) = nc_range

END SUBROUTINE sub

subroutine cpu_t( time )

use, intrinsic :: iso_fortran_env, only : I8 => int64

! Argument list

real(R8), intent(inout) :: time

! Local variables

integer(I8) :: tick

integer(I8) :: rate

time = 0.0_r8

call system_clock (tick, rate)

time = real(tick, kind=kind(time) ) / real(rate, kind=kind(time) )

return

end subroutine

END PROGRAM mwe

- First consider the above case with Intel Fortran Version 14.0.4.237 Build 20140805 i.e., from over 8 years ago: note the CPU time of 0.49 seconds!

C:\temp>ifort /O2 /standard-semantics p.f90

Intel(R) Visual Fortran Intel(R) 64 Compiler XE for applications running on Intel(R) 64, Version 14.0.4.237 Build 20140805

Copyright (C) 1985-2014 Intel Corporation. All rights reserved.

Microsoft (R) Incremental Linker Version 11.00.50727.1

Copyright (C) Microsoft Corporation. All rights reserved.

-out:p.exe

-subsystem:console

p.obj

C:\temp>p.exe

jxrange(1)%secondaries(1)%p%b(1)%p%a(1) = 1.6777216E+07

CPU Time per system_clock: .49 seconds.

C:\temp>2. Now consider IFORT Intel(R) 64, Version 2021.7.0 Build 20220726_000000, note the response time of 59 seconds!

C:\temp>ifort /standard-semantics /O2 p.f90

Intel(R) Fortran Intel(R) 64 Compiler Classic for applications running on Intel(R) 64, Version 2021.7.0 Build 20220726_000000

Copyright (C) 1985-2022 Intel Corporation. All rights reserved.

Microsoft (R) Incremental Linker Version 14.33.31630.0

Copyright (C) Microsoft Corporation. All rights reserved.

-out:p.exe

-subsystem:console

p.obj

C:\temp>p.exe

jxrange(1)%secondaries(1)%p%b(1)%p%a(1) = 1.6777216E+07

CPU Time per system_clock: 59. seconds.

C:\temp>You can then check with IFX Intel(R) 64, Version 2022.2.0 Build 20220730, you will notice the program performance is the same as IFORT.

You can also check gfortran, as OP mentions, the performance is about 10x better though it is 10x less than IFORT Version 14.0.4.237 from over 8 years ago:

C:\temp>gfortran -ftree-vectorize p.f90 -o p.exe

C:\temp>p.exe

jxrange(1)%secondaries(1)%p%b(1)%p%a(1) = 16777216.0

CPU Time per system_clock: 5.3 seconds.

C:\temp>Note the identical program output for jxrange(1) component using all the above programs.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you @FortranFan for confirming my observation and for raising the profile.

(Just a minor observation on your modified example: the default real a(1) has insufficient floating point precision to correctly add 1.0 in each of the 1E8 subroutine calls. That’s why the printed result is 1.6777216.0 rather than 1.0E+08. I know this because I fell into exactly the same trap when making a similar change. That’s what led me to add the int64 counter in my latest example. But that’s a trivial observation which doesn’t relate to the core performance issue here.)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For:

SUBROUTINE sub()

TYPE(type_range), TARGET, SAVE :: jxrange(2)

jxrange(1) = nc_range

END SUBROUTINE sub

The ifort 16, GNU and NAG may have optimized the subroutine out as jxrange isn't referenced after it was defined (in source). Additionally ifort 16 and NAG likely optimized out the DO i and DO j loops whereas GNU executed the do nothing nested loops.

And apparently ifort 2021 and ifx did not optimize out either section of code.

To thwart such optimizations, you must assure the compiler cannot figure out if generated results are not used. To do this, I suggest placing jxrange(2) in data_mod (remove SAVE) then use:

SUBROUTINE sub()

jxrange(1) = nc_range

call foo(jxrange)

END SUBROUTINE sub

And add to project a separate source file

SUBROUTINE foo(arange)

use data_mod

TYPE(type_range):: arange(:)

if(size(arange) <= 0) print *,"Not going to print"

END SUBROUTINE foo

*** And assure IPO does not perform inter-file optimizations (or is disabled).

Please, add the (untested) code, run the performance tests, and report back your findings.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the suggestion @jimdempseyatthecove , but I don't think that is the case. I have observed that the example runs extremely quickly with all compilers if the jxrange(1)=nc_range line is commented out, and that the run time for all compilers scales with the number of calls to sub.

Nevertheless I have implemented your suggested changes and the updated results are as follows:

| ifort 16 | 0.67 s |

| ifort 2021 | 47.97 s |

| ifx | 47.54 s |

| NAG Fortran | 0.51 s |

| GNU Fortran | 2.30 s |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

To address the concerns about optimization and code removal I have made further changes to the example:

PROGRAM mwe

USE data_mod

IMPLICIT NONE

INTEGER :: i,j

ALLOCATE(laws(1))

ALLOCATE(laws(1)%a(1), source=0.0)

ALLOCATE(secondaries(1))

ALLOCATE(secondaries(1)%b(1))

secondaries(1)%b(1)%p => laws(1)

ALLOCATE(nc_ranges(1))

ALLOCATE(nc_ranges(1)%secondaries(1))

nc_ranges(1)%secondaries(1)%p=>secondaries(1)

nc_range => nc_ranges(1)

DO i=1,10000

DO j=1,10000

CALL sub()

END DO

END DO

PRINT *, 'Number of calls: ', jxrange(1)%secondaries(1)%p%b(1)%p%counter

CONTAINS

SUBROUTINE sub()

INTEGER :: lastcount = 0

IF (ALLOCATED(jxrange(1)%secondaries)) THEN

lastcount = jxrange(1)%secondaries(1)%p%b(1)%p%counter

END IF

jxrange(1) = nc_range

jxrange(1)%secondaries(1)%p%b(1)%p%counter = lastcount + 1

CALL foo(jxrange)

END SUBROUTINE sub

END PROGRAM mwe

With the following change to the type_law type (now in a separate source file as a result of previous changes):

TYPE :: type_law

INTEGER :: counter=0

REAL, ALLOCATABLE :: a(:)

END TYPE type_lawSo now the lowest level in the chain of types has a counter element which is updated in sub(), and this is used to print the number of calls to sub at the end of the run. This is 100,000,000 in all cases.

Additionally I am now compiling with -O0 with all compilers.

I hope this addresses the concerns about different compilers optimizing out different parts of the code.

The updated results are as follows:

| ifort 16 | 2.88 s |

| ifort 2021 | 48.78 s |

| ifx | 48.22 s |

| NAG Fortran | 1.41 s |

| GNU Fortran | 3.60 s |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The timings still do not look right. Sketch

do loops 100,000,000 iterations say at 4 clocks for inner loop overhead + 4 clocks to push return onto stack for call + 4 clocks pop return from sub (assuming instructions are in L1 iCache). We are at least 12 clock ticks outside sub +call/return.

Inside sub:

jxrange(1) = nc_range

after the 1st call jxrange(1)%secondaries(:) will have been allocated, each element will contain a pointer, thus one pre-= will require a free. This free will cost a few 100's instructions + a few memory writes. Say cost 200 clocks +/- each.

The post-= will require an allocation, say 100 clocks +/- (for new secondary(:)), and a copy of contents (fit in cache line)) 8-10 clocks.

the total for the = 310 clocks +/-

Note, the allocation/deallocation are likely to cost more than 100 clocks each.

This means ~31G clocks consumed

Assuming a fast 3GHz CPU, the expected time of on the order of 10 seconds

The runtimes reported do not seem reasonable.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The runtimes do not seem reasonable to me either, but they are what they are.

I can’t really comment on your estimates of instruction counts and clock counts, but ultimately it is the actual observed CPU time that matters. And the evidence before me is that recent Intel compilers are massively increasing this CPU time.

I don’t think the runtime differences in my example code can be explained away by some compilers optimising out some of the code. But even if that were true it couldn’t explain the observed performance regression in the production code.

I genuinely appreciate your help and advice so far, but remain convinced that there is more to understand here.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

A view from a different perspective:

100M calls

ifort 16 0.67s

1GHz 670M 6.7 clock ticks per call

2GHz 1340M 13.4 clock ticks per call

3GHz 2010M 20.1 clock ticks per call

4GHz 2680M 26.8 clock ticks per call

ifort 2021 47.97s

1GHz 48G 480 clock ticks per call

2GHz 96G 690 clock ticks per call

3GHz 144G 1440 clock ticks per call

4GHz 192G 1920 clock ticks per call

I do not know your CPU clock rate, but even at 4GHz, it seems implausible that the code to perform the call to sub and perform the

jxrange(1) = nc_range

could be accomplished in ~27 clock ticks. Code was removed.

IOW something doesn't smell right.

A likely culprit is the compilers removed the DO i and DO j loops and replaced it with a single call to sub because all iterations resulted in the same work. The optimized code reduced the code to the last iteration's value.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks. I will investigate this further tomorrow and let you know, but the run times for all compilers scale with the DO i and DO j loops.

The performance regression in the production code cannot be explained by the optimiser removing code.

For what it’s worth, the CPU clock speed is 3.4 GHz.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

But another way of looking at it is this: if the performance differences are explained by the compiler optimisation removing redundant code, why don’t recent Intel compilers perform the same optimisations?

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page