I put in a service request, but if anyone has the zipped samples from the 2017 compiler, or knows how to get them, please help.

I don't see samples from previous releases online, and the latest (2019) bundle has no MIC samples I can find. Support can dig these up for you, if they're willing.

It really depends on exactly which samples you are seeking for Phi. If it's the KNC PCI-attached offload device, those samples were from v16 and older compilers. They used our Language Extensions for Offload (LEO), which I highly, highly recommend you DO NOT use in any code. We extended OMP directives (in a proprietary, non-standard fashion) for offload before the OMP 4.5/5.0 TARGET construct and MAP clause existed. We had to do something in those old days before OpenMP caught up to accelerators. Today you'd get all sorts of errors if you used LEO syntax, SO DON'T.
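For anyone coming from LEO, here is a minimal sketch of a simple offload written with the standard OpenMP 4.5 `target` construct and `map` clauses instead of LEO's `#pragma offload target(mic)` directives. The function name `vec_add_offload` is hypothetical, and the sketch assumes a compiler with OpenMP 4.5 offload support; if no offload device is available (or OpenMP is disabled), the loop simply runs on the host.

```c
#include <assert.h>

/* Hypothetical example: c[i] = a[i] + b[i], computed on the default offload device.
   Standard OpenMP 4.5, in place of a LEO-style "#pragma offload target(mic)". */
void vec_add_offload(const float *a, const float *b, float *c, int n)
{
    assert(n >= 0);

    /* map(to: ...) copies the inputs to the device when the region starts;
       map(from: ...) copies the result back to the host when it ends.
       With no device (or without OpenMP), the same code runs on the host. */
    #pragma omp target map(to: a[0:n], b[0:n]) map(from: c[0:n])
    #pragma omp parallel for
    for (int i = 0; i < n; ++i)
        c[i] = a[i] + b[i];
}
```

The same source compiles with any OpenMP 4.5 compiler (for example `gcc -fopenmp`), which is the portability argument for dropping LEO.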

There were no samples for Xeon Phi x200 (aka Knights Landing) in any compiler. Simply compile with -xmic-avx512 and use OpenMP threading directives.
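A minimal sketch of what "OpenMP threading directives" can look like on a self-hosted KNL: the hypothetical `dot` function below spreads the loop across cores with `parallel for` and across vector lanes with `simd`. The build line (for example `icc -qopenmp -xmic-avx512`) is an assumption about a typical Intel compiler invocation; only the -xmic-avx512 flag comes from the post.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical example: dot product of two vectors.
   "parallel for" splits the iterations across OpenMP threads (cores/hardware threads);
   "simd" asks the compiler to vectorize each thread's chunk (AVX-512 with -xmic-avx512);
   reduction(+:sum) gives each thread a private partial sum and combines them at the end. */
double dot(const double *x, const double *y, size_t n)
{
    assert(n == 0 || (x != NULL && y != NULL));

    double sum = 0.0;
    #pragma omp parallel for simd reduction(+:sum)
    for (size_t i = 0; i < n; ++i)
        sum += x[i] * y[i];
    return sum;
}
```

No offload or data movement is involved: on KNL the whole program, including this loop, runs on the Phi as the host processor.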

What exactly are you looking for, or expecting, in a sample?

If there's no good model to program it, I guess the best thing is to pull it and forget about it.

Ron,

While Intel has moved on from KNC (and KNL), there are a lot of them out there, and it would show a great deal of customer appreciation (and support) if the oneAPI software added support for KNC.

Jim Dempsey

Can I use OpenMP as provided in the 2017 compiler? The samples from customer service were LEO only.

I wanted to get started with understanding a 3120A, and to do it right.

In case it helps with choosing a programming method:

I got things working with MPSS 3.8.6 and updated the flash to:

Flash Version : 2.1.02.0391

SMC Firmware Version : 1.17.6900

SMC Boot Loader Version : 1.8.4326

Coprocessor OS Version : 2.6.38.8+mpss3.8.6

Device Serial Number : ADKC32800720

Board

Vendor ID : 0x8086

Device ID : 0x225d

Subsystem ID : 0x3608

They go for $50-100 on eBay; getting or having a motherboard that it will work in is more challenging.

1. The NUCs are out.

2. The ThinkPad, no.

3. The HP Envy, no.

I have an old CyberPower Core i7-7600, I think. Would it work in there? My daughter uses that computer.

The 3120A is rated at 300 watts and needs one 6-pin and one 8-pin graphics power connector, plus motherboard support for MMIO above 4G and large BARs. I suspect your daughter would be unhappy with you if it worked. Mine worked in a Z840 with the 1175 watt power supply, NOT the 850.
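Assuming the usual PCI Express power limits (up to 75 W from an x16 slot, 75 W per 6-pin connector and 150 W per 8-pin connector), the 300 W rating is exactly what the slot plus the two connectors can deliver:

$$
P_{\max} = \underbrace{75\,\mathrm{W}}_{\text{x16 slot}} + \underbrace{75\,\mathrm{W}}_{\text{6-pin}} + \underbrace{150\,\mathrm{W}}_{\text{8-pin}} = 300\,\mathrm{W}
$$

That load comes on top of everything else the host power supply is already feeding.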

The real issue is whether it can be programmed and function reliably with whatever is available.

oneAPI and the right graphics card may be a substitute.

At 3000 for the minimum configuration, I will pass. But good luck.

Your story reminds me of some Italian engineers, good blokes and ladies. We were sitting in their lab in 100-degree weather at 9 am, and they said, "This is how we do it." I said there were better ways. Never tell an engineer that; he will not believe you. So I sat for eight hours and watched them, and they got some minor results.

At 5 pm I asked, "Can I have a go?" They stopped and said OK. I pulled out the NUC and the device, borrowed a screen, and then took 20 minutes to show them what they had missed, including why the tests had to be done outside to avoid vibration from the motors in the lab. They stopped using their device and moved outside. Rule no. 1: have the right equipment.

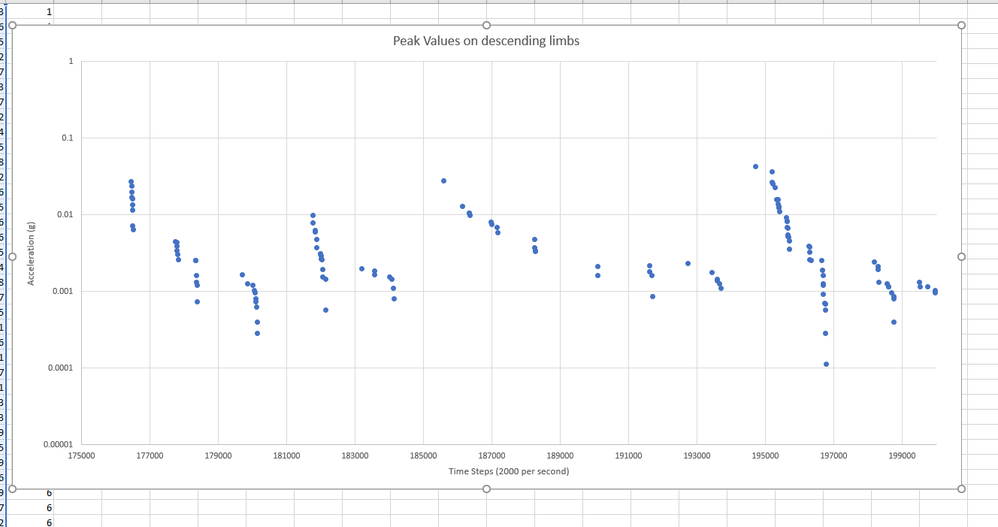

My workmate in the UK was asked whether we could do damping estimates in real time. It has taken 3 weeks, but the answer is yes. These are the peak descending limbs. We got it down to 140 lines of code.

oneAPI does not now and never will support Xeon Phi. It was an interesting attempt. The first-gen cards were slow and power-hungry. Second-gen were pretty good, but the market wasn't interested. Intel is trying again with a different architectural model.

I would say that Phi boards are not worth even $50.

What is LEO? A Google search doesn't turn up anything useful.

I'd agree with Steve: the performance of KNC (aka Phi x100, aka 3120A) was not impressive. When talking to customers, we referred to this PCI-attached accelerator as "useful for testing offload programming models but not ideal for production/performance". It was a stepping stone from KNF (Knights Ferry) to our target device, KNL (Knights Landing, or Phi x200). As Steve alluded to, KNL was originally planned with two hardware models: a PCI-attached accelerator that required data movement and kernel launch across the PCI bus, AND a stand-alone socketed processor on a motherboard like a traditional host. Roughly 98% of customers bought the socketed, standalone host form. So KNC was really the last of the Phi family that acted as a PCI-attached accelerator.

Now as for LEO: some of us here remember programming distributed-memory clusters before MPI was around. Remember those days? We had PVM, NX message passing, etc., mostly vendor-proprietary programming models to send/receive data between nodes. Well, PVM (Parallel Virtual Machine) was different and is actually still used by some. The point is, before there were standardized programming models for message passing or sharing data across heterogeneous distributed-memory clusters, each vendor came up with its own API for send/receive and collectives. Obviously users were not happy, since they had to write unique code for each vendor. Thus MPI was created to unify the programming model across any vendor's platform. PVM could have been that model, but alas users asked for more control over data movement (performance concerns). PVM has a loyal user base who might object to my characterization, and they are quite vocal in their support for PVM. It is a good model, no doubt; all I'm saying is that history favored MPI over PVM.

SO, LEO: Language Extensions for Offload. Intel proprietary. It predated the OpenMP offload directives and was needed for us to support the KNF and KNC offload programming model before OpenMP had offload support. Yes, there was another vendor-specific offload programming model, but it was heavily licensed and hence not an option for us at that time. SO, is LEO useful? Well, you can use it as a learning model. The concepts of offload to attached accelerators have not changed since the FPS days: you have to move data back and forth, and you have to offload a kernel to do the computation. The syntax is unique to each offload programming model, but the ideas are the same. So you could maybe learn something from this KNC card, but the code could not be used on any modern GPU system. The Intel oneAPI IFX compiler supports offload using the OpenMP standard and supports the Intel integrated graphics in most laptops from the past 3-5 years. That doesn't take 300 watts, and it doesn't need an older version of Linux and the old drivers required for LEO. And the code would be portable to the IBM, Cray, or GNU compilers supporting OMP offload.
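The two steps described here (move data, offload a kernel) can be sketched in standard OpenMP rather than LEO. In this minimal illustration, the hypothetical `scale_then_shift` function maps `x` to the device once with `target data`, runs two `target parallel for` kernels on the resident copy, and copies the result back when the region ends. It assumes an OpenMP 4.5-capable compiler; without a device, the kernels run on the host.

```c
#include <assert.h>

/* Hypothetical example of the offload pattern: move data once, run two kernels, copy back. */
void scale_then_shift(double *x, int n, double s, double t)
{
    assert(n >= 0);

    /* x is copied to the device when this region starts and back to the host when it ends;
       it stays resident on the device in between. */
    #pragma omp target data map(tofrom: x[0:n])
    {
        /* Kernel 1 works on the device copy of x
           (scalars such as n, s and t are firstprivate by default in OpenMP 4.5). */
        #pragma omp target parallel for
        for (int i = 0; i < n; ++i)
            x[i] *= s;

        /* Kernel 2 reuses the resident copy: no host/device transfer between kernels. */
        #pragma omp target parallel for
        for (int i = 0; i < n; ++i)
            x[i] += t;
    }
}
```

Keeping data resident across kernels is the main design choice here: on a PCI-attached card like KNC, transfers over the bus are usually the cost to minimize.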

The 2017 compiler release notes state support for OpenMP 4.5. Is that the preferred way to program the KNC?

(And thanks for the history and insights.)

New Intel® Xeon Phi™ offload features:

- OpenMP* 4.5 clause changes:
  - For combined or composite constructs, the if clause now supports a directive name modifier:
    - if([directive-name-modifier :] scalar-expression): the if clause only applies to the semantics of the construct named by directive-name-modifier, if specified; otherwise it applies to all constructs to which an if clause can apply. Example: #pragma omp target parallel for if(target : do_offload_compute)
  - use_device_ptr(list) clause now implemented for #pragma omp target data
  - is_device_ptr(list) clause now implemented for #pragma omp target
- Support for combined target constructs:
  - #pragma omp target parallel
  - #pragma omp target parallel for
  - #pragma omp target simd
  - #pragma omp target parallel for simd
- Support for new device memory APIs:
  - void* omp_target_alloc()
  - void omp_target_free()
  - int omp_target_is_present()
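As a sketch of two items from the release-note list, the combined `target parallel for simd` construct and the `if` clause with the `target` directive-name modifier can be used together as below. The `saxpy` function and its `offload` flag are hypothetical. With `offload` false, the `target:` modifier means only the offload is skipped; when compiled with OpenMP enabled, the loop still runs as a parallel SIMD loop on the host.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical example: y = a*x + y. */
void saxpy(float a, const float *x, float *y, int n, bool offload)
{
    assert(n >= 0);

    /* Combined construct "target parallel for simd" (OpenMP 4.5).
       if(target : offload) applies only to the target part: when offload is false the
       region executes on the host, but it is still a parallel SIMD loop. */
    #pragma omp target parallel for simd if(target : offload) \
        map(to: x[0:n]) map(tofrom: y[0:n])
    for (int i = 0; i < n; ++i)
        y[i] = a * x[i] + y[i];
}
```

A runtime flag like this lets one binary decide per call whether a problem is large enough to be worth the PCI transfer.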

>>And doesn't take 300 watts and doesn't need an older version of Linux and the required old drivers for LEO.

The implementation would not need to run LEO, nor necessarily run Linux, within the KNC/KNL. Instead, there would be a subset "o/s" providing the functionality required of a "GPU" that supports oneAPI. IOW, something functionally equivalent to a GPU/APU driver. This should be relatively easy to do.

300 watts is a different issue. The Xe HPC (reportedly) consumes 75/150/300/400/500 watts depending on model.

Large systems will likely migrate to Xe HPC. oneAPI support for KNC would keep the KNCs useful for current KNC users and make it easier for them to migrate to Xe HPC. Following up, these KNC users, after exploring oneAPI on KNC, could then decide (on performance and/or watts) to migrate to Xe HPC. I see it as a win-win marketing decision rather than a perceived market erosion.

Jim Dempsey

BTW, I have a system with two KNCs installed, plus a separate KNL host system (so my opinion is biased).

Since one can't post in the archives, I have one last question, about networking. Here is my default mic0 file:

<?xml version="1.0" encoding="utf-8" standalone="no"?>

<mic0>

<MajorVersion>v1.2</MajorVersion>

<Hostname>mic0</Hostname>

<Networking>

<IPAddress>192.168.1.100</IPAddress>

<HostIPAddress>192.168.1.99</HostIPAddress>

<Subnet>255.255.255.0</Subnet>

<HostSubnet>255.255.255.0</HostSubnet>

<MACAddress>4c:79:ba:22:05:a0</MACAddress>

<HostMACAddress>4c:79:ba:22:05:a1</HostMACAddress>

</Networking>

</mic0>

What is the HostIPAddress, and what is the proper way to change it if I want to access the card from another local computer? It seems to be tied to the IPAddress by MAC address (the ones ending in a0 and a1). I have two Ethernet connections on the host computer (W10 Pro), at 192.168.1.110 and 192.168.1.111. How do I bridge properly, and would mic0.xml benefit from setting HostIPAddress=192.168.1.112 and IPAddress=192.168.1.113? The Windows bridging documentation does not seem to cover preventing conflicts on the same network, though I would hope it only forwards by MAC.
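This does not answer the MPSS or Windows bridging question itself, but the subnet arithmetic behind it can be checked: with a 255.255.255.0 mask, two addresses are on the same network when `addr & mask` matches. The hypothetical `same_subnet` helper below is a minimal sketch of that check. By it, the host's 192.168.1.110/111 and the proposed 192.168.1.112/113 all fall in 192.168.1.0/24, so every one of those addresses has to be unique on that LAN.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <stdio.h>

/* Parse dotted-quad IPv4 text such as "192.168.1.100" into a 32-bit value.
   Returns false for malformed input or octets above 255. */
static bool parse_ipv4(const char *s, uint32_t *out)
{
    unsigned a, b, c, d;
    char extra;
    /* Exactly four fields and nothing trailing (a 5th conversion means junk after the address). */
    if (sscanf(s, "%u.%u.%u.%u%c", &a, &b, &c, &d, &extra) != 4)
        return false;
    if (a > 255 || b > 255 || c > 255 || d > 255)
        return false;
    *out = ((uint32_t)a << 24) | ((uint32_t)b << 16) | ((uint32_t)c << 8) | (uint32_t)d;
    return true;
}

/* Hypothetical helper: true when ip1 and ip2 have the same network part under mask. */
bool same_subnet(const char *ip1, const char *ip2, const char *mask)
{
    assert(ip1 != NULL && ip2 != NULL && mask != NULL);
    uint32_t x, y, m;
    if (!parse_ipv4(ip1, &x) || !parse_ipv4(ip2, &y) || !parse_ipv4(mask, &m))
        return false;
    return (x & m) == (y & m);
}
```

For example, same_subnet("192.168.1.110", "192.168.1.113", "255.255.255.0") is true, while the card's default 192.168.1.100 would collide with nothing only if no other machine on the LAN already uses it.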
