- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

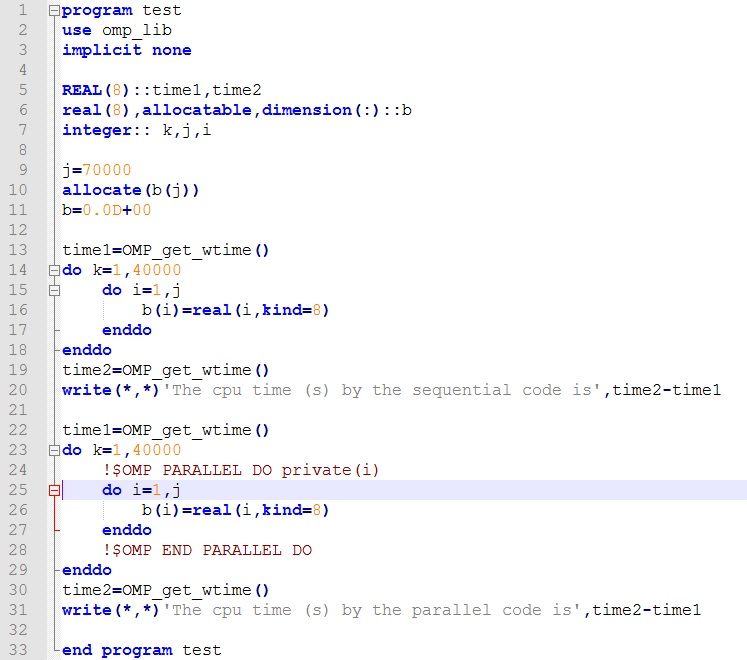

I tried to use OpenMP to parallelize an inner loop. The code is as follows (also see the attachment):

******************************************************************************************

    program test
      implicit none
      REAL(8)::time1,time2
      real(8),allocatable,dimension(:)::b
      integer:: k,j,i

      j=70000
      allocate(b(j))
      b=0.0D+00

      call cpu_time(time1)
      do k=1,40000
        do i=1,j
          b(i)=real(i,kind=8)
        enddo
      enddo
      call cpu_time(time2)
      write(*,*)'The cpu time (s) by the sequential code is',time2-time1

      call cpu_time(time1)
      do k=1,40000
    !$OMP PARALLEL DO private(i)
        do i=1,j
          b(i)=real(i,kind=8)
        enddo
    !$OMP END PARALLEL DO
      enddo
      call cpu_time(time2)
      write(*,*)'The cpu time (s) by the parallel code is',time2-time1
    end program test

******************************************************************************************

The source file is named 'test.f90'. I built it from the command line with:

ifort /Qopenmp test.f90

The results are:

The cpu time (s) by the sequential code is 1.98437500000000

The cpu time (s) by the parallel code is 3.85937500000000

The parallel code is much slower than the sequential code, and I cannot work out why.

I would greatly appreciate any help with this problem.

Thanks a lot.

---

Your test leaves the compiler opportunities to take shortcuts, more so in the sequential case.

CPU_TIME adds up the time spent by all threads. The usual goal of parallelism is to reduce elapsed (wall-clock) time by spreading a larger total CPU time across threads. OMP_GET_WTIME measures elapsed time; SYSTEM_CLOCK also works well on Linux or with gfortran.
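To see the difference between the two timers, a minimal sketch (not part of the original thread) can time the same threaded loop with both CPU_TIME and OMP_GET_WTIME. Whether CPU_TIME sums over threads is processor-dependent, so the two numbers are only expected to diverge on compilers that behave as described above. The loop adds k so the repetitions are not trivially redundant; the program and variable names are illustrative.

```fortran
program compare_timers
  ! Illustrative sketch: time the same threaded loop two ways.
  use omp_lib
  implicit none
  integer, parameter :: n = 70000, nrep = 4000
  real(8), allocatable :: b(:)
  real(8) :: cpu0, cpu1, wall0, wall1
  integer :: i, k

  allocate(b(n))
  call cpu_time(cpu0)          ! processor time (may include all threads)
  wall0 = omp_get_wtime()      ! wall-clock time
  do k = 1, nrep
    !$omp parallel do
    do i = 1, n
      b(i) = real(i, kind=8) + real(k, kind=8)
    end do
    !$omp end parallel do
  end do
  wall1 = omp_get_wtime()
  call cpu_time(cpu1)

  print '(a,i0)',    'max threads:        ', omp_get_max_threads()
  print '(a,f10.4)', 'CPU_TIME (s):       ', cpu1 - cpu0
  print '(a,f10.4)', 'OMP_GET_WTIME (s):  ', wall1 - wall0
  print '(a,f10.1)', 'b(n) (uses result): ', b(n)
end program compare_timers
```

Compile with /Qopenmp (or -fopenmp for gfortran) and compare the two timings for different OMP_NUM_THREADS values.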

By omitting the SIMD clause, you are suggesting that the compiler drop SIMD vectorization when it introduces threading. If you use Intel Fortran, the optimization report (/Qopt-report) will give useful information.
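As a sketch of the SIMD point, the inner loop can use the combined `!$omp parallel do simd` construct (OpenMP 4.0 and later), which asks for the iterations to be split across threads and for each thread's chunk to be vectorized. Whether this changes the generated code depends on the compiler; the optimization report is the place to confirm it. Loop sizes follow the original test; the program name is illustrative.

```fortran
program parallel_simd
  ! Threads share out the i iterations; each thread's chunk is also
  ! marked for SIMD vectorization (OpenMP 4.0+ combined construct).
  use omp_lib
  implicit none
  integer, parameter :: n = 70000, nrep = 40000
  real(8), allocatable :: b(:)
  real(8) :: t0, t1
  integer :: i, k

  allocate(b(n))
  t0 = omp_get_wtime()
  do k = 1, nrep
    !$omp parallel do simd
    do i = 1, n
      b(i) = real(i, kind=8)
    end do
    !$omp end parallel do simd
  end do
  t1 = omp_get_wtime()

  print '(a,f10.4)', 'elapsed (s): ', t1 - t0
  print '(a,f10.1)', 'b(n) = ', b(n)
end program parallel_simd
```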

If you have Hyper-Threading and Intel OpenMP, you may find it useful to run one thread per core by setting OMP_NUM_THREADS and OMP_PLACES=cores.

---

Hello Tim,

Could you please post an example for "omp_places=cores"? Is "omp_places" an environment variable? Should "cores" be replaced by a number, e.g. omp_places=4?

I also saw "export omp_places=cores" somewhere. Where, when, and how should this be set?

Best regards,

---

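On my dual-core laptop, I set the OpenMP environment variables in the cmd window before running the program:

    set omp_num_threads=2
    set omp_places=cores

Here "cores" is a keyword, not a number: it asks for one place per physical core. "export OMP_PLACES=cores" is the equivalent in a Linux bash shell.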
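To check what the runtime actually picked up from these settings, a small program can query it. This sketch assumes a compiler whose omp_lib provides the OpenMP 4.5 routines omp_get_num_places and omp_get_place_num; with an older runtime, drop those two calls. A place number of -1 means the thread is not bound to a place.

```fortran
program show_omp_settings
  ! Reports what the OpenMP runtime took from OMP_NUM_THREADS / OMP_PLACES.
  ! Assumes OpenMP 4.5 runtime routines (omp_get_num_places, omp_get_place_num).
  use omp_lib
  implicit none
  integer :: nthreads_seen

  print '(a,i0)', 'logical processors: ', omp_get_num_procs()
  print '(a,i0)', 'max threads:        ', omp_get_max_threads()
  print '(a,i0)', 'places:             ', omp_get_num_places()

  nthreads_seen = 0
  !$omp parallel
  !$omp critical
  nthreads_seen = nthreads_seen + 1
  print '(a,i0,a,i0)', 'thread ', omp_get_thread_num(), &
                       ' runs on place ', omp_get_place_num()
  !$omp end critical
  !$omp end parallel
end program show_omp_settings
```

Running it once with and once without the set commands shows whether the variables reach the program.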

---

Dear Tim,

Thank you very much for your nice comments!

I followed your suggestions, modified the code (see the figure below or the attached file), and built the application with

ifort /Qopenmp /Qopt-report test.f90

The output file test.optrpt reports the following (my question is marked in bold):

    report messages with your source code.
    See "https://software.intel.com/en-us/intel-advisor-xe" for details.
    -Qinline-factor: 100
    -Qinline-min-size: 30
    -Qinline-max-size: 230
    -Qinline-max-total-size: 2000
    -Qinline-max-per-routine: 10000
    -Qinline-max-per-compile: 500000

    Begin optimization report for: TEST
    Report from: OpenMP optimizations [openmp]
    LOOP BEGIN at C:\HPC3D\SOR\test.f90(11,1)
    remark #25408: memset generated
    remark #15542: loop was not vectorized: inner loop was already vectorized
    remark #15300: LOOP WAS VECTORIZED
    LOOP END
    <Remainder loop for vectorization>
    LOOP END
    LOOP END
    remark #15542: loop was not vectorized: inner loop was already vectorized
    remark #15300: LOOP WAS VECTORIZED
    LOOP END
    LOOP END
    <Peeled loop for vectorization>
    LOOP END
    remark #15300: LOOP WAS VECTORIZED
    LOOP END
    <Remainder loop for vectorization>
    LOOP END

    Non-optimizable loops:
    LOOP BEGIN at C:\HPC3D\SOR\test.f90(29,1)
    remark #15543: loop was not vectorized: loop with function call not considered an optimization candidate. [ C:\HPC3D\SOR\test.f90(24,8) ]
    LOOP END
    ===========================================================================

The cpu time (s) by the parallel code is 1.49823977670167

---

Your parallel code is slow because you start up the threading and close it down 40000 times.

Try this:

    program test
      use omp_lib
      implicit none
      REAL(8)::time1,time2
      real(8),allocatable,dimension(:)::b
      integer:: k,j,i
      j=70000
      allocate(b(j))
      b=0.0D+00
      time1=OMP_get_wtime()
      do k=1,40000
        do i=1,j
          b(i)=real(i,kind=8)
        enddo
      enddo
      time2=OMP_get_wtime()
      write(*,*)'The cpu time (s) by the sequential code is',time2-time1
      time1=OMP_get_wtime()
    !$OMP PARALLEL DO PRIVATE(b,i)
      do k=1,40000
        do i=1,j
          b(i)=real(i,kind=8)
        enddo
      enddo
    !$OMP END PARALLEL DO
      time2=OMP_get_wtime()
      write(*,*)'The cpu time (s) by the parallel code is',time2-time1
      pause
    end program test

---

In your example, you don't have any inlinable code, so the inline limits aren't remotely approached.

In both of your cases, if the compiler were to examine the outer loop with a view to collapsing the two loops into one for vectorization, it would probably skip the outer-loop iterations whose results are immediately overwritten. That exposes a fallacy in this method of assessing performance: the repetitions you are timing may never execute. Because such an optimization may occur in non-parallel code but be suppressed by a parallel directive, it is of particular concern for the kind of conclusion you are trying to draw.
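One way to guard against the compiler skipping redundant outer iterations is to make each repetition depend on the previous one and to use the final result. The sketch below is a hypothetical variant of the test, not the original: it accumulates into b and prints sum(b), which should equal 40000 × 70000 × 70001 / 2 = 98001400000000 (exact in double precision). It times with SYSTEM_CLOCK rather than OMP_GET_WTIME so that the same source compiles with and without OpenMP (without it, the !$omp lines are plain comments), giving a like-for-like serial/parallel comparison.

```fortran
program checksum_benchmark
  ! Each outer iteration depends on the previous one and the result is
  ! printed, so the repetitions are much harder for the compiler to elide.
  use, intrinsic :: iso_fortran_env, only: int64, real64
  implicit none
  integer, parameter :: n = 70000, nrep = 40000
  real(real64), allocatable :: b(:)
  integer(int64) :: c0, c1, rate
  integer :: i, k

  allocate(b(n))
  b = 0.0_real64
  call system_clock(c0, rate)
  do k = 1, nrep
    !$omp parallel do
    do i = 1, n
      b(i) = b(i) + real(i, real64)   ! accumulate: depends on step k-1
    end do
    !$omp end parallel do
  end do
  call system_clock(c1)

  ! Every b(i) ends as nrep*i, so sum(b) = nrep*n*(n+1)/2.
  print '(a,f10.4)', 'elapsed (s): ', real(c1 - c0, real64) / real(rate, real64)
  print '(a,es22.15)', 'checksum sum(b) = ', sum(b)
end program checksum_benchmark
```

Build it once with /Qopenmp and once without, and compare both the elapsed times and the checksums.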

The function call referred to in the opt-report must be one of the library calls inserted by the expansion of the OpenMP directives. Evidently these aren't vectorizable, and they may be protected against inlining because the compiler knows inlining them would be counterproductive.

The compiler has made memset library function calls to zero out arrays. In the context of your example, the effect is practically indistinguishable from local vectorized code expansion. The remark is useful to assure you that the compiler didn't decide to skip the code, even though no locally vectorized loop is generated.

The remark about a peeled loop being generated in your parallel region may have a bearing on the extra time taken there; the peeled loop might cause one thread to take extra time. You would want to check whether adding options such as /QxHost and /align:array32byte has an effect.

---

Andrew's code may need modification to meet your needs:

    ...
    !$OMP PARALLEL PRIVATE(i)
    do k=1,40000
    !$OMP DO
      do i=1,j
        b(i)=real(i,kind=8)
      enddo
    enddo
    !$OMP END PARALLEL
    ...

I say "may need modification" because it depends on what your actual code is doing, as opposed to what the sketch code you provided is doing:

If you have 40000 "things" (objects, jobs, entities), each a separate calculation, then Andrew's suggestion is correct.

If you have one "thing" iterating 40000 times (e.g. a simulation advancing through time), then the method above is correct.
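For the second case, a complete program built around the snippet above might look like the following sketch (the program name and the printed check are illustrative). The parallel region is entered once; every thread runs the k loop, the !$omp do shares out the i iterations, and the implicit barrier at !$omp end do keeps the steps k in order. Timing uses OMP_GET_WTIME, as suggested earlier in the thread.

```fortran
program hoisted_region
  ! One parallel region around the whole time loop instead of 40000.
  use omp_lib
  implicit none
  integer, parameter :: nrep = 40000
  real(8), allocatable :: b(:)
  real(8) :: t0, t1
  integer :: i, j, k

  j = 70000
  allocate(b(j))
  b = 0.0d0
  t0 = omp_get_wtime()
  !$omp parallel private(i, k)
  do k = 1, nrep              ! every thread runs the k loop...
    !$omp do
    do i = 1, j               ! ...but the i iterations are shared out
      b(i) = real(i, kind=8)
    end do
    !$omp end do              ! implicit barrier keeps the steps in order
  end do
  !$omp end parallel
  t1 = omp_get_wtime()

  print '(a,f10.4)', 'elapsed (s): ', t1 - t0
  print '(a,f10.1)', 'b(j) = ', b(j)
end program hoisted_region
```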

Tim's comments should be read and considered. There are some "gotchas" you can fall into when first exploring parallelization: CPU_TIME vs. elapsed time; compiler optimizations eliding code that generates unused results, removing unnecessary loop iterations, or producing results calculable at compile time; and so on. Then there are naïve expectations: no overhead for region entry, no overhead for region distribution, and no overhead or interference for memory bus and cache resources.

All of the comments posted here are intended to help you through your learning experience.

Jim Dempsey

---

Andrew and Jim are correct in principle that entering a parallel region repeatedly can be expensive, and this can be checked with the method Jim showed. Widely used OpenMP implementations, including Intel's, reduce the penalty for repeated entry by keeping the thread pool active for an interval after each region ends. Setting KMP_BLOCKTIME=0 with the original version might demonstrate the full penalty for repeated entry to a parallel region.
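A rough way to measure that region-entry cost is to time many nearly empty parallel regions; in this sketch each region only records the team size. KMP_BLOCKTIME is a setting of the Intel (and LLVM) OpenMP runtime, so comparing runs with and without `set KMP_BLOCKTIME=0` applies to those runtimes; other runtimes have their own controls. The numbers are indicative only.

```fortran
program region_overhead
  ! Rough measurement of the cost of entering and leaving a parallel region.
  use omp_lib
  implicit none
  integer, parameter :: nrep = 40000
  integer :: k, nthreads
  real(8) :: t0, t1

  nthreads = 0
  t0 = omp_get_wtime()
  do k = 1, nrep
    !$omp parallel
    if (omp_get_thread_num() == 0) nthreads = omp_get_num_threads()
    !$omp end parallel
  end do
  t1 = omp_get_wtime()

  print '(a,i0)',    'threads per region:      ', nthreads
  print '(a,f10.3)', 'microseconds per region: ', 1.0d6 * (t1 - t0) / nrep
end program region_overhead
```

Multiplying the per-region figure by 40000 gives an estimate of how much of the original parallel timing was spent just starting and stopping regions.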

---

Dear Jim,

Your suggestions are really helpful. In my model, the outer iterations are sequenced and are not suitable for parallelization.

I am trying to fully understand your and Tim's comments. The results have now improved, as follows:

The elapse time (s) by the sequential code is 1.28671266883248

The elapse time (s) by the parallel code is 0.742385344517970

The speedup factor is about 1.7. My CPU is an Intel i5-4200U, which has 1 socket, 2 cores, and 4 logical processors. My naive expectation was a speedup factor of about 4, but the actual one is only 1.7. This could be due to the overhead/interference you mentioned. Is there any other reason? What is a reasonable speedup factor?

Can you suggest some literature for understanding the overhead for region entry, the overhead for region distribution, and the overhead/interference for memory bus and cache resources?

Best regards,

Jingbo

---

Jingbo.

Your test program is essentially limited by memory (write) bandwidth: there is very little computation between writes. Your CPU has 2 cores and 4 threads; more importantly, it has 2 memory channels. Memory-bandwidth-limited applications tend to scale with the number of memory channels rather than the number of hardware threads. Floating-point-intensive applications (that are not memory intensive) tend to scale with the number of cores (vector units). Scalar-intensive applications (that are not memory intensive) tend to scale with the number of hardware threads (though to a lesser extent for applications with larger cache utilization).

There is no such thing as a typical application; applications in general will have a mix of "intensiveness". You will have to take the knowledge you gain from experience (currently at beginner level) and use it to decide where and how to improve opportunities for vectorization, and where and how to parallelize the code. This learning experience will take time and some experimentation on your part. The users on this forum will assist you, and assist you best when you show initiative with the advice given.

>> the outer iterations are sequenced and are not suitable for parallelization

Do not assume this without a complete understanding of what is being performed. Your code could be suitable for:

    Preamble | YourParallelCode | Postamble
               Preamble         | YourParallelCode | Postamble
                                  Preamble         | YourParallelCode | Postamble

where the preamble and postamble sections may be doing things like file I/O or state propagation.

In other words, you can use parallelization to overlap the preamble/postamble with the (parallel) compute section.

Jim Dempsey

---

The above description is called a parallel pipeline. In this case the preamble and postamble each run sequentially, but concurrently with each other on two threads, and concurrently with the inner parallel section. Ignoring overhead, and assuming each preamble and postamble takes no longer than one parallel section, the estimated total runtime for 40000 iterations is

1 × preamble time + 40000 × parallel time + 1 × postamble time

as opposed to (not suitable for parallelization)

40000 × preamble time + 40000 × parallel time + 40000 × postamble time.
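A minimal sketch of such a pipeline, assuming hypothetical preamble, compute, and postamble routines that work on independent items: three OpenMP sections run the preamble of item k, the compute of item k-1, and the postamble of item k-2 at the same time, using three rotating buffers. For simplicity the compute stage here runs on one thread; in a real code it would be the inner parallel section, which needs nested parallelism or tasks. With these stand-in routines the printed checksum should be 70000 × 2 × (1 + 2 + ... + 100) = 707000000.

```fortran
program pipeline_sketch
  ! Illustrative three-stage pipeline; preamble/compute/postamble are
  ! hypothetical stand-ins for input, the real work, and output.
  implicit none
  integer, parameter :: n = 70000, nsteps = 100
  real(8), allocatable :: buf(:,:)
  real(8) :: checksum
  integer :: k

  allocate(buf(n, 0:2))            ! three rotating buffers
  checksum = 0.0d0
  ! Steps 1..nsteps+2: the two extra steps drain the pipeline.
  do k = 1, nsteps + 2
    !$omp parallel sections
    !$omp section
    if (k <= nsteps) call preamble(k, buf(:, mod(k, 3)))
    !$omp section
    if (k - 1 >= 1 .and. k - 1 <= nsteps) call compute(buf(:, mod(k - 1, 3)))
    !$omp section
    if (k - 2 >= 1) call postamble(buf(:, mod(k - 2, 3)), checksum)
    !$omp end parallel sections    ! implicit barrier: buffers change hands
  end do
  print '(a,es22.15)', 'checksum = ', checksum

contains

  subroutine preamble(step, x)     ! e.g. read input for this step
    integer, intent(in) :: step
    real(8), intent(out) :: x(:)
    x = real(step, kind=8)
  end subroutine preamble

  subroutine compute(x)            ! the expensive part
    real(8), intent(inout) :: x(:)
    x = 2.0d0 * x
  end subroutine compute

  subroutine postamble(x, acc)     ! e.g. write results
    real(8), intent(in) :: x(:)
    real(8), intent(inout) :: acc
    acc = acc + sum(x)
  end subroutine postamble

end program pipeline_sketch
```

Each stage touches only its own buffer during a step, so no locking is needed; the cost is one extra buffer per stage and two extra steps to fill and drain the pipeline.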

Jim Dempsey

---

As this example relies on floating point instructions, particularly if you find best performance with 1 thread for each of 2 cores, the threaded speedup of 1.7 is fairly typical. I have the same CPU here. You should see a significant advantage for setting /QxHost vs. omitting that option, but it may reduce the threading speedup somewhat.
