Hi,

I am using Ubuntu 20.04 on an Intel® Core™ i9-7900X CPU @ 3.30GHz × 20 machine with three NVIDIA Titan Xp GPUs. I have installed

1. the Intel oneAPI Base Toolkit and

2. the Intel oneAPI HPC Toolkit.

I wanted to test the installation by building the command-line sample projects with oneapi-cli.

The only Fortran sample project available, "OpenMP-offload-training", fails abruptly with the following message:

```
2022/02/17 15:26:56 unexpected EOF
```

I could build the C++ project, but the Fortran one fails.

Is this related to the installation, or is something wrong with my configuration?

I work mainly in Fortran, and it is critical for me to have ifort and mpifort working correctly.

Any help will be appreciated.

Regards,

keyur

Hi,

Thanks for reporting this to us.

We are working on your issue. We will get back to you soon.

Meanwhile, could you please try with the sample codes from Github? Try to git-clone and you can use the sample codes. Please find the below command for git cloning with the Intel oneAPI samples.

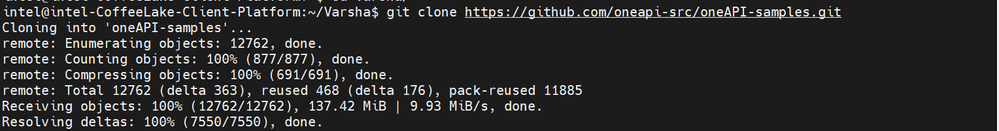

git clone https://github.com/oneapi-src/oneAPI-samples.gitPlease find the below screenshot for more information:

The "OpenMP-offload-training" code is at the following path:

```
oneAPI-samples/DirectProgramming/Fortran/Jupyter/OpenMP-offload-training
```

Thanks & Regards,

Varsha

Hi,

We are working on your issue internally and will get back to you soon.

Thanks & Regards,

Varsha

This is Linux, correct?

To test the compiler, create this simple Fortran program and name it 'hello.f90':

```fortran
program hello
  print *, "hello"
end program hello
```

Next, load the oneAPI environment:

```
source /opt/intel/oneapi/setvars.sh
```

then compile and run:

```
ifort -o hello hello.f90
./hello
```

That should work. If not, show us the error you get from the above.
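The compile-and-run steps above can be collected into one small script. This is a minimal sketch, not an Intel-provided tool: it assumes bash and the default install prefix /opt/intel/oneapi, and it only sources setvars.sh when ifort is not already on PATH.

```shell
#!/usr/bin/env bash
# Sketch of the ifort smoke test described above.
# Assumption: oneAPI uses the default install prefix /opt/intel/oneapi.
ONEAPI_SETVARS=/opt/intel/oneapi/setvars.sh

# Write the test program; a Fortran PROGRAM statement must carry a name.
cat > hello.f90 <<'EOF'
program hello
  print *, "hello"
end program hello
EOF

# Load the oneAPI environment only if ifort is not already on PATH.
if ! command -v ifort >/dev/null 2>&1 && [ -f "$ONEAPI_SETVARS" ]; then
  source "$ONEAPI_SETVARS" >/dev/null
fi

if command -v ifort >/dev/null 2>&1; then
  # Compile and run; any compiler or runtime error is printed as-is.
  ifort -o hello hello.f90 && ./hello
else
  echo "ifort not found; check the oneAPI installation and setvars.sh" >&2
fi
```

If the script prints "hello", the compiler and its runtime libraries are working; otherwise, the error text it prints is what to post back here.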

Next, let's test your MPI installation. Here is a sample program; name it 'hello_mpi.f90':

```fortran
PROGRAM hello_world_mpi
  include 'mpif.h'
  integer process_Rank, size_Of_Cluster, ierror, tag
  call MPI_INIT(ierror)
  call MPI_COMM_SIZE(MPI_COMM_WORLD, size_Of_Cluster, ierror)
  call MPI_COMM_RANK(MPI_COMM_WORLD, process_Rank, ierror)
  print *, 'Hello World from rank: ', process_Rank, 'of ', size_Of_Cluster,' total ranks'
  call MPI_FINALIZE(ierror)
END PROGRAM
```

Compile it:

```
mpiifort -o hello_mpi hello_mpi.f90
```

and run with 1 rank:

```
$ mpirun -n 1 ./hello_mpi
Hello World from rank: 0 of 1 total ranks
```

Then run with, oh, say, 4 ranks:

```
$ mpirun -n 4 ./hello_mpi
Hello World from rank: 3 of 4 total ranks
Hello World from rank: 0 of 4 total ranks
Hello World from rank: 2 of 4 total ranks
Hello World from rank: 1 of 4 total ranks
```
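The MPI build and the 1-rank and 4-rank runs above can likewise be scripted. This is a hedged sketch: it assumes the oneAPI environment (setvars.sh) has already been loaded so that mpiifort and mpirun on PATH are the Intel MPI wrappers, and it keeps going after a failed run so both rank counts are always tried.

```shell
#!/usr/bin/env bash
# Sketch of the MPI smoke test above: build once, then run with 1 and 4 ranks.
# Assumption: setvars.sh is already sourced, so mpiifort/mpirun are Intel MPI.

cat > hello_mpi.f90 <<'EOF'
program hello_world_mpi
  include 'mpif.h'
  integer process_Rank, size_Of_Cluster, ierror, tag
  call MPI_INIT(ierror)
  call MPI_COMM_SIZE(MPI_COMM_WORLD, size_Of_Cluster, ierror)
  call MPI_COMM_RANK(MPI_COMM_WORLD, process_Rank, ierror)
  print *, 'Hello World from rank: ', process_Rank, 'of ', size_Of_Cluster, ' total ranks'
  call MPI_FINALIZE(ierror)
end program hello_world_mpi
EOF

if command -v mpiifort >/dev/null 2>&1 && command -v mpirun >/dev/null 2>&1; then
  if mpiifort -o hello_mpi hello_mpi.f90; then
    for n in 1 4; do
      echo "--- mpirun -n $n ---"
      # Report a non-zero exit status (e.g. a rank killed by a signal)
      # instead of stopping, so both runs are always attempted.
      mpirun -n "$n" ./hello_mpi || echo "mpirun -n $n failed with status $?" >&2
    done
  else
    echo "mpiifort failed to compile hello_mpi.f90" >&2
  fi
else
  echo "mpiifort/mpirun not found; source /opt/intel/oneapi/setvars.sh first" >&2
fi
```

Ranks print in no fixed order, so the lines of the 4-rank run may appear shuffled, as in the output above.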

Thanks Ron,

ifort is fine. I have tested it with other simple Fortran programs.

mpiifort compiles the program, but the executable fails at run time with a segmentation fault:

```
$ mpirun -n 4 ./hello_mpi
===================================================================================
= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES
= RANK 0 PID 39556 RUNNING AT tt433gpu1
= KILLED BY SIGNAL: 9 (Killed)
===================================================================================
===================================================================================
= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES
= RANK 1 PID 39557 RUNNING AT tt433gpu1
= KILLED BY SIGNAL: 11 (Segmentation fault)
===================================================================================
===================================================================================
= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES
= RANK 2 PID 39558 RUNNING AT tt433gpu1
= KILLED BY SIGNAL: 9 (Killed)
===================================================================================
===================================================================================
= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES
= RANK 3 PID 39559 RUNNING AT tt433gpu1
= KILLED BY SIGNAL: 9 (Killed)
===================================================================================
```

Further, I tried debugging the application with gdb, and it seems to fail in the MPI_INIT call itself.

I built it with debug flags:

```
mpiifort -g -O0 -o hello_mpi hello_mpi.f90
```

and then ran it under gdb:

```
$ mpirun -n 1 gdb ./hello_mpi
GNU gdb (Ubuntu 9.2-0ubuntu1~20.04.1) 9.2
Copyright (C) 2020 Free Software Foundation, Inc.
License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>
This is free software: you are free to change and redistribute it.
There is NO WARRANTY, to the extent permitted by law.
Type "show copying" and "show warranty" for details.
This GDB was configured as "x86_64-linux-gnu".
Type "show configuration" for configuration details.
For bug reporting instructions, please see:
<http://www.gnu.org/software/gdb/bugs/>.
Find the GDB manual and other documentation resources online at:
<http://www.gnu.org/software/gdb/documentation/>.
For help, type "help".
Type "apropos word" to search for commands related to "word"...
Reading symbols from ./hello_mpi...
(gdb) b hello_world_mpi
Breakpoint 1 at 0x4038dc: file hello_mpi.f90, line 4.
(gdb) list
1 PROGRAM hello_world_mpi
2 include 'mpif.h'
3 integer process_Rank, size_Of_Cluster, ierror, tag
4 call MPI_INIT(ierror)
5 call MPI_COMM_SIZE(MPI_COMM_WORLD, size_Of_Cluster, ierror)
6 call MPI_COMM_RANK(MPI_COMM_WORLD, process_Rank, ierror)
7 print *, 'Hello World from rank: ', process_Rank, 'of ', size_Of_Cluster,' total ranks'
8 call MPI_FINALIZE(ierror)
9 END PROGRAM hello_world_mpi
(gdb) r
Starting program: /home/smec17045/hello_mpi
[Thread debugging using libthread_db enabled]
Using host libthread_db library "/lib/x86_64-linux-gnu/libthread_db.so.1".

Breakpoint 1, hello_world_mpi () at hello_mpi.f90:4
4 call MPI_INIT(ierror)
(gdb) n

Program received signal SIGSEGV, Segmentation fault.
0x00007ffff5710131 in ofi_cq_sreadfrom ()
   from /opt/intel/oneapi/mpi/2021.5.1//libfabric/lib/prov/libsockets-fi.so
(gdb)
```
