- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am doing a benchmark about vectorization on MacOS with the following processor i7 :

$ sysctl -n machdep.cpu.brand_string

Intel(R) Core(TM) i7-4960HQ CPU @ 2.60GHz

My MacBook Pro is from middle 2014.

I tried to use different flag options for vectorization : the 3 ones that interest me are SSE, AVX and AVX2.

For my benchmark, I add each element of 2 arrays and store the sum in a third array (array sizes vary from 32^3 to 32^5)

I must make you notice that I am working with `double` type for these arrays.

Here are the functions used into my benchmark code :

**1*) First with SSE vectorization :**

#elif __SSE__

#include <x86intrin.h>

#define ALIGN 16

void addition_array(int size, double *a, double *b, double *c)

{

int i;

// Main loop

for (i=size-1; i>=0; i-=2)

{

// Intrinsic SSE syntax

const __m128d x = _mm_load_pd(a); // Load two x elements

const __m128d y = _mm_load_pd(b); // Load two y elements

const __m128d sum = _mm_add_pd(x, y); // Compute two sum elements

_mm_store_pd(c, sum); // Store two sum elements

// Increment pointers by 2 since SSE vectorizes on 128 bits = 16 bytes = 2*sizeof(double)

a += 2;

b += 2;

c += 2;

}

}

**2*) Second with AVX256 vectorization :**

#ifdef __AVX__

#include <immintrin.h>

#define ALIGN 32

void addition_array(int size, double *a, double *b, double *c)

{

int i;

// Main loop

for (i=size-1; i>=0; i-=4)

{

// Intrinsic AVX syntax

const __m256d x = _mm256_load_pd(a); // Load two x elements

const __m256d y = _mm256_load_pd(b); // Load two y elements

const __m256d sum = _mm256_add_pd(x, y); // Compute two sum elements

_mm256_store_pd(c, sum); // Store two sum elements

// Increment pointers by 4 since AVX256 vectorizes on 256 bits = 32 bytes = 4*sizeof(double)

a += 4;

b += 4;

c += 4;

}

}

For SSE vectorization, I expect a Speedup equal around 2 because I align data on 128bits = 16 bytes = 2* sizeof(double).

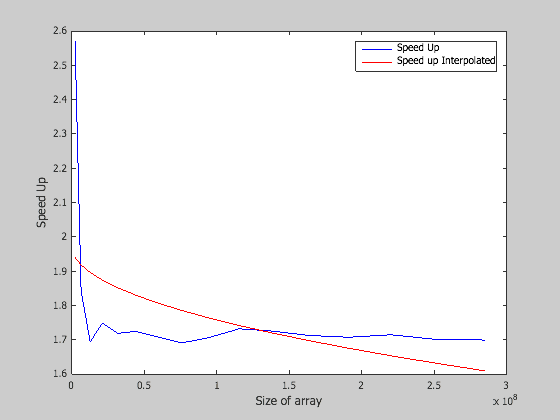

What I get in results for SSE vectorization is represented on the following figure :

So, I think these results are valid because SpeedUp is around factor 2.

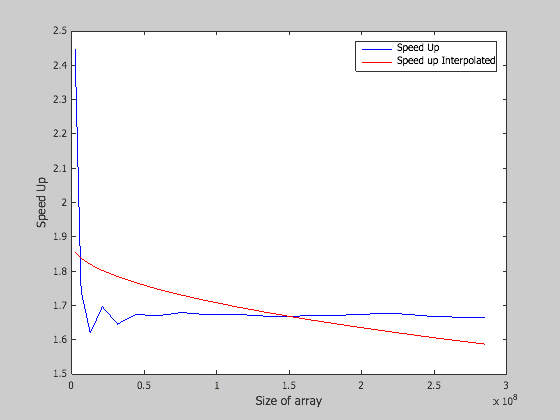

Now for AVX256, I get the following figure :

For AVX256 vectorization, I expect a Speedup equal around 4 because I align data on 256bits = 32 bytes = 4* sizeof(double).

But as you can see, I still get a `factor 2` and not `4` for SpeedUp.

I don't understand why I get the same results for Speedup with SSE and AVX

vectorization.

Does it come from "compilation flags", from my model of processor, ... I don't know.

Here are the compilation command line that I have done for all above results :

**For SSE :**

gcc-mp-4.9 -O3 -msse main_benchmark.c -o vectorizedExe

**For AVX256 :**

gcc-mp-4.9 -O3 -Wa,-q -mavx main_benchmark.c -o vectorizedExe

Entire code is available on : http://beulu.com/test_vectorization/main_benchmark.c.txt

and the shell script for benchmarking is http://beulu.com/test_vectorization/run_benchmark

Could anyone tell me why I get the same speedup between SSE and AVX (i.e a factor 2 between both) ?

Thanks for your help

- Tags:

- Intel® Advanced Vector Extensions (Intel® AVX)

- Intel® Streaming SIMD Extensions

- Parallel Computing

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If I understand your setup correctly, the smallest test cases have arrays that are much larger than the L2 cache, so performance may be limited by L3 bandwidth rather than by core performance.

- 32^3 is 32,768, so 3 arrays of 8 Byte elements will require 768KiB. This is much larger than the 256KiB L2 cache, but fits easily in the 6MiB L3.

- 32^5 is 33,554,432, so 3 arrays of 8 Byte elements will require 768MiB. This is much larger than the 6 MiB L3 cache.

For the Core i7-4960HQ, the memory is 2 channels of DDR3/1600 DRAM, with an aggregate peak bandwidth of 2*8*1.6=25.6 GB/s. Maximum sustained bandwidth should be in the range of 20.5 GB/s to 21.8 GB/s (80%-85%) for this combination of reads and writes. The bandwidth from the L3 cache will be higher, but I don't have any good measurements of this. If I had to guess, I would assume that one core could sustain about 16 Bytes/cycle (based on bandwidths measured on the Xeon E5 v3 processors), which is about 60.8 GB/s at the maximum Turbo frequency of 3.8 GHz.

Your kernel will require 4 memory accesses for each iteration -- 2 reads ("a" and "b"), an "allocate" (reading array "c"), and one writeback of the updated values of array "c". So each iteration will require 32 Bytes of traffic (whether from L3 or from memory), and each iteration has 1 floating-point operation. Bandwidth-limited execution time will therefore be something like:

- 32^3 = 768KiB, which will be L3-contained, so assume 60.8 GB/s, or about 13 microseconds.

- 32^5 = 768MiB, which will be in memory, so assume 21 GB/s, or about 38 milliseconds.

The interaction of vectorization and vector length with sustained bandwidth is quite complex, but it is certainly common for scalar code or SSE vectorization to deliver lower sustained bandwidth on Haswell-based processors. One part of the performance difference is the need to access the L1 Data Cache twice as many times when running SSE code, but there are other more subtle differences as well.

To see the difference in performance due to vectorization, it is best to shrink the problem size down to the point where it fills most of the L1 data cache. You will need to repeat the test many times to get execution times that are long enough to reliably measure, and you will need to be careful to write code that actually does something with the repeated iterations (to ensure that the compiler does not eliminate code that does not change the results).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

An alternative would be to change your test to increase the computation per memory fetch/store.

c = sqrt(a*a + b*b);

#ifdef __AVX__

#include <immintrin.h>

#define ALIGN 32

void addition_array(int size, double *a, double *b, double *c)

{

int i;

// Main loop

for (i=size-1; i>=0; i-=4)

{

// Add from StackOverflow

//__builtin_prefetch(c+32);

// Intrinsic AVX syntax

const __m256d x = _mm256_load_pd(a); // Load two x elements

const __m256d y = _mm256_load_pd(b); // Load two y elements

const __m256d sum = _mm256_sqrt_pd(_mm256_add_pd(_mm256_mul_pd(x,x), _mm256_mul_pd(y,y))); // Compute square root of sum of squares elements

_mm256_store_pd(c, sum); // Store two sum elements

// Increment pointers by 4 since AVX256 vectorizes on 256 bits = 32 bytes = 4*sizeof(double)

a += 4;

b += 4;

c += 4;

}

}

Above is untested code.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In addition to John's suggestion to be mindful of your dataset size, I also suggest to check whether your compiler options result in binary with no AVX to non-VEX SSE code transitions. GCC should be smart enough to avoid that if you specified -mavx, but it doesn't hurt to check.

Check section 9.12 Transitions between VEX and non-VEX modes of Anger Fog's Optimization Guide for more details.

Furthermore, pay attention to the false dependence between memory addresses with the same set and offset -- it is not possible to read and write simultaneously from addresses that are spaced by a multiple of 4 Kbytes without penalty.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I see that the code is only running each function one time. For a variety of reasons, code will typically run slower the first time through -- the instructions won't be in the instruction cache, the branch predictor won't have any experience with the loops, etc....

I recommend that you add a loop in main to repeat the computations many times --- keeping the timer values in an array until the end. Then you can compute the elapsed time for each repetition of the loop and compute statistics.

Igor's point about alignment is a good one -- there are many conflicts that occur when you try to do concurrent accesses to array elements that are separated by powers of two. It is often helpful to round down to the nearest multiple of 100 to avoid these conflicts.

The L1 Data Cache on your processor is 32 KiB, so it will only hold a total of 4096 doubles. Since you have three arrays, you would need an array size of 4096/3=1365 or less to get L1-resident behavior. Values in the range of 1000 to 1200 should work well, but you will definitely need to add a loop around the calls to the "addition_tab()" function to get the caches loaded and to get the timing large enough so that you can neglect the overhead of reading the timers.

Speaking of timers, I am not familiar with the mach timers you are using, but it is important to have an idea of the overhead and granularity of the timers, and to compare those with the elapsed times before making any conclusions about whether the measurements actually mean anything. For short intervals, the lowest overhead is obtained with inline assembly to execute the RDTSCP instruction. This typically has an overhead of ~30 cycles, but I don't recommend trying to understand any timings of less than 1000 cycles or so -- there is too much uncertainty associated with the processor's out-of-order execution mechanisms to make sense of shorter results.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

const __m256d x1 = _mm256_load_pd(a + 4); // Load more two x elements

const __m256d y1 = _mm256_load_pd(b + 4); // Load more two y elements

const __m256d sum1 = _mm256_add_pd(x1, y1); // Compute more two sum elements

_mm256_store_pd(c + 4, sum1); // Store two sum elements

a += 8;

b += 8;

c += 8;

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

With gcc, you may be able to achieve equivalent unrolling by -funroll-loops --param max-unroll-times=2. Some AVX and AVX2 instructions are more dependent than others on optimum unrolling for performance.

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page