- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello,

I am implementing an application which uses single threaded IPP and external parallelization via MS OpenMP.

Below you can find a piece of the source code which I used for some tests (the full code is attached to the post).

for (auto t = 1; t <= maxThreads; t++)

{

auto start = clock();

#pragma omp parallel default(shared) num_threads(t)

{

auto id = omp_get_thread_num();

auto buffer = buffers[id];

auto step = steps[id];

#pragma omp for schedule(dynamic, 1)

for (auto i = 0; i < count; i++)

ippiDivC_32f_C1IR(1.0f, buffer, step, roi);

}

auto stop = clock();

cout << "threads=" << t << " time=" << (stop - start) << endl;

}

The code of application is very simple. It just checks an execution time of calculation using IPP depending on the number of threads used for this processing.

For width=5000, height=5000 and count=100 I've obtained following results:

Intel Core i7-3770 CPU @ 3.40GHz

version=7.0 build 205.58 name=ippie9_l.lib

threads=1 time=982

threads=2 time=947

threads=3 time=945

threads=4 time=957

Intel Xeon CPU E5-1660 0 @ 3.30GHz

version=7.0 build 205.58 name=ippie9_l.lib

threads=1 time=988

threads=2 time=698

threads=3 time=679

threads=4 time=678

threads=5 time=678

threads=6 time=699

As you can see it is very difficult to get any significant speed up using multiple threads. My question is what is the reason of above behavior? Could you please tell me what is the bottleneck of described solution?

Thank you in advance for your help.

Krzysztof Piotrowski.

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Krzysztof Piotrowski,

It seems the main work ( ippiDivC_32f_C1IR(1.0f, buffer, step, roi); ) does not get distributed to multiple threads but duplicated that it multiplies the amount of work as the number of threads gets larger.

Please refer part 'Resizing a Tiled Image with One Prior Initialization' of this page ( https://software.intel.com/en-us/node/504353#TILING_IMAGE_WITH_ONE_INIT ) , there explains the idea of 'parallelization in one direction'. This example shows how to multithread resize operation using OpenMP with parallelization in Y direction.

Thank you.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Jon J K.

Thank you very much for answering my question.

I understand the tilling approach in image processing. But this is not the case which I described. I am not interested for parallelization so deep where multiple threads work on one image to process it. In my example parallelization is done one level higher - multiple threads work on different data and process them independently.

Maybe my example was not so clear but I would like to present it as simple as possible. Please imagine that the number of buffers is equal count and they are indexed in the loop by i (not by thread id). Also ippiDivC operation may be replaced by any sequence of IPP functions which work on independent buffers.

You wrote that in my example the amount of work is getting larger when number of threads is increasing. I wouldn't agree with you - in my opinion the amount of work is constant, independent on number of threads and equals count. Using parallel for from OpenMP the whole work is just separated into multiple threads.

The question is still open - what is the reason of weak parallelization level for larger number of threads.

Thank you in advance for your support.

Best regards, Krzysztof Piotrowski.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Krzysztof, In opposite, I see almost 100%-effective speedup. In between start= and stop= for 4 threads you processes 4 buffers instead of 1 for 1 thread.

Execution time is almost the same ~950 clocks (Core i7), but the amount of work is 4 times bigger.

If you add processed byte count, you could see something like (just an example):

threads=1 time=4753, bytes processed 2.5e+009

threads=2 time=2761, bytes processed 5e+009

threads=3 time=2781, bytes processed 7.5e+009

threads=4 time=2778, bytes processed 1e+010

threads=5 time=2779, bytes processed 1.25e+010

threads=6 time=2788, bytes processed 1.5e+010

threads=7 time=2785, bytes processed 1.75e+010

threads=8 time=2792, bytes processed 2e+010

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Sergey.

I cannot agree with you, the number of processed pixels is always the same width*height*count. It is because the number of all interations in the loop is equal count and OpenMP just dispatches the whole work into threads (see directive: #pragma omp for).

When number of threads is equal 1:

- thread 0 computes width*height*count pixels.

When number of threads is equal 2 (*):

- thread 0 computes with*height*(count/2) pixels,

- thread 1 computes with*height*(count/2) pixels.

When number of threads is equal n (*):

- thread 0 computes with*height*(count/n) pixels,

- thread 1 computes with*height*(count/n) pixels,

...

- thread n-1 computes with*height*(count/n) pixels.

(*) This is a model situation when all threads are equally utilized and count % n == 0.

I hope that now you will agree with me.

Greetings, Krzysztof Piotrowski

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Krzysztof,

You're right, sorry.

So, the only explanation I could have is data race condition, when different threads write to the same locations.

Change IPP read/write function to read-only, like:

Ipp64f sum = 0; ippiSum_32f_C1R(buffer, step, roi, &sum, ippAlgHintAccurate);

and check.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sergey,

I performed additional tests for checking your suggestion.

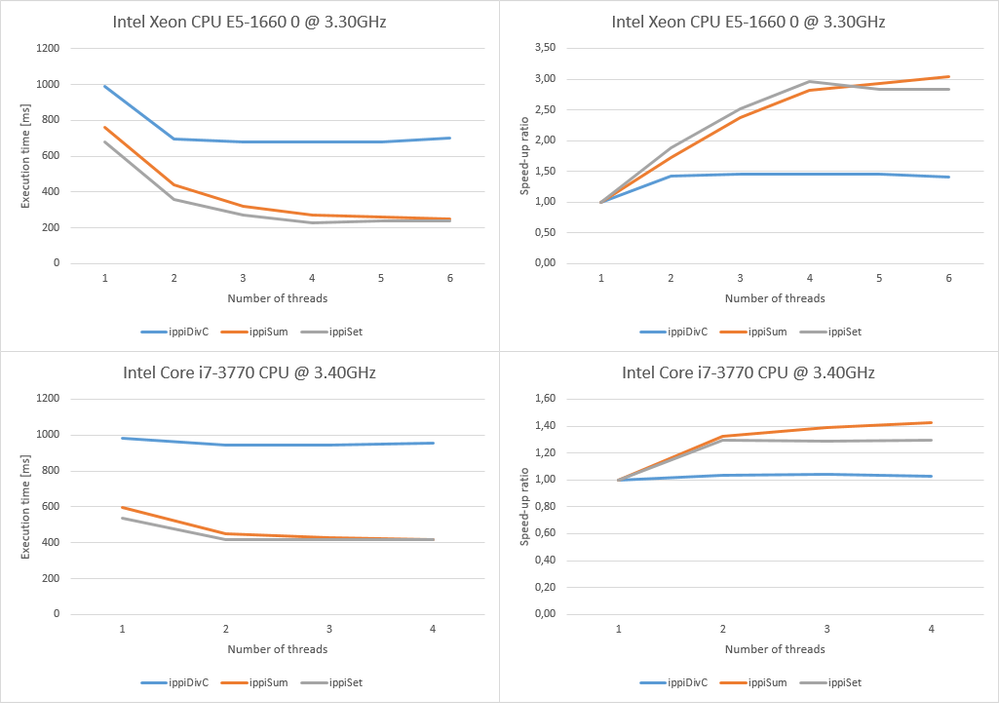

For read-only function ippiSum_32f_C1R I have following results:

Intel Core i7-3770 CPU @ 3.40GHz

threads=1 time=596

threads=2 time=451

threads=3 time=429

threads=4 time=417

Intel Xeon CPU E5-1660 0 @ 3.30GHz

threads=1 time=760

threads=2 time=440

threads=3 time=320

threads=4 time=270

threads=5 time=260

threads=6 time=250

For write-only function ippiSet_32f_C1R I have:

Intel Core i7-3770 CPU @ 3.40GHz

threads=1 time=539

threads=2 time=417

threads=3 time=419

threads=4 time=415

Intel Xeon CPU E5-1660 0 @ 3.30GHz

threads=1 time=680

threads=2 time=360

threads=3 time=270

threads=4 time=230

threads=5 time=240

threads=6 time=240

All results including speed-up ratio (single-threaded / multi-threaded) can be seen on following image:

Now I understand that the bottleneck of the whole process is the the access to data stored in RAM. Am I right?

If so, is there any known technique which could be adapted to avoid memory transfer limitation and increase the parallelization level?

Thank you in advanfor your comments.

Greetings Krzysztof Piotrowski.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Krzysztof,

The best technique for successful parallelization is to make threads work with totally different data. Only in this case there will be no locks. Better if threads play in their own sandboxes for long time.

The next important topic is amount of data your thread needs to work with. I am not expert in parallelization, but as I think, it's better if a particular thread will work with operational data (the area most often accessed by thread) not greater than L2 cache size / number of HW cores.

There's something related to thread affinity in Intel OpenMP docs. Because, your case uses huge image size, there's a lot of cache misses, data transfers between cache and main memory. When a thread travelling from one CPU core to another - it happens if you haven't "nailed" thread to CPU core - the memory system needs to update the whole L1 cache for target core, and so on... I don't even know what happens.)).

Working with big images is faster and better scalable with slices. But, for 5Kx5K images of float data, even slices might not help. Very roughly, if you have 8 core CPU with 8MB of L2 cache, the best slice size for 5K width data is about 8MB / 8 cores / 5000 / 4 (sizeof float) = 50 lines. Very roughly))

Again it's not your case, if you want to parallel it higher level than simple slicing, but nevertheless keep the working data independent and compact.

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page