Hello,

I want to use the Intel Distribution for GDB to debug core dump files. However, when I use mpirun to run my program on multiple nodes with core dump generation enabled, the results confuse me.

Sometimes, the core dump files were generated correctly.

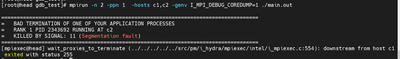

Other times, the core dump files were only generated on c2 node,and the ranks on c1 were killed without core dump files, as shown in the figure below.

So, does mpirun have a mechanism that kills the other ranks or processes when a core dump is happening, like Open MPI does?

Thanks.

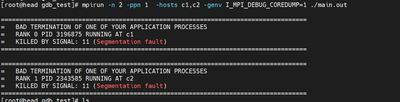

Here is the figure for "Sometimes, the core dump files were generated correctly."

Hi,

Thank you for posting in Intel Communities.

Could you please provide the following details so we can investigate your issue further?

- OS details

- The Intel MPI library version you are using.

- Sample reproducer code to try reproducing your issue from our end.

- Did you get any dump files generated after running the program using I_MPI_DEBUG_COREDUMP=1?

Thanks & Regards,

Santosh

1. OS details

My OS is CentOS 8.3.

2. The Intel MPI library version you are using.

The Intel MPI library version is 2021.5.

3. Sample reproducer code to try reproducing your issue from our end.

The code:

```c
/* File: mpi_sum.c
 * Compile as: mpicc -g -Wall -std=c99 -o mpi_sum mpi_sum.c -lm
 * Description: An MPI solution to sum a 1D array. */

#include <stddef.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <mpi.h>
#include <time.h>

int main(int argc, char *argv[]) {
    int myID, numProcs;   // myID: rank of this process, used to select its sub-range
                          // numProcs: number of processes doing the calculation
    double localSum;      // partial sum computed by this rank
    double parallelSum;   // global sum collected on rank 0
    int length = 10000000; // total number of terms
    double Fact = 1;
    int i;                // loop index
    clock_t clockStart, clockEnd; // timer

    srand(5);             // seed the random number generator (not used below)

    MPI_Init(NULL, NULL); // initialize MPI
    MPI_Comm_size(MPI_COMM_WORLD, &numProcs); // get the number of processes
    MPI_Comm_rank(MPI_COMM_WORLD, &myID);     // get this process's rank

    localSum = 0.0;       // each rank starts from 0

    int A = (length / numProcs) * ((long)myID);     // first index of this rank's range
    int B = (length / numProcs) * ((long)myID + 1); // one past the last index
    A++;                  // shift the range so it starts at 1
    B++;

    clockStart = clock(); // start the timer

    for (i = A; i < B; i++) {
        // All operands are int, so this is integer division. On rank 0,
        // 1 / myID divides by zero, which typically raises SIGFPE on x86 Linux;
        // this is what crashes the program and produces the core dump.
        Fact = (1 / myID - 1 / numProcs) / (1 - 1 / numProcs);
        localSum += Fact;
    }

    MPI_Reduce(&localSum, &parallelSum, 1, MPI_DOUBLE, MPI_SUM, 0, MPI_COMM_WORLD);

    clockEnd = clock();

    if (myID == 0) {
        printf("Time to sum %d floats with MPI in parallel %3.5f seconds\n", length, (clockEnd - clockStart) / (float)CLOCKS_PER_SEC);
        printf("The parallel sum: %f\n", parallelSum + 1);
    }

    MPI_Finalize();
    return 0;
}
```

4. Did you get any dump files generated after running the program using I_MPI_DEBUG_COREDUMP=1?

Yes, I got a core dump file after setting I_MPI_DEBUG_COREDUMP=1. But when I run the program on multiple nodes, only a single core dump file is generated, on one node; the other ranks don't produce any core dump files.
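Reading the loop in mpi_sum.c, the only divisors are `myID`, `numProcs`, and `(1 - 1 / numProcs)`, and the whole right-hand side is evaluated in `int` arithmetic before being stored in the `double` `Fact`. The sketch below uses hypothetical helper names (`fact_divides_by_zero`, `fact_value`, not part of the original reproducer) to check which ranks hit a division by zero. For any run with more than one process, `1 / numProcs` is 0, so the denominator is 1 and only rank 0 divides by zero. With this listing, then, only rank 0 is expected to fault on its own (typically with SIGFPE on x86 Linux); the other ranks do not fault, so whether they leave a core file depends on how the launcher reacts to rank 0's failure.

```c
/* Hypothetical helpers (not part of the original reproducer) that mirror the
 * integer expression evaluated in the loop of mpi_sum.c:
 *     Fact = (1 / myID - 1 / numProcs) / (1 - 1 / numProcs);
 * All operands are int, so every '/' is integer division. */

/* Returns 1 if evaluating the expression would divide by zero, 0 otherwise. */
int fact_divides_by_zero(int myID, int numProcs)
{
    if (myID == 0 || numProcs == 0)
        return 1;                   /* 1 / myID or 1 / numProcs */
    /* For numProcs >= 1 the denominator is 0 only when numProcs == 1. */
    return (1 - 1 / numProcs) == 0;
}

/* Evaluates the expression with the same integer semantics.
 * Precondition: fact_divides_by_zero(myID, numProcs) == 0. */
int fact_value(int myID, int numProcs)
{
    return (1 / myID - 1 / numProcs) / (1 - 1 / numProcs);
}
```

For example, with `-np 4`, `fact_divides_by_zero(0, 4)` returns 1, while ranks 1, 2 and 3 evaluate `Fact` to 1, 0 and 0, respectively, without faulting.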

Hello,

I retested it and ran the program (mpi_sum) with OpenMPI.

It works correctly and generates multiple core dump files (one core dump file per rank).

So, could you check whether Intel MPI has a limitation on generating core dump files?

Thanks.

Hi,

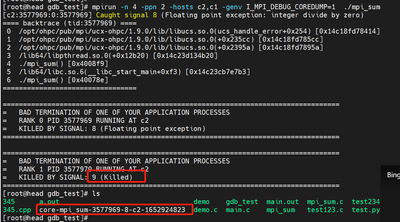

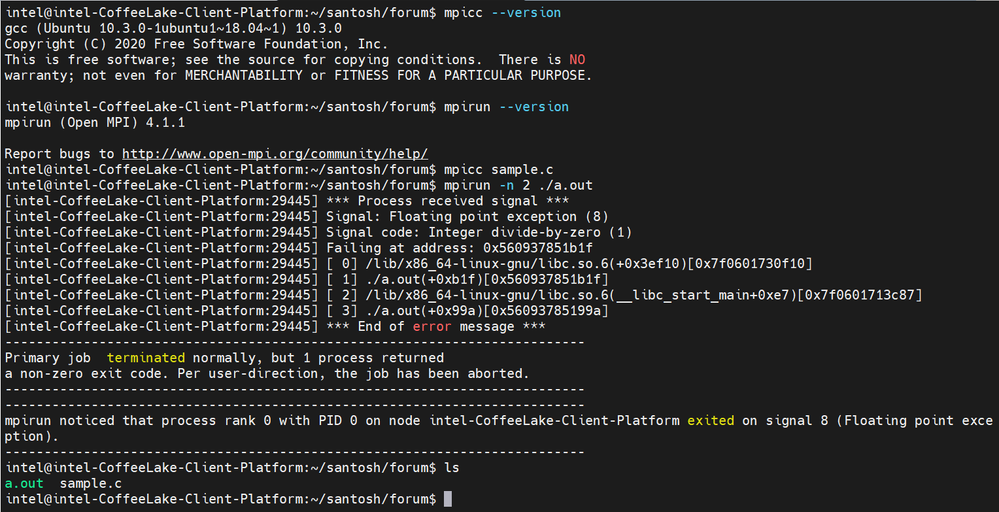

We were able to reproduce your issue from our end using the Intel MPI Library 2021.6 on a Ubuntu 18.04 machine as shown in the below screenshot:

We will check internally if Intel MPI has any limitations to generate the core dump file and we will get back to you soon.

Meanwhile, could you please provide the steps(compilation/execution commands) you followed for testing using OpenMPI?

Thanks & Regards,

Santosh

Hi,

We haven't heard back from you.

>>"I retest it and run the program(mpi_sum) by using OpenMPI."

Could you please provide the steps you followed to test your application with OpenMPI and get a core dump file for each rank?

Thanks & Regards,

Santosh

Hi,

Sorry for my late reply.

The compilation step is the same for OpenMPI.

The run command is:

```shell
mpiexec -np 4 --host c1,c2 ./mpi_sum
```

After running the command, four core dump files are generated in the directory.

Thanks.

Hi,

We have tested your sample program using OpenMPI, but we were not able to get the core dump files.

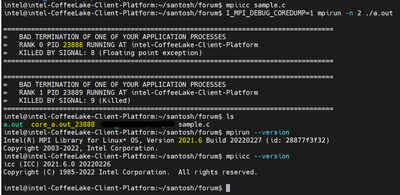

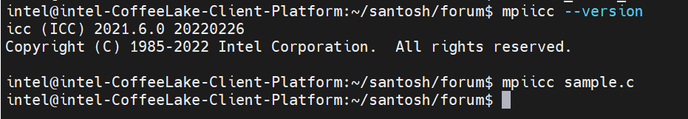

Please refer to the screenshot below for the steps that we followed:

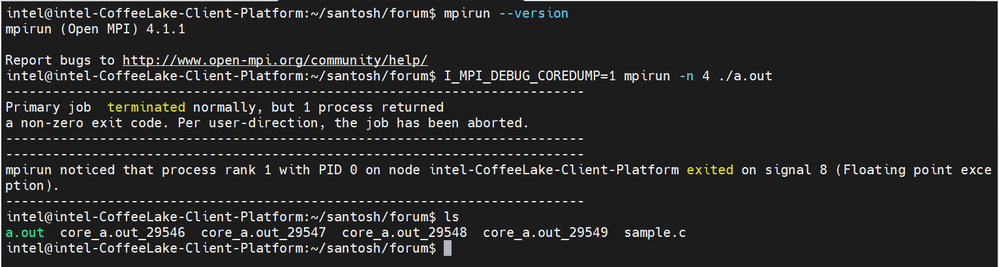

However, we tried another way of implementing it.

- Compile the MPI code using Intel MPI Library's mpiicc.

mpiicc sample.c - Now, run the executable using mpirun/mpiexec of the OpenMPI runtime environment.

I_MPI_DEBUG_COREDUMP=1 mpirun -n 2 ./a.out - We can get the core dump files for each rank.

Please refer to the below screenshot for the steps that we followed:

Thanks & Regards,

Santosh

Thanks for showing me another way to do it; with it, OpenMPI does generate a core dump file for each rank.

If you find out what limitations Intel MPI has on generating core dump files, please let me know.

Thank you very much.

Hi,

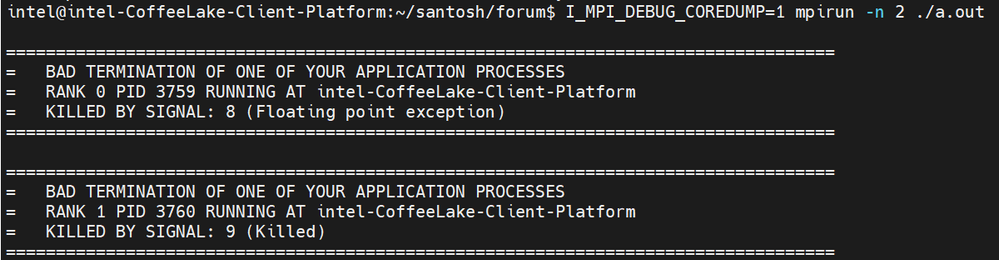

As you can see from the screenshot below when one of the processes is being terminated, it would kill the other processes passively i.e Intel MPI has the mechanism to kill other ranks or other processes when a core dump is happening.
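The behaviour described above can be illustrated with a small, self-contained POSIX sketch. It is a hypothetical mini-launcher written for this thread (`launch_and_reap` and `kill_and_reap` are made-up names), not Intel MPI's Hydra process manager, and the signal it sends to the surviving ranks (SIGKILL) is an assumption of the sketch, not necessarily what mpirun sends. What it shows: the rank that faults is terminated by a signal whose default action writes a core file, while the siblings that the launcher kills with SIGKILL (default action: plain termination) never get a chance to dump core. To keep the demo clean, the crashing child sets its own core size limit to 0.

```c
#define _POSIX_C_SOURCE 200809L
#include <signal.h>
#include <stdlib.h>
#include <sys/resource.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define MAX_RANKS 64

/* Send SIGKILL to the first n children in pids[] and reap them. */
static void kill_and_reap(const pid_t *pids, int n)
{
    for (int r = 0; r < n; r++)
        kill(pids[r], SIGKILL);
    for (int r = 0; r < n; r++)
        waitpid(pids[r], NULL, 0);
}

/* Hypothetical mini-launcher: forks nranks "ranks"; rank crash_rank aborts,
 * the others wait forever as if blocked in a collective such as MPI_Reduce.
 * When the first child terminates abnormally, the rest are SIGKILLed.
 * Returns how many siblings were terminated by SIGKILL, or -1 on error. */
int launch_and_reap(int nranks, int crash_rank)
{
    pid_t pids[MAX_RANKS];

    if (nranks < 1 || nranks > MAX_RANKS || crash_rank < 0 || crash_rank >= nranks)
        return -1;

    for (int r = 0; r < nranks; r++) {
        pid_t pid = fork();
        if (pid < 0) {                     /* fork failed: clean up earlier children */
            kill_and_reap(pids, r);
            return -1;
        }
        if (pid == 0) {                    /* child process */
            if (r == crash_rank) {
                /* Disable core files for the demo; a real faulting rank would
                 * dump core here if its core size limit allowed it. */
                struct rlimit no_core = { .rlim_cur = 0, .rlim_max = 0 };
                setrlimit(RLIMIT_CORE, &no_core);
                abort();                   /* SIGABRT: default action is core dump */
            }
            for (;;)
                pause();                   /* stand-in for a rank stuck in MPI_Reduce */
        }
        pids[r] = pid;
    }

    /* In this demo only the aborting rank can exit on its own. */
    int status;
    pid_t first = waitpid(-1, &status, 0);
    if (first < 0) {
        kill_and_reap(pids, nranks);
        return -1;
    }

    int abnormal = WIFSIGNALED(status) ||
                   (WIFEXITED(status) && WEXITSTATUS(status) != 0);

    int killed = 0;
    for (int r = 0; r < nranks; r++) {
        if (pids[r] == first)
            continue;
        if (abnormal)
            kill(pids[r], SIGKILL);        /* siblings never get to dump core */
        if (waitpid(pids[r], &status, 0) == pids[r] &&
            WIFSIGNALED(status) && WTERMSIG(status) == SIGKILL)
            killed++;
    }
    return killed;
}
```

For example, `launch_and_reap(4, 0)` returns 3: rank 0 aborts on its own and the launcher kills the three remaining ranks, none of which leaves a core file.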

Thanks & Regards,

Santosh

Hi,

Thank you for your reply; it helps me a lot.

Hi,

Could you please confirm whether we can close this issue?

Thanks & Regards,

Santosh

Hi,

We assume that your issue is resolved. If you need any additional information, please post a new question as this thread will no longer be monitored by Intel.

Thanks & Regards,

Santosh
