
I have attached two FORTRAN codes, one serial and one parallel. They both give the same (correct) solution to the Laplace equation and take roughly the same number of iterations to reach it.

I'm running this on Windows 10 Pro on a desktop with an Intel Core i5-10600K (6 cores, 12 threads). I am using Visual Studio with Intel oneAPI Base and HPC...

Serial run (execution time ~15 seconds):

>ifort ser.f

>ser

Parallel run (execution time ~2125 seconds):

>mpif77 par.f

>mpiexec -np 12 par

This is very discouraging, considering I am just starting out in MPI.

My question is as follows: am I doing something wrong in the parallel code, in MPI, that slows things down so drastically? Is there anything I can do to speed the code up?

I also ran the code with 2, 6 and 24 processes. Generally, the more processes I use, the longer it takes. Something is wrong with my MPI program.

Thank you in advance for considering this issue.



Hi,

Thanks for reaching out to us.

We are able to reproduce the issue at our end. We are working on it and will get back to you soon.

Thanks & Regards

Shivani


Hi,

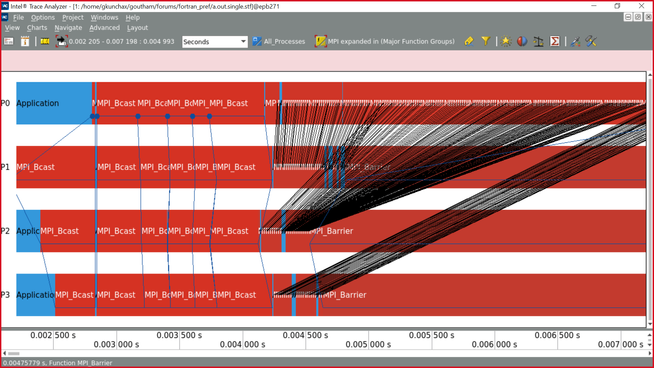

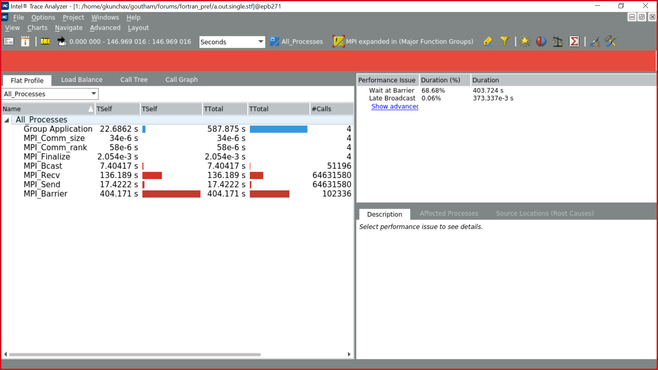

Thanks for providing the source code. According to the Intel Trace Analyzer and Collector (ITAC) results, your code spends a long time in MPI_Barrier. We suggest modifying the code accordingly; please refer to the screenshots above.
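One common way to act on this for a Jacobi-type Laplace solver is to drop the per-node sends and the barrier, give each process a block of rows plus one ghost row on each side, and exchange only whole boundary rows with its two neighbours once per iteration (in MPI this is usually done with MPI_Sendrecv). The sketch below simulates that pattern serially in Python, with a list of blocks standing in for MPI ranks. It is an illustration under stated assumptions, not the attached code: the function names, grid size, boundary values and row decomposition are all hypothetical.

```python
# Hypothetical sketch: serial simulation of a row-strip domain decomposition
# with ghost-row (halo) exchange for Jacobi iteration on the Laplace equation.


def jacobi_step(t):
    """One Jacobi sweep over rows 1..len-2 and columns 1..m-2; edges are copied unchanged."""
    new = [row[:] for row in t]
    m = len(t[0])
    for i in range(1, len(t) - 1):
        for j in range(1, m - 1):
            new[i][j] = 0.25 * (t[i - 1][j] + t[i + 1][j] + t[i][j - 1] + t[i][j + 1])
    return new


def jacobi_serial(grid, iters):
    """Reference: sweep the whole grid; rows 0 and n-1 act as fixed boundaries."""
    t = [row[:] for row in grid]
    for _ in range(iters):
        t = jacobi_step(t)
    return t


def jacobi_decomposed(grid, iters, nranks):
    """Same iteration, but each simulated rank owns a strip of interior rows."""
    n = len(grid)
    interior = n - 2
    if not 1 <= nranks <= interior:
        raise ValueError("need between 1 and n-2 simulated ranks")
    bounds, start = [], 1
    for r in range(nranks):
        count = interior // nranks + (1 if r < interior % nranks else 0)
        bounds.append((start, start + count - 1))  # first/last owned global row
        start += count
    # Local block = owned rows plus one ghost row above and one below.
    blocks = [[row[:] for row in grid[lo - 1:hi + 2]] for lo, hi in bounds]
    for _ in range(iters):
        # Halo exchange: one whole-row "message" per neighbour and direction,
        # instead of one message per grid node.
        for r in range(nranks - 1):
            blocks[r][-1] = blocks[r + 1][1][:]  # bottom ghost <- neighbour's first owned row
            blocks[r + 1][0] = blocks[r][-2][:]  # top ghost <- neighbour's last owned row
        blocks = [jacobi_step(b) for b in blocks]
    # Gather: fixed boundary rows plus every rank's owned rows, in order.
    result = [grid[0][:]]
    for b in blocks:
        result.extend(row[:] for row in b[1:-1])
    result.append(grid[-1][:])
    return result


if __name__ == "__main__":
    n, m = 10, 8
    grid = [[100.0] * m] + [[0.0] * m for _ in range(n - 1)]  # hot top edge
    print(jacobi_decomposed(grid, 200, 3) == jacobi_serial(grid, 200))  # True
```

Each simulated rank only reads values it received in the exchange, so no global barrier is involved; in a real MPI code each MPI_Sendrecv already waits for the matching neighbour. In Fortran, which stores arrays in column-major order, splitting the grid by columns instead of rows makes each boundary column t(:,j) contiguous in memory, so it can be passed to MPI_Sendrecv directly without packing.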

Thanks & Regards

Shivani


Thank you for your reply.

The underlying reason that MPI_Barrier was making the code wait so long was that MPI_Send and MPI_Recv were called for every node. When I remove MPI_Barrier (after each task), it takes even longer!

Here is what I have changed: MPI_Send and MPI_Recv are no longer called for every entry of the solution array. Instead, a new array holding all the entries (for a given processor and a given task) is sent and received at once, and only then is the data transferred to the solution array t(i,j).

The code now runs only 1.3 times slower than the serial code, compared to 140 times slower before.
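The size of this improvement is in line with the standard latency-bandwidth ("alpha-beta") cost model, in which every message costs a fixed start-up latency alpha plus a per-byte cost beta. The sketch below compares sending N values as N separate messages with sending them as one message. The latency and bandwidth figures are hypothetical placeholders, not measurements from this machine or from Intel MPI.

```python
# Hypothetical sketch: latency-bandwidth ("alpha-beta") model of message cost.
# ALPHA and BETA are placeholder values, not measurements of any real system.

ALPHA = 1e-6     # assumed start-up latency per message, seconds
BETA = 1e-10     # assumed transfer time per byte, seconds (about 10 GB/s)
VALUE_BYTES = 8  # one double-precision value


def per_element_time(n_values, alpha=ALPHA, beta=BETA):
    """n_values separate messages: the latency is paid once per value."""
    return n_values * (alpha + beta * VALUE_BYTES)


def aggregated_time(n_values, alpha=ALPHA, beta=BETA):
    """One message carrying all n_values: the latency is paid only once."""
    return alpha + beta * VALUE_BYTES * n_values


if __name__ == "__main__":
    n = 10_000  # e.g. the number of entries one process owns (hypothetical)
    slow, fast = per_element_time(n), aggregated_time(n)
    print(f"per element: {slow:.2e} s, aggregated: {fast:.2e} s, ratio ~{slow / fast:.0f}x")
```

With many small messages the latency term dominates and is paid once per value; a single buffer pays it once per message, leaving mostly the bandwidth term. The real ratio also depends on the MPI implementation and on how the code is structured, so the model only shows the direction of the effect.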

I have attached the current code for the benefit of others.

I assume that this is still slower than the serial equivalent because very few computations are carried out (each update only takes the average of the four surrounding node values, a single line of computation). I assume the overhead of the parallel code, with all the additional subroutine calls, outweighs the advantage of running it on more processors.

I also assume that for my actual code, a more computationally intensive 3-D fluid solver, I should get a speedup from the parallel code. I will find out soon.
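Whether the parallel version wins comes down to how much work each process does per iteration compared with how much it must communicate. The rough model below, with hypothetical constants, treats one iteration as local compute time plus the exchange of two boundary faces with neighbouring processes. With the same communication, raising the assumed cost per grid point (t_point) pushes the modelled speedup toward the number of processes, which is consistent with the expectation that a heavier 3-D solver should benefit more. It is a sketch for reasoning about trends, not a prediction for this machine.

```python
# Hypothetical per-iteration cost model for a stencil solver on an n**dim grid
# split into p slabs along one axis. All constants are placeholder assumptions;
# the model ignores synchronization, load imbalance and memory-bandwidth limits.

ALPHA = 1e-6     # assumed latency per message, seconds
BETA = 1e-10     # assumed transfer time per byte, seconds
VALUE_BYTES = 8  # one double-precision value


def iteration_time(n, p, t_point, dim=2):
    """Compute time for one slab plus exchange of its two boundary faces."""
    compute = (n ** dim / p) * t_point
    if p == 1:
        return compute  # serial run: no neighbours, no messages
    face = n ** (dim - 1)  # values in one boundary row (2-D) or plane (3-D)
    # roughly two message times: one exchange with each neighbour
    halo = 2 * (ALPHA + BETA * VALUE_BYTES * face)
    return compute + halo


def speedup(n, p, t_point, dim=2):
    """Modelled serial time divided by modelled parallel time."""
    return iteration_time(n, 1, t_point, dim) / iteration_time(n, p, t_point, dim)


if __name__ == "__main__":
    # t_point: assumed time to update one grid point (cheap stencil vs. heavier solver)
    for t_point in (1e-9, 1e-7):
        print(f"t_point={t_point:g}: 2-D speedup on 6 processes ~{speedup(200, 6, t_point):.2f}")
```

The model only includes compute and neighbour exchange. If measured times are much worse than such an estimate, other costs, for example extra synchronization or moving more data per iteration than the boundary faces, are the likely culprits.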

With that said, if you have any additional feedback, please do not hesitate to add it. Otherwise, you can close this thread.


Hi,

Parallel code pays off when there is a large amount of computation to distribute. Since your code carries out very few computations, the parallel version can take somewhat longer than the serial one.

We are glad that your issue has been resolved. We will no longer respond to this thread. If you require any additional assistance from Intel, please start a new thread. Any further interaction in this thread will be considered community only.

Have a good day.

Thanks & Regards

Shivani
