Settings:

Software: WRF version 4.1 compiled with icc, ifort, mpiicc and mpiifort

Intel MPI Version: oneapi/mpi/2021.2.0

OFED Version: version5.3

UCX Version: UCT version=1.10.0 revision 7477e81

Launch Parameter:

source /opt/intel/oneapi/setvars.sh intel64

ulimit -l unlimited

ulimit -s unlimited

export WRFIO_NCD_LARGE_FILE_SUPPORT=1

export KMP_STACKSIZE=20480000000

export I_MPI_FABRICS=shm:ofi

export FI_PROVIDER=mlx

export I_MPI_OFI_LIBRARY_INTERNAL=1

export I_MPI_PIN_RESPECT_HCA=enable

export OMP_NUM_THREADS=4

mpirun -np 64 -machinefile hostfile ./wrf.exe

Error message:

Timing for Writing wrfout/wrfout_d01_2020-07-14_12:00:00 for domain 1: 2.01455 elapsed seconds

Input data is acceptable to use: wrfbdy_d01

Timing for processing lateral boundary for domain 1: 0.37036 elapsed seconds

[cu03:3681 :0:3681] Caught signal 11 (Segmentation fault: address not mapped to object at address 0x7fff85190ab0)

==== backtrace (tid: 3681) ====

0 0x0000000000056d69 ucs_debug_print_backtrace() ???:0

1 0x0000000000012dd0 .annobin_sigaction.c() sigaction.c:0

2 0x000000000145c55b solve_em_() ???:0

3 0x00000000012b4e40 solve_interface_() ???:0

4 0x000000000057c76b module_integrate_mp_integrate_() ???:0

5 0x0000000000417171 module_wrf_top_mp_wrf_run_() ???:0

6 0x0000000000417124 MAIN__() ???:0

7 0x00000000004170a2 main() ???:0

8 0x00000000000236a3 __libc_start_main() ???:0

9 0x0000000000416fae _start() ???:0

=================================

Information that may be useful:

$ucx_info -d | grep Transport

# Transport: posix

# Transport: sysv

# Transport: self

# Transport: tcp

# Transport: tcp

# Transport: rc_verbs

# Transport: rc_mlx5

# Transport: dc_mlx5

# Transport: ud_verbs

# Transport: ud_mlx5

# Transport: cma

# Transport: knem

$ibstat

CA 'mlx5_0'

CA type: MT4123

Number of ports: 1

Firmware version: 20.30.1004

Hardware version: 0

Node GUID: 0xb8cef60300025b8c

System image GUID: 0xb8cef60300025b8c

Port 1:

State: Active

Physical state: LinkUp

Rate: 200

Base lid: 1

LMC: 0

SM lid: 19

Capability mask: 0x2651e84a

Port GUID: 0xb8cef60300025b8c

Link layer: InfiniBand

Please find attached the logs generated with "export I_MPI_DEBUG=10" set.

Any suggestions are appreciated!

Hi,

Thanks for reaching out to us.

Could you please provide your CPU information using the command below:

cpuinfo -g

We also need the cluster information, i.e., how many nodes are being used.

Also, could you please check whether you can run any MPI application other than WRF on your cluster?

Best Regards,

Santosh

Hi Santosh,

Sorry for the late reply. The issue was resolved by setting

ulimit -l unlimited

ulimit -s unlimited

export KMP_STACKSIZE=20480000000

on every compute node. I had previously run wrf.exe as root, and the compute nodes did not share the environment settings.

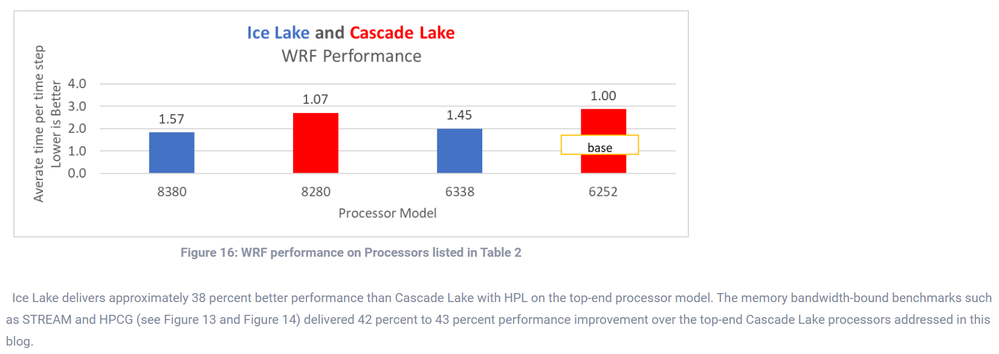
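A minimal sketch of one way to apply these settings on every node, assuming the limits are not already raised system-wide: a small wrapper script that each MPI rank runs before the real executable. The file name `wrf_env.sh` and the function name `apply_wrf_limits` are hypothetical; the values are the ones quoted above.

```shell
#!/bin/bash
# wrf_env.sh (hypothetical name): set per-process limits, then run the real program.

apply_wrf_limits() {
    # Raising a limit fails if the node's hard limit is lower, so warn instead of aborting.
    ulimit -l unlimited 2>/dev/null || echo "warning: could not raise locked-memory limit" >&2
    ulimit -s unlimited 2>/dev/null || echo "warning: could not raise stack limit" >&2
    # Per-thread stack size for OpenMP threads, as used in this thread.
    export KMP_STACKSIZE=20480000000
}

apply_wrf_limits

# If a command was passed, replace this shell with it so it inherits the limits.
if [ "$#" -gt 0 ]; then
    exec "$@"
fi
```

With this in place the launch line would become something like `mpirun -np 64 -machinefile hostfile ./wrf_env.sh ./wrf.exe`, so the limits are set inside each rank's own process rather than only in the login shell on the first node.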

However, I have another question. I'm using "Intel(R) Xeon(R) Platinum 8358, 64 cores per socket". Are there any compiler options I could add to improve WRF performance on the Ice Lake platform and fully utilize the Ice Lake CPU?

According to this page, https://infohub.delltechnologies.com/p/intel-ice-lake-bios-characterization-for-hpc/

WRF delivered a substantial performance improvement on the Ice Lake platform compared with the Cascade Lake platform.

It seems that the faulting instruction pointer (IP) is at frame #2: 0x000000000145c55b solve_em_() ???:0.

The faulting address, 0x7fff85190ab0, looks like it lies in the stack address space.

If possible, a core dump (if one was collected) may help with further analysis.
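One quick way to test the stack hypothesis, sketched here under the assumption that a small script can be launched on every node, is to print the limits each process actually sees. The function name `report_limits` and the file name `check_limits.sh` are hypothetical.

```shell
#!/bin/bash
# check_limits.sh (hypothetical name): show the limits visible to this process.

report_limits() {
    # uname -n prints the node name; ulimit -s / -l print the soft stack and
    # locked-memory limits (in KiB, or "unlimited").
    printf '%s: stack=%s memlock=%s KMP_STACKSIZE=%s\n' \
        "$(uname -n)" "$(ulimit -s)" "$(ulimit -l)" "${KMP_STACKSIZE:-unset}"
}

report_limits
```

Launched through `mpirun` with one process per node (for example with Intel MPI's `-ppn 1` and the same `-machinefile hostfile`), this prints one line per node. A node that reports a small stack value, or `KMP_STACKSIZE=unset`, did not inherit the settings from the launching shell.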

Hi,

Yes, it is a stack size issue. The issue was solved by setting

ulimit -l unlimited

ulimit -s unlimited

export KMP_STACKSIZE=20480000000

on every compute node. I had previously run wrf.exe as root, so the compute nodes did not share the environment settings.

Thank you!

You are welcome!!

Glad that you solved the issue of limited stack size.

Hi,

Glad that your issue has been resolved.

As your primary question has been resolved, we suggest you start a new thread for further queries or issues. Since your new question is about compiling and building an application, we recommend posting it at the link below:

Intel® C++ Compiler - Intel Community

If you have any further queries related to multi-node performance issues, please post a new thread at the link below:

Intel® oneAPI HPC Toolkit - Intel Community.

As your primary issue has been resolved, we will no longer respond to this thread. If you require additional assistance from Intel, please start a new thread. Any further interaction in this thread will be considered community only.

Have a good day!

Thanks & Regards,

Santosh
