- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I'm implementing a parallel multigrid poisson solver on a a dual-Xeon hardware (32 logical cores with Hyperthreading) .

I'm able to very efficiently parallelize the fine resolution levels (i.e. 1024^3 to 256^3) of the multigrid heirarchy with standard OMP worksharing constructs (nearly 100% CPU utilization on all 32 cores). Unfortunately the efficiency of parallelization deteriorates dramatically for the coarser levels, i.e. for 128^3 to 10^3 grid sizes.

The basic structure of the core of the whole algorithm (for example with 100 jacobi smoothing iterations) is as follows:

for(iIter=0;iIter<100;iIter++) {

#pragms omp parallel

{

apply_jacobi_operator_to_grid();

}

}

Realizing that for small grid sizes the overhead of newly invoking the omp parallel section within each iteration is too high (Unfortunately I'm forced to use the quite inefficient gcc/gomp based OMP implementation)

So I tried to move the loop inside the parallel section with an efficient hand-coded spin-based thread barrier to snychronize the iterations:

#pragma omp parallel

{

for(iIter=0;iIter<100;iIter++) {

my_barrier_sync();

apply_jacobi_operator_to_grid();

}

}

While this works prefectly well most of the time there are strange, occasional "temporary stall" problems where many threads seem to be stuck spinning in the barrier sometimes for several seconds (!) Strangely, however, this does not lead to complete deadlock: the "stalling" always resolves itself after a few seconds.

So after two weeks of investigating the root cause of this strange phenomenon without sucess I'd like to ask in this forum for any further Ideas.

Could it be a problem with the windows thread manager (some kind of priority inversion ?) But all worker threads should have the same priority and it is not clear to me at all how it can be that two dozens threads can spin for seconds (i.e. billions of cycles) without apparent progress.

I'm at a loss here and any help is greatly appreciated.

Thanks !

- Tags:

- Parallel Computing

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You likely have a faulty barrier. Try this from my QuickThread threading toolkit:

struct qtBarrier

{

volatile intptr_t c1;

volatile intptr_t c2;

qtBarrier() { c1 = 0; c2 = 0; }

void here(intptr_t iThread, intptr_t nThreads)

{

if(iThread)

{

// indicate this thread reached barrier

XCHGADD((intptr_t*)&c1, 1);

while(c1)

_mm_pause();

// here after master detected all other threads at barrier

// indicate this thread no longer observing c1

XCHGADD((intptr_t*)&c2, 1);

// now wait for team master thread

while(c2)

_mm_pause();

}

else

{

// (iThread==0)

// wait for all other threads to reached barrier

while((c1+1)!=nThreads)

_mm_pause();

// here when all other threads of team at barrier

// release all other threads

c1 = 0;

// wait for all other threads to acknowledge release

// and subsequently, no longer using qtBarrier object

while((c2+1)!=nThreads)

_mm_pause();

// all threads no longer using qtBarrier object

c2 = 0;

}

}

};

// ...

// use in OpenMP

qtBarrier aBarrier; // all threads share this barrier

#pragma omp parallel

{

int nThreads = omp_get_num_threads();

int iThread = omp_get_thread_num();

// *** iter is private

for(int iter=0; iter<100; ++iter) {

aBarrier(iThread, nThreads);

// ...

}

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The (un)common problem you had(have), and is resolved by the above code, is when writing a barrier the mistake is writing the code with the assumption that all the threads are in phase. In particular, the assumption that all threads exit the barrier on phase n prior to any thread entering the barrier on phase n+1. The longer the computation time for the section following the exit of the barrier, the less likely the problem (deadlock) will occur. Conversely, the shorter the computation time for the section following the exit of the barrier, the more likely the problem (deadlock) will occur.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you very much for the quick answer with comprehensive code !

you wrote:

The (un)common problem you had(have), and is resolved by the above code, is when writing a barrier the mistake is writing the code with the assumption that all the threads are in phase. In particular, the assumption that all threads exit the barrier on phase n prior to any thread entering the barrier on phase n+1.

My original barrier code did take this common problem into account via an 'infinitely incremented' sequence number on which the threads wait to 'lapse' before allowed to cross the barrier.

Nevertheless I did try your code but essentially got the same problem as before: very good performance during most test runs but then the same occassional strange 'temporary hangs' for several seconds.

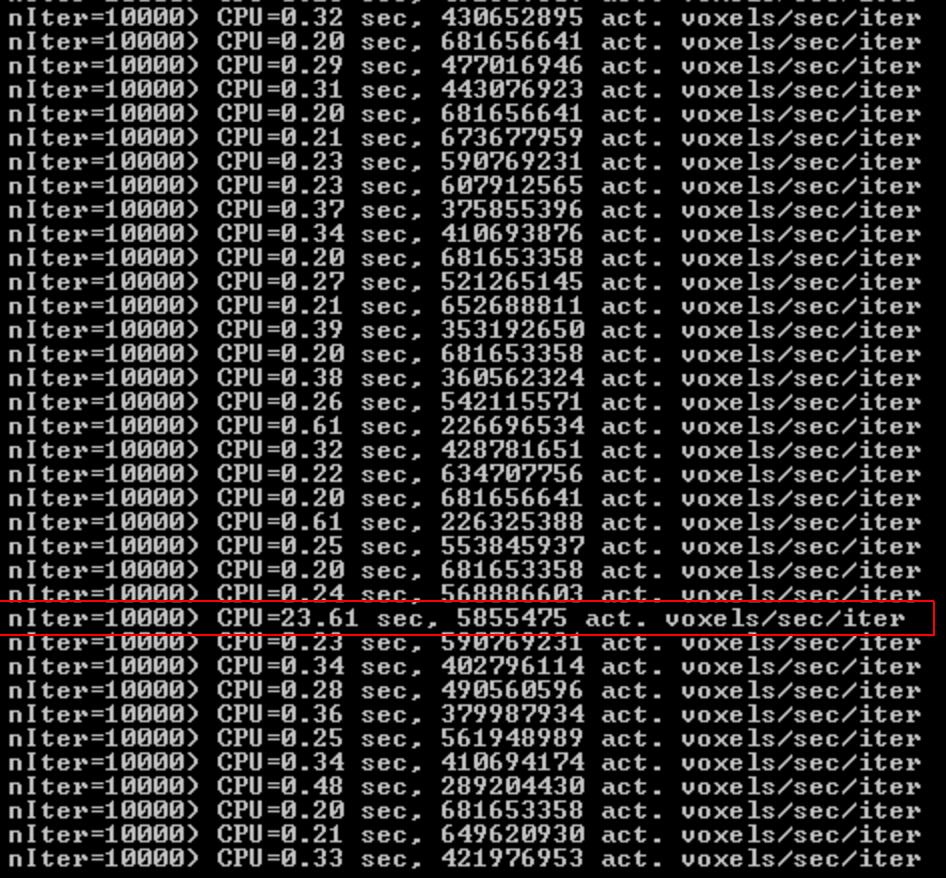

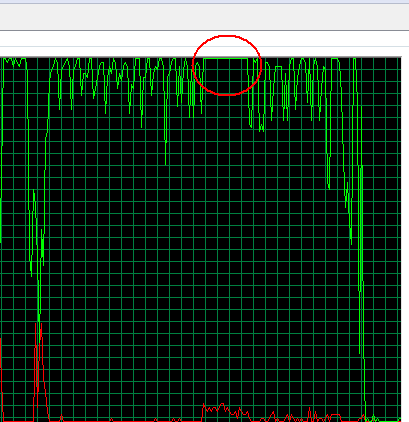

Below is an example of repeated test runs, each with 10000 jacobi iterations (on a 24x24x24 grid) The parallel performance of 400 million grid cells per second per iteration is good considering the small grid size. However: marked in red is the occasional outlier situation where parallel efficiency drops by almost two orders of magnitude to only 5 million per second.

Additional remarks:

- Those are not deadlock situations (and therefore no obvious error in the program synchronization logic!) : eventually there is always progress but I do not understand why the threads are spinning for seconds (i.e. billions of cycles) before making progress

- The problem does not seem directly connected to Hyperthreading (turning HT off via BIOS does not change the symptoms)

- The problem worsens with the number of threads (and background thread activity on the machine) and the problem disappears as soon as there are considerably less worker threads as physical cores (incl. HT). If there are more threads than physical cores, then the problem gets worse up to complete deadlock ..

- I tried various "yield" methods during the spin-wait in the barrier in addition to _mm_pause(), i.e. Sleep(0),Sleep(1),SwitchToThread() etc. but those only partly improved the situation at the price of serious additional CPU/context-switching overhead (up to ten times less throughput than in the 'good cases' of the tightly busy-spinning variant)

- Here is the last version of the complete algorithm (including some details of the inner loop that I omitted for brevity)

struct qtBarrier

{

volatile LONG c1;

volatile LONG c2;

qtBarrier() { c1 = 0; c2 = 0; }

int enter(LONG iThread, LONG nThreads)

{

if(iThread)

{

// indicate this thread reached barrier

InterlockedIncrement((volatile LONG*)&c1);

while(c1) _mm_pause();

InterlockedIncrement((volatile LONG*)&c2);

while(c2) _mm_pause();

return 1;

}

else

{

while((c1+1)!=nThreads) _mm_pause();

return 0;

}

}

void release(int nThreads)

{

c1 = 0;

while((c2+1)!=nThreads) _mm_pause();

c2 = 0;

}

};

qtBarrier aBarrier;

#pragma omp parallel

{

int tid = omp_get_thread_num();

int nThreads = omp_get_num_threads();

for(int iter=0; iter<nJacobiIter; iter++)

{

if(aBarrier.enter(tid,nThreads)==0)

{

std::swap( sourceBuf, destBuf );

iTask = 0;

aBarrier.release(nThreads);

}

// Each thread picks its z-slice to work on

int z;

while( (z = InterlockedIncrement((LONG volatile *)&iTask)-1) < sz)

{

for(int y=0;y<sy;y++)

{

for(int x=0; x<sx; x++)

{

process_grid_cell( sourceBuf, destBuf, x,y,z);

}

}

}

}

}

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The modifications you made, are fine, except the behavior is no longer that of solely a barrier. I suggest you rename the struct/class qtBarrierMaster or something reflective of what it now is used for.

Have you set the OpenMP affinities such that multiple threads are not sharing the same logical processor (and not oversubscribing)?

KMP_AFFINITY=scatter (or compact)

Or any of the other affinity environment variables.

I've had issues with sched_yield, where it will only yield to other threads that were preempted. Any threads that had suspended for I/O would not be a candidate to run as an alternate to sched_yield. Sleep(0) worked.

I suspect that multiple threads are running on the same logical processor.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for commenting again.

Have you set the OpenMP affinities such that multiple threads are not sharing the same logical processor (and not oversubscribing) ?

In the OMP implementation that I'm using (mingw64 gnu omp) setting affinities via environment does not work reliably. Furthermore I prefer my program to deliver reliable and robust performance independent from special environment settings.

In the mean time I have tried half a dozen different barrier implementations and yield/spin strategies but the original problem still persists: See the line marked in red in the screenshot above. I have absolutely no idea why the worker threads suddenly spin for 20 seconds in the barrier without apparent progress: Remember that the workload between barrier-syncs (iteartions) is very small (24^3 grid cells) and should be finished within a few *milli*-seconds.

The only strategy that resolves this "temporary livelock"-kind of situation is to use a non-spinning barrier, (i.e. put waiting threads to sleep on a event) or doing frequent Sleep(0)-yields. But doing so degrades the parallel efficiency by a factor of 10 or so to about the base performance of the naive OMP outer-loop parallelization.

I suspect that multiple threads are running on the same logical processor.

Why should this lead to the observed behavior ? Doesn't the scheduler give the physical core to another thread after 10 milliseconds at the latest ? So how could this lead to such prolonged delays (20 Seconds = 20000 Milliseconds = 2000 Time slices) I imagine that even in the worst case (all but one of the 32 threads spin-waiting in the barrier) the scheduler would make at least one of the 16 physical cores available to the one "latecomer" thread to finish its work (few milliseconds) thus making the barrier ready for the next round.

Or am I missing something fundamentally about windows thread scheduling here ?

Any further advice / insight is highly appreciated

ZX-81

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

test by bugxhexker

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

std::swap( sourceBuf, destBuf ); iTask = 0;

struct Jacobi_context

{

void *pSourceBuf;

void *pDestBuf;

long volatile iTask;

}

astJacobi[2];

// initialize astJacobi

#pragma omp parallel

for (int iJacobi = 0; iJacobi < 100; iJacobi++)

{

Jacobi_context & thisJacobi = astJacobi[(iJacobi & 1)];

Jacobi_context & thatJacobi = astJacobi[(iJacobi & 1) ^ 1];

void *pSourceBuf = thisJacobi.pSourceBuf;

void *pDestBuf = thisJacobi.pDestBuf;

long volatile *piTask = &thisJacobi.iTask;

// inner loops: e.g. for each iTask

thatJacobi.iTask = 0; // update for next iteration

// sync with other threads

}

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

>>In the OMP implementation that I'm using (mingw64 gnu omp) setting affinities via environment does not work reliably. Furthermore I prefer my program to deliver reliable and robust performance independent from special environment settings.

Currently your program is not delivering reliable and robust performance. Consider using sched_setaffinity on Linux (or equivalent on Windows).

The fact that the code progresses due to explicit sleep wait is indicative of the spin wait having thread scheduling issues. These issues can come about when you have multiple software threads using (scheduled on) the same hardware thread. You cannot assume that the author of the scheduler provided a completely "fair" scheduler. Often the scheduler is "unfair" amongst threads of different classes. Note, although your code does not visibly show "different classes" amongst the threads, the class of a specific user thread may vary should the hardware thread running that user thread get interrupted to service an interrupt. Conceptually the class my shift from "available for fast context switch" to "ready to run, but will require manipulation of page table entries". I've seen cases where a sched_yield() would experience the same issue as your are observing. The yield will yield to other thread in the "available for fast context switch" class but not to "ready to run, but will require manipulation of page table entries". *** the author of the scheduler will not be using the "..." nomenclature, but will likely use something else.

I suggest that instead of making assumptions about what you thing the scheduler should be doing, that you implement affinity pinning (your choice of method), such that no two OpenMP threads share the same logical processor. Then see if the code enters or avoids the "hung" state.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It's reasonable to assume when you post here and don't specify your platform that you are running a full implementation of OpenMP. As libgomp doesn't claim full support for Windows and doesn't implement GOMP_CPU_AFFINITY, methods for improving the situation in that framework are somewhat off topic here. You ask for suggestions and then later say that you rule out the most important onesm admitting that you don't intend to run a full OpenMP as you implied originally.

I don't think much can be done with libgomp on Windows, beyond experimenting with OMP_NUM_THREADS settings between the number of cores and the number of logical processors (if you can't disable hyperthreads), and running Windows 7 SP1 or a newer Windows. Older Windows schedulers are notoriously poor with hyperthreads. Still, libgomp seems unlikely to produce satisfactory performance on a multiple CPU box or beyond 8 logical processors.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

jimdempseyatthecove wrote:

Often the scheduler is "unfair" amongst threads of different classes. Note, although your code does not visibly show "different classes" amongst the threads, the class of a specific user thread may vary should the hardware thread running that user thread get interrupted to service an interrupt. Conceptually the class my shift from "available for fast context switch" to "ready to run, but will require manipulation of page table entries". I've seen cases where a sched_yield() would experience the same issue as your are observing.

I suggest that instead of making assumptions about what you thing the scheduler should be doing, that you implement affinity pinning (your choice of method), such that no two OpenMP threads share the same logical processor. Then see if the code enters or avoids the "hung" state.

Thanks again, Jim for the detailed and comprehensive answer (highly appreciated). Fixing thread affinity is definitely the next thing I'm trying to implement. Actually I did suspect thread scheduling issues such as you describe (in combination with a poor libgomp implementation) from the start, however I was unaware that this could manifest itself in such dramatic symptoms (20 Seconds delay on 32 logical cores). So, before digging deeper into thread scheduling etc. I wanted to make sure I did not miss something more trivial, hoping to keep the basic OMP approach of conveniently spawning a bunch of threads (equal to the number of cores) via "#pragma omp parallel" and still achive decent performance when combining the simple OMP construct with a more sophisticated software/spin barrier for the inner loop.

Tim P. wrote:

Still, libgomp seems unlikely to produce satisfactory performance on a multiple CPU box or beyond 8 logical processors.

Thanks for clarifying this, which is a very valuable information ! This means that implementing my own "thread creation layer" directly on top of Windows CreateThread API (or using Jim's excellent quickthread framework :-) as a complete replacement for OMP in performance critical areas of my code seems like a sensible investement (unfortunately using intel icc-based OMP is not an option at this time)

Again, thank you very much for all the insightful help !

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

>>and still achive decent performance when combining the simple OMP construct with a more sophisticated software/spin barrier for the inner loop.

If you do not control thread affinity, you cannot be assured that the O/S will schedule the threads in a favorable way. The typical system has many more threads that those of your application. When your threads are not pinned, and your application starts, the O/S will generally place each of your threads on a different logical processor (hardware thread), assuming you are not oversubscribing. The O/S is also free to move your threads about. The applications thread to logical processor assignment may change when your application gets preempted to run some other process or service an interrupt. When the application uses less than the number of available hardware threads, thread repositioning _may_ be beneficial. Thread movement is not generally an issue *** unless *** multiple threads are competing for a common resource. In this situation, the O/S (many O/Ss) scheduler may juxtapose the thread-to-logical processor in an adverse way.

>>This means that implementing my own "thread creation layer" directly on top of Windows CreateThread API

*** Don't do that. Instead, near the top of your program:

#pragma omp parallel

{

your_set_logical_processor(omp_get_thread_num(), omp_get_num_threads());

}

Then use the Windows API that manipulates the thread affinities.

*** do not assume the available logical processors to your process are always 0, 1, 2, ..., n-1 ***

Get the process affinity bit mask, then assign your omp_thread_num's logical processor according to the bit (or bits) in the process affinity mask. IOW if your application is using only half of the logical processors, then you may want to affinitize each thread to two logical processors (preferably those in the same core).

*** If your system has (will have) more that 64 logical processors, then on Windows there is additional work you need to do to affinitize across multiple groups of up to 64 logical processors. This information is available on msdn.microsoft.com.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In order to fixing thread affinities as suggested, I did some testing with code like this

#pragma omp parallel

{

int iCore = omp_get_thread_num();

SetThreadAffinityMask(GetCurrentThread(),((1<<iCore));

...

}

However this still leads to the same occasional 'hanging' situations as before. All threads spinning in the barrier without progress for dozens of seconds before proceeding eventually. There is never a real deadlock or crash or error in the calculations so I'm quite confident that there is not a 'bug' in the algorithm of the barrier.

The problem does not occur if the "omp parallel" clause is moved inside the loop i.e.

for(iter=0;iter<numIter;iter++)

{

#pragma omp parallel

loop_over_grid_and_perform_jacobi_operator

}

But this results in a very inefficient parallelization (10 times less throughput compared to when the iteration loop is kept inside the parallel section together with the fast barrier)

EDIT: Additional observations: the problem does not occur when using significantly fewer threads than cores and it does also not occur when running the program on a standard PC, single-CPU core i7 with 8 logical and 4 physical cores.

Any ideas how to proceed from here ? I really would like to utilize more than 10% parallelization efficiency out of a machine with 16 physical cores.

Thanks & Regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

// once only

#pragma omp parallel

{

int iCore = omp_get_thread_num();

SetThreadAffinityMask(GetCurrentThread(),((1<<iCore));

}

...

loop:

...

#pragma omp parallel

{

... // compute using prior set affinities

}

end loop

Is your system running with virtualization enabled?

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

>Is your system running with virtualization enabled?

yes, virtualization is enabled.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is not a formal solution, rather it is an experiment as to ascertain what is causing the intermittent, but not permanent, lockup:

struct qtBarrier

{

volatile LONG c1;

volatile LONG c2;

qtBarrier() { c1 = 0; c2 = 0; }

int enter(LONG iThread, LONG nThreads)

{

if(iThread)

{

// indicate this thread reached barrier

InterlockedIncrement((volatile LONG*)&c1);

while(c1)

{

_mm_pause();

InterlockedExchangeAdd((volatile LONG*)&c1, 0L);

}

InterlockedIncrement((volatile LONG*)&c2);

while(c2)

{

_mm_pause();

InterlockedExchangeAdd((volatile LONG*)&c2, 0L);

}

return 1;

}

else

{

while((c1+1)!=nThreads)

{

_mm_pause();

InterlockedExchangeAdd((volatile LONG*)&c1, 0L);

}

return 0;

}

}

void release(int nThreads)

{

c1 = 0;

while((c2+1)!=nThreads)

{

_mm_pause();

InterlockedExchangeAdd((volatile LONG*)&c2, 0L);

}

c2 = 0;

}

};

If the InterlockedExchangeAdd eliminates the lockup, then experiment with CLFLUSH.

Both hacks should not be required as cache coherency should be maintained. Note, both workarounds (should they work), would then not be necessary on a single socket system (though testing would be required).

Note, we are assuming your set thread affinity is correctly working.

Can you show your code for setting the affinity?

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Assuming you are running on Windows (use if Interlocked... seems to imply this), have you used GetProcessAffinityMask? If so, what does it return?

If the function return value == false, then your process does not have rights to observe or set affinity.

If the function return value == true, but returns ProcessAffinityMask or/and SystemAffinityMask with half the logical processors (16 of 32), then then the O/S has configured the system with multiple processor groups. This normally will not happen when the system has no more than 64 logical processors. However, with Virtualization enabled, there may be configuration options that alters this behavior (and will be observable with GetProcessAffinityMask.

Also,

int iCore = omp_get_thread_num(); SetThreadAffinityMask(GetCurrentThread(),((1<<iCore));

assumes only one processor group .AND. the process affinity mask has all logical processors represented and packed in the bit mask from bit 0 upwards (no gap). A robust system would make tests and verifications, as well as being adaptable to multiple processor groups.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

GetProcessAffinityMask() returns successfully all 64 bits = 1 masks.

jimdempseyatthecove wrote:

A robust system would make tests and verifications, as well as being adaptable to multiple processor groups.

Yes of course. The code examples I posted are only intended as rough sketches in order to "debug" the still unknown root cause of the "thread starvation" problem when parallelizing a tight loop over many cores.

From the experiments with Get/SetAffinityMask() so far it does not look like affinity pinning could solve the problem.

Any Idea on how to further debug/analyze this problem ?

Anyway, as a next step I will try to build a minimum working 'stand-alone' code example reproducing the problem.

Thanks & Regards,

Bernhard

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

>>dual-Xeon hardware (32 logical cores with Hyperthreading) .

From the description above, you have 16 physical cores x2 with HT for 32 logical cores. GetProcessAffinityMask should have returned 32 bits set, and 32 bits cleared in the 64-bit DWORD_PTR. (assuming x64 programming).

int _tmain(int argc, _TCHAR* argv[])

{

DWORD_PTR ProcessAffinityMask = 0;

DWORD_PTR SystemAffinityMask = 0;

BOOL ret = GetProcessAffinityMask(GetCurrentProcess(), &ProcessAffinityMask, &SystemAffinityMask);

std::cout << ret << " " << ProcessAffinityMask << " " << SystemAffinityMask << std::endl;

DWORD_PTR NewThreadAffinityMask = ProcessAffinityMask >> 1; // reserve 1 logical processor

DWORD_PTR PriorThreadAffinityMask = SetThreadAffinityMask(GetCurrentThread(), NewThreadAffinityMask);

std::cout << PriorThreadAffinityMask << " " << NewThreadAffinityMask << " " << GetLastError() << std::endl;

NewThreadAffinityMask = ProcessAffinityMask << 1; // invalid affinity mask

PriorThreadAffinityMask = SetThreadAffinityMask(GetCurrentThread(), NewThreadAffinityMask);

std::cout << PriorThreadAffinityMask << " " << NewThreadAffinityMask << " " << GetLastError() << std::endl;

return 0;

=============================

1 255 255

255 127 0

127 510 0

Above run on 4 core with HT (8 logical processors).

*** Note, due to an invalid affinity mask, the last SetThreadAffinityMask should have returned a 0, and a non-zero GetLastError, however it did not. As to what the scheduler will do with a thread with invalid affinity mask is undocumented.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

BTW, it is the programmers responsibility to manipulate thread affinities within the process affinity mask. And the process affinity mask is not assured to be 0-based, nor be a contiguous list of bits in the bit mask. Do not assume that every time you've seen it presented this way (0-based, and contiguous) that this assures that this will always be the case.

Jim Dempsey

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page