Hello,

I have not been able to build the intel-tensorflow 2.5 wheel for Windows. The build-from-source instructions check out the r2.3-windows branch, and no equivalent branch exists for 2.5.

Is Intel planning to publish the 2.5 wheels anytime soon?

Thanks

Hi,

Thank you for reaching out. We are checking this with the internal team. We will get back to you as soon as we get an update.

Regards

Gopika

Hi,

TensorFlow* is a widely used machine learning framework for deep learning, and it demands efficient use of computational resources. To take full advantage of Intel® architecture and extract maximum performance, TensorFlow has been optimized with oneAPI Deep Neural Network Library (oneDNN) primitives, a popular performance library for deep learning applications. We were able to install the prebuilt stock TensorFlow 2.5 with Intel oneDNN enabled. Please follow the steps below to install stock TensorFlow 2.5:

1. Download the stock TensorFlow wheel for Windows from this link: https://pypi.org/project/tensorflow/#files

To install the wheel file:

pip install __.whl

2. Enable the oneDNN optimizations. The sanity check and the verbose log (steps 3 and 4) confirm that TensorFlow is installed correctly with the oneDNN optimizations enabled and running. By default, these oneDNN optimizations are turned off. To enable them, set the environment variable TF_ENABLE_ONEDNN_OPTS:

set TF_ENABLE_ONEDNN_OPTS=1

3. Run the sanity check: once stock TensorFlow is installed, running sanity.py should print "True" if the oneDNN optimizations are present.

<sanity.py attached>

4. Check that the verbose log is displayed while running any simple Keras workload. The verbose log confirms that the oneDNN optimizations are used. It is often useful to know how much of an application's runtime is spent executing oneDNN primitives and which of those take the most time. oneDNN verbose mode traces the execution of oneDNN primitives and collects basic statistics such as execution time and primitive parameters. When verbose mode is enabled, oneDNN prints this information to the console.

set DNNL_VERBOSE=2

set TF_ENABLE_ONEDNN_OPTS=1

python some_sample.py (the verbose log will be displayed)
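The commands above can also be driven from Python, which makes it easier to keep the verbose log in a file for sharing. The following is only a minimal sketch: the helper names `onednn_env` and `run_with_onednn` and the log file name `onednn_verbose.log` are hypothetical, and the only settings taken from this thread are TF_ENABLE_ONEDNN_OPTS=1 and DNNL_VERBOSE=2.

```python
import os
import subprocess
import sys


def onednn_env(base_env=None, verbose_level=2):
    """Return a copy of the environment with the two variables from the steps above set."""
    env = dict(os.environ if base_env is None else base_env)
    env["TF_ENABLE_ONEDNN_OPTS"] = "1"        # enable the oneDNN optimizations
    env["DNNL_VERBOSE"] = str(verbose_level)  # oneDNN verbose level
    return env


def run_with_onednn(script_path, log_path="onednn_verbose.log"):
    """Run a workload script in a child process and write its console output to log_path.

    The variables are set on the child process only, so they are in place
    before TensorFlow is imported by the workload. Returns the exit code.
    """
    with open(log_path, "w") as log_file:
        result = subprocess.run(
            [sys.executable, script_path],
            env=onednn_env(),
            stdout=log_file,
            stderr=subprocess.STDOUT,
        )
    return result.returncode
```

For example, `run_with_onednn("some_sample.py")` would run the workload and leave the console output, including any dnnl_verbose lines, in the log file.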

Also, could you please share with us the following details?

1. The windows version you are using

2. The reason for opting to build from source

For more information on oneDNN verbose mode, please refer to: https://oneapi-src.github.io/oneDNN/dev_guide_verbose.html

For more information on Intel Optimization for TensorFlow, please refer to: https://software.intel.com/content/www/us/en/develop/articles/intel-optimization-for-tensorflow-installation-guide.html

Hi.

I have already tested that, and TF_ENABLE_ONEDNN_OPTS did not improve performance. Enabling it had no impact on inference latency.

I am on Windows 10, on a Xeon W-10885M.

I wanted to build from source to get an AVX512-optimized TensorFlow build. It looks like the PyPI wheels for Windows only support AVX and AVX2.

Thanks,

Hi,

We have not heard from you in a while. Did the solution provided help? Is your query resolved? Can we discontinue monitoring this thread?

Regards

Gopika

Hi,

Thank you for the update. Could you please try freezing the model and then running it with TF_ENABLE_ONEDNN_OPTS set to 1? Also, if possible, could you please share the verbose log with us?

For more information on freezing, please refer to: https://software.intel.com/content/www/us/en/develop/articles/optimize-tensorflow-pre-trained-model-inference.html .

For more information on the verbose log, please refer to the last reply: https://community.intel.com/t5/Intel-Optimized-AI-Frameworks/Intel-Tensorflow-Windows-Pypi-wheel/m-p/1293932#M249 .
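As an illustration of what freezing can look like for a TensorFlow 2.x SavedModel, here is a minimal sketch. It is one common approach and not necessarily the procedure described in the linked article. The function name, the default output file name `frozen_graph.pb`, and the `serving_default` signature key are assumptions, and `convert_variables_to_constants_v2` is imported from a TensorFlow-internal module path that may change between releases.

```python
import os


def freeze_saved_model(saved_model_dir, output_dir,
                       output_name="frozen_graph.pb",
                       signature_key="serving_default"):
    """Freeze one signature of a SavedModel into a constant-only GraphDef file."""
    # Imported here so the environment variables from the earlier steps can be
    # set before TensorFlow is loaded.
    import tensorflow as tf
    from tensorflow.python.framework.convert_to_constants import (
        convert_variables_to_constants_v2,
    )

    # Keep a reference to the loaded model while converting, so its variables
    # are not released before they are folded into constants.
    model = tf.saved_model.load(saved_model_dir)
    concrete_func = model.signatures[signature_key]

    # Replace variable reads with constants holding the current weights.
    frozen_func = convert_variables_to_constants_v2(concrete_func)

    # Write the frozen graph as a binary protobuf.
    tf.io.write_graph(frozen_func.graph.as_graph_def(), output_dir,
                      output_name, as_text=False)
    return os.path.join(output_dir, output_name)
```

Calling `freeze_saved_model("path/to/saved_model", "frozen")` (both paths are placeholders) would write the frozen graph under the `frozen` directory.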

Regards

Gopika

Hi,

For a BERT Base model at a fixed sequence length and batch size, I get a 25% reduction in latency, which is not bad at all.

Can you explain why TF_ENABLE_ONEDNN_OPTS works only with a frozen graph and not with a standard saved_model?
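For readers who want to reproduce this kind of comparison, a latency reduction can be measured along the following lines. This is only a sketch under assumptions: `median_latency_ms` and `percent_reduction` are hypothetical helpers, the warm-up and run counts are arbitrary, and the callable passed in stands for one inference call on the model under test.

```python
import statistics
import time


def median_latency_ms(fn, warmup=3, runs=20):
    """Call fn a few times to warm up, then return the median wall-clock latency in ms."""
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def percent_reduction(baseline_ms, optimized_ms):
    """Relative latency reduction of the optimized run versus the baseline, in percent."""
    return (baseline_ms - optimized_ms) / baseline_ms * 100.0
```

With a baseline of 100 ms and an optimized run of 75 ms, `percent_reduction(100.0, 75.0)` gives 25.0. Keeping the sequence length and batch size fixed for both runs, as in the post above, keeps the comparison fair.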

Thanks,

Remi

Hi,

Thank you for pointing it out. It is a bug. We are looking into the issue. We will get back to you once it gets resolved. Eventually, even with non-frozen models, TF_ENABLE_ONEDNN_OPTS=1 will result in better performance.

Regards

Gopika

Hi,

To analyze the issue on our end, could you please share the oneDNN verbose logs that you get with the frozen and non-frozen models? The steps to display the verbose log are described in the previous reply: https://community.intel.com/t5/Intel-Optimized-AI-Frameworks/Intel-Tensorflow-Windows-Pypi-wheel/m-p/1293932#M249

Thank you in advance

Regards

Gopika

Very well.

This is the log with DNNL_VERBOSE set to 2 for maximum verbosity and TF_ENABLE_ONEDNN_OPTS enabled on a FROZEN model:

dnnl_verbose,info,oneDNN v2.2.0 (commit N/A)

dnnl_verbose,info,cpu,runtime:threadpool

dnnl_verbose,info,cpu,isa:Intel AVX2

dnnl_verbose,info,gpu,runtime:none

dnnl_verbose,info,prim_template:operation,engine,primitive,implementation,prop_kind,memory_descriptors,attributes,auxiliary,problem_desc,exec_time

dnnl_verbose,create:cache_miss,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ab:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,post_ops:'eltwise_tanh;';,,mb1ic768oc768,0.0472

dnnl_verbose,create:cache_miss,cpu,reorder,jit:uni,undef,src_f32::blocked:ba:f0 dst_f32::blocked:ab:f0,,,768x768,0.0739

dnnl_verbose,exec,cpu,reorder,jit:uni,undef,src_f32::blocked:ba:f0 dst_f32::blocked:ab:f0,,,768x768,0.4752

dnnl_verbose,exec,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ab:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,post_ops:'eltwise_tanh;';,,mb1ic768oc768,0.0853

dnnl_verbose,create:cache_miss,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ab:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,,,mb1ic768oc2,0.00670004

dnnl_verbose,create:cache_miss,cpu,reorder,jit:uni,undef,src_f32::blocked:ba:f0 dst_f32::blocked:ab:f0,,,2x768,0.063

dnnl_verbose,exec,cpu,reorder,jit:uni,undef,src_f32::blocked:ba:f0 dst_f32::blocked:ab:f0,,,2x768,0.015

dnnl_verbose,exec,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ab:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,,,mb1ic768oc2,0.0165

These are the oneDNN logs for an inference with a SAVED_MODEL and TF_ENABLE_ONEDNN_OPTS enabled:

dnnl_verbose,info,oneDNN v2.2.0 (commit N/A)

dnnl_verbose,info,cpu,runtime:threadpool

dnnl_verbose,info,cpu,isa:Intel AVX2

dnnl_verbose,info,gpu,runtime:none

dnnl_verbose,info,prim_template:operation,engine,primitive,implementation,prop_kind,memory_descriptors,attributes,auxiliary,problem_desc,exec_time

dnnl_verbose,create:cache_miss,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ba:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,post_ops:'eltwise_tanh;';,,mb1ic768oc768,0.0577999

dnnl_verbose,exec,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ba:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,post_ops:'eltwise_tanh;';,,mb1ic768oc768,0.1392

dnnl_verbose,create:cache_miss,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ba:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,,,mb1ic768oc2,0.00670004

dnnl_verbose,exec,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ba:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,,,mb1ic768oc2,0.0151

Hi,

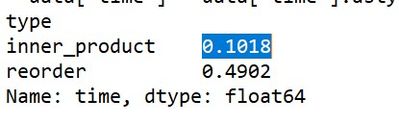

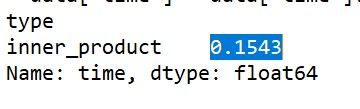

Thank you for sharing the logs. A possible explanation for the performance improvement with frozen models as compared to non-frozen models is the fact that during freezing the inner product is reduced by 50% and an additional reordering. We deduced these observations after parsing through the verbose logs that you provided.

With freezing Without freezing

The verbose logs can be parsed using: https://github.com/oneapi-src/oneAPI-samples/tree/master/Libraries/oneDNN/tutorials/profiling
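For a quick look without the tutorial tooling, the `exec` records can also be summed per primitive with a few lines of standard-library Python. This is a rough sketch, not the parser from the link above: the function name is hypothetical, it assumes fields are separated by plain commas as in the prim_template header shown in the logs, and it reads the last field as the execution time. The sample lines are the `exec` records posted earlier in this thread.

```python
from collections import defaultdict


def summarize_dnnl_verbose(lines):
    """Sum the exec_time field per primitive over the 'exec' records of a verbose log."""
    totals = defaultdict(float)
    for line in lines:
        fields = line.strip().split(",")
        # Layout per the prim_template header:
        # dnnl_verbose,operation,engine,primitive,...,exec_time
        if len(fields) < 5 or fields[0] != "dnnl_verbose" or fields[1] != "exec":
            continue  # skip info lines and primitive-creation records
        try:
            totals[fields[3]] += float(fields[-1])
        except ValueError:
            continue  # last field is not a number; ignore the line
    return dict(totals)


# 'exec' records copied from the two logs posted in this thread.
FROZEN_LOG = [
    "dnnl_verbose,exec,cpu,reorder,jit:uni,undef,src_f32::blocked:ba:f0 dst_f32::blocked:ab:f0,,,768x768,0.4752",
    "dnnl_verbose,exec,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ab:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,post_ops:'eltwise_tanh;';,,mb1ic768oc768,0.0853",
    "dnnl_verbose,exec,cpu,reorder,jit:uni,undef,src_f32::blocked:ba:f0 dst_f32::blocked:ab:f0,,,2x768,0.015",
    "dnnl_verbose,exec,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ab:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,,,mb1ic768oc2,0.0165",
]
SAVED_MODEL_LOG = [
    "dnnl_verbose,exec,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ba:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,post_ops:'eltwise_tanh;';,,mb1ic768oc768,0.1392",
    "dnnl_verbose,exec,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ba:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,,,mb1ic768oc2,0.0151",
]

if __name__ == "__main__":
    for name, log in (("frozen", FROZEN_LOG), ("saved_model", SAVED_MODEL_LOG)):
        print(name, summarize_dnnl_verbose(log))
```

The excerpts in this thread cover only a handful of primitives, so totals computed from them describe those lines only and not the whole model.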

To learn more about inner product, please refer to: https://oneapi-src.github.io/oneDNN/dev_guide_inner_product.html

To learn more about reorder, please refer to: https://oneapi-src.github.io/oneDNN/dev_guide_reorder.html

Hope this helps

Regards

Gopika

Hi,

Has the solution provided helped? Is your query resolved? Can we discontinue monitoring this thread?

Regards

Gopika

Hi,

Sorry for the delayed response. The issue (no performance improvement even when TF_ENABLE_ONEDNN_OPTS is set to 1) is fixed in TensorFlow 2.7, which was released earlier this month. To get TensorFlow 2.7, please refer to the TensorFlow pip page.

Regards

Gopika

Hi,

Thank you for accepting our response as the solution. If you need any additional information, please post a new question, as this thread will no longer be monitored by Intel.

Regards

Gopika
