Hi,

I downloaded ssd_mobilenet_v2_coco from the listed URL and trained it on a custom dataset.

To run training in Docker, I used the following command:

```shell
# run inside the Docker container from /tensorflow/models/research
python object_detection/model_main.py \
    --pipeline_config_path=learn_vehicle/ckpt/pipeline.config \
    --model_dir=learn_vehicle/train \
    --num_train_steps=500 \
    --num_eval_steps=100
```

After training, I tried to generate IR files on the virtual machine where OpenVINO is installed. I copied the non-frozen MetaGraph files (from the learn_vehicle/train folder) to the VM and ran the following command (~/vehicle_model is the folder on the VM):

```shell
python3 mo_tf.py \
    --input_meta_graph ~/vehicle_model/model.ckpt-500.meta \
    --tensorflow_use_custom_operations_config extensions/front/tf/ssd_v2_support.json \
    --data_type FP16
```

After running the command above, I got the following error:

```text
Model Optimizer arguments:
Common parameters:
    - Path to the Input Model: None
    - Path for generated IR: /home/niko/intel/computer_vision_sdk_2018.5.455/deployment_tools/model_optimizer/.
    - IR output name: model.ckpt-500
    - Log level: ERROR
    - Batch: Not specified, inherited from the model
    - Input layers: Not specified, inherited from the model
    - Output layers: Not specified, inherited from the model
    - Input shapes: Not specified, inherited from the model
    - Mean values: Not specified
    - Scale values: Not specified
    - Scale factor: Not specified
    - Precision of IR: FP16
    - Enable fusing: True
    - Enable grouped convolutions fusing: True
    - Move mean values to preprocess section: False
    - Reverse input channels: False
TensorFlow specific parameters:
    - Input model in text protobuf format: False
    - Offload unsupported operations: False
    - Path to model dump for TensorBoard: None
    - List of shared libraries with TensorFlow custom layers implementation: None
    - Update the configuration file with input/output node names: None
    - Use configuration file used to generate the model with Object Detection API: None
    - Operations to offload: None
    - Patterns to offload: None
    - Use the config file: /home/niko/intel/computer_vision_sdk_2018.5.455/deployment_tools/model_optimizer/extensions/front/tf/ssd_v2_support.json
Model Optimizer version: 1.5.12.49d067a0
[ ERROR ] Graph contains 0 node after executing add_output_ops and add_input_ops. It may happen due to absence of 'Placeholder' layer in the model. It considered as error because resulting IR will be empty which is not usual
```

I would appreciate it if anyone could help me solve this issue.

Thank you,

Niko
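The error above says Model Optimizer found no 'Placeholder' node in the graph. One way to check this independently of Model Optimizer is to list the Placeholder ops stored in the .meta file itself. A minimal sketch, assuming TensorFlow 1.x is installed wherever it runs; the helper names `find_placeholders` and `meta_graph_nodes` and the script name `check_placeholders.py` are hypothetical.

```python
import sys
from typing import Iterable, List, Tuple


def find_placeholders(nodes: Iterable[Tuple[str, str]]) -> List[str]:
    """Return the names of nodes whose op type is 'Placeholder'.

    `nodes` is any iterable of (node_name, op_type) pairs.
    """
    return [name for name, op_type in nodes if op_type == "Placeholder"]


def meta_graph_nodes(meta_path: str) -> List[Tuple[str, str]]:
    """Read (name, op) pairs from the top-level graph of a TF 1.x .meta file.

    TensorFlow is imported lazily so find_placeholders() can be used
    (and tested) without it.
    """
    from tensorflow.core.protobuf import meta_graph_pb2

    meta = meta_graph_pb2.MetaGraphDef()
    with open(meta_path, "rb") as f:
        meta.ParseFromString(f.read())
    return [(node.name, node.op) for node in meta.graph_def.node]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Example: python3 check_placeholders.py ~/vehicle_model/model.ckpt-500.meta
    nodes = meta_graph_nodes(sys.argv[1])
    print(f"{len(nodes)} nodes, Placeholder ops: {find_placeholders(nodes)}")
```

An empty Placeholder list would be consistent with the Model Optimizer error; a non-empty one would point to a different cause.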

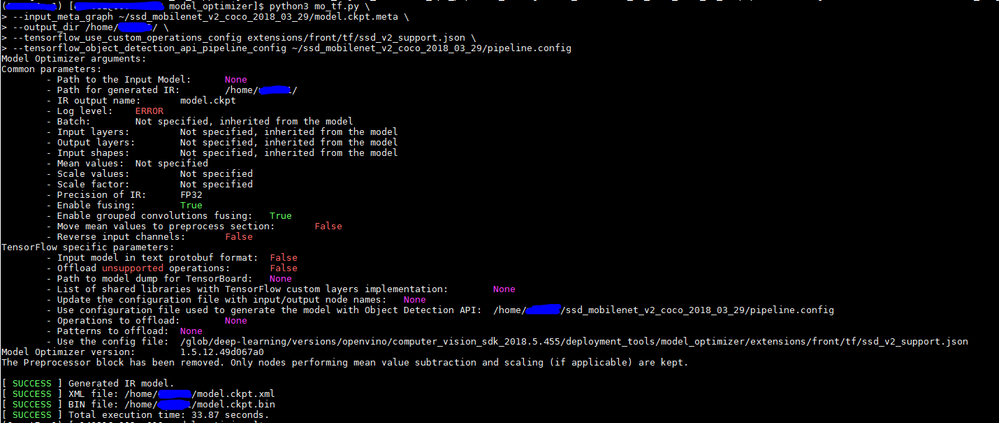

We assume you have the pipeline.config file for the trained model.

If so, please try the following command:

```shell
python3 mo_tf.py \
    --input_meta_graph ~/vehicle_model/model.ckpt-500.meta \
    --output_dir /home/uXXXX/ \
    --tensorflow_use_custom_operations_config extensions/front/tf/ssd_v2_support.json \
    --tensorflow_object_detection_api_pipeline_config ~/<path_to>/pipeline.config
```

If that does not work, try also passing the input shape as an argument: `--input_shape [1,X,X,3]`, where X is the input image size (height and width) and 3 is the number of channels.
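The X in `--input_shape [1,X,X,3]` should match the image size the detector was trained at. For the stock ssd_mobilenet_v2_coco config this is set by a `fixed_shape_resizer` block in pipeline.config (300x300). A minimal sketch that reads the value back from the config, assuming the model uses `fixed_shape_resizer` with `height` listed before `width` and 3-channel images; `resizer_input_shape` and the script name `input_shape.py` are hypothetical.

```python
import re
import sys
from pathlib import Path


def resizer_input_shape(config_text: str) -> str:
    """Return an --input_shape value such as '[1,300,300,3]' from pipeline.config text.

    Assumes a fixed_shape_resizer block with height before width; other
    resizers (e.g. keep_aspect_ratio_resizer) are rejected.
    """
    match = re.search(
        r"fixed_shape_resizer\s*\{\s*height:\s*(\d+)\s*width:\s*(\d+)",
        config_text,
    )
    if match is None:
        raise ValueError("no fixed_shape_resizer with height/width found")
    height, width = match.groups()
    # NHWC layout: batch 1, height, width, 3 channels
    return f"[1,{height},{width},3]"


if __name__ == "__main__" and len(sys.argv) > 1:
    # Example: python3 input_shape.py ~/vehicle_model/checkpoint/pipeline.config
    text = Path(sys.argv[1]).expanduser().read_text()
    print("--input_shape", resizer_input_shape(text))
```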

Please see the attached screenshot (converting the meta file to IR), which worked for us.

Please let me know if you still face this issue, and kindly share the trained model and configuration file for further troubleshooting.
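The command suggested in this reply can also be assembled from a Python script, which makes it easier to rerun the conversion with different options. A minimal sketch, assuming Python 3.8+ (for `shlex.join`) and that it runs from the Model Optimizer directory (`.../deployment_tools/model_optimizer` in the logs), since `mo_tf.py` and the `ssd_v2_support.json` path are relative. The function name `build_mo_tf_args` is hypothetical; the flags are the ones used in this thread.

```python
import shlex
from pathlib import Path
from typing import List, Optional


def build_mo_tf_args(
    meta_graph: str,
    pipeline_config: str,
    output_dir: str,
    ops_config: str = "extensions/front/tf/ssd_v2_support.json",
    data_type: Optional[str] = None,
    input_shape: Optional[str] = None,
) -> List[str]:
    """Assemble the mo_tf.py argument list used in this thread.

    Paths starting with '~' are expanded here because no shell is involved
    when the list is passed to subprocess.run().
    """
    args = [
        "python3", "mo_tf.py",
        "--input_meta_graph", str(Path(meta_graph).expanduser()),
        "--output_dir", str(Path(output_dir).expanduser()),
        "--tensorflow_use_custom_operations_config", ops_config,
        "--tensorflow_object_detection_api_pipeline_config",
        str(Path(pipeline_config).expanduser()),
    ]
    if data_type is not None:
        args += ["--data_type", data_type]
    if input_shape is not None:
        # e.g. "[1,300,300,3]": batch, height, width, channels
        args += ["--input_shape", input_shape]
    return args


if __name__ == "__main__":
    cmd = build_mo_tf_args(
        "~/vehicle_model/checkpoint/model.ckpt-500.meta",
        "~/vehicle_model/checkpoint/pipeline.config",
        "~/vehicle_model/checkpoint/output",
        data_type="FP16",
    )
    # Print a copy-pasteable command line
    print(shlex.join(cmd))
```

To run the conversion instead of printing it, pass the list to `subprocess.run(cmd, check=True)`, which raises `CalledProcessError` if the process exits with a non-zero status.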

Thank you for the quick response! I modified the command as follows, but unfortunately got an error:

```shell
python3 mo_tf.py \
    --input_meta_graph ~/vehicle_model/checkpoint/model.ckpt-500.meta \
    --output_dir ~/vehicle_model/checkpoint/output \
    --tensorflow_use_custom_operations_config extensions/front/tf/ssd_v2_support.json \
    --tensorflow_object_detection_api_pipeline_config ~/vehicle_model/checkpoint/pipeline.config \
    --data_type FP16
```

```text
Model Optimizer arguments:
Common parameters:
    - Path to the Input Model: None
    - Path for generated IR: /home/niko/vehicle_model/checkpoint/output
    - IR output name: model.ckpt-500
    - Log level: ERROR
    - Batch: Not specified, inherited from the model
    - Input layers: Not specified, inherited from the model
    - Output layers: Not specified, inherited from the model
    - Input shapes: Not specified, inherited from the model
    - Mean values: Not specified
    - Scale values: Not specified
    - Scale factor: Not specified
    - Precision of IR: FP16
    - Enable fusing: True
    - Enable grouped convolutions fusing: True
    - Move mean values to preprocess section: False
    - Reverse input channels: False
TensorFlow specific parameters:
    - Input model in text protobuf format: False
    - Offload unsupported operations: False
    - Path to model dump for TensorBoard: None
    - List of shared libraries with TensorFlow custom layers implementation: None
    - Update the configuration file with input/output node names: None
    - Use configuration file used to generate the model with Object Detection API: /home/niko/vehicle_model/checkpoint/pipeline.config
    - Operations to offload: None
    - Patterns to offload: None
    - Use the config file: /home/niko/intel/computer_vision_sdk_2018.5.455/deployment_tools/model_optimizer/extensions/front/tf/ssd_v2_support.json
Model Optimizer version: 1.5.12.49d067a0
[ ERROR ] Graph contains 0 node after executing add_output_ops and add_input_ops. It may happen due to absence of 'Placeholder' layer in the model. It considered as error because resulting IR will be empty which is not usual
```

Including the parameter `--input_shape [1,300,300,3]` didn't work either.

Attached are the checkpoint files generated by the training run. I would really appreciate it if you could help with further troubleshooting.
