I'm setting up 100 queues, each with a shared-memory array of 1k floats. Each queue runs a simple parallel_for kernel that does a single multiply-and-assign to fill its array, and then I wait on each of the returned events.

I time three passes.

on cpu:

N_QUEUES=100, N=1000

xpu-time :1.26718

xpu-time :0.00582737

xpu-time :0.00395401

Passed

on GPU:

N_QUEUES=100, N=1000

xpu-time :10.3693

xpu-time :0.0118774

xpu-time :0.0101834

In both cases the arrays are filled with the expected values, but the time for the first pass seems extremely long. I'm wondering if this is some known start-up overhead for tbb, since the book examples usually include a warm-up pass so that the initial task is excluded from the measurement.
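To separate the first-pass cost from the steady-state cost, as the warm-up convention mentioned above does, a small timing harness can record every pass and average only the later ones. This is a minimal sketch in standard C++; `time_passes` and `steady_state_mean` are hypothetical helper names, and `work` stands in for whatever submits the kernels and waits on their events.

```cpp
#include <chrono>
#include <cstddef>
#include <functional>
#include <vector>

// Runs `work` once per pass and returns the wall-clock seconds of each pass.
// `work` is a placeholder (assumption) for "submit to all queues, then wait".
std::vector<double> time_passes(const std::function<void()>& work, int passes) {
    std::vector<double> seconds;
    seconds.reserve(passes > 0 ? static_cast<std::size_t>(passes) : 0);
    for (int i = 0; i < passes; ++i) {
        const auto start = std::chrono::steady_clock::now();
        work();
        const auto stop = std::chrono::steady_clock::now();
        seconds.push_back(std::chrono::duration<double>(stop - start).count());
    }
    return seconds;
}

// Mean of every pass except the first, which is treated as the warm-up pass.
// Returns 0.0 when there are fewer than two passes.
double steady_state_mean(const std::vector<double>& seconds) {
    if (seconds.size() < 2) return 0.0;
    double sum = 0.0;
    for (std::size_t i = 1; i < seconds.size(); ++i) sum += seconds[i];
    return sum / static_cast<double>(seconds.size() - 1);
}
```

Reporting `seconds[0]` next to `steady_state_mean(seconds)` keeps the one-time start-up cost visible instead of hiding it, which is what matters for the question here.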

Assuming that is the issue, is there some way to initialize tbb for a large number of tasks at once rather than adding them one at a time?

I'm substituting this code for fig_1_1_hello in the DPC++ book and building with the makefile generated by its CMake setup on Ubuntu 20.04 Linux, using the current Docker distribution of oneAPI.

After thinking about this some more: is this perhaps JIT compilation time being added for each queue?

After searching for JIT issues, I see that this is a known issue, costing around 140 ms per kernel. I changed my queue count to 1000 and saw the initial pass go up to around 140 s on GPU. The proposed solution is to use ahead-of-time (AOT) compilation.

I also noticed during further testing that the app takes a very long time to exit, so there must be some clean-up associated with multiple queues. Are the kernel executables being unloaded at exit?

I'll attach my example code.

Hi,

Thanks for reaching out to us.

Thank you for providing the reproducible code. We are looking into it and we will get back to you soon.

Thanks & Regards,

Santosh

Hi,

We looked into your code. The 100 queues created in the first iteration are reused in the second and third iterations without being freed at the end of the first iteration. Because queue initialization takes time, the first iteration takes longer than the second and third, which reuse the already-initialized queues.

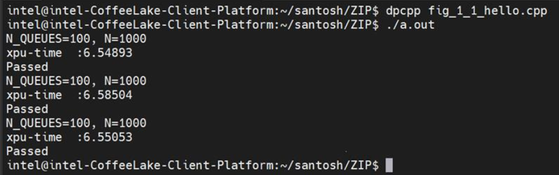

We have attached sample code in which we free the queues at the end of each iteration. With that change, each iteration takes a similar time to execute, as shown in the screenshot.

Thanks & Regards,

Santosh

Yes, I understand that creating new queues on each pass repeats the JIT compilation overhead, so every measurement takes a long time. That isn't a very useful example, since the objective is generally to reduce execution time.

The real problem here is that the JIT compilation time appears to increase linearly with the number of queues. For example, if I change N_QUEUES from 100 to 1000, the execution time goes up:

for cpu:

N_QUEUES=1000, N=100

xpu-time :123.282

xpu-time :0.0236045

xpu-time :0.0236197

for gpu:

N_QUEUES=1000, N=100

xpu-time :147.845

xpu-time :0.931922

xpu-time :0.954865

Also, in the same example with N_QUEUES=1000 and cpu_selector, exiting the program takes over a minute.

It exits immediately when using gpu_selector. This is a different issue, but you already have the code.

On the JIT compilation overhead: why should there be a linear overhead for every queue/kernel? Isn't this a good candidate for doing multiple compilations in parallel?

Hi,

To understand your query better, could you please tell us the use case or intention behind creating 1000 queues and running the same task on each queue?

Thanks & Regards,

Santosh

Hi,

We haven't heard back from you. Could you please tell us the use case or intention behind creating 1000 queues and running the same task on each queue?

Best Regards,

Santosh

This resnet-50 description shows large numbers of filter channels: 1024 and 2048 in the conv4 and conv5 layers. Assuming you are trying to lock specific filter channels to specific cores, so that their filter parameters stay in those cores' caches, this could be a use case that benefits from specifying a large number of queues.

I don't have a specific use case that requires that many queues.

I suspect FPGA tasks with kernel-to-kernel pipes could require explicit locking of kernel tasks to hardware. Perhaps that would be a use case.

I see the taskflow project referencing apps that require millions of tasks. Perhaps their application could provide a use case. See https://taskflow.github.io/.

This Intel video, https://youtu.be/p7HWSciMAms?t=994, suggests using multiple in-order queues as a way to obtain more parallelism. Perhaps there is a use case there.

I would guess that neural net execution with a high batch size could specify a queue per batch index. For example, batch sizes of 256 are used in the Intel Ponte Vecchio resnet configurations at https://edc.intel.com/content/www/us/en/products/performance/benchmarks/architecture-day-2021/?r=1849242047

Hi,

We are analyzing your issue and we will get back to you soon.

Thanks & regards,

Santosh

Hi,

We analyzed your code and attached the log files regarding the API timing results for your reference.

For Q=100:

| Function | Calls | Time (%) |
|---|---|---|
| zeModuleCreate | 100 | 97.22 |
| zeCommandQueueExecuteCommandLists | 300 | 1.98 |

For Q=1000:

| Function | Calls | Time (%) |
|---|---|---|
| zeModuleCreate | 1000 | 94.76 |
| zeCommandQueueExecuteCommandLists | 3000 | 4.39 |

From the above analysis, we can see that module creation happens once per queue, that is, Q times rather than 3×Q times, and that it accounts for most of the time (97.22% for Q=100, 94.76% for Q=1000). As a result, there is a long overhead on the initial use of multiple queues but not in the successive iterations.

Because the modules are created one after another, the time can be expected to increase linearly as the number of queues increases from 100 to 1000.

Thanks & Regards,

Santosh

Hi,

We haven't heard back from you. Could you please provide us with an update on your issue?

Thanks & Regards,

Santosh

Hi,

We have not heard back from you. This thread will no longer be monitored by Intel. If you need further assistance, please post a new question.

Thanks & Regards,

Santosh
