I am using a code (Wien2k) which makes extensive use of LAPACK/ScaLAPACK via the MKL library, and can also run in hybrid OpenMP+MPI mode. In my prior experience, and that of others, the hybrid mode with 2 OpenMP threads was slightly slower, perhaps by 10%.

On a 64-core Gold 6338 node it is very different: 2 OpenMP threads with the rest MPI is ~1.6 times faster! I cannot explain this, and I am wondering whether it somehow relates to the architecture or is a bug when using all 64 cores as MPI processes.

For reference, I am using the 2021.1.1 versions of MKL/compiler/IMPI, as later ones don't work for reasons I have not been able to determine (the large matrix-eigensolver program hangs).

I can provide a way to reproduce this, but it would involve transferring a large code & some control files.

Slightly embarrassing!

Before posting in November I double-checked the timings, talked to a colleague in Cambridge who had seen something similar with other code, and also checked with the local sysadmins for the cluster at Northwestern. Everyone indicated it was real, and there were similar reports on the internet.

When you asked for timings with 2 nodes (attached), pure MPI was not 1.5 times slower. I rechecked, and with 3 nodes it is now also not abnormally slow. On investigating, I was told that not long after my tests and posting, a switch on the cluster serving the nodes I was using died, as did one of the nodes itself. I do not know the specifics, and I suspect both have now been recycled. My guess is that there were major hardware problems at the time of the test which were being patched over by slow fault-tolerant algorithms, at a large cost in time. This is only a guess, and I have no idea why it should affect pure MPI more than hybrid.

Attached in a tar is the output of "grep -ie time" on Up_1 and Up_2 with 2 nodes. I slightly edited PtF.klist (also attached) so the calculations would be faster. I am also attaching the M1 and M2 files to use for these. It will still be interesting to know what your timings are.

Hi Laurence,

Thanks for reaching out to us.

>>I can provide a way to reproduce this..

It would be a great help if you could provide us with a sample reproducer and the steps to reproduce it, so that we can check it from our end as well.

Regards,

Vidya.

The Intel version is 2021.1.1. Later versions have worse problems, hanging with no information.

$ uname -a

Linux qnode1058 3.10.0-1160.71.1.el7.x86_64 #1 SMP Wed Jun 15 08:55:08 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux

[lma712@qnode1058 ~]$ head -20 /proc/cpuinfo

processor : 0

vendor_id : GenuineIntel

cpu family : 6

model : 106

model name : Intel(R) Xeon(R) Gold 6338 CPU @ 2.00GHz

stepping : 6

microcode : 0xd000363

cpu MHz : 2000.000

cache size : 49152 KB

physical id : 0

siblings : 32

core id : 0

cpu cores : 32

apicid : 0

initial apicid : 0

fpu : yes

fpu_exception : yes

cpuid level : 27

wp : yes
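The "siblings" and "cpu cores" fields above are enough to tell whether hyperthreading is on: on Linux, "siblings" counts logical CPUs per physical package and "cpu cores" counts physical cores per package, so siblings > cpu cores means SMT is enabled (here both are 32, so it appears to be off). Below is a minimal Python sketch that summarizes a whole /proc/cpuinfo this way; the function names are hypothetical and it assumes the usual Linux x86 field names.

```python
def parse_cpuinfo(text):
    """Split /proc/cpuinfo text into one dict per logical CPU.

    Blocks are separated by blank lines; each line is 'key<TAB>: value'.
    """
    cpus, current = [], {}
    for line in text.splitlines():
        if not line.strip():
            # A blank line ends the current processor block.
            if current:
                cpus.append(current)
                current = {}
            continue
        key, _, value = line.partition(":")
        current[key.strip()] = value.strip()
    if current:
        cpus.append(current)
    return cpus


def summarize(cpus):
    """Count sockets, physical cores and logical CPUs; flag SMT."""
    sockets = {c["physical id"] for c in cpus if "physical id" in c}
    n_sockets = len(sockets) or 1
    first = cpus[0]
    siblings = int(first["siblings"])  # logical CPUs per socket
    cores = int(first["cpu cores"])    # physical cores per socket
    return {
        "logical_cpus": len(cpus),
        "sockets": n_sockets,
        "physical_cores": n_sockets * cores,
        "hyperthreading": siblings > cores,
    }


def read_cpuinfo(path="/proc/cpuinfo"):
    """Convenience wrapper for the live system (Linux only)."""
    with open(path) as f:
        return summarize(parse_cpuinfo(f.read()))
```

Calling read_cpuinfo() on a compute node gives the socket and core counts directly; with siblings equal to cpu cores, as above, "hyperthreading" comes out False.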

Hi Laurence,

Thanks for providing us with the details.

Could you please let us know whether any additional dependencies need to be installed to run this?

If so, please provide us with the required information so that we can proceed further.

Regards,

Vidya.

Nothing beyond ifort/icc/impi, tcsh & standard Linux.

For reference, I am seeing the 6338 as about 1.5 times slower (normalized to the number of cores) than a 6130 in pure mpi, with a speedup of about 1.75 using hybrid.

I spoke to a colleague in Cambridge, UK, and they have seen something similar, in fact far worse: it can be a factor of 10. You are probably going to hear multiple grumbles from major supercomputer users around the world about similar issues.

Hi Laurence,

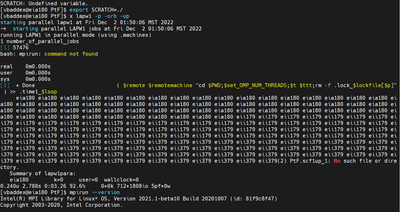

I tried following the steps in the README, and this is the error I get when running this step:

x lapw1 -p -orb -up

Could you please let me know what I am missing here and help me resolve this issue?

Regards,

Vidya.

Hi Laurence,

Yes, I've already sourced the oneAPI setvars.sh script, but I'm still getting the "mpirun command not found" error (from the screenshot in my previous post you can see that mpirun --version works fine, as I've already set up the oneAPI environment).

Do you have any idea about this error? For example, is there any script that affects the oneAPI environment setup?

Please do let me know so that I can proceed further.

Regards,

Vidya.

1) Before doing "x lapw1 -up -p -orb", do "which mpirun". If mpirun is not found, you have an incomplete oneAPI installation and will need to add the impi (Intel MPI) package.

2) If you find mpirun, edit lapw1para and after:

#which def-file are we using?

if ($#argv < 1) then

echo usage: $0 deffile

exit

endif

Add "which mpirun" at that point (line 134).

Let me know if the first works but the second fails. If that is the case I will try and replicate it myself.
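When "which mpirun" works in one place but not another, it helps to separate "Intel MPI is not installed/loaded" from "PATH was changed afterwards". A minimal Python sketch of that comparison; the function name is hypothetical, and it assumes that sourcing setvars.sh with the impi package present exports I_MPI_ROOT and that mpirun lives in $I_MPI_ROOT/bin (adjust if your layout differs).

```python
import os
import shutil


def locate_mpirun(env=None):
    """Report where mpirun is visible, given an environment mapping.

    env defaults to the current process environment. Returns a dict with:
      on_path      - what 'which mpirun' would find on PATH (or None)
      impi_root    - the I_MPI_ROOT variable (None if unset)
      in_impi_root - $I_MPI_ROOT/bin/mpirun if it exists and is executable
    """
    env = os.environ if env is None else env
    on_path = shutil.which("mpirun", path=env.get("PATH", ""))
    impi_root = env.get("I_MPI_ROOT")
    in_impi_root = None
    if impi_root:
        candidate = os.path.join(impi_root, "bin", "mpirun")
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            in_impi_root = candidate
    return {"on_path": on_path, "impi_root": impi_root,
            "in_impi_root": in_impi_root}


if __name__ == "__main__":
    info = locate_mpirun()
    print(info)
    if info["impi_root"] is None:
        print("I_MPI_ROOT unset: Intel MPI environment not loaded (impi missing?)")
    elif info["on_path"] is None:
        print("I_MPI_ROOT set but mpirun not on PATH: PATH reset after setvars.sh?")
```

Run it in the shell where setvars.sh was sourced: I_MPI_ROOT unset points at case 1) above, while I_MPI_ROOT set with nothing on PATH suggests something is resetting PATH.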

Hi Laurence,

Could you please let us know approximately how long this step (x lapw1 -up -p -orb) should take to finish?

I let it run for about 2.5 hours, but it was still running.

Regards,

Vidya.

If you have done "cp M2 .machines", where M2 is (with the node names edited):

granularity:1

omp_lapw1:2

1:node01:32 node02:32 node03:32

extrafine

That should take 27 minutes. M1 should take about 45 minutes. If you instead used:

granularity:1

omp_lapw1:1

1:node01:64

extrafine

In principle it should take about 90 minutes. It will either crash somewhere in impi, or run forever for reasons I do not understand.

Please ensure that you did not oversubscribe the number of MPI processes, as they then compete/conflict and it may never finish.
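The two .machines layouts above differ only in omp_lapw1 and the number of ranks per node, so both can come from one helper that also refuses to oversubscribe. A minimal Python sketch: the helper name is hypothetical, it only reproduces the four-line layout shown above (not the full WIEN2k .machines syntax), and it assumes 64 physical cores per node as on the 6338 nodes discussed here.

```python
def machines_file(nodes, ranks_per_node, omp_threads, cores_per_node=64):
    """Build .machines text in the layout shown above.

    One k-point group spans all listed nodes; raises ValueError if
    ranks_per_node * omp_threads would exceed the cores on a node.
    """
    if ranks_per_node * omp_threads > cores_per_node:
        raise ValueError(
            f"{ranks_per_node} ranks x {omp_threads} threads "
            f"> {cores_per_node} cores per node (oversubscribed)")
    hosts = " ".join(f"{n}:{ranks_per_node}" for n in nodes)
    lines = [
        "granularity:1",
        f"omp_lapw1:{omp_threads}",
        f"1:{hosts}",
        "extrafine",
    ]
    return "\n".join(lines) + "\n"
```

For example, machines_file(["node01", "node02", "node03"], 32, 2) reproduces the hybrid M2 layout, and machines_file(["node01"], 64, 1) the pure-MPI one; writing the result to .machines replaces the manual copy.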

Hi Laurence,

If possible, could you please try to isolate the issue that you are facing in the form of a sample reproducer code to reproduce the performance issue that you are observing with hybrid mode so that it would be easier to address the issue quickly?

Regards,

Vidya.

It is not appropriate to make a toy version; it would not be representative.

I assume that you still cannot get it to run. What is your .machines file? What CPU are you using? What is your network?

Hi Laurence,

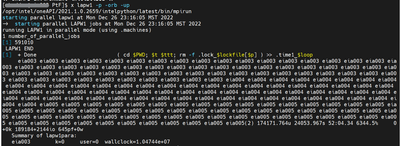

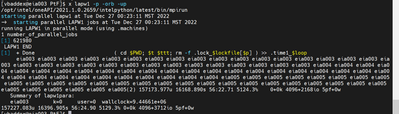

Could you please let me know if I'm on the right track in replicating the issue you are observing?

Here is the screenshot of the output of the x lapw1 -p -orb -up command (5th step in the README).

Here is the screenshot of the output of the x lapw1 -p -orb -up command (8th step in the README).

Regards,

Vidya.

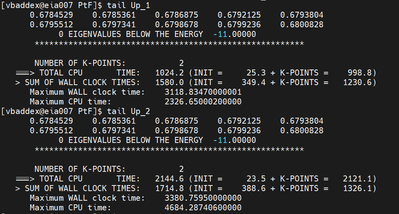

[lma712@quser21 PtF]$ tail *output1up_1

0.6516724 0.6517651 0.6518586 0.6521098 0.6522035

0.6522145 0.6523540 0.6524470 0.6525784 0.6528393

0 EIGENVALUES BELOW THE ENERGY -11.00000

********************************************************

NUMBER OF K-POINTS: 2

===> TOTAL CPU TIME: 1979.8 (INIT = 16.8 + K-POINTS = 1963.0)

> SUM OF WALL CLOCK TIMES: 1020.1 (INIT = 17.1 + K-POINTS = 1003.0)

Maximum WALL clock time: 2230.08410000001

Maximum CPU time: 4212.38736000000
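To compare runs without reading screenshots, the two "Maximum ... time" lines can be pulled out of the output text. A minimal Python sketch, assuming only the line format shown above; the function names are hypothetical.

```python
import re

# Matches e.g. "Maximum WALL clock time: 2230.08410000001"
#          and "Maximum CPU time: 4212.38736000000"
_MAX_TIME = re.compile(r"Maximum\s+(WALL clock|CPU)\s+time:\s*([0-9.]+)")


def max_times(text):
    """Return {'wall': seconds, 'cpu': seconds} from lapw1 output text.

    If a line occurs several times (e.g. several files concatenated, or
    grep output with 'file:' prefixes), the largest value of each kind wins.
    """
    times = {}
    for kind, value in _MAX_TIME.findall(text):
        key = "wall" if kind.startswith("WALL") else "cpu"
        times[key] = max(times.get(key, 0.0), float(value))
    return times


def cpu_wall_ratio(text):
    """CPU/WALL ratio, roughly the number of busy threads per MPI rank."""
    t = max_times(text)
    return t["cpu"] / t["wall"]
```

For the tail above, cpu_wall_ratio gives about 1.89.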

For reference, with just MPI the last two lines I have are:

Maximum WALL clock time: 3520.51289999999

Maximum CPU time: 3510.76150400000

And combining OpenMP & MPI:

Maximum WALL clock time: 2228.30399999999

Maximum CPU time: 4237.83427500000

WALL is the elapsed time (in seconds), which is what matters. I expect your numbers to be similar.
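Working through the two sets of numbers above (rounded to 0.1 s), the hybrid speedup and the CPU/WALL ratio of each run come out as:

$$
S = \frac{T_{\mathrm{wall}}^{\mathrm{MPI}}}{T_{\mathrm{wall}}^{\mathrm{hybrid}}} = \frac{3520.5\,\mathrm{s}}{2228.3\,\mathrm{s}} \approx 1.58,
\qquad
\frac{T_{\mathrm{cpu}}^{\mathrm{MPI}}}{T_{\mathrm{wall}}^{\mathrm{MPI}}} = \frac{3510.8}{3520.5} \approx 1.00,
\qquad
\frac{T_{\mathrm{cpu}}^{\mathrm{hybrid}}}{T_{\mathrm{wall}}^{\mathrm{hybrid}}} = \frac{4237.8}{2228.3} \approx 1.90
$$

A ratio near 2 in the hybrid run is presumably the two OpenMP threads per rank both accumulating CPU time.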

Hi Laurence,

Could you please check the screenshot below and let me know if this is the expected result (I guess there is some difference)?

Regards,

Vidya.

Something is very odd with your numbers, as WALL should be only slightly larger than CPU with pure MPI, and about half of CPU in hybrid. Thoughts:

1) Do you have a fast InfiniBand or a slow Ethernet interconnect?

2) Is hyperthreading on (it can get in the way)?

3) Was anyone else running on those nodes, beyond standard OS?

Please do "grep -ie time Up_1 Up_2" and find a way to get me the output. It might give me a clue.

Currently confused!
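The rule of thumb above can be written as a quick check. A minimal Python sketch; the function name and the 25% tolerance are illustrative assumptions, not WIEN2k or MPI settings.

```python
def check_ratio(cpu, wall, omp_threads, tolerance=0.25):
    """Compare CPU/WALL with the rough expectation of ~omp_threads.

    Pure MPI (omp_threads=1): WALL only slightly above CPU, ratio just under 1.
    Hybrid with 2 threads: WALL about half of CPU, ratio about 2.
    tolerance is a relative band chosen for illustration only.
    Returns (ratio, within_expectation).
    """
    if wall <= 0:
        raise ValueError("wall time must be positive")
    ratio = cpu / wall
    expected = float(omp_threads)
    return ratio, abs(ratio - expected) <= tolerance * expected
```

With the reference numbers posted earlier, check_ratio(3510.76, 3520.51, 1) gives about 0.997 and check_ratio(4237.83, 2228.30, 2) about 1.90, both inside the band. A ratio well below the expectation suggests ranks or threads spent their time waiting rather than computing, which is where the three questions above come in.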
