
Hello everyone,

We recently transitioned our code to utilise the MKL Data Fitting splines and have now noticed a rather large issue with memory usage.

In our largest examples we can allocate in excess of 1e6 splines using the MKL spline library, and we are seeing an additional overhead of ~100GB of RAM from the MKL splines alone.

Previously we used our own code for the natural cubic spline, and we have compared the two implementations directly to understand and isolate the memory increase. It seems that the additional memory is allocated within dfdNewTask1D.

The splines themselves are nothing too complicated or large: they typically consist of ~27 knots and use the natural cubic spline method.
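For a sense of scale, here is a minimal sketch of a hand-written natural cubic spline of the kind described. It is hypothetical: it is not the author's actual code, and `NaturalCubicSpline` is our own name. It solves the tridiagonal system for the second derivatives with f''(x) = 0 at both ends, then stores four polynomial coefficients per interval (similar in spirit to a DF_PP_CUBIC piecewise polynomial). With 27 knots, the data it holds is about 1.3 KB per spline.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical hand-written natural cubic spline (f''(x) = 0 at both ends).
// On interval i it stores a + b*t + c*t^2 + d*t^3 with t = x - x[i].
class NaturalCubicSpline {
public:
    NaturalCubicSpline(std::vector<double> x, std::vector<double> y)
        : x_(std::move(x)), y_(std::move(y)) {
        const std::size_t n = x_.size();
        assert(n >= 3 && y_.size() == n);
        // Thomas algorithm for the interior second derivatives M[1..n-2];
        // M[0] = M[n-1] = 0 are the natural boundary conditions.
        std::vector<double> M(n, 0.0), cp(n, 0.0), dp(n, 0.0);
        for (std::size_t i = 1; i + 1 < n; ++i) {
            const double h0 = x_[i] - x_[i - 1];
            const double h1 = x_[i + 1] - x_[i];
            const double rhs = 6.0 * ((y_[i + 1] - y_[i]) / h1 - (y_[i] - y_[i - 1]) / h0);
            const double denom = 2.0 * (h0 + h1) - h0 * cp[i - 1];
            cp[i] = h1 / denom;
            dp[i] = (rhs - h0 * dp[i - 1]) / denom;
        }
        for (std::size_t i = n - 2; i >= 1; --i) M[i] = dp[i] - cp[i] * M[i + 1];

        // Convert second derivatives to 4 polynomial coefficients per interval.
        coef_.reserve(4 * (n - 1));
        for (std::size_t i = 0; i + 1 < n; ++i) {
            const double h = x_[i + 1] - x_[i];
            coef_.push_back(y_[i]);
            coef_.push_back((y_[i + 1] - y_[i]) / h - h * (2.0 * M[i] + M[i + 1]) / 6.0);
            coef_.push_back(M[i] / 2.0);
            coef_.push_back((M[i + 1] - M[i]) / (6.0 * h));
        }
    }

    double operator()(double xv) const {
        // Interval containing xv, clamped to the first/last interval.
        const auto it = std::upper_bound(x_.begin(), x_.end(), xv);
        std::size_t i = (it == x_.begin()) ? 0 : static_cast<std::size_t>(it - x_.begin()) - 1;
        i = std::min(i, x_.size() - 2);
        const double t = xv - x_[i];
        const double* k = &coef_[4 * i];
        return k[0] + t * (k[1] + t * (k[2] + t * k[3]));  // Horner form
    }

    // Bytes of spline data held (x, y, coefficients), ignoring std::vector overhead.
    std::size_t payload_bytes() const {
        return (x_.size() + y_.size() + coef_.size()) * sizeof(double);
    }

private:
    std::vector<double> x_, y_, coef_;
};
```

With 27 knots, `payload_bytes()` is (27 + 27 + 4 × 26) × 8 = 1264 bytes. For 1e6 such splines that comes to roughly 1.3 GB of spline data, which gives a rough baseline for judging the ~100 GB overhead.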

I have tried disabling the fast memory management and freeing unused memory in MKL, as discussed at avoiding-memory-leaks-in-intel-mkl.html. These options have no impact on the overall memory usage.

Is there anything that can be done to reduce the memory overhead of the MKL data fitting splines?

Is this memory usage expected?

Thanks,

Ewan

---

Ewan,

The memory consumption issue has been resolved in oneMKL 2021.2, which is available for download.

---

Ewan, do you use anything from MKL besides the splines?

---

Hi Gennady,

Yes, MKL is used elsewhere in the tool, for example LAPACK.

Within the cubic spline code, I restrict the MKL include to just "mkl_df.h".

Ewan

---

Hi Ewan,

Glad to see new topics from you!

Could you please provide a little more detail on the following points:

>> In our largest examples we can allocate in excess of 1e6 splines using the MKL spline library and we are seeing an additional overhead of ~100GB of RAM from just the MKL splines.

Do you create a new Data Fitting task for each spline? And do you use the dfDeleteTask() routine to free the memory when the task is no longer needed?

It would also be great to know the Data Fitting task parameters such as “nx”, “ny” and “yhint”.

The answers will help me understand and reproduce the observed problem.

Best regards,

Pavel

---

Hi Pavel,

I'm glad you are happy to see me; I wasn't sure how keen you would be to hear from me again!

OK, let me answer your questions and give you some details so that you can reproduce the problem:

- Yes, a new datafitting task is created for every spline.

- Yes, we do correctly deallocate splines when they are no longer used. However, during our computation cycle all >1e6 splines will be required. Only at the end of computation do we deallocate and clean up the splines.

- It may be worth noting that, based on some analysis with Heaptrack (a heap-only analyser), the memory consumption doesn't appear to be a memory leak.

- The large bulk of splines are natural cubic splines with BCs of f''(x) == 0.0 at both ends.

- Spline type DF_PP_NATURAL with DF_PP_CUBIC order.

- Spline BC:

  - DF_BC_2ND_LEFT_DER == 0.0

  - DF_BC_2ND_RIGHT_DER == 0.0

- No internal conditions, with DF_NO_HINT.

- Using DF_NON_UNIFORM_PARTITION for x-values hint.

- Using DF_NO_HINT for y-values hint.

- Using DF_NO_HINT for spline coefficients.

- Typically these splines have something like 27 knots (nx=ny=~27 points).

If you have any more questions then let me know.

Ewan

---

Hi Ewan,

Thank you for the provided details.

Gennady and I are checking this on our side.

Preliminarily, I can say that about 16GB is used by the Data Fitting tasks in the case of 1e6 splines.

Some temporary memory is also allocated during the interpolation routine call, but it is freed at the end of the call.

If all of the 1e6 splines use the same x-coordinates with different function values, I would suggest using a single Data Fitting task with a vector-valued function.
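To make the storage side of this suggestion concrete, here is a small sketch under stated assumptions. With separate tasks, every spline carries its own copy of x. A single vector-valued task holds x once, plus the function values as one ny-by-nx block. The row-major layout here (function f's values contiguous) is our assumption about what you would pass. Check the Data Fitting documentation for the y-storage hints (for example DF_MATRIX_STORAGE_ROWS) before relying on it. The helper names are hypothetical, and the counts cover input data only, not MKL's internal allocations.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helpers; counts are input doubles only, not MKL internals.

// nf separate splines: each stores its own x and y (nx values each).
constexpr std::size_t separate_input_doubles(std::size_t nf, std::size_t nx) {
    return nf * (nx + nx);
}

// One vector-valued function: x stored once, y stored as an nf-by-nx block.
constexpr std::size_t shared_input_doubles(std::size_t nf, std::size_t nx) {
    return nx + nf * nx;
}

// Row-major index of knot j of function f in the shared y block (assumed layout).
constexpr std::size_t y_index(std::size_t f, std::size_t j, std::size_t nx) {
    return f * nx + j;
}

// Pack per-function value arrays (each of length nx) into one row-major block.
std::vector<double> pack_rows(const std::vector<std::vector<double>>& rows, std::size_t nx) {
    std::vector<double> y(rows.size() * nx);
    for (std::size_t f = 0; f < rows.size(); ++f) {
        assert(rows[f].size() == nx);
        for (std::size_t j = 0; j < nx; ++j) y[y_index(f, j, nx)] = rows[f][j];
    }
    return y;
}
```

For 1e6 functions on 27 shared knots, this is 54,000,000 input doubles (432 MB) stored separately versus 27,000,027 (about 216 MB) shared. Any larger saving would have to come from avoiding per-task overhead, which this sketch does not measure.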

In any case, we are continuing to investigate.

Best regards,

Pavel

---

OK, so you don't seem to be seeing anywhere near the same memory usage.

I probably should have mentioned this above, but I am using MKL 2019_U5.

What version of MKL are you guys testing with?

Ewan

---

We tested the latest version, 2020 u4.

---

Ewan,

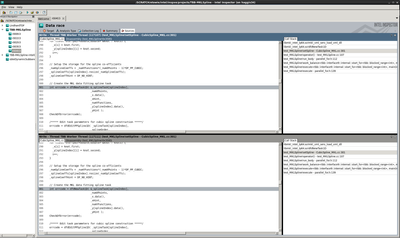

We tried to emulate the case you reported and measured the amount of memory consumed by MKL. Please take a look at the attached example code.

Here is what we see (MKL 2020 u4, OpenMP threaded version, LP64 mode, RH7):

$ ./a.out 1000000 <--- the input number of splines

Number of Splines == 1000000

Peak memory allocated by Intel(R) MKL allocator : 17208004048 bytes.

This means that MKL itself allocates only about 17 GB of memory.
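As a cross-check of the units, dividing the reported peak by the number of splines gives the per-spline cost. Converting the total both ways shows that the "16GB" quoted earlier in the thread and the "17 GB" here are the same figure: about 16 GiB (binary) versus 17 GB (decimal). The constant and function names are ours. The only inputs are numbers quoted in this thread.

```cpp
#include <cstdint>

// Inputs quoted in this thread.
constexpr std::uint64_t kPeakBytes  = 17208004048ULL;  // MKL allocator peak, 2020 u4 test
constexpr std::uint64_t kNumSplines = 1000000ULL;      // splines created in the test
constexpr std::uint64_t kOverheadGB = 100;             // overhead reported in the full code

constexpr double bytes_per_spline(std::uint64_t total, std::uint64_t n) {
    return static_cast<double>(total) / static_cast<double>(n);
}

constexpr double to_gib(std::uint64_t bytes) {  // binary gigabytes, 2^30 bytes
    return static_cast<double>(bytes) / (1024.0 * 1024.0 * 1024.0);
}

constexpr double to_gb(std::uint64_t bytes) {   // decimal gigabytes, 10^9 bytes
    return static_cast<double>(bytes) / 1e9;
}
```

With these inputs, `bytes_per_spline(kPeakBytes, kNumSplines)` is about 17.2 KB per spline, `to_gib` gives about 16.03 and `to_gb` about 17.21. By contrast, the ~100 GB seen in the full application would be about 100 KB per spline.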

If your usage model is different, please send us a reproducer that shows the 100 GB of memory consumed by MKL.

-Gennady

---

The test we built following your instructions is attached.

---

Thanks, I will have a look at the test case and try to reproduce it on my side.

The only difference I can immediately see is that I use only the serial MKL library. In our software we make heavy use of TBB parallelism, and therefore we try to stick with serial MKL functions.

Ewan

---

Hi,

Just a quick update, as I'm on holiday next week.

I repeated your test case in my build environment and can confirm that I see the same memory usage of 16GB per 1e6 splines.

I have also quickly thrown together a test case based on my MKL spline class (a wrapper for your library calls), and I see the same memory usage there too.

So I will run another memory analysis using our full software to get more information on the memory issue. If possible, I will try to produce a reproducer, or at least get a better understanding and work backwards from there.

TL;DR: I am on holiday next week; when I return I will pick this up and figure out what is unique about my case.

---

Hi,

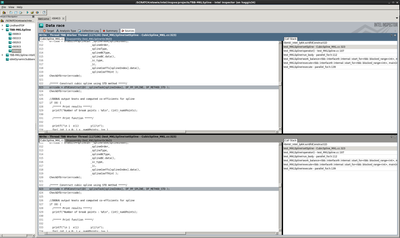

OK, I've recreated the test using a cut-down version of my MKL spline class (a wrapper for the Data Fitting functions). In this test the splines can be constructed either serially or in parallel using a tbb::parallel_for loop.

I've compiled with the 2019U5 versions of MKL and TBB, using the serial version of the MKL library.

The arguments for the compiled binary are: <binary> <numSplines> <parallelFlag>

where <parallelFlag>=0 for serial mode, or <parallelFlag>=1 for parallel mode.
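A minimal sketch of such a harness follows, assuming the same `<numSplines> <parallelFlag>` convention. To keep it self-contained, it uses `std::thread` in place of `tbb::parallel_for` (a swapped-in technique), and a hypothetical `Spline` type stands in for `CubicSpline_MKL`. It illustrates one way to keep the caller's own code race-free: the result vector is sized before any worker starts, and each worker writes only to its own disjoint range of slots. It does not address any race reported inside library code.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <memory>
#include <string>
#include <thread>
#include <vector>

// Hypothetical stand-in for CubicSpline_MKL: holds nx knots and values.
struct Spline {
    std::vector<double> x, y;
    explicit Spline(std::size_t seed, std::size_t nx = 27) : x(nx), y(nx) {
        for (std::size_t j = 0; j < nx; ++j) {
            x[j] = static_cast<double>(j);
            y[j] = static_cast<double>(seed + j);
        }
    }
};

// Build n splines serially, or in parallel with one contiguous index block per thread.
std::vector<std::unique_ptr<Spline>> build_splines(std::size_t n, bool parallel) {
    std::vector<std::unique_ptr<Spline>> out(n);  // sized up front: never reallocated below
    if (!parallel) {
        for (std::size_t i = 0; i < n; ++i) out[i] = std::make_unique<Spline>(i);
        return out;
    }
    const std::size_t nthreads = std::max<std::size_t>(1, std::thread::hardware_concurrency());
    const std::size_t chunk = (n + nthreads - 1) / nthreads;
    std::vector<std::thread> workers;
    for (std::size_t t = 0; t < nthreads; ++t) {
        const std::size_t begin = t * chunk;
        const std::size_t end = std::min(n, begin + chunk);
        if (begin >= end) break;
        // Each worker writes only out[begin, end): disjoint slots, no shared writes.
        workers.emplace_back([&out, begin, end] {
            for (std::size_t i = begin; i < end; ++i) out[i] = std::make_unique<Spline>(i);
        });
    }
    for (auto& w : workers) w.join();
    // Every slot should have been filled by exactly one worker.
    assert(std::all_of(out.begin(), out.end(),
                       [](const std::unique_ptr<Spline>& p) { return p != nullptr; }));
    return out;
}

// Parse "<numSplines> <parallelFlag>"; returns false on malformed input.
bool parse_args(int argc, char** argv, std::size_t& n, bool& parallel) {
    if (argc != 3) return false;
    n = std::strtoull(argv[1], nullptr, 10);
    const std::string flag = argv[2];
    if (flag != "0" && flag != "1") return false;
    parallel = (flag == "1");
    return true;
}
```

A `main` would call `parse_args(argc, argv, n, parallel)` and then `build_splines(n, parallel)`. With TBB, the same idea carries over by letting each `parallel_for` range write only its own indices of a pre-sized container.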

I don't reproduce the memory consumption values I see in my full code (I'm still investigating this), but if I run the test through Inspector I see a data race that I don't fully understand. A further step would be to check that the constructed splines are actually valid and correct, but I've run out of time for this today.

Obviously the peak memory consumption of the parallel case is higher (and is probably only an estimate).

Can you confirm if the data race in the attached example is something I should be concerned about, or not?

Thanks,

Ewan

---

Hi,

I may not have made it clear in my previous post that I see the data race within the TBB library code.

Has anyone picked this up to investigate it yet?

Thanks,

Ewan

PS: I've attached a quick attempt at parallelising your test code with a TBB parallel_for loop. It doesn't work well at all! I don't have time to figure out what needs to be changed for safe memory access, but maybe it will help get you started?

---

Regarding the previous message about the data race reported by Inspector: yes, we managed to see this data race, which points to the line

CubicSpline_MKL *spline = new CubicSpline_MKL(CubicSpline_MKL::SplineType::NORMAL,

where no MKL calls happen. I think the problem is outside of MKL and mostly related to the TBB parallel loop.

---

I definitely do not see the data race on the line you mention!

I've attached my Inspector analysis, which shows a data race occurring within dfdNewTask1D and another data race occurring within dfdConstruct1D. Below are some screenshots demonstrating the data races.

---

Hi Ewan,

Sorry for the delay in responding, and thanks for attaching the Inspector report.

I think the reason that Gennady and I saw a different Inspector report is that we checked the latest oneMKL build (instead of 2019U5).

I reproduced the report you sent with the 2019U5 MKL release.

We investigated this issue in the past, and I can confirm that the observed data races do not cause any data corruption or any other correctness or performance issues.

Also, you shouldn't see such problems with the latest oneMKL builds.

Please feel free to ask more.

Best regards,

Pavel

---

Thanks for getting back to me. Is oneMKL available for download on GitHub yet?

Ewan

---

Ewan, the very first version of oneMKL will be released very soon, probably by the end of the week. I will post the announcement at the top of the forum.

---

Ok, in the interim I will download the package for the latest version of MKL to continue my testing.

When I have resolved or better understood the issue, I will update the thread here.

Thanks

---

Hello again!

I was just reading the Release Notes (Intel® Math Kernel Library Release Notes and New Features) and I spotted the following in the section for 2019U5:

Known Limitations:

- GEMM_S8U8S32 and GEMM_S16S16S32 may return wrong results when using Intel® TBB threading if the offsetc parameter is given by a lower case letter.

- GEMM_S8U8S32_COMPUTE and GEMM_S16S16S32_COMPUTE may return wrong results when using Intel(R) TBB threading if neither the A or B matrix are packed into the internal format and the offsetc parameter is given by a lower case letter. As a workaround use only upper case letters for the offsetc parameter in the above situations i.e. ‘F’, ‘C’, or ‘R’.

- Customer using complex general sparse eigenvalue function can come across a potential segmentation fault issue depending on input matrices.

- When MKL is used with TBB-based threading layer, reducing the number of threads that TBB can use (in particular via tbb::global_control) may lead to high memory consumption.

- For the standalone version of Intel® MKL on Linux, if you expect to use Intel® TBB, you will need to install the standalone version of Intel® TBB, otherwise expect the examples to crash. This is a workaround. For more information, see this article.

Is this related to some of the issues you say have been resolved in the 2020 release?
