I'm attempting to measure the performance of MKL's FFTW3 wrapper on a cluster ("libfftw3x_cdft_lp64.a", version 2022.1.0) and I'm seeing large variations in measurement.

The benchmarking code looks like this (pseudo-code):

    initialize mpi
    call "fftw_mpi_init"
    call "fftw_init_threads"
    for i in [0:60]:
        allocate test data using "fftw_alloc_complex"
        create a plan using "fftw_mpi_plan_dft_3d" and FFTW_MEASURE
        record time-stamp-1
        MPI barrier
        call "fftw_execute" on the created plan
        MPI barrier
        record time-stamp-2
        destroy the plan using "fftw_destroy_plan" and deallocate the data
    call "fftw_cleanup_threads"

Note:

- The first 10 iterations of the loop in the above snippet are not timed - these are considered to be warm-up runs.

- I'm recording the minimum, maximum and the mean of the measurements made above.
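The loop and the two notes above can be sketched in C++ roughly as follows. This is a minimal sketch under stated assumptions, not the actual benchmark code: `run_timed`, `summarize` and `TimingStats` are hypothetical names, and the `barrier` and `work` callables are placeholders for `MPI_Barrier(MPI_COMM_WORLD)` and `fftw_execute` on the created plan, so the sketch compiles without MPI or FFTW.

```cpp
#include <algorithm>
#include <chrono>
#include <cstddef>
#include <functional>
#include <numeric>
#include <stdexcept>
#include <vector>

// Summary of the timed (non-warm-up) iterations, in seconds.
struct TimingStats {
    double min;
    double max;
    double mean;
};

// Reduce per-iteration times to min, max and mean,
// ignoring the first `warmup` entries.
inline TimingStats summarize(const std::vector<double>& seconds, std::size_t warmup) {
    if (seconds.size() <= warmup) {
        throw std::invalid_argument("no timed iterations left after warm-up");
    }
    const auto first = seconds.begin() + static_cast<std::ptrdiff_t>(warmup);
    const auto mm = std::minmax_element(first, seconds.end());
    const double sum = std::accumulate(first, seconds.end(), 0.0);
    const double count = static_cast<double>(seconds.end() - first);
    return {*mm.first, *mm.second, sum / count};
}

// Run `iterations` repetitions in the same order as the pseudo-code:
// time stamp, barrier, work, barrier, time stamp.
// `barrier` and `work` are placeholders supplied by the caller
// (assumed to wrap MPI_Barrier and fftw_execute in the real benchmark).
inline std::vector<double> run_timed(std::size_t iterations,
                                     const std::function<void()>& barrier,
                                     const std::function<void()>& work) {
    using clock = std::chrono::steady_clock;
    std::vector<double> seconds;
    seconds.reserve(iterations);
    for (std::size_t i = 0; i < iterations; ++i) {
        const auto t1 = clock::now();
        barrier();
        work();
        barrier();
        const auto t2 = clock::now();
        seconds.push_back(std::chrono::duration<double>(t2 - t1).count());
    }
    return seconds;
}
```

With the numbers from this post, the call would look like `summarize(run_timed(60, barrier, work), 10)`. Note that in this order the first time stamp is taken before the first barrier, so each measurement also contains the time this rank waits for the slowest rank to arrive. Taking the time stamp after the first barrier would exclude that wait; that is a variant to compare against, not something the pseudo-code does.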

Cluster: The cluster is composed of compute nodes which have a dual socket Xeon Platinum 8168 setup. This means each compute node has two ccNUMA domains with 24 cores each.

Pinning:

- No thread migration is allowed,

- SMT/HT is disabled,

- all 48 cores of the compute node are to be used.

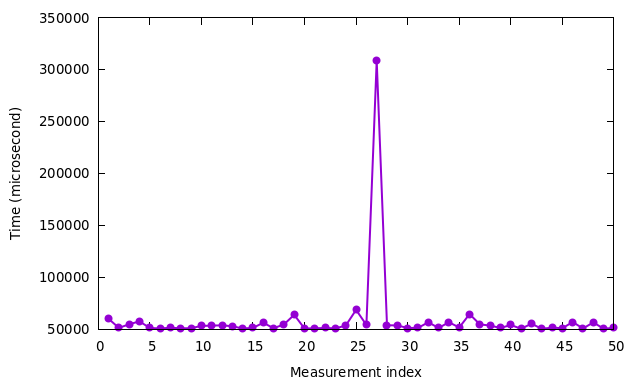
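The pinning described above can also be checked from inside the program. The following is a minimal sketch, assuming Linux with glibc; `allowed_cpus` and `is_pinned_to_single_cpu` are hypothetical helper names, and the sketch is not the test program mentioned elsewhere in this thread. It reads the affinity mask of the calling thread with `sched_getaffinity`.

```cpp
#include <sched.h>  // sched_getaffinity, cpu_set_t (Linux/glibc specific)

#include <vector>

// Return the logical CPUs the calling thread is allowed to run on.
// An empty result signals that the query itself failed.
inline std::vector<int> allowed_cpus() {
    cpu_set_t mask;
    CPU_ZERO(&mask);
    std::vector<int> cpus;
    // A pid of 0 refers to the calling thread.
    if (sched_getaffinity(0, sizeof(mask), &mask) != 0) {
        return cpus;
    }
    for (int cpu = 0; cpu < CPU_SETSIZE; ++cpu) {
        if (CPU_ISSET(cpu, &mask)) {
            cpus.push_back(cpu);
        }
    }
    return cpus;
}

// True if the calling thread can run on exactly one logical CPU.
inline bool is_pinned_to_single_cpu() {
    return allowed_cpus().size() == 1;
}
```

To be meaningful, the helper has to be called from within each worker thread of each rank. A mask with more than one entry means the operating system is still free to move that thread among the listed CPUs.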

Measurements using 27 nodes (1296 cores), N=360x360x360:

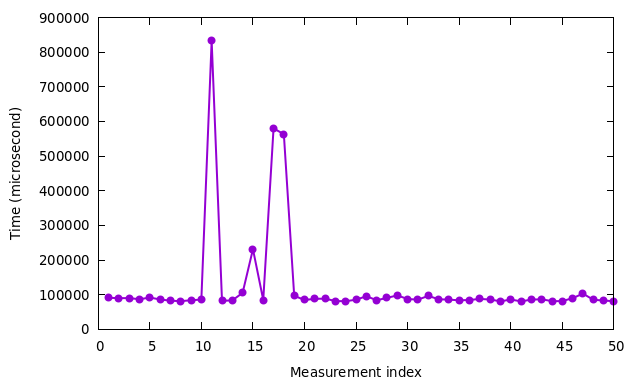

Measurements using 64 nodes (3072 cores), N=540x540x540:

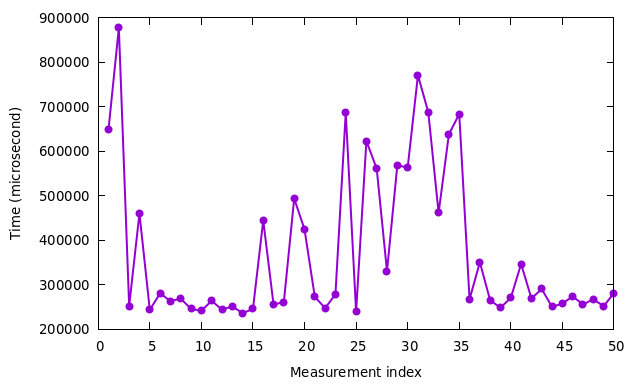

Measurements using 125 nodes (6000 cores), N=720x720x720:

As can be seen, the variation in measurements is increasing with problem size and node count.

Question:

- Why is this happening?

- How can I reduce the variation?

- Am I using the library correctly?

Final note: This variation does not appear when I measure the FFT functions of other 3rd party libraries.

Any help will be greatly appreciated, additional information can be provided upon request. Thank you in advance.

Hi,

Thank you for posting on Intel Communities.

Could you please provide the following details so that we can investigate this issue further?

- Could you please confirm for us if you are using Intel or GNU Fortran compiler?

- Could you please let us know the steps you followed to compile and build the code?

- Could you please provide us with a working reproducer?

- Could you please let us know what process you followed to prevent thread migration?

- Could you please let us know the 3rd party FFT library that was used to check?

Best Regards,

Shanmukh.SS

Hello Shanmukh,

1. I am using the Intel C++ compiler (version 2021.6.0 20220226)

2. I'm compiling the code using the flags "-O3;-ffast-math;-march=native;-qopt-zmm-usage=high", linking my executable with the libraries libfftw3x_cdft_lp64.a, libmkl_cdft_core.so, libmkl_blacs_intelmpi_ilp64.so, libmkl_intel_lp64.so, libmkl_intel_thread.so and libmkl_core.so.

3. I unfortunately cannot provide you a minimum reproducer since the code is too much to present here. Please refer to the pseudo-code which I posted above, and trust that my C++ code does exactly that. I can, however, provide the code which I use to allocate my data and create the plan:

    // allocate data
    local_data_ = fftw_alloc_complex(local_size_);
    if (local_data_ == nullptr)
    {
        std::cerr << "Fatal error: test data could not be allocated for!" << std::endl;
        MPI_Abort(MPI_COMM_WORLD, MPI_ERR_OTHER);
    }

    // create a plan
    const auto chosen_plan = FFTW_MEASURE;
    plan_ = fftw_mpi_plan_dft_3d(n_, n_, n_, local_data_, local_data_, MPI_COMM_WORLD, FFTW_FORWARD,
                                 plan_creation_count_ == 0 ? chosen_plan : FFTW_WISDOM_ONLY | chosen_plan);
    if (plan_ == NULL)
    {
        std::cerr << "Fatal error: fftw_plan could not be created!" << std::endl;
        MPI_Abort(MPI_COMM_WORLD, MPI_ERR_OTHER);
    }
    ++plan_creation_count_;

As you can see, I'm using FFTW_MEASURE on the first run (not timed, because it is part of the first 10 warm-up runs) and then using the wisdom accumulated during this run for all the other 59 runs.

4. I am very certain of my thread binding, because I have a test program which I routinely use to check it. Basically, I use OMP_PROC_BIND=true to prevent thread migration in combination with the --cpu-bind=threads --distribution=block:fcyclic:fcyclic binding utility provided by SLURM. This ensures that SMT/HT is not used and all threads stay on the cores assigned to them at the beginning of the task.

5. The other 3rd party FFT library which I use to check is S3DFT, a library which I have developed. I am comparing its performance with that of Intel MKL's FFTW wrapper. S3DFT is under-performing but is found to be much more consistent in its measurements (std. deviation ~5% of the mean as compared to MKL's 33-110%)
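The "std. deviation as a percentage of the mean" quoted in item 5 can be computed as in the sketch below. It is a minimal illustration, assuming the sample standard deviation (divisor n - 1) and strictly positive timings; the thread does not say which estimator was used, and `relative_stddev_percent` is a hypothetical name.

```cpp
#include <cmath>
#include <numeric>
#include <stdexcept>
#include <vector>

// Sample standard deviation divided by the mean, in percent.
// Assumes the samples are timings, i.e. the mean is positive.
inline double relative_stddev_percent(const std::vector<double>& samples) {
    if (samples.size() < 2) {
        throw std::invalid_argument("need at least two samples");
    }
    const double n = static_cast<double>(samples.size());
    const double mean = std::accumulate(samples.begin(), samples.end(), 0.0) / n;
    double squared_deviation = 0.0;
    for (double v : samples) {
        squared_deviation += (v - mean) * (v - mean);
    }
    const double stddev = std::sqrt(squared_deviation / (n - 1.0));
    return 100.0 * stddev / mean;
}
```

Normalising by the mean makes the spread comparable across the three problem sizes, whose absolute run times differ.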

I strongly suspect that the variation has to do with the creation of the plan because I observed a very similar behaviour while measuring the performance of FFTW 3.3.10. Thanks for your reply, any tips/help will be greatly appreciated. I am of course willing to provide more information as per requirement.

Hi,

Thanks a lot for sharing all the required details.

>>I unfortunately cannot provide you a minimum reproducer since the code is too much to present here.

If the code is too long to post inline, please attach the reproducer files to your reply. You can also compress them into a single archive before attaching them.

Best Regards,

Shanmukh.SS

In the attachments you will find the requested minimum reproducer. I've just run it to test that it reproduces the effect. In order to build the benchmark program, you will need e.g. the following CMake block:

    add_executable(benchmarking_min_rep benchmarking_min_rep.cpp)
    target_include_directories(benchmarking_min_rep PRIVATE ${MKL_INCLUDE_DIR} ${MPI_INCLUDE_PATH} ${TIXL_INCLUDE_DIR})
    target_compile_options(benchmarking_min_rep PRIVATE "-O3;-ffast-math;-march=native;-qopt-zmm-usage=high;-fPIE;-DTIXL_USE_MPI" ${OpenMP_CXX_FLAGS})
    target_link_directories(benchmarking_min_rep PRIVATE ${MKL_LIBRARY_DIR} ${DISTR_FFTW_LIBRARY_DIR} ${TIXL_LIBRARY_DIR})
    target_link_libraries(benchmarking_min_rep PRIVATE tixl_w_mpi libfftw3x_cdft_lp64.a libmkl_cdft_core.so libmkl_blacs_intelmpi_ilp64.so libmkl_intel_lp64.so libmkl_intel_thread.so libmkl_core.so ${MPI_CXX_LIBRARIES} ${OpenMP_CXX_FLAGS})

Note: in the above, "-march=native" translates to "-march=skylake" in my case.

Of course, prior to this, you will need to build the TIXL library (provided in the attachment) with the MPI option. Thanks for taking the time.

Hi Nitin,

Thank you for letting us know about this issue.

We will investigate and let you know the next steps.

Best regards,

Khang
