Hi,

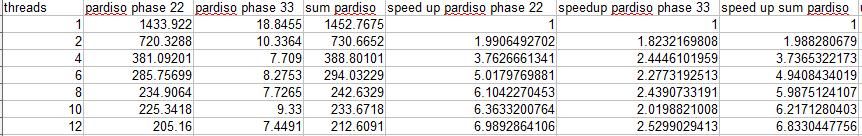

I have a question about the scalability of the PARDISO solver. I'm using Intel MKL PARDISO in my finite element code. I tested a simple linear elastic problem with around 800,000 unknowns. The timing results are:

| Threads | PARDISO phase 22 | PARDISO phase 33 | Sum PARDISO | Speedup phase 22 | Speedup phase 33 | Speedup sum |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | 1433.922 | 18.8455 | 1452.7675 | 1 | 1 | 1 |
| 2 | 720.3288 | 10.3364 | 730.6652 | 1.9906492702 | 1.8232169808 | 1.988280679 |
| 4 | 381.09201 | 7.709 | 388.80101 | 3.7626661341 | 2.4446101959 | 3.7365322173 |
| 6 | 285.75699 | 8.2753 | 294.03229 | 5.0179769881 | 2.2773192513 | 4.9408434019 |
| 8 | 234.9064 | 7.7265 | 242.6329 | 6.1042270453 | 2.4390733191 | 5.9875124107 |
| 10 | 225.3418 | 9.33 | 233.6718 | 6.3633200764 | 2.0198821008 | 6.2171280403 |
| 12 | 205.16 | 7.4491 | 212.6091 | 6.9892864106 | 2.5299029413 | 6.8330447756 |
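For anyone who wants to reproduce the speedup columns, here is a minimal Python sketch that recomputes them from the raw timings and adds parallel efficiency (speedup divided by thread count). The helper name `speedup_and_efficiency` is made up for illustration, and the time unit is whatever the table uses, since the post does not state it.

```python
# Timings copied from the table above (unit not stated in the post).
threads = [1, 2, 4, 6, 8, 10, 12]
phase22 = [1433.922, 720.3288, 381.09201, 285.75699, 234.9064, 225.3418, 205.16]
phase33 = [18.8455, 10.3364, 7.709, 8.2753, 7.7265, 9.33, 7.4491]


def speedup_and_efficiency(times, thread_counts):
    """Return (threads, speedup, efficiency) tuples relative to the first entry."""
    base = times[0]
    return [(p, base / t, base / t / p) for p, t in zip(thread_counts, times)]


# Sum of both phases, matching the "Sum PARDISO" column.
total = [a + b for a, b in zip(phase22, phase33)]
rows = speedup_and_efficiency(total, threads)

for p, s, e in rows:
    print(f"{p:2d} threads: speedup {s:5.2f}, efficiency {e:4.2f}")
```

Efficiency makes the trend easier to see than raw speedup: it starts near 1 at 2 threads and falls to a bit under 0.6 at 12 threads.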

I tested it on a node with two 6-core Xeon X5650 processors at 2.66 GHz and 24 GB of DDR3-1333 RAM.

I'm wondering whether a speedup of around 7 is OK, or whether I could get a better speedup by tuning the PARDISO input parameters.
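One rough way to judge a number like this is the Karp-Flatt metric, which turns a measured speedup on p threads into an experimentally determined serial fraction. The sketch below applies it to the "sum" speedups from the table. This is only a back-of-the-envelope diagnostic, not something taken from the PARDISO documentation, and the function name `karp_flatt` is just illustrative.

```python
def karp_flatt(speedup, p):
    """Experimentally determined serial fraction for a speedup measured on p > 1 threads."""
    return (1.0 / speedup - 1.0 / p) / (1.0 - 1.0 / p)


# Measured total speedups (threads -> speedup) taken from the table.
measured = {
    2: 1.988280679,
    4: 3.7365322173,
    6: 4.9408434019,
    8: 5.9875124107,
    10: 6.2171280403,
    12: 6.8330447756,
}

for p, s in measured.items():
    print(f"{p:2d} threads: serial fraction ~ {karp_flatt(s, p):.4f}")
```

If the estimated fraction stayed constant, the code would simply be limited by a fixed serial part. Here it grows with the thread count (well under 1% at 2 threads, close to 7% at 12), which usually points to parallel overhead or a shared resource such as memory bandwidth rather than to a purely serial section.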

Are there any articles or papers about the scalability of the PARDISO solver? Could you send me some links to them?

My input parameters are:

iparm(1) = 1

iparm(3) = 0

iparm(4) = 0

iparm(5) = 0

iparm(6) = 0

iparm(7) = 0

iparm(8) = 10

iparm(9) = 0

iparm(10) = 8

iparm(11) = 1

iparm(12) = 0

iparm(13) = 1

iparm(14) = 0

iparm(15) = 0

iparm(16) = 0

iparm(17) = 0

iparm(18) = -1

iparm(19) = 0

iparm(20) = 0

iparm(21) = 1

iparm(22) = 0

iparm(23) = 0

iparm(24) = 0

iparm(25) = 0

iparm(27) = 0

iparm(28) = 0

iparm(30) = 0

iparm(31) = 0

iparm(35) = 0

iparm(60) = 0

Thanks for any advice.

Best regards,

Pawel J.

Sorry, my table was unreadable. I've attached a screenshot from Calc.

Best regards,

Pawel J.

Pawel,

The speedup of around 7 seems to be fine. Since the matrix size is large, you may try the Cluster PARDISO from MKL. Please find details here.

--Vipin
