
Hi everyone,

I have been solving a large sparse symmetric indefinite system with the Pardiso symmetric solver and measured the following timings:

| Threads | Reorder (s) | Factorize (s) | Solution (s) |
|---|---|---|---|
| 1 | 0.410754 | 0.159544 | 0.047355 |
| 2 | 0.404389 | 0.073979 | 0.049030 |
| 4 | 0.417474 | 0.054813 | 0.038233 |
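One way to read the timings above is to compute, for each phase, the speedup T1/Tp and the parallel efficiency (T1/Tp)/p. The two helpers below are a minimal sketch of that arithmetic; the function names are illustrative and not part of Pardiso or MKL.

```cpp
#include <cassert>

// Speedup of one solver phase: T_1 / T_p, where T_1 is the 1-thread time.
double speedup(double t1, double tp) {
    assert(t1 > 0.0 && tp > 0.0);
    return t1 / tp;
}

// Parallel efficiency: speedup divided by thread count (1.0 = ideal linear scaling).
double efficiency(double t1, double tp, int threads) {
    assert(threads > 0);
    return speedup(t1, tp) / threads;
}
```

With the numbers above, the factorization speedup on 4 threads is 0.159544 / 0.054813 ≈ 2.91 (efficiency ≈ 0.73), while the reordering speedup is 0.410754 / 0.417474 ≈ 0.98, so the reordering phase did not get faster with more threads.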

--------------

My laptop has 8 CPUs.


Hi Alexander,

Thanks for the reply.

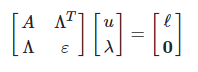

The matrix is a KKT system built from an FEM stiffness matrix and Lagrange multipliers. It has the following structure:

where A is the n × n stiffness matrix of the FEM structure, Λ is the m × n Lagrange multiplier matrix (usually m ≪ n), and ε is a very small perturbation.
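The structure figure did not survive in the text. Assuming the usual symmetric saddle-point layout implied by the description, K = [A Λᵀ; Λ −εI], with the perturbation entering the (2,2) block as −εI (the sign is an assumption), the sketch below assembles the upper triangle of K in zero-based CSR, which is the part Pardiso reads for symmetric matrix types. It starts from small dense blocks purely for illustration; `CsrUpper` and `assembleKkt` are hypothetical names, and a real FEM code would assemble directly from sparse data.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Upper triangle of a symmetric matrix in zero-based CSR.
struct CsrUpper {
    int n = 0;                 // matrix dimension
    std::vector<int> rowPtr;   // n + 1 row offsets into colInd/val
    std::vector<int> colInd;   // column indices, ascending within each row
    std::vector<double> val;   // matching nonzero values
};

// Hypothetical helper: assemble K = [A  L^T; L  -eps*I] from dense row-major
// blocks A (n x n, assumed symmetric) and L (m x n, the Lambda matrix).
// Only entries with col >= row are stored.
CsrUpper assembleKkt(const std::vector<double>& A, int n,
                     const std::vector<double>& L, int m, double eps) {
    assert(A.size() == static_cast<std::size_t>(n) * n);
    assert(L.size() == static_cast<std::size_t>(m) * n);
    CsrUpper K;
    K.n = n + m;
    K.rowPtr.push_back(0);
    for (int i = 0; i < K.n; ++i) {
        for (int j = i; j < K.n; ++j) {
            double v = 0.0;
            if (j < n) {
                v = A[i * n + j];              // A block (i, j < n)
            } else if (i < n) {
                v = L[(j - n) * n + i];        // L^T block: (L^T)(i, j-n) = L(j-n, i)
            } else if (i == j) {
                v = -eps;                      // -eps * I block (assumed sign)
            }
            // Store nonzeros, and conservatively keep every diagonal entry even if zero.
            if (v != 0.0 || i == j) {
                K.colInd.push_back(j);
                K.val.push_back(v);
            }
        }
        K.rowPtr.push_back(static_cast<int>(K.colInd.size()));
    }
    return K;
}
```

For example, A = [4 1; 1 3], Λ = [1 1] and ε = 1e-8 give a 3 × 3 matrix with rowPtr = {0, 3, 5, 6}.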

When I set iparm[36] to -90, the reordering times are:

| Threads | Reorder (s) |
|---|---|
| 1 | 0.205429 |
| 2 | 0.141978 |
| 4 | 0.127079 |

It does scale somewhat. Is this the expected scalability for Pardiso?

The Pardiso manual does not mention what iparm[36] is for. What does setting it to -90 mean?
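A frequent source of confusion is indexing: the C interface uses a 0-based `iparm` array of 64 entries, while the parameter tables in the MKL reference are often numbered from 1, so `iparm[36]` in C corresponds to `iparm(37)` there. As far as I recall from the MKL documentation, that entry selects the internal matrix storage format: 0 for CSR, a positive value for BSR with that block size, and a negative value for conversion to variable-size blocks (VBSR), with the magnitude acting as a percentage similarity threshold between adjacent rows. Treat that interpretation as an assumption and check the iparm table for your MKL version. The sketch only encodes it, with `int` standing in for `MKL_INT` (LP64 interface); `describeStorageFormat` and `requestVbsr` are hypothetical helpers, and in real code `pardisoinit` would normally fill the defaults before individual entries are overridden.

```cpp
#include <array>
#include <cassert>
#include <string>

// The C interface indexes iparm from 0; 1-based tables call iparm[k] "iparm(k+1)".
constexpr int fortranIndex(int cIndex) { return cIndex + 1; }

// Assumed meaning of iparm[36] (verify against your MKL version's iparm table).
std::string describeStorageFormat(int v) {
    if (v == 0) return "CSR";
    if (v > 0) return "BSR, block size " + std::to_string(v);
    return "VBSR, row-similarity threshold " + std::to_string(-v) + "%";
}

// Hypothetical helper: request VBSR conversion with a given similarity percentage.
// In real code, pardisoinit() would fill the defaults before this override.
void requestVbsr(std::array<int, 64>& iparm, int similarityPercent) {
    assert(similarityPercent > 0 && similarityPercent <= 100);
    iparm[36] = -similarityPercent;
}
```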

The output files are attached.

By the way, the residual is computed as ||Ax - b||_2 / ||b||_2:

| iparm[36] | Residual |
|---|---|
| 0 | 4.208551e-15 |
| -90 | 5.733099e-12 |
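Because Pardiso's symmetric matrix types take only the upper triangle, a residual check has to mirror each stored off-diagonal entry when multiplying; multiplying the stored triangle alone would give a wrong residual. The following is a minimal sketch of ||Ax - b||_2 / ||b||_2 for zero-based upper-triangular CSR; the function names are illustrative.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// y = K x, where K is symmetric and only its upper triangle is stored in
// zero-based CSR. Each stored off-diagonal entry (i, j), j > i, is applied
// twice: once as (i, j) and once as its mirror (j, i).
std::vector<double> symUpperMatVec(const std::vector<int>& rowPtr,
                                   const std::vector<int>& colInd,
                                   const std::vector<double>& val,
                                   const std::vector<double>& x) {
    assert(!rowPtr.empty());
    const std::size_t n = rowPtr.size() - 1;
    assert(x.size() == n);
    std::vector<double> y(n, 0.0);
    for (std::size_t i = 0; i < n; ++i) {
        for (int k = rowPtr[i]; k < rowPtr[i + 1]; ++k) {
            const std::size_t j = static_cast<std::size_t>(colInd[k]);
            y[i] += val[k] * x[j];
            if (j != i) y[j] += val[k] * x[i];
        }
    }
    return y;
}

double norm2(const std::vector<double>& v) {
    double s = 0.0;
    for (double e : v) s += e * e;
    return std::sqrt(s);
}

// Relative residual ||Kx - b||_2 / ||b||_2.
double relativeResidual(const std::vector<int>& rowPtr,
                        const std::vector<int>& colInd,
                        const std::vector<double>& val,
                        const std::vector<double>& x,
                        const std::vector<double>& b) {
    std::vector<double> r = symUpperMatVec(rowPtr, colInd, val, x);
    assert(r.size() == b.size());
    for (std::size_t i = 0; i < r.size(); ++i) r[i] -= b[i];
    return norm2(r) / norm2(b);
}
```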

Thanks!


Hi Li Wei,

This looks like a problem. Could you provide us your test matrix and command line? A test code would be even better.

FYI, as I mentioned in the topic https://software.intel.com/en-us/forums/intel-math-kernel-library/topic/777236, MKL 2018 Update 2 includes some updates to the Pardiso routine; you may want to try it. From the bug-fix list:

https://software.intel.com/en-us/articles/intel-math-kernel-library-intel-mkl-2018-bug-fixes-list

- MKLD-2776: Improved the performance of the Intel MKL Pardiso routine in the case of multiple RHS and when linking with the Intel® Threading Building Blocks (Intel® TBB) threading layer.

Best Regards,

Ying


Ying H. (Intel) wrote:

Could you provide us your test matrix and command line? A test code would be even better.

Thanks for your reply!

Here is the matrix in CSR format: bs is the right-hand-side vector, row-ptr and col-ind are the row-pointer and column-index arrays, and val holds the matrix values.
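Before passing CSR arrays like these to a symmetric Pardiso solve, it can help to check their layout. The sketch below assumes zero-based indexing (iparm[34] = 1 in MKL) and checks the conditions I understand the symmetric matrix types to require: only upper-triangle entries, strictly increasing column indices within each row, and an explicitly stored diagonal entry in every row. `checkSymUpperCsr` is a hypothetical helper, not an MKL routine; MKL's own matrix checker (iparm[26] = 1) is also worth enabling while debugging.

```cpp
#include <string>
#include <vector>

// Hypothetical checker for zero-based, upper-triangular CSR input to a
// symmetric solve. Returns "" if the layout looks valid, otherwise a
// description of the first problem found.
std::string checkSymUpperCsr(const std::vector<int>& rowPtr,
                             const std::vector<int>& colInd) {
    if (rowPtr.empty() || rowPtr.front() != 0) return "rowPtr must start with 0";
    const int n = static_cast<int>(rowPtr.size()) - 1;
    const int nnz = static_cast<int>(colInd.size());
    if (rowPtr.back() != nnz) return "rowPtr.back() must equal the number of entries";
    for (int i = 0; i < n; ++i) {
        const std::string row = std::to_string(i);
        // Row offsets must be non-decreasing and stay within colInd.
        if (rowPtr[i + 1] < rowPtr[i] || rowPtr[i + 1] > nnz) return "bad rowPtr at row " + row;
        bool hasDiagonal = false;
        for (int k = rowPtr[i]; k < rowPtr[i + 1]; ++k) {
            const int j = colInd[k];
            if (j < i || j >= n) return "entry outside upper triangle in row " + row;
            if (k > rowPtr[i] && colInd[k - 1] >= j) return "columns not increasing in row " + row;
            if (j == i) hasDiagonal = true;
        }
        if (!hasDiagonal) return "missing diagonal in row " + row;
    }
    return "";
}
```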

testPardisoSym.cpp is the code I used to test Pardiso, and makefile.txt (remove the .txt extension) is the makefile I used to compile it.

I will try Update 2 to see whether the scalability improves.

Best,


Hi Wei,

Thank you for the test code. I can reproduce the problem with the latest version and will look into the details.

Thanks

Ying


The problem has been fixed in version 2019 Update 3.
