On IVB, it appears MKL DGEMM decides to run on only eight cores when it is told to run on nine. Behaviour on SNB, HSW, and BDW is fine. I tried different IVB chips, with the same result. When instructed to run on ten cores, all ten cores are used (so it doesn't appear to be a thread pinning issue). I've seen irregularities in the RAPL-reported power consumption on IVB chips when going from eight to nine to ten cores. Is this related and expected behaviour (i.e. an optimization) because DGEMM knows something I don't? Is there a workaround to force it to use nine cores?

I made sure thread pinning is correct by using KMP_AFFINITY instead of pinning with LIKWID. I recorded hardware events for all forty logical cores to make sure the work isn't executed on another thread. It appears MKL is distributing the work unevenly: the ninth core gets only about 10% of the work each of the other cores gets, which causes the imbalance. Is this possible? Here's the log:

--------------------------------------------------------------------------------

CPU name: Intel(R) Xeon(R) CPU E5-2690 v2 @ 3.00GHz

CPU type: Intel Xeon IvyBridge EN/EP/EX processor

CPU clock: 3.00 GHz

--------------------------------------------------------------------------------

OMP: Info #204: KMP_AFFINITY: decoding x2APIC ids.

OMP: Info #202: KMP_AFFINITY: Affinity capable, using global cpuid leaf 11 info

OMP: Info #154: KMP_AFFINITY: Initial OS proc set respected: {0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39}

OMP: Info #156: KMP_AFFINITY: 40 available OS procs

OMP: Info #157: KMP_AFFINITY: Uniform topology

OMP: Info #179: KMP_AFFINITY: 2 packages x 10 cores/pkg x 2 threads/core (20 total cores)

OMP: Info #206: KMP_AFFINITY: OS proc to physical thread map:

OMP: Info #171: KMP_AFFINITY: OS proc 0 maps to package 0 core 0 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 20 maps to package 0 core 0 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 1 maps to package 0 core 1 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 21 maps to package 0 core 1 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 2 maps to package 0 core 2 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 22 maps to package 0 core 2 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 3 maps to package 0 core 3 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 23 maps to package 0 core 3 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 4 maps to package 0 core 4 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 24 maps to package 0 core 4 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 5 maps to package 0 core 8 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 25 maps to package 0 core 8 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 6 maps to package 0 core 9 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 26 maps to package 0 core 9 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 7 maps to package 0 core 10 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 27 maps to package 0 core 10 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 8 maps to package 0 core 11 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 28 maps to package 0 core 11 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 9 maps to package 0 core 12 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 29 maps to package 0 core 12 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 10 maps to package 1 core 0 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 30 maps to package 1 core 0 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 11 maps to package 1 core 1 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 31 maps to package 1 core 1 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 12 maps to package 1 core 2 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 32 maps to package 1 core 2 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 13 maps to package 1 core 3 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 33 maps to package 1 core 3 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 14 maps to package 1 core 4 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 34 maps to package 1 core 4 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 15 maps to package 1 core 8 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 35 maps to package 1 core 8 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 16 maps to package 1 core 9 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 36 maps to package 1 core 9 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 17 maps to package 1 core 10 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 37 maps to package 1 core 10 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 18 maps to package 1 core 11 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 38 maps to package 1 core 11 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 19 maps to package 1 core 12 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 39 maps to package 1 core 12 thread 1

OMP: Info #242: KMP_AFFINITY: pid 5796 thread 0 bound to OS proc set {0}

OMP: Info #242: KMP_AFFINITY: pid 5796 thread 1 bound to OS proc set {1}

OMP: Info #242: KMP_AFFINITY: pid 5796 thread 3 bound to OS proc set {3}

OMP: Info #242: KMP_AFFINITY: pid 5796 thread 2 bound to OS proc set {2}

OMP: Info #242: KMP_AFFINITY: pid 5796 thread 5 bound to OS proc set {5}

OMP: Info #242: KMP_AFFINITY: pid 5796 thread 4 bound to OS proc set {4}

OMP: Info #242: KMP_AFFINITY: pid 5796 thread 6 bound to OS proc set {6}

OMP: Info #242: KMP_AFFINITY: pid 5796 thread 7 bound to OS proc set {7}

OMP: Info #242: KMP_AFFINITY: pid 5796 thread 8 bound to OS proc set {8}

Running without Marker API. Activate Marker API with -m on commandline.

Iteration 1. Mean runtime: 3.353719 Total runtime: 3.353720

Iteration 2. Mean runtime: 2.868546 Total runtime: 5.737093

Iteration 3. Mean runtime: 2.706802 Total runtime: 8.120405

Iteration 4. Mean runtime: 2.625975 Total runtime: 10.503900

Iteration 5. Mean runtime: 2.577459 Total runtime: 12.887293

Iteration 6. Mean runtime: 2.545102 Total runtime: 15.270610

Iteration 7. Mean runtime: 2.522070 Total runtime: 17.654488

Iteration 8. Mean runtime: 2.504731 Total runtime: 20.037846

Iteration 9. Mean runtime: 2.491226 Total runtime: 22.421034

Iteration 10. Mean runtime: 2.480431 Total runtime: 24.804308

Iteration 11. Mean runtime: 2.471668 Total runtime: 27.188348

Iteration 12. Mean runtime: 2.464303 Total runtime: 29.571634

Iteration 13. Mean runtime: 2.458674 Total runtime: 31.962761

Iteration 14. Mean runtime: 2.453289 Total runtime: 34.346052

Iteration 15. Mean runtime: 2.448623 Total runtime: 36.729346

Iteration 16. Mean runtime: 2.444576 Total runtime: 39.113220

Iteration 17. Mean runtime: 2.440975 Total runtime: 41.496583

Iteration 18. Mean runtime: 2.437779 Total runtime: 43.880024

Iteration 19. Mean runtime: 2.434932 Total runtime: 46.263715

Iteration 20. Mean runtime: 2.432355 Total runtime: 48.647107

Iteration 21. Mean runtime: 2.430023 Total runtime: 51.030474

Iteration 22. Mean runtime: 2.427905 Total runtime: 53.413921

Iteration 23. Mean runtime: 2.425993 Total runtime: 55.797832

Iteration 24. Mean runtime: 2.424213 Total runtime: 58.181112

Iteration 25. Mean runtime: 2.422810 Total runtime: 60.570250

Iteration 26. Mean runtime: 2.421293 Total runtime: 62.953627

Iteration 27. Mean runtime: 2.419883 Total runtime: 65.336833

Iteration 28. Mean runtime: 2.418575 Total runtime: 67.720096

Iteration 29. Mean runtime: 2.417356 Total runtime: 70.103325

Iteration 30. Mean runtime: 2.416224 Total runtime: 72.486730

Iteration 31. Mean runtime: 2.415169 Total runtime: 74.870236

Iteration 32. Mean runtime: 2.414181 Total runtime: 77.253807

Iteration 33. Mean runtime: 2.413250 Total runtime: 79.637239

Iteration 34. Mean runtime: 2.412367 Total runtime: 82.020486

Iteration 35. Mean runtime: 2.411536 Total runtime: 84.403753

Iteration 36. Mean runtime: 2.410750 Total runtime: 86.786986

Iteration 37. Mean runtime: 2.410009 Total runtime: 89.170345

Iteration 38. Mean runtime: 2.409502 Total runtime: 91.561082

Iteration 39. Mean runtime: 2.408828 Total runtime: 93.944291

Iteration 40. Mean runtime: 2.408206 Total runtime: 96.328232

Iteration 41. Mean runtime: 2.407600 Total runtime: 98.711609

Iteration 42. Mean runtime: 2.407026 Total runtime: 101.095101

Iteration 43. Mean runtime: 2.406476 Total runtime: 103.478452

Iteration 44. Mean runtime: 2.405957 Total runtime: 105.862088

Iteration 45. Mean runtime: 2.405457 Total runtime: 108.245548

Iteration 46. Mean runtime: 2.404973 Total runtime: 110.628774

Iteration 47. Mean runtime: 2.404512 Total runtime: 113.012080

Iteration 48. Mean runtime: 2.404072 Total runtime: 115.395474

Iteration 49. Mean runtime: 2.403650 Total runtime: 117.778863

Iteration 50. Mean runtime: 2.403349 Total runtime: 120.167449

Iteration 51. Mean runtime: 2.402959 Total runtime: 122.550907

Iteration 52. Mean runtime: 2.402581 Total runtime: 124.934223

Iteration 53. Mean runtime: 2.402215 Total runtime: 127.317377

Iteration 54. Mean runtime: 2.401863 Total runtime: 129.700605

Iteration 55. Mean runtime: 2.401526 Total runtime: 132.083937

Iteration 56. Mean runtime: 2.401201 Total runtime: 134.467233

Iteration 57. Mean runtime: 2.400888 Total runtime: 136.850597

Iteration 58. Mean runtime: 2.400587 Total runtime: 139.234067

Iteration 59. Mean runtime: 2.400295 Total runtime: 141.617416

Iteration 60. Mean runtime: 2.400014 Total runtime: 144.000834

Iteration 61. Mean runtime: 2.399741 Total runtime: 146.384214

Iteration 62. Mean runtime: 2.399478 Total runtime: 148.767622

Iteration 63. Mean runtime: 2.399329 Total runtime: 151.157749

Iteration 64. Mean runtime: 2.399083 Total runtime: 153.541311

Iteration 65. Mean runtime: 2.398845 Total runtime: 155.924894

Iteration 66. Mean runtime: 2.398610 Total runtime: 158.308293

Iteration 67. Mean runtime: 2.398383 Total runtime: 160.691671

Iteration 68. Mean runtime: 2.398161 Total runtime: 163.074916

Iteration 69. Mean runtime: 2.397944 Total runtime: 165.458155

Iteration 70. Mean runtime: 2.397736 Total runtime: 167.841491

Iteration 71. Mean runtime: 2.397531 Total runtime: 170.224679

Iteration 72. Mean runtime: 2.397336 Total runtime: 172.608218

Iteration 73. Mean runtime: 2.397144 Total runtime: 174.991548

Iteration 74. Mean runtime: 2.396961 Total runtime: 177.375093

Iteration 75. Mean runtime: 2.396779 Total runtime: 179.758435

Iteration 76. Mean runtime: 2.396710 Total runtime: 182.149957

Iteration 77. Mean runtime: 2.396534 Total runtime: 184.533129

Iteration 78. Mean runtime: 2.396369 Total runtime: 186.916778

Iteration 79. Mean runtime: 2.396203 Total runtime: 189.300062

Iteration 80. Mean runtime: 2.396041 Total runtime: 191.683304

Iteration 81. Mean runtime: 2.395884 Total runtime: 194.066615

Iteration 82. Mean runtime: 2.395731 Total runtime: 196.449905

Iteration 83. Mean runtime: 2.395586 Total runtime: 198.833680

Iteration 84. Mean runtime: 2.395443 Total runtime: 201.217197

Iteration 85. Mean runtime: 2.395305 Total runtime: 203.600958

Iteration 86. Mean runtime: 2.395166 Total runtime: 205.984288

Iteration 87. Mean runtime: 2.395029 Total runtime: 208.367496

Iteration 88. Mean runtime: 2.394967 Total runtime: 210.757081

Iteration 89. Mean runtime: 2.394835 Total runtime: 213.140328

Iteration 90. Mean runtime: 2.394706 Total runtime: 215.523532

Iteration 91. Mean runtime: 2.394582 Total runtime: 217.906995

Iteration 92. Mean runtime: 2.394462 Total runtime: 220.290481

Iteration 93. Mean runtime: 2.394342 Total runtime: 222.673840

Iteration 94. Mean runtime: 2.394225 Total runtime: 225.057159

Iteration 95. Mean runtime: 2.394110 Total runtime: 227.440472

Iteration 96. Mean runtime: 2.393999 Total runtime: 229.823858

Iteration 97. Mean runtime: 2.393892 Total runtime: 232.207486

Iteration 98. Mean runtime: 2.393785 Total runtime: 234.590894

Iteration 99. Mean runtime: 2.393679 Total runtime: 236.974246

Iteration 100. Mean runtime: 2.393577 Total runtime: 239.357665

Iteration 101. Mean runtime: 2.393552 Total runtime: 241.748706

Iteration 102. Mean runtime: 2.393450 Total runtime: 244.131922

Iteration 103. Mean runtime: 2.393353 Total runtime: 246.515310

Iteration 104. Mean runtime: 2.393256 Total runtime: 248.898616

Iteration 105. Mean runtime: 2.393161 Total runtime: 251.281925

Iteration 106. Mean runtime: 2.393068 Total runtime: 253.665257

Iteration 107. Mean runtime: 2.392979 Total runtime: 256.048703

Iteration 108. Mean runtime: 2.392890 Total runtime: 258.432128

Iteration 109. Mean runtime: 2.392804 Total runtime: 260.815635

Iteration 110. Mean runtime: 2.392721 Total runtime: 263.199303

Iteration 111. Mean runtime: 2.392636 Total runtime: 265.582618

Iteration 112. Mean runtime: 2.392553 Total runtime: 267.965972

Iteration 113. Mean runtime: 2.392522 Total runtime: 270.355024

Iteration 114. Mean runtime: 2.392442 Total runtime: 272.738341

Iteration 115. Mean runtime: 2.392362 Total runtime: 275.121620

Iteration 116. Mean runtime: 2.392285 Total runtime: 277.505009

Iteration 117. Mean runtime: 2.392209 Total runtime: 279.888418

Iteration 118. Mean runtime: 2.392135 Total runtime: 282.271923

Iteration 119. Mean runtime: 2.392062 Total runtime: 284.655331

Iteration 120. Mean runtime: 2.391988 Total runtime: 287.038552

Iteration 121. Mean runtime: 2.391918 Total runtime: 289.422104

Iteration 122. Mean runtime: 2.391850 Total runtime: 291.805721

Iteration 123. Mean runtime: 2.391781 Total runtime: 294.189043

Iteration 124. Mean runtime: 2.391714 Total runtime: 296.572513

Iteration 125. Mean runtime: 2.391648 Total runtime: 298.955944

Iteration 126. Mean runtime: 2.391636 Total runtime: 301.346102

runtime: 300.369342

GFlop/s: 181.216897

--------------------------------------------------------------------------------

STRUCT,Info,3,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

CPU name:,Intel(R) Xeon(R) CPU E5-2690 v2 @ 3.00GHz,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

CPU type:,Intel Xeon IvyBridge EN/EP/EX processor,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

CPU clock:,2.99979031 GHz,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

TABLE,Group 1 Raw,JOHANNES,10,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Event,Counter,Core 0,Core 1,Core 2,Core 3,Core 4,Core 5,Core 6,Core 7,Core 8,Core 9,Core 10,Core 11,Core 12,Core 13,Core 14,Core 15,Core 16,Core 17,Core 18,Core 19,Core 20,Core 21,Core 22,Core 23,Core 24,Core 25,Core 26,Core 27,Core 28,Core 29,Core 30,Core 31,Core 32,Core 33,Core 34,Core 35,Core 36,Core 37,Core 38,Core 39

INSTR_RETIRED_ANY,FIXC0,5294039044058,5290669395330,5290693075130,5290634923741,5290662643401,5290672942690,5290658896953,5290668076024,217334939071,56919681,5575049,127260056,36040162,6564321,8186722,1682102,3036481,8312798,753607,14476,721,721,721,20138,721,721,721,721,872,4029840,722,784685,2251032,2544937,722,1852834,2424050,2278774,722,588864

CPU_CLK_UNHALTED_CORE,FIXC1,1800351200004,1799015461125,1798968537962,1798967650319,1799000543888,1798881089133,1798967160650,1798855371910,220234341842,68311605,6463765,129746324,43877825,9166346,7340559,3028670,4940247,8037292,1359617,131758,20325,9783,9767,207125,11043,9737,9745,9748,23687,33333011,12052,896475,1700666,2089476,10457,2533276,2973661,2115715,10448,456320

CPU_CLK_UNHALTED_REF,FIXC2,1800351149070,1799015309130,1798968391860,1798967604900,1799000449650,1798880970120,1798967147610,1798855275660,220234324650,68313030,6538650,130715880,53476770,12871980,7355250,3324030,4968750,8070840,1369350,131760,20310,9750,9750,207090,11010,9750,9720,9750,23670,33333390,12060,896130,1700430,2099910,10440,2537430,2973360,2115540,10470,456240

TEMP_CORE,TMP0,67,68,61,68,64,64,62,64,64,61,34,31,34,34,35,30,34,35,31,30,67,68,61,67,64,64,63,64,64,62,32,31,35,33,36,30,34,35,32,30

PWR_PKG_ENERGY,PWR0,54635.9197,0,0,0,0,0,0,0,0,0,13286.2774,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0

PWR_PP0_ENERGY,PWR1,45727.5466,0,0,0,0,0,0,0,0,0,5627.7243,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0

PWR_DRAM_ENERGY,PWR3,13564.0653,0,0,0,0,0,0,0,0,0,8351.3002,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0

UNCORE_CLOCK,UBOXFIX,1805230876592,0,0,0,0,0,0,0,0,0,1062025230698,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0

MEM_LOAD_UOPS_RETIRED_L3_ALL,PMC0,518880122,512196838,509897297,512951910,522803662,522560008,509359535,516815074,333088517,46045149,433308,1431465,777725,451728,222771,210743,227005,616986,321043,64886,48054,29309,30584,692853,52482,30389,28943,29823,5748688,2301163,50499,150219,141253,359077,86745,114735,101135,271438,78035,41172

MEM_LOAD_UOPS_RETIRED_L3_HIT,PMC1,465327581,461012978,457258609,460430711,475990991,484017261,461263012,468784576,69460189,10344594,331380,1172306,554311,290922,142556,136033,149709,407798,219363,36634,19795,11192,11951,404946,23527,11325,12643,11700,156757,362913,16749,31786,30409,194035,32441,58489,44972,133275,17160,17557

TABLE,Group 1 Raw STAT,JOHANNES,10,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Event,Counter,Sum,Min,Max,Avg,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

INSTR_RETIRED_ANY STAT,FIXC0,42546305065092,721,5294039044058,1.063658e+12,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

CPU_CLK_UNHALTED_CORE STAT,FIXC1,14613570203358,9737,1800351200004,3.653393e+11,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

CPU_CLK_UNHALTED_REF STAT,FIXC2,14613584215140,9720,1800351149070,3.653396e+11,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

TEMP_CORE STAT,TMP0,1943,30,68,48.5750,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

PWR_PKG_ENERGY STAT,PWR0,67922.1971,0,54635.9197,1698.0549,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

PWR_PP0_ENERGY STAT,PWR1,51355.2709,0,45727.5466,1283.8818,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

PWR_DRAM_ENERGY STAT,PWR3,21915.3655,0,13564.0653,547.8841,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

UNCORE_CLOCK STAT,UBOXFIX,2867256107290,0,1805230876592,7.168140e+10,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

MEM_LOAD_UOPS_RETIRED_L3_ALL STAT,PMC0,4519742368,28943,522803662,1.129936e+08,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

MEM_LOAD_UOPS_RETIRED_L3_HIT STAT,PMC1,3818935136,11192,484017261,9.547338e+07,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

TABLE,Group 1 Metric,JOHANNES,13,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Metric,Core 0,Core 1,Core 2,Core 3,Core 4,Core 5,Core 6,Core 7,Core 8,Core 9,Core 10,Core 11,Core 12,Core 13,Core 14,Core 15,Core 16,Core 17,Core 18,Core 19,Core 20,Core 21,Core 22,Core 23,Core 24,Core 25,Core 26,Core 27,Core 28,Core 29,Core 30,Core 31,Core 32,Core 33,Core 34,Core 35,Core 36,Core 37,Core 38,Core 39,

Runtime (RDTSC) ,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,601.6725,

Runtime unhalted ,600.1590,599.7137,599.6981,599.6978,599.7088,599.6689,599.6976,599.6604,73.4166,0.0228,0.0022,0.0433,0.0146,0.0031,0.0024,0.0010,0.0016,0.0027,0.0005,4.392240e-05,6.775474e-06,3.261228e-06,3.255894e-06,0.0001,3.681257e-06,3.245894e-06,3.248560e-06,3.249560e-06,7.896219e-06,0.0111,4.017614e-06,0.0003,0.0006,0.0007,3.485910e-06,0.0008,0.0010,0.0007,3.482910e-06,0.0002,

Core Clock [MHz],2999.7904,2999.7906,2999.7906,2999.7904,2999.7905,2999.7905,2999.7903,2999.7905,2999.7905,2999.7277,2965.4347,2977.5400,2461.3355,2136.1994,2993.7987,2733.2410,2982.5822,2987.3211,2978.4685,2999.7448,3002.0058,3009.9434,3005.0207,3000.2973,3008.7815,2995.7906,3007.5058,2999.1750,3001.9448,2999.7562,2997.8004,3000.9452,3000.2066,2984.8850,3004.6750,2994.8794,3000.0940,3000.0385,2993.4870,3000.3163,

CPI,0.3401,0.3400,0.3400,0.3400,0.3400,0.3400,0.3400,0.3400,1.0133,1.2001,1.1594,1.0195,1.2175,1.3964,0.8966,1.8005,1.6270,0.9669,1.8041,9.1018,28.1900,13.5687,13.5465,10.2853,15.3162,13.5049,13.5160,13.5201,27.1640,8.2715,16.6925,1.1425,0.7555,0.8210,14.4834,1.3672,1.2267,0.9284,14.4709,0.7749,

Temperature ,67,68,61,68,64,64,62,64,64,61,34,31,34,34,35,30,34,35,31,30,67,68,61,67,64,64,63,64,64,62,32,31,35,33,36,30,34,35,32,30,

Energy ,54635.9197,0,0,0,0,0,0,0,0,0,13286.2774,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,

Power ,90.8067,0,0,0,0,0,0,0,0,0,22.0822,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,

Energy PP0 ,45727.5466,0,0,0,0,0,0,0,0,0,5627.7243,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,

Power PP0 ,76.0007,0,0,0,0,0,0,0,0,0,9.3535,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,

Energy DRAM ,13564.0653,0,0,0,0,0,0,0,0,0,8351.3002,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,

Power DRAM ,22.5439,0,0,0,0,0,0,0,0,0,13.8801,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,

Uncore Clock [MHz],3000.3546,0,0,0,0,0,0,0,0,0,1765.1218,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,

L3 hit ratio,0.8968,0.9001,0.8968,0.8976,0.9105,0.9262,0.9056,0.9071,0.2085,0.2247,0.7648,0.8190,0.7127,0.6440,0.6399,0.6455,0.6595,0.6610,0.6833,0.5646,0.4119,0.3819,0.3908,0.5845,0.4483,0.3727,0.4368,0.3923,0.0273,0.1577,0.3317,0.2116,0.2153,0.5404,0.3740,0.5098,0.4447,0.4910,0.2199,0.4264,

TABLE,Group 1 Metric STAT,JOHANNES,13,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Metric,Sum,Min,Max,Avg,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Runtime (RDTSC) STAT,24066.9000,601.6725,601.6725,601.6725,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Runtime unhalted STAT,4871.5307,3.245894e-06,600.1590,121.7883,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Core Clock [MHz] STAT,118221.0564,2136.1994,3009.9434,2955.5264,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

CPI STAT,235.4694,0.3400,28.1900,5.8867,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Temperature STAT,1943,30,68,48.5750,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Energy STAT,67922.1971,0,54635.9197,1698.0549,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Power STAT,112.8889,0,90.8067,2.8222,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Energy PP0 STAT,51355.2709,0,45727.5466,1283.8818,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Power PP0 STAT,85.3542,0,76.0007,2.1339,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Energy DRAM STAT,21915.3655,0,13564.0653,547.8841,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Power DRAM STAT,36.4240,0,22.5439,0.9106,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Uncore Clock [MHz] STAT,4765.4764,0,3000.3546,119.1369,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

L3 hit ratio STAT,21.8372,0.0273,0.9262,0.5459,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

Thanks for your reply.

Yes, I made sure that there are exactly nine threads running.

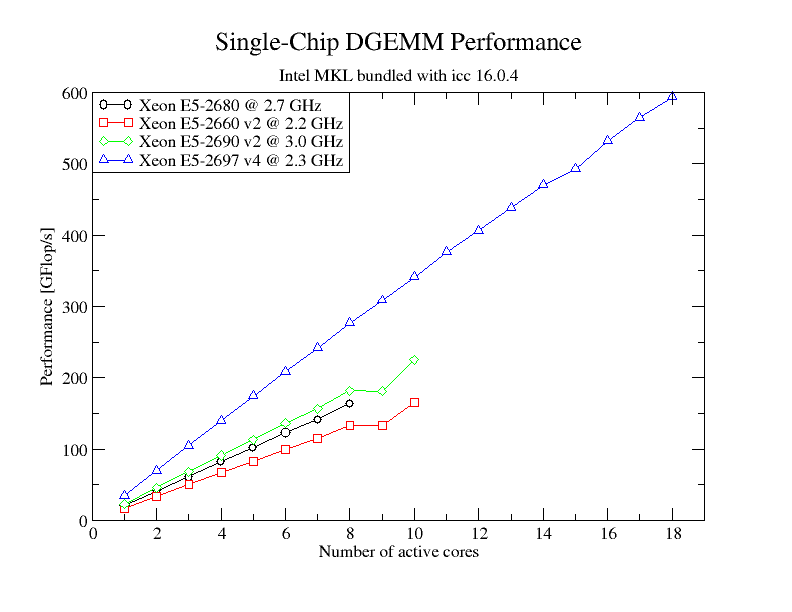

I'm aware that using more threads than there are physical cores will not give me any more performance. The green graph in my plot corresponds to a Xeon E5-2690 v2 with ten physical cores; the clock frequency is fixed at 3.0 GHz. With eight cores, I achieve the expected performance of 8 [cores] x 3.0 [GHz] x 4 [Flops per AVX instruction] x 2 [AVX instructions per cycle; 1 vaddpd + 1 vmulpd] = 192 GFlop/s. With ten cores, I get the expected 240 GFlop/s (10 x 3 x 4 x 2). With nine cores, I expect 216 GFlop/s, but in the graph you can see that nine cores only reach the performance achieved with eight cores. Looking at the performance counters, you can see that of the nine physical cores the nine threads are pinned to, eight do the same amount of work and one does about 10% of what each of the other eight cores does. That's why I think it is a work distribution problem.
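The peak estimate above is easy to check mechanically. The following is a minimal sketch and not part of the original benchmark: the function name `peak_gflops` and its parameter names are made up for illustration, and the measured value is the `GFlop/s` figure from the nine-thread log posted earlier in this thread.

```python
# Sketch of the peak-performance arithmetic from the post above.
# peak_gflops and its parameter names are hypothetical, chosen for illustration.

def peak_gflops(cores, clock_ghz=3.0, flops_per_avx_instr=4, avx_instr_per_cycle=2):
    """Theoretical DP peak: cores x GHz x Flops per AVX instruction x AVX instructions per cycle."""
    return cores * clock_ghz * flops_per_avx_instr * avx_instr_per_cycle

# GFlop/s reported at the end of the nine-thread log on the E5-2690 v2.
measured_nine_threads = 181.216897

for cores in (8, 9, 10):
    peak = peak_gflops(cores)
    ratio = measured_nine_threads / peak
    print(f"{cores:2d} cores: peak {peak:6.1f} GFlop/s, "
          f"nine-thread measurement is {ratio:.1%} of that")
```

Under these assumptions, the nine-thread measurement sits much closer to the eight-core peak than to the nine-core peak, which is the gap the post describes.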

May I ask how many active cores were used for the log posted in #2?

Is there a log for a run with 9 active cores?

Btw, please also provide the MKL-related environment variables that were set when running the test.

The log shows a run with 9 threads. The KMP_AFFINITY output shows that the 9 threads are pinned to the first nine physical cores of the first socket (i.e., cores 0-8).

For the run that produced the log, there were no MKL-related environment variables set. I did another run with MKL_DYNAMIC set to false, but it appears MKL isn't changing the number of threads.
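For reference, this is one way such a run environment could be assembled. It is only a sketch under assumptions: `MKL_DYNAMIC`, `MKL_NUM_THREADS`, `OMP_NUM_THREADS` and `KMP_AFFINITY` are real Intel MKL/OpenMP environment variables, but which of them were actually set for the runs in this thread (beyond `MKL_DYNAMIC` and `KMP_AFFINITY`) is not stated, and the helper name `build_run_env` and the binary name in the comment are hypothetical.

```python
# Sketch: build the environment for a nine-thread DGEMM run with dynamic
# thread adjustment disabled and explicit pinning.
# build_run_env is a hypothetical helper; the exact settings used in the
# thread are an assumption.
import os

def build_run_env(num_threads, cpu_list, base_env=None):
    env = dict(os.environ if base_env is None else base_env)
    env["MKL_DYNAMIC"] = "false"              # ask MKL not to adjust the thread count
    env["MKL_NUM_THREADS"] = str(num_threads)
    env["OMP_NUM_THREADS"] = str(num_threads)
    proclist = ",".join(str(cpu) for cpu in cpu_list)
    # Explicit pinning of OpenMP threads to the listed OS procs, with verbose output.
    env["KMP_AFFINITY"] = f"verbose,granularity=fine,proclist=[{proclist}],explicit"
    return env

env = build_run_env(9, range(9), base_env={})
for key in sorted(env):
    print(f"{key}={env[key]}")

# The benchmark would then be launched with this environment, e.g.
# subprocess.run(["./dgemm_bench"], env=env, check=True)  # hypothetical binary name
```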

In the performance counter logs, you can see that cores 0-7 (the first eight threads) each retire roughly 5.29 x 10^12 instructions (INSTR_RETIRED_ANY; 5294039044058 on core 0); core 8 (running the ninth thread) retires only 217334939071 instructions (about 4% of what each of the other cores retires).

The remaining cores hardly register any instructions -- which is expected and indicates thread pinning is working. (I measured the events on the other cores to make sure the thread on the core that does hardly any work doesn't wander off to another core.) All in all, this points to a work imbalance. What's funny, though, is that on HSW and BDW the same binary produces the expected performance, and if I examine the performance counters, I find that work is distributed evenly among all threads.
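The imbalance can be quantified directly from the raw counters in the log. The sketch below uses the INSTR_RETIRED_ANY and CPU_CLK_UNHALTED_CORE values for cores 0-8 copied from the nine-thread run; the variable names are made up for illustration.

```python
# Sketch: share of work done by core 8 relative to the average of cores 0-7,
# using counter values copied from the nine-thread log above.

instr_retired = [  # INSTR_RETIRED_ANY, cores 0..8
    5294039044058, 5290669395330, 5290693075130, 5290634923741,
    5290662643401, 5290672942690, 5290658896953, 5290668076024,
    217334939071,
]
clk_unhalted = [  # CPU_CLK_UNHALTED_CORE, cores 0..8
    1800351200004, 1799015461125, 1798968537962, 1798967650319,
    1799000543888, 1798881089133, 1798967160650, 1798855371910,
    220234341842,
]

def share_of_last(values):
    """Ratio of the last entry to the mean of all preceding entries."""
    busy = values[:-1]
    return values[-1] / (sum(busy) / len(busy))

instr_share = share_of_last(instr_retired)
cycle_share = share_of_last(clk_unhalted)
cpi_core8 = clk_unhalted[-1] / instr_retired[-1]

print(f"core 8 instruction share: {instr_share:.1%}")
print(f"core 8 unhalted-cycle share: {cycle_share:.1%}")
print(f"core 8 CPI: {cpi_core8:.4f}")
```

By instruction count core 8 does roughly 4% of what the other cores do, while by unhalted cycles it is roughly 12%, which brackets the "about 10%" and "5%" estimates given in the posts.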

I tried the MKL versions shipped with icc 16.0.3 and icc 17.0.1 (the most recent one I have).

I did as instructed, except that I changed the pinning mask to [0,1,2,3,4,5,6,7,8], because these correspond to the first nine physical cores. Here's the log:

--------------------------------------------------------------------------------

CPU name: Intel(R) Xeon(R) CPU E5-2660 v2 @ 2.20GHz

CPU type: Intel Xeon IvyBridge EN/EP/EX processor

CPU clock: 2.20 GHz

Warning: The Marker API requires the application to run on the selected CPUs.

Warning: likwid-perfctr pins the application only when using the -C command line option.

Warning: LIKWID assumes that the application does it before the first instrumented code region is started.

Warning: You can use the string in the environment variable LIKWID_THREADS to pin you application to

Warning: to the CPUs specified after the -c command line option.

--------------------------------------------------------------------------------

OMP: Info #204: KMP_AFFINITY: decoding x2APIC ids.

OMP: Info #202: KMP_AFFINITY: Affinity capable, using global cpuid leaf 11 info

OMP: Info #154: KMP_AFFINITY: Initial OS proc set respected: {0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39}

OMP: Info #156: KMP_AFFINITY: 40 available OS procs

OMP: Info #157: KMP_AFFINITY: Uniform topology

OMP: Info #179: KMP_AFFINITY: 2 packages x 10 cores/pkg x 2 threads/core (20 total cores)

OMP: Info #206: KMP_AFFINITY: OS proc to physical thread map:

OMP: Info #171: KMP_AFFINITY: OS proc 0 maps to package 0 core 0 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 20 maps to package 0 core 0 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 1 maps to package 0 core 1 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 21 maps to package 0 core 1 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 2 maps to package 0 core 2 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 22 maps to package 0 core 2 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 3 maps to package 0 core 3 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 23 maps to package 0 core 3 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 4 maps to package 0 core 4 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 24 maps to package 0 core 4 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 5 maps to package 0 core 8 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 25 maps to package 0 core 8 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 6 maps to package 0 core 9 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 26 maps to package 0 core 9 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 7 maps to package 0 core 10 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 27 maps to package 0 core 10 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 8 maps to package 0 core 11 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 28 maps to package 0 core 11 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 9 maps to package 0 core 12 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 29 maps to package 0 core 12 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 10 maps to package 1 core 0 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 30 maps to package 1 core 0 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 11 maps to package 1 core 1 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 31 maps to package 1 core 1 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 12 maps to package 1 core 2 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 32 maps to package 1 core 2 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 13 maps to package 1 core 3 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 33 maps to package 1 core 3 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 14 maps to package 1 core 4 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 34 maps to package 1 core 4 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 15 maps to package 1 core 8 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 35 maps to package 1 core 8 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 16 maps to package 1 core 9 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 36 maps to package 1 core 9 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 17 maps to package 1 core 10 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 37 maps to package 1 core 10 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 18 maps to package 1 core 11 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 38 maps to package 1 core 11 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 19 maps to package 1 core 12 thread 0

OMP: Info #171: KMP_AFFINITY: OS proc 39 maps to package 1 core 12 thread 1

OMP: Info #242: KMP_AFFINITY: pid 21311 thread 0 bound to OS proc set {0}

OMP: Info #242: KMP_AFFINITY: pid 21311 thread 1 bound to OS proc set {1}

OMP: Info #242: KMP_AFFINITY: pid 21311 thread 2 bound to OS proc set {2}

OMP: Info #242: KMP_AFFINITY: pid 21311 thread 3 bound to OS proc set {3}

OMP: Info #242: KMP_AFFINITY: pid 21311 thread 4 bound to OS proc set {4}

OMP: Info #242: KMP_AFFINITY: pid 21311 thread 5 bound to OS proc set {5}

OMP: Info #242: KMP_AFFINITY: pid 21311 thread 6 bound to OS proc set {6}

OMP: Info #242: KMP_AFFINITY: pid 21311 thread 8 bound to OS proc set {8}

OMP: Info #242: KMP_AFFINITY: pid 21311 thread 7 bound to OS proc set {7}

Iteration 1. Mean runtime: 3.546116 Total runtime: 3.546116

Iteration 2. Mean runtime: 3.396040 Total runtime: 6.792080

Iteration 3. Mean runtime: 3.345847 Total runtime: 10.037540

Iteration 4. Mean runtime: 3.320686 Total runtime: 13.282745

Iteration 5. Mean runtime: 3.310963 Total runtime: 16.554816

Iteration 6. Mean runtime: 3.299979 Total runtime: 19.799875

Iteration 7. Mean runtime: 3.292065 Total runtime: 23.044456

Iteration 8. Mean runtime: 3.286089 Total runtime: 26.288715

Iteration 9. Mean runtime: 3.282264 Total runtime: 29.540378

Iteration 10. Mean runtime: 3.278322 Total runtime: 32.783218

Iteration 11. Mean runtime: 3.275092 Total runtime: 36.026011

Iteration 12. Mean runtime: 3.272456 Total runtime: 39.269478

Iteration 13. Mean runtime: 3.270315 Total runtime: 42.514092

Iteration 14. Mean runtime: 3.268492 Total runtime: 45.758888

Iteration 15. Mean runtime: 3.266888 Total runtime: 49.003321

Iteration 16. Mean runtime: 3.265484 Total runtime: 52.247748

Iteration 17. Mean runtime: 3.264254 Total runtime: 55.492322

Iteration 18. Mean runtime: 3.263151 Total runtime: 58.736722

Iteration 19. Mean runtime: 3.262634 Total runtime: 61.990044

Iteration 20. Mean runtime: 3.261626 Total runtime: 65.232518

Iteration 21. Mean runtime: 3.260713 Total runtime: 68.474982

Iteration 22. Mean runtime: 3.259967 Total runtime: 71.719268

Iteration 23. Mean runtime: 3.259317 Total runtime: 74.964302

Iteration 24. Mean runtime: 3.258697 Total runtime: 78.208729

Iteration 25. Mean runtime: 3.258122 Total runtime: 81.453057

Iteration 26. Mean runtime: 3.257603 Total runtime: 84.697684

Iteration 27. Mean runtime: 3.257111 Total runtime: 87.941994

Iteration 28. Mean runtime: 3.256958 Total runtime: 91.194814

Iteration 29. Mean runtime: 3.256461 Total runtime: 94.437372

Iteration 30. Mean runtime: 3.256006 Total runtime: 97.680180

Iteration 31. Mean runtime: 3.255634 Total runtime: 100.924650

Iteration 32. Mean runtime: 3.255280 Total runtime: 104.168946

Iteration 33. Mean runtime: 3.254950 Total runtime: 107.413356

Iteration 34. Mean runtime: 3.254636 Total runtime: 110.657633

Iteration 35. Mean runtime: 3.254346 Total runtime: 113.902117

Iteration 36. Mean runtime: 3.254072 Total runtime: 117.146601

Iteration 37. Mean runtime: 3.254024 Total runtime: 120.398901

Iteration 38. Mean runtime: 3.253726 Total runtime: 123.641588

Iteration 39. Mean runtime: 3.253456 Total runtime: 126.884778

Iteration 40. Mean runtime: 3.253235 Total runtime: 130.129388

Iteration 41. Mean runtime: 3.253023 Total runtime: 133.373941

Iteration 42. Mean runtime: 3.252818 Total runtime: 136.618359

Iteration 43. Mean runtime: 3.252625 Total runtime: 139.862877

Iteration 44. Mean runtime: 3.252441 Total runtime: 143.107401

Iteration 45. Mean runtime: 3.252272 Total runtime: 146.352243

Iteration 46. Mean runtime: 3.252255 Total runtime: 149.603727

Iteration 47. Mean runtime: 3.252054 Total runtime: 152.846546

Iteration 48. Mean runtime: 3.251863 Total runtime: 156.089407

Iteration 49. Mean runtime: 3.251680 Total runtime: 159.332331

Iteration 50. Mean runtime: 3.251517 Total runtime: 162.575854

Iteration 51. Mean runtime: 3.251385 Total runtime: 165.820622

Iteration 52. Mean runtime: 3.251256 Total runtime: 169.065302

Iteration 53. Mean runtime: 3.251132 Total runtime: 172.310006

Iteration 54. Mean runtime: 3.251012 Total runtime: 175.554659

Iteration 55. Mean runtime: 3.250903 Total runtime: 178.799689

Iteration 56. Mean runtime: 3.250955 Total runtime: 182.053468

Iteration 57. Mean runtime: 3.250812 Total runtime: 185.296259

Iteration 58. Mean runtime: 3.250676 Total runtime: 188.539197

Iteration 59. Mean runtime: 3.250567 Total runtime: 191.783451

Iteration 60. Mean runtime: 3.250466 Total runtime: 195.027975

Iteration 61. Mean runtime: 3.250367 Total runtime: 198.272414

Iteration 62. Mean runtime: 3.250274 Total runtime: 201.516993

Iteration 63. Mean runtime: 3.250186 Total runtime: 204.761738

Iteration 64. Mean runtime: 3.250099 Total runtime: 208.006306

Iteration 65. Mean runtime: 3.250137 Total runtime: 211.258917

Iteration 66. Mean runtime: 3.250028 Total runtime: 214.501878

Iteration 67. Mean runtime: 3.249923 Total runtime: 217.744862

Iteration 68. Mean runtime: 3.249820 Total runtime: 220.987752

Iteration 69. Mean runtime: 3.249738 Total runtime: 224.231951

Iteration 70. Mean runtime: 3.249665 Total runtime: 227.476570

Iteration 71. Mean runtime: 3.249595 Total runtime: 230.721244

Iteration 72. Mean runtime: 3.249524 Total runtime: 233.965761

Iteration 73. Mean runtime: 3.249465 Total runtime: 237.210982

Iteration 74. Mean runtime: 3.249497 Total runtime: 240.462780

Iteration 75. Mean runtime: 3.249410 Total runtime: 243.705725

Iteration 76. Mean runtime: 3.249319 Total runtime: 246.948253

Iteration 77. Mean runtime: 3.249242 Total runtime: 250.191654

Iteration 78. Mean runtime: 3.249186 Total runtime: 253.436503

Iteration 79. Mean runtime: 3.249126 Total runtime: 256.680974

Iteration 80. Mean runtime: 3.249071 Total runtime: 259.925713

Iteration 81. Mean runtime: 3.249017 Total runtime: 263.170375

Iteration 82. Mean runtime: 3.248964 Total runtime: 266.415032

Iteration 83. Mean runtime: 3.248998 Total runtime: 269.666799

Iteration 84. Mean runtime: 3.248925 Total runtime: 272.909693

Iteration 85. Mean runtime: 3.248854 Total runtime: 276.152599

Iteration 86. Mean runtime: 3.248796 Total runtime: 279.396490

Iteration 87. Mean runtime: 3.248750 Total runtime: 282.641270

Iteration 88. Mean runtime: 3.248704 Total runtime: 285.885925

Iteration 89. Mean runtime: 3.248659 Total runtime: 289.130615

Iteration 90. Mean runtime: 3.248613 Total runtime: 292.375160

Iteration 91. Mean runtime: 3.248571 Total runtime: 295.619924

Iteration 92. Mean runtime: 3.248530 Total runtime: 298.864772

Iteration 93. Mean runtime: 3.248584 Total runtime: 302.118343

runtime: 301.773308

GFlop/s: 133.133047

--------------------------------------------------------------------------------

STRUCT,Info,3,,,,,,,,

CPU name:,Intel(R) Xeon(R) CPU E5-2660 v2 @ 2.20GHz,,,,,,,,,

CPU type:,Intel Xeon IvyBridge EN/EP/EX processor,,,,,,,,,

CPU clock:,2.200033036 GHz,,,,,,,,,

TABLE,Region DGEMM,Group 1 Raw,JOHANNES,10,,,,,,

Region Info,Core 0,Core 1,Core 2,Core 3,Core 4,Core 5,Core 6,Core 7,Core 8,

RDTSC Runtime ,301.782500,301.779000,301.779000,301.778000,301.776000,301.776600,301.773500,301.776100,301.775000,

call count,1,1,1,1,1,1,1,1,1,

Event,Counter,Core 0,Core 1,Core 2,Core 3,Core 4,Core 5,Core 6,Core 7,Core 8

INSTR_RETIRED_ANY,FIXC0,1951948000000,1952187000000,1952188000000,1952187000000,1952182000000,1952183000000,1952173000000,1952176000000,59336320000

CPU_CLK_UNHALTED_CORE,FIXC1,661221000000,661483700000,661467500000,661495500000,661488500000,661486200000,661484500000,661477700000,59953230000

CPU_CLK_UNHALTED_REF,FIXC2,661221300000,661483900000,661468000000,661495200000,661488500000,661486100000,661484700000,661477300000,59953110000

TEMP_CORE,TMP0,40,46,43,41,42,42,43,43,42

PWR_PKG_ENERGY,PWR0,16729.8300,0,0,0,0,0,0,0,0

PWR_PP0_ENERGY,PWR1,281475000000000,0,0,0,0,0,0,0,0

PWR_DRAM_ENERGY,PWR3,5221.4010,0,0,0,0,0,0,0,0

UNCORE_CLOCK,UBOXFIX,663929900000,0,0,0,0,0,0,0,0

MEM_LOAD_UOPS_RETIRED_L3_ALL,PMC0,218340600,215532500,206896500,207858500,207403200,208686900,209820800,207940200,1708764

MEM_LOAD_UOPS_RETIRED_L3_HIT,PMC1,203438700,201243100,191077600,191928100,189737300,197637400,194441800,195111200,591782

TABLE,Region DGEMM,Group 1 Raw STAT,JOHANNES,10,,,,,,

Event,Counter,Sum,Min,Max,Avg,,,,,

INSTR_RETIRED_ANY STAT,FIXC0,15676560320000,59336320000,1952188000000,1.741840e+12,,,,,

CPU_CLK_UNHALTED_CORE STAT,FIXC1,5351557830000,59953230000,661495500000,5.946175e+11,,,,,

CPU_CLK_UNHALTED_REF STAT,FIXC2,5351558110000,59953110000,661495200000,5.946176e+11,,,,,

TEMP_CORE STAT,TMP0,382,40,46,42.4444,,,,,

PWR_PKG_ENERGY STAT,PWR0,16729.8300,0,16729.8300,1858.8700,,,,,

PWR_PP0_ENERGY STAT,PWR1,281475000000000,0,281475000000000,31275000000000,,,,,

PWR_DRAM_ENERGY STAT,PWR3,5221.4010,0,5221.4010,580.1557,,,,,

UNCORE_CLOCK STAT,UBOXFIX,663929900000,0,663929900000,7.376999e+10,,,,,

MEM_LOAD_UOPS_RETIRED_L3_ALL STAT,PMC0,1684187964,1708764,218340600,187131996,,,,,

MEM_LOAD_UOPS_RETIRED_L3_HIT STAT,PMC1,1565206982,591782,203438700,1.739119e+08,,,,,

TABLE,Region DGEMM,Group 1 Metric,JOHANNES,13,,,,,,

Metric,Core 0,Core 1,Core 2,Core 3,Core 4,Core 5,Core 6,Core 7,Core 8,

Runtime (RDTSC) ,301.7825,301.7790,301.7790,301.7780,301.7760,301.7766,301.7735,301.7761,301.7750,

Runtime unhalted ,300.5505,300.6699,300.6625,300.6753,300.6721,300.6710,300.6703,300.6672,27.2511,

Core Clock [MHz],2200.0320,2200.0324,2200.0314,2200.0340,2200.0330,2200.0334,2200.0324,2200.0344,2200.0374,

CPI,0.3387,0.3388,0.3388,0.3388,0.3388,0.3388,0.3388,0.3388,1.0104,

Temperature ,40,46,43,41,42,42,43,43,42,

Energy ,16729.8300,0,0,0,0,0,0,0,0,

Power ,55.4367,0,0,0,0,0,0,0,0,

Energy PP0 ,281475000000000,0,0,0,0,0,0,0,0,

Power PP0 ,9.327082e+11,0,0,0,0,0,0,0,0,

Energy DRAM ,5221.4010,0,0,0,0,0,0,0,0,

Power DRAM ,17.3019,0,0,0,0,0,0,0,0,

Uncore Clock [MHz],2200.0278,0,0,0,0,0,0,0,0,

L3 hit ratio,0.9317,0.9337,0.9235,0.9234,0.9148,0.9471,0.9267,0.9383,0.3463,

TABLE,Region DGEMM,Group 1 Metric STAT,JOHANNES,13,,,,,,

Metric,Sum,Min,Max,Avg,,,,,,

Runtime (RDTSC) STAT,2715.9957,301.7735,301.7825,301.7773,,,,,,

Runtime unhalted STAT,2432.4899,27.2511,300.6753,270.2767,,,,,,

Core Clock [MHz] STAT,19800.3004,2200.0314,2200.0374,2200.0334,,,,,,

CPI STAT,3.7207,0.3387,1.0104,0.4134,,,,,,

Temperature STAT,382,40,46,42.4444,,,,,,

Energy STAT,16729.8300,0,16729.8300,1858.8700,,,,,,

Power STAT,55.4367,0,55.4367,6.1596,,,,,,

Energy PP0 STAT,281475000000000,0,281475000000000,31275000000000,,,,,,

Power PP0 STAT,932708200000,0,932708200000,1.036342e+11,,,,,,

Energy DRAM STAT,5221.4010,0,5221.4010,580.1557,,,,,,

Power DRAM STAT,17.3019,0,17.3019,1.9224,,,,,,

Uncore Clock [MHz] STAT,2200.0278,0,2200.0278,244.4475,,,,,,

L3 hit ratio STAT,7.7855,0.3463,0.9471,0.8651,,,,,,

Again, I find that the first eight physical cores each retire about 1952176000000 instructions, while the ninth retires only 59336320000, i.e. about 3% of the work of the other cores.
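To make the imbalance explicit, here is a minimal Python sketch that recomputes it from the INSTR_RETIRED_ANY row of the LIKWID table above. The helper names `work_share` and `effective_cores` are made up for illustration and are not part of LIKWID or MKL; using retired instructions as a proxy for "work" is also an assumption.

```python
# INSTR_RETIRED_ANY per core (Core 0..8), copied from the LIKWID table above.
instr_retired = [
    1951948000000, 1952187000000, 1952188000000,
    1952187000000, 1952182000000, 1952183000000,
    1952173000000, 1952176000000, 59336320000,
]


def work_share(counts, core):
    """Instructions retired on `core` relative to the busiest core."""
    return counts[core] / max(counts)


def effective_cores(counts):
    """Total retired instructions divided by the busiest core's count.

    A perfectly balanced 9-thread run would give 9.0 here.
    """
    return sum(counts) / max(counts)


print(f"core 8 share of busiest core: {work_share(instr_retired, 8):.1%}")
print(f"effective cores: {effective_cores(instr_retired):.2f}")
```

With the numbers from this run, the ninth core comes out at roughly 3% of the busiest core, and the nine threads together do the work of only slightly more than eight fully loaded cores.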

Is there any way to set a breakpoint in GDB to examine the amount of work each thread does?


Hi Johannes,

Thank you for the report. In MKL DGEMM, we usually use 8 threads instead of 9 for matrix partitioning, because DGEMM sometimes performs better with an even number of threads. In this case, however, it does seem to cause the flat performance. We can consider adding a different partitioning method for this case.

Could you please let us know whether running DGEMM with 9 threads (or other thread counts) is important for your real projects, and what your target machine is?

Thanks

Ying


You could probably try a 3x3 partition if the problem size is suitable for it.
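The thread does not say how the 3x3 partition would be applied. One possible reading is to split the result matrix C into a 3x3 grid of tiles yourself and give each of the nine threads one tile, computed with its own single-threaded DGEMM call. The Python sketch below only computes the tile boundaries; `split_range`, `grid_tiles` and the 6000x6000 size are hypothetical and chosen for illustration.

```python
def split_range(n, parts):
    """Split range(n) into `parts` contiguous chunks whose sizes differ by at most 1."""
    base, extra = divmod(n, parts)
    bounds = []
    start = 0
    for i in range(parts):
        size = base + (1 if i < extra else 0)
        bounds.append((start, start + size))
        start += size
    return bounds


def grid_tiles(m, n, rows=3, cols=3):
    """Return ((r0, r1), (c0, c1)) for every tile of an m x n result matrix C."""
    return [(r, c) for r in split_range(m, rows) for c in split_range(n, cols)]


# Hypothetical problem size; tile k would be handled by thread k.
tiles = grid_tiles(6000, 6000)
for k, ((r0, r1), (c0, c1)) in enumerate(tiles):
    # Tile of C = A[r0:r1, :] * B[:, c0:c1], one independent GEMM per tile.
    print(f"thread {k}: C[{r0}:{r1}, {c0}:{c1}]")
```

When both dimensions are divisible by 3, all nine tiles have the same area, so each thread gets the same number of floating-point operations. Whether this beats the library's internal 8-thread partitioning would have to be measured.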


Ok, thanks for the information.

Interestingly, I observe the expected 9-core performance on HSW and BDW (see plot in original post). I see the unexpected behaviour on both IVB chips I tested (Xeon E5-2660v2 and Xeon E5-2690v2).
