
Hello!

The problem is reproducible on Windows machines with a large number (4 or more) of NUMA nodes.

My test machine has 4 Intel(R) Xeon(R) CPU E7-8860 v3 processors with HyperThreading, so it has 4 Processor Groups (NUMA nodes) with 32 logical processors in each.

I ran 4 instances of a parallel TBB application (each limited to 20 threads).

I expected the applications to be distributed evenly across all NUMA nodes.

I even tried to do it explicitly with a .bat file:

```bat
start /node 0 .\Release\tbb_affinity_test.exe
start /node 1 .\Release\tbb_affinity_test.exe
start /node 2 .\Release\tbb_affinity_test.exe
start /node 3 .\Release\tbb_affinity_test.exe
```

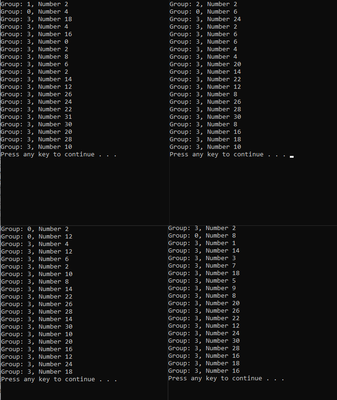

The result is not what I expected. The main thread of each instance is indeed located on the correct NUMA node, but almost all the other threads are on node 3 (the last one).

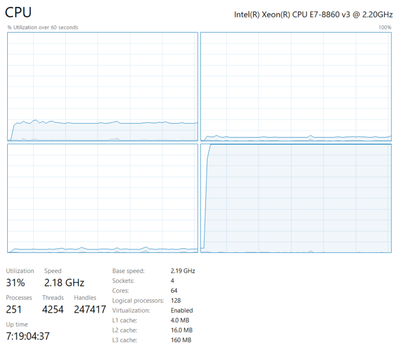

So CPU 3 is oversubscribed while all the other CPUs are almost idle. This is confirmed both by Task Manager and by the application's output (see below).

What can I do to distribute the workload evenly?

I would expect TBB threads to be assigned first to the same node as the main thread. How can I achieve this?

I ran my test on Windows 10 Enterprise, Version 20H2.

TBB version: 2021.5.2 (the same problem occurs with older versions of TBB as well).

The example was compiled with Microsoft Visual Studio Professional 2015, Version 14.0.25431.01 Update 3.

The machine has four Intel(R) Xeon(R) CPU E7-8860 v3 processors with HyperThreading, so it has 4 Processor Groups (NUMA nodes) with 32 logical processors in each.

The sample application is below:

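```cpp
#define NOMINMAX        // keep windows.h from defining min/max macros
#include <windows.h>    // PROCESSOR_NUMBER, GetThreadIdealProcessorEx
#include <cmath>        // std::pow
#include <cstdio>       // printf
#include <cstdlib>      // system
#include <utility>      // std::pair

#include <oneapi/tbb/parallel_for.h>
#include <oneapi/tbb/global_control.h>
#include <oneapi/tbb/enumerable_thread_specific.h>

int main()
{
    // Limit this process to 20 threads (the main thread plus up to 19 TBB workers).
    oneapi::tbb::global_control global_limit(oneapi::tbb::global_control::max_allowed_parallelism, 20);

    // Per-thread (processor group, processor number) pair.
    oneapi::tbb::enumerable_thread_specific< std::pair<int, int> > tls;
    oneapi::tbb::enumerable_thread_specific< double > tls_dummy;

    oneapi::tbb::parallel_for(0, 1000, [&](int i)
    {
        // dummy work; multiply in double so that i * k cannot overflow int
        double & b = tls_dummy.local();
        for (int k = 0; k < 10000000; ++k)
            b += std::pow(static_cast<double>(i) * k, 1. / 3.);

        // Record the group and number of this thread's ideal processor.
        PROCESSOR_NUMBER proc;
        if (GetThreadIdealProcessorEx(GetCurrentThread(), &proc))
        {
            tls.local() = std::pair<int, int>(proc.Group, proc.Number);
        }
    });

    for (const auto & it : tls)
        printf("Group: %d, Number %d\n", it.first, it.second);

    system("pause");
    return 0;
}
```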

The output of 4 simultaneous runs of the application is below:

Task Manager:

Thank you,

Maksim Popov


Hi,

Thanks for reaching out to us.

>> Microsoft Visual Studio Professional 2015, Version 14.0.25431.01 Update 3

Could you please try with a supported version of Visual Studio (VS 2022 17.0.0) and let us know if you still face the same issue?

Please refer to the link below for Intel oneAPI compatibility with Microsoft Visual Studio.

Thanks & Regards,

Noorjahan.


Hi,

I recompiled my example with Microsoft Visual Studio Professional 2019, Version 16.11.10, and got exactly the same issue.


Hi,

Thanks for the confirmation.

We are working on your issue. We will get back to you soon.

Thanks & Regards,

Noorjahan.


Thank you for posting the sample. I will run a test and see if I can find the root of the problem. I know that while TBB is good for composability, some of those optimizations have a negative effect on NUMA platforms (more info: https://www.youtube.com/watch?v=2t79ckf1vZY). They've added new features to oneTBB for better performance on NUMA systems, such as task_arena constraints: https://oneapi-src.github.io/oneTBB/main/tbb_userguide/Guiding_Task_Scheduler_Execution.html

Does each instance of the application have the same performance, or is one instance much faster than the others? Since the OS does the pinning before the application starts, TBB may need to be told explicitly to stay on its own node and to allocate memory on that same node. As to why everything ends up on node 3, I'm not sure. If the data is somehow all being allocated on node 3, then threads running on the other nodes would be much slower, with lower CPU utilization.


All the instances seemed to be doing their job at the same pace. However, I haven't measured their performance.

I expect TBB to assign threads to the same processor group as the main thread, which, according to my test, is apparently not the case. This issue is a serious problem for our customers, so I would like some kind of workaround.


I understand this is a critical problem for you. I'm working with TBB experts to understand whether this is a Windows issue or specific to TBB. It looks like there is an /affinity option for the start command, which might be necessary to make TBB keep its threads on the CPUs within the specified NUMA node. Otherwise there are APIs to set affinity within the code, which it looks like you are trying to avoid. I'll let you know when I have more details from our TBB team.


Hi Jennifer,

I appreciate your efforts.

There are several important aspects:

First: we have different scenarios for running our application.

The main scenario is when we start only one instance of the application and it uses all available resources on the machine. This works well.

A less common (but also important) scenario is when we run several instances of the app, each of which uses only part of the available cores.

For example, on a 4-CPU machine we can run 2 instances, each working on 2 CPUs, or 4 instances, each using 1 CPU. All work should be evenly distributed across the machine. This scenario doesn't work now due to this affinity issue.

We explicitly specify the number of cores for the app at start, so the number of cores to use is an input parameter of the app.

Second: we don't start the app with the start command; we use the CreateProcess Win32 API function.

Any type of solution is fine: either options for CreateProcess or an example of how to use the Win32 API to set affinity within the code.

I'm not an affinity expert, so I don't know all the options for setting affinity within the code.

I would expect the following behavior:

If the app uses no more cores than are available on a single CPU, then all threads should be assigned to the same CPU as the main thread, but any thread should be able to run on any core of that CPU rather than on one particular core.

If the app uses more cores than are available on a single CPU, then threads should be assigned to CPUs in a round-robin manner, or to adjacent CPUs (I don't know which method would be better).
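The placement rule described above can be written as a small pure function, which keeps the policy separate from the Windows calls that enforce it. This is a hypothetical sketch: `plan_nodes` and `Placement` are illustrative names, nodes are assumed to be numbered 0..nodes-1 with the same number of logical processors each (`per_node` > 0), and thread 0 is assumed to be the main thread.

```cpp
#include <vector>

// Hypothetical placement policies for threads that do not fit on one node.
enum class Placement { Adjacent, RoundRobin };

// Returns, for each of `threads` threads, the node it should be confined to.
// Placement starts at `start_node` (the main thread's node).
std::vector<int> plan_nodes(int threads, int start_node, int nodes,
                            int per_node, Placement policy)
{
    std::vector<int> result(threads);
    // Nodes needed so every thread gets its own logical processor (capped at `nodes`).
    int needed = (threads + per_node - 1) / per_node;
    if (needed > nodes)
        needed = nodes;
    for (int t = 0; t < threads; ++t) {
        int offset = policy == Placement::Adjacent
                         ? (t / per_node) % needed  // fill one node, then the next
                         : t % needed;              // deal threads out across nodes
        result[t] = (start_node + offset) % nodes;
    }
    return result;
}
```

With the machine from this thread (4 nodes of 32 logical processors), `plan_nodes(20, 2, 4, 32, Placement::Adjacent)` keeps all 20 threads on node 2, while 40 threads starting on node 3 put 32 threads on node 3 and 8 on node 0. Round-robin instead alternates threads between the nodes in use, which likely balances load better but places more threads away from the main thread's memory.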

I would appreciate an example of how to work around this problem for TBB threads in the most correct (and/or elegant) way.

Thank you,

Maksim


The oneTBB experts looked into the issue and believe there is a bug in TBB affecting Windows NUMA environments. They are currently working on a fix.


Hi Jennifer,

Are there any updates on the issue?

Is it possible to open a Service Request for the issue?

Thank you,

Maksim Popov


Hello Maxim,

Copying this here from the OSC ticket chat for completeness, so that our development team can also see our exchanges:

The fix has been merged into master (https://github.com/oneapi-src/oneTBB); for now it is available only in that branch on GitHub. Discussions are ongoing about which bug fixes to include in the next oneAPI release, but no decision has been made yet. You can try that branch and let us know if it works for you.

Regards,

Mark.


oneTBB 2021.7 including the fix has been delivered to GitHub https://github.com/oneapi-src/oneTBB/releases/tag/v2021.7.0
