Hello.

I wrote a C++ program that does H.264 hardware video decoding using DXVA2.

Source code here:

This program works very well with NVIDIA cards and with Intel HD Graphics 4000 (Windows 7). I believe it correctly follows the H.264 specification for the DXVA2_ModeH264_E device GUID.

Unfortunately, with Intel HD Graphics 500 and 510, decoding is not correct on either Windows 7 or Windows 10. The drivers are up to date.

If you run my program, you will see that no error is reported, but the decoded video frames are wrong on Intel HD Graphics 500/510. They are fine on Intel HD Graphics 4000.

Can you help me understand why Intel HD Graphics 500/510 does not decode correctly when using DXVA2?

- Tags:

- Development Tools

- Graphics

- Intel® Media SDK

- Intel® Media Server Studio

- Media Processing

- Optimization


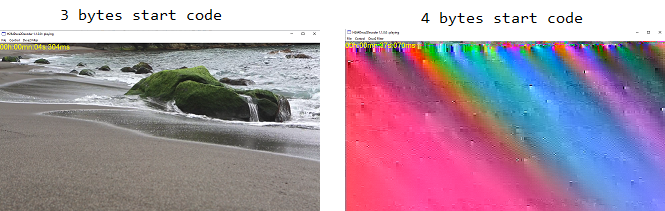

I found the problem.

NALU buffers that begin with the 4-byte start code 0x00, 0x00, 0x00, 0x01 are not handled.

The 3-byte start code 0x00, 0x00, 0x01 is OK.
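To make the distinction concrete, here is a minimal sketch of how a program might tell the two start code forms apart at a given buffer position. The function name `startCodeLength` is hypothetical and is not taken from the poster's program.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical helper: returns the length of the start code found at
// data[pos]: 4 for 00 00 00 01, 3 for 00 00 01, 0 if there is none.
std::size_t startCodeLength(const std::uint8_t* data, std::size_t size, std::size_t pos)
{
    assert(data != nullptr);
    if (pos > size)
        return 0;
    const std::size_t avail = size - pos;

    // Check the longer form first, otherwise 00 00 00 01 would never match.
    if (avail >= 4 && data[pos] == 0 && data[pos + 1] == 0 &&
        data[pos + 2] == 0 && data[pos + 3] == 1)
        return 4;
    if (avail >= 3 && data[pos] == 0 && data[pos + 1] == 0 && data[pos + 2] == 1)
        return 3;
    return 0;
}
```

Note that a 4-byte start code is a 3-byte start code preceded by one extra zero byte, so the same function reports 3 when called one byte further into the buffer.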

Hi David,

I am quite sure the hardware decoder should handle both 3-byte and 4-byte start codes.

But since you use DXVA2, I am not sure where the problem occurs. It would help if you could run the same video stream through sample_decode.exe from our sample code.

You can go to the Media SDK landing page and install Media SDK to try the sample code.

https://software.intel.com/en-us/media-sdk

Mark

Hello.

In fact, both Intel HD Graphics 4000 and Intel HD Graphics 500 have this problem when using a 4-byte start code. With a 3-byte start code it is OK, with some caveats. I tried with sample_decode.exe as well.

I use this sample movie: Movie sample (it is available in different resolutions)

My program:

-> 1280*720 with 3-byte start code = OK

-> 1920*1080 with 3-byte start code = artifacts at the top of the picture (first few lines)

-> 4-byte start code = bad at all resolutions

Intel Media SDK:

-> 1280*720 with 3/4-byte start code = OK

-> 1920*1080 with 3/4-byte start code = the video is green at the beginning (about 50 frames), then it is OK.

Check the 1920*1080 movie file and let me know if you get green frames with Intel Media SDK, like I do.

I hope we find a solution.

Hello.

I proposed adding a movie file parser on Intel-Media-SDK issues

-> no answer...

I proposed a pull request on Intel-Media-SDK pull

-> no answer: closed (good news)

Here

-> no answer: see below

So, what should I think about it?

Intel, do you want to make your product great, or not?

If not, tell me, and I will not waste my time.

I receive advertising like:

Discover with Intel: Transformation solutions for any industry. "Access to business information can enable data-driven decisions and create opportunity. We are here to help you manage today’s critical workloads-and set you up for the future. Your digital transformation starts now."

So.

Hi David,

Sorry for the late response. I was busy with another project for a while, and your post had probably been pushed to the bottom by the time I came back.

I am going to try the clip you linked with our sample_decode.exe. Did you change the stream when you tried it? If you did, you can just upload it here.

The other thing I was wondering about is the hardware. If the issue happens only on particular hardware, it might take time to fix, since that hardware is old.

Could you clarify these two points?

I will not wait for your answer; I will use the default video from your link and try it on a Skylake machine with Media SDK 2018 R2 on Windows 10.

Mark

Hello.

Thank you for your reply. (How can I delete my previous comment?)

- About the stream: the only thing I changed is that I replaced the four-byte NALU size with a start code. That's all.

- The Intel HD Graphics 4000 and Intel HD Graphics 500 behave the same. For the Intel HD Graphics 500, everything is up to date: I bought the laptop this year, and I chose an Intel GPU to test my program.

I can update my program to generate the H.264 video stream so you can check it, if you are not sure my video stream is correct. But as I said, it plays perfectly on an NVIDIA GPU with either start code.

About the video sample: the 1280*720 stream with 3-byte start codes displays well with both my program and the latest Intel Media SDK.
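The stream change described in this post, replacing each four-byte NALU size with a start code, can be sketched roughly as follows. This is an illustration under stated assumptions, not the poster's actual code: the function name `lengthPrefixedToAnnexB` is hypothetical, and the input is assumed to be a sequence of [4-byte big-endian size][NAL unit] records.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: rewrites [4-byte big-endian size][NAL unit] records
// as a start-code-delimited byte stream. When useZeroByte is true, each NAL
// unit gets 00 00 00 01, otherwise 00 00 01.
// Returns an empty vector if the input is malformed.
std::vector<std::uint8_t> lengthPrefixedToAnnexB(const std::uint8_t* data,
                                                 std::size_t size,
                                                 bool useZeroByte)
{
    assert(data != nullptr || size == 0);
    static const std::uint8_t kPrefix[3] = {0x00, 0x00, 0x01};

    std::vector<std::uint8_t> out;
    std::size_t pos = 0;
    while (pos < size) {
        if (size - pos < 4)
            return {};  // truncated size field
        const std::size_t nalSize =
            (static_cast<std::size_t>(data[pos]) << 24) |
            (static_cast<std::size_t>(data[pos + 1]) << 16) |
            (static_cast<std::size_t>(data[pos + 2]) << 8) |
            static_cast<std::size_t>(data[pos + 3]);
        pos += 4;
        if (nalSize == 0 || nalSize > size - pos)
            return {};  // size field points past the end of the buffer

        if (useZeroByte)
            out.push_back(0x00);
        out.insert(out.end(), kPrefix, kPrefix + 3);
        out.insert(out.end(), data + pos, data + pos + nalSize);
        pos += nalSize;
    }
    return out;
}
```

Calling it once with `useZeroByte` set to `false` and once with `true` would produce the two kinds of test file discussed in this thread.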

No worries about your comment. It shows the true state of our support, and I take it as valuable feedback.

I may not have explained my goal clearly: I want to run the stream file you used through our sample code. Could you post it here?

As I explained, I want to check the bitstream in our sample first. There might be an issue related to the DXVA framework, and I want to isolate any platform issues related to DXVA, the driver, or the OS.

Sorry, I still have not run your original stream because our server blocks the website for security reasons, but I will find a way to download it and try it later today.

Mark

Hello.

Here are the files: one with 3-byte start codes and the other with 4-byte start codes.

For now, I have only checked them with Media Player Classic-HC, and they play well. I will retry on my Intel laptop tomorrow.

I will post more information about the laptop configuration, and some screenshots of the problem, if needed.

Here is the project I use to generate the raw H.264 video stream: CheckMp4Files

Thanks for your interest.

Great!

Let me try it today and see if I can reproduce it. My processor has HD Graphics 530, and I hope I can reproduce the issue with our sample code.

Mark

Sorry for the late response.

I have done my test with sample_decode.exe but did not find any issue. Could you also check it on your platform with the same command line? My concern is that the issue might be related to a particular hardware and driver combination.

If you have already tested with sample_decode.exe, please let me know your driver version and GPU version, and I will submit a bug.

Here is my system configuration:

Processor: Core i5-6440HQ @ 2.60GHz

GPU: HD Graphics 530

OS: Windows 10 Enterprise Ver 1709

Driver: 25.20.100.6444

Here are the logs:

    ~\Intel® Media SDK 2018 R2 - Media Samples 8.4.27.378\_bin\x64>sample_decode.exe h264 -i Beach10890_1280-720-3bytes.h264
    Decoding Sample Version 8.4.27.378
    Input video AVC
    Output format NV12
    Input:
      Resolution 1280x720
      Crop X,Y,W,H 0,0,1280,720
    Output:
      Resolution 1280x720
    Frame rate 29.97
    Memory type system
    MediaSDK impl hw
    MediaSDK version 1.27
    Decoding started
    Frame number: 1048, fps: 513.310, fread_fps: 0.000, fwrite_fps: 0.000
    Decoding finished

    ~\Intel® Media SDK 2018 R2 - Media Samples 8.4.27.378\_bin\x64>sample_decode.exe h264 -i Beach10890_1280-720-4bytes.h264
    Decoding Sample Version 8.4.27.378
    Input video AVC
    Output format NV12
    Input:
      Resolution 1280x720
      Crop X,Y,W,H 0,0,1280,720
    Output:
      Resolution 1280x720
    Frame rate 29.97
    Memory type system
    MediaSDK impl hw
    MediaSDK version 1.27
    Decoding started
    Frame number: 1048, fps: 683.747, fread_fps: 0.000, fwrite_fps: 0.000
    Decoding finished

Mark

Ok.

So you agree that both video streams are correct.

Why, with DXVA2 on Intel, is the 3-byte start code OK while the 4-byte start code is not?

Configuration:

Processor: Intel(R) Celeron(R) CPU N3350 @ 1.10GHz (2CPUs)

GPU: HD Graphics 510

OS: Windows 10 Family 64bits Ver 1809

Driver: 26.20.100.6709

Are you using sample_decode.exe?

On Windows, if you are using MFT, the software path might be different. Rather than going through the Media SDK layer, it might go directly to the user mode driver (the media runtime, which is distributed in the driver package). The only thing that could explain this behavior is that the parsing is done in the driver layer.

I also double-checked sample_decode.exe with rendering and could not reproduce what your screen capture shows.

I happen to have a Liva Z with an N3350; let me try it on Windows 10 1809 with sample_decode.exe.

Mark

I tried on the Liva Z, and still found no issue.

It turns out the processor in this device is an N4200;

Windows Pro 1809.

The driver was 25.20.100.6373 by default and changed to 26.20.100.6709 after updating with DSA.

So I actually tested two drivers; I believe 6709 is a DCH driver.

Let me check where the bitstream parser should be. It seems it is not in hardware, which is why DXVA could play a role.

Mark

Thank you.

Yes, sample_decode.exe has no problem playing those streams (3-byte or 4-byte start codes).

I don't use MFT, I use DXVA2. Did you not see the source code that I provided? H264Dxva2Decoder

I use the DXVA2_ModeH264_E device GUID and DXVA_Slice_H264_Short (ConfigBitstreamRaw == 2).

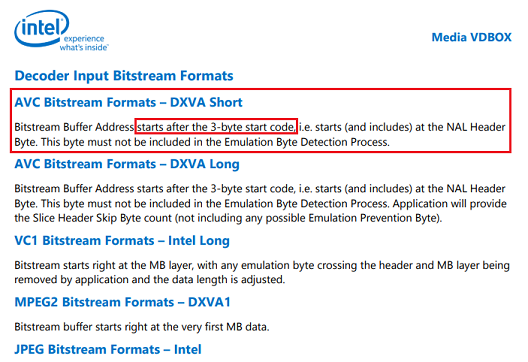

I found this documentation: intel-graphics-developers-guides

Under "Open Source Graphics Programmers Reference Manual", I downloaded "Volume 8: Media VDBOX" (intel_os_gfx_prm_vol8_-_media_vdbox_0.pdf):

This document only talks about the 3-byte start code, not the 4-byte start code... So, according to this document, it seems that Intel's DXVA2 implementation does not handle the 4-byte start code.

So now I think I just have to change BSNALunitDataLocation in the DXVA_Slice_H264_Short structure when the GPU is Intel.

I am just writing this program for fun, but it would be nice if, on Windows, we could have direct access to the Intel GPU decoder.

Ok. I think I understand now.

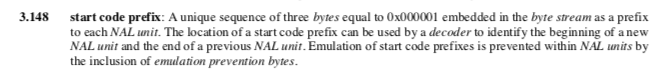

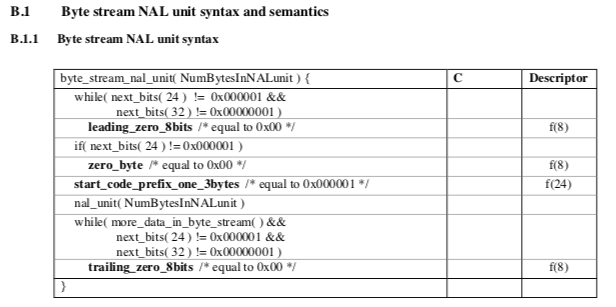

From "DirectX Video Acceleration Specification for H.264/AVC Decoding" :

BSNALunitDataLocation

If wBadSliceChopping is 0 or 1, this member locates the NAL unit with nal_unit_type equal to 1, 2, or 5 for the current slice. The value is the byte offset, from the start of the bitstream data buffer, of the first byte of the start code prefix in the byte stream NAL unit that contains the NAL unit with nal_unit_type equal to 1, 2, or 5. (The start code prefix is the start_code_prefix_one_3bytes syntax element. The byte stream NAL unit syntax is defined in Annex B of the H.264/AVC specification. The current slice is the slice associated with this slice control data structure.)

I'm sorry, I misread the specifications.

It seems that Nvidia is more permissive with this.

Thank you for your time.
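One way to read the quoted text is that BSNALunitDataLocation should point at the 00 00 01 prefix itself, skipping any extra leading zero byte. The sketch below illustrates that reading. `SliceShort` is a stand-in that mirrors the three fields of DXVA_Slice_H264_Short so the example compiles without the Windows headers, and `fillSliceShort` is a hypothetical helper, not code from the poster's project.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Stand-in for DXVA_Slice_H264_Short (same three fields), used here only
// so the sketch is self-contained.
struct SliceShort {
    std::uint32_t BSNALunitDataLocation;
    std::uint32_t SliceBytesInBuffer;
    std::uint16_t wBadSliceChopping;
};

// Hypothetical helper: the slice NAL unit occupies buf[begin, end) and may
// start with 00 00 01 or with extra leading zero bytes (00 00 00 01).
// The location is set to the first byte of the 3-byte prefix.
bool fillSliceShort(const std::uint8_t* buf, std::size_t size,
                    std::size_t begin, std::size_t end, SliceShort& slice)
{
    assert(buf != nullptr);
    if (begin > end || end > size)
        return false;

    // Skip zero bytes that precede start_code_prefix_one_3bytes.
    std::size_t pos = begin;
    while (end - pos > 3 && buf[pos] == 0 && buf[pos + 1] == 0 && buf[pos + 2] == 0)
        ++pos;

    // Require the 3-byte prefix plus at least the NAL header byte.
    if (end - pos < 4 || buf[pos] != 0 || buf[pos + 1] != 0 || buf[pos + 2] != 1)
        return false;

    slice.BSNALunitDataLocation = static_cast<std::uint32_t>(pos);
    slice.SliceBytesInBuffer = static_cast<std::uint32_t>(end - pos);
    slice.wBadSliceChopping = 0;  // whole slice is in this buffer
    return true;
}
```

With a buffer that starts with 00 00 00 01, this yields a location of 1 rather than 0, so the driver sees a 3-byte prefix regardless of how the stream was written.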

Thanks for the quick response.

I think the manual is confusing about the start code, but it should be basically right. Media SDK should handle 3-byte and 4-byte start codes without problems; I think we have confirmed this by testing. The problem you saw is probably because DXVA accesses the media runtime directly and does not go through Media SDK.

Based on my understanding (I might be wrong), the manual only specifies the start code prefix as 3 bytes long.

Here is the Annex B byte stream NAL unit syntax:

- First of all, the NAL unit start code prefix is always 3 bytes long (start_code_prefix_one_3bytes).

- Depending on the NAL unit type and position, one additional 0x00 “zero_byte” may be prepended before “start_code_prefix_one_3bytes”. This pair (zero_byte + start_code_prefix_one_3bytes) is usually called the “4-byte start code”.

- The standard clearly defines the cases where the “4-byte start code” is used: for SPS, PPS, and the first NAL unit of a coded picture (the latter regardless of NAL unit type). For these cases MSDK uses the “4-byte start code” as the specification requires. In other cases the start code is always 3 bytes.
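The rule in the list above can be written down as a small sketch. The function names are hypothetical; the type values 7 (SPS) and 8 (PPS) are the H.264 nal_unit_type codes, and the `firstInAccessUnit` flag is assumed to be supplied by the caller, since detecting access unit boundaries is outside the scope of this example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// nal_unit_type is carried in the low 5 bits of the NAL header byte.
unsigned nalUnitType(std::uint8_t nalHeaderByte)
{
    return nalHeaderByte & 0x1Fu;
}

// True when a zero_byte precedes the 3-byte prefix: for SPS (7), PPS (8),
// and the first NAL unit of an access unit, whatever its type.
bool needsZeroByte(unsigned type, bool firstInAccessUnit)
{
    assert(type <= 31);
    return type == 7 || type == 8 || firstInAccessUnit;
}

// Start code size an encoder following this rule would write.
std::size_t startCodeSize(unsigned type, bool firstInAccessUnit)
{
    return needsZeroByte(type, firstInAccessUnit) ? 4 : 3;
}
```

A decoder front end should still accept either form wherever it appears; the sketch only describes what a writer following the rule would emit.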

Mark

I just saw your new post after I sent mine.

You are welcome. This discussion is very good and I like it.

So your solution would be: detect the NALU type and set BSNALunitDataLocation accordingly on Intel platforms?

Any suggestions for our product?

Mark

Now that I understand what is going on, my first thought is that I should simply respect the DXVA2 specification.

That way, I don't really need to know which GPU is in use. My first tests seem conclusive. I am adjusting BSNALunitDataLocation.

But...

I have always thought that start codes are a bad thing, not to mention the emulation prevention byte, the trick used to work around their deficiencies.

With all these things, "start code/emulation prevention byte/NALU size (1, 2 or 4 bytes)", the decoding process requires additional buffer management that is not necessary, in my opinion.
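As an illustration of the extra buffer handling mentioned here, a software parser that wants to read header fields typically has to strip emulation prevention bytes first. The following is a minimal sketch with a hypothetical function name; it copies the NAL unit payload and drops each 0x03 that follows two zero bytes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: removes emulation prevention bytes from a NAL unit
// payload. Inside a NAL unit, the sequence 00 00 03 always means
// "two zero bytes followed by an inserted 0x03", so the 0x03 is dropped.
std::vector<std::uint8_t> removeEmulationPrevention(const std::uint8_t* data,
                                                    std::size_t size)
{
    assert(data != nullptr || size == 0);
    std::vector<std::uint8_t> out;
    out.reserve(size);

    std::size_t zeros = 0;  // consecutive zero bytes copied so far
    for (std::size_t i = 0; i < size; ++i) {
        if (zeros >= 2 && data[i] == 0x03) {
            zeros = 0;      // skip the inserted byte and restart the count
            continue;
        }
        out.push_back(data[i]);
        zeros = (data[i] == 0x00) ? zeros + 1 : 0;
    }
    return out;
}
```

The copy into a second buffer is the kind of overhead the post is complaining about; a hardware decoder fed through DXVA2 normally receives the bytes as they are, so this step matters mainly for software-side parsing.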

So the problem lies with the people who write the specifications. I think they forget that the decoding process must be quick, very quick. One person encodes the file, millions play it. Because of their specification, we have to do code contortions that are counterproductive and unnecessary, again in my opinion.

My only suggestion: if Intel is in the MPEG/H.264/H.265 consortium, tell them to stop using stupid start codes. Just use the NALU size, with 4 bytes only, or 8 bytes if they think it will be needed in the future...

I just contacted the dev team and they confirmed the following:

"For MSDK decoders, it doesn’t matter if the stream has 3 or 4 byte start codes. When MSDK sends slices to the driver, MSDK always passes a 3 byte start code to the driver, whatever the original slice in the bitstream had."

So this seems to confirm what I said: DXVA does not go through MSDK but through the runtime (user mode driver). From a product point of view, this is clearly out of the Media SDK scope, but from a user experience point of view, we should consider this situation.

I will send your suggestion to the dev team.

Mark

Thank you for the details.

If one day you provide direct access to the Intel GPU decoder on Windows, I will play with it.
