Hello Sir,

Could you help us reduce a playback delay?

We found that the MSDK playback delay is caused by the decoder holding four H.264 packets before it decodes them and plays them on X11.

If the video stream from RTSP runs at fps = 1, the MSDK playback delay is at least four seconds.

In pipeline_decode.cpp at line 1899, in

mfxStatus CDecodingPipeline::RunDecoding()

the call sts = m_pmfxDEC->DecodeFrameAsync always returns -10 for the first four packets.

Thanks

Michael Wu

michaelwu@michaelwu-Broxton-P:/mnt/nfsshare/atom_rebuild/msdk_ori/build$ __bin/release/sample_decode h264 -hw -gpucopy::on -f 1 -rgb4 -vaapi -async 1 -window 50 100 1820 980 -i test_ch14_20.h264 -calc_latency -r

libva info: VA-API version 1.8.0

libva info: User environment variable requested driver 'iHD'

libva info: Trying to open /usr/lib/x86_64-linux-gnu/dri/iHD_drv_video.so

libva info: Found init function __vaDriverInit_1_8

libva info: va_openDriver() returns 0

Decoding Sample Version 8.4.27.0

Input video AVC

Output format RGB4 (using vpp)

Input:

Resolution 1920x1088

Crop X,Y,W,H 0,0,1920,1080

Output:

Resolution 1820x980

Frame rate 1.00

Memory type vaapi

MediaSDK impl hw

MediaSDK version 1.34

Decoding started

After DecodeFrameAsync= -10, if -10, need more data

pBitstream->DataLength = 6843

After DecodeFrameAsync= -10, if -10, need more data

pBitstream->DataLength = 5638

After DecodeFrameAsync= -10, if -10, need more data

pBitstream->DataLength = 5162

After DecodeFrameAsync= -10, if -10, need more data

pBitstream->DataLength = 4861

After DecodeFrameAsync= 0, if -10, need more data

pBitstream->DataLength = 4977

After DecodeFrameAsync= 2, if -10, need more data

After DecodeFrameAsync= 2, if -10, need more data

After DecodeFrameAsync= 0, if -10, need more data

pBitstream->DataLength = 6186

After DecodeFrameAsync= 0, if -10, need more data

pBitstream->DataLength = 5263

After DecodeFrameAsync= 0, if -10, need more data

pBitstream->DataLength = 4582

After DecodeFrameAsync= 0, if -10, need more data

pBitstream->DataLength = 4390

After DecodeFrameAsync= 0, if -10, need more data

pBitstream->DataLength = 4287
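The return values in the log above correspond to Media SDK status codes: -10 is MFX_ERR_MORE_DATA, 0 is MFX_ERR_NONE, and 2 is MFX_WRN_DEVICE_BUSY. The sketch below shows one way a decode loop might react to each value, and counts how many inputs were consumed before the first frame came out. It is only an illustration: the enum is a local stand-in that mirrors the numeric values so the code compiles without the SDK headers, and `NextAction`, `Action`, and `InputsBeforeFirstFrame` are hypothetical names that do not exist in sample_decode.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Local stand-ins that mirror the numeric mfxStatus values seen in the log.
// Real code would include the Media SDK headers and use the MFX_* names.
enum Status : int {
    kErrNone        = 0,    // MFX_ERR_NONE: a frame was produced
    kWrnDeviceBusy  = 2,    // MFX_WRN_DEVICE_BUSY: hardware busy, retry shortly
    kErrMoreData    = -10,  // MFX_ERR_MORE_DATA: feed more bitstream
    kErrMoreSurface = -11   // MFX_ERR_MORE_SURFACE: supply another work surface
};

// Hypothetical action names, for illustration only.
enum class Action { FeedBitstream, SupplySurface, WaitAndRetry, OutputFrame, Unhandled };

// Maps a DecodeFrameAsync return value to what the caller should do next.
Action NextAction(int sts) {
    switch (sts) {
        case kErrMoreData:    return Action::FeedBitstream;
        case kErrMoreSurface: return Action::SupplySurface;
        case kWrnDeviceBusy:  return Action::WaitAndRetry;
        case kErrNone:        return Action::OutputFrame;
        default:              return Action::Unhandled;  // not covered by this sketch
    }
}

// Counts the calls that returned "more data" before the first frame came out.
std::size_t InputsBeforeFirstFrame(const std::vector<int>& statuses) {
    std::size_t count = 0;
    for (int sts : statuses) {
        if (sts == kErrNone) break;
        if (sts == kErrMoreData) ++count;
    }
    return count;
}
```

Feeding the first eight return values from the log (-10, -10, -10, -10, 0, 2, 2, 0) to `InputsBeforeFirstFrame` gives 4, which is the four-frame delay described in this post.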

Hi Duncan,

Thanks for posting in Intel Community Forums.

We are checking your issue internally and will get back to you soon.

Regards,

Chithra

Hi Duncan,

We are forwarding this case to Subject Matter Experts and they will get back to you soon.

Regards,

Chithra

Hi Michael,

Sorry for the late response.

I think I can reproduce the issue, and I can tell the reason by checking your video:

- The sample video has 20 frames, and its display order and decode order are completely reversed.

- When the decode call reads the bitstream, it first reads the first frame in decode order, which is the last frame to be displayed. This is exactly what you observed: the decoder expects more data before it can decode the first display frame, so it returns MFX_ERR_MORE_DATA, which means it needs more input to continue decoding.

I can ask the dev team whether the decoder can output frames in decode order, but I want to check whether this is what you intend.

Mark

Hello Mark,

We need the first frame fed into MSDK to be the first frame sent to the display, without latency, provided that the first frame is an H.264 I-frame.

We do not fully understand the statement below. Could you give us some hints?

"The sample video has 20 frames, its display order and decode order are completely reverse"

Thank you for your reply.

Michael

Hi Michael,

I am just checking whether your video is normal content from your product. If it was extracted from a bug or is test content for your product, I will submit an investigation request.

This is not a normal video, since it looks weird when you play it, and the FFmpeg player also shows playback errors.

My previous comment was trying to say that this video stream has a special structure: the first frame displayed actually arrives at the end of the data stream, the second frame displayed arrives second from the end, and so on.

For display order and encoding order, you can refer to this web page:

Mark

Hello Mark,

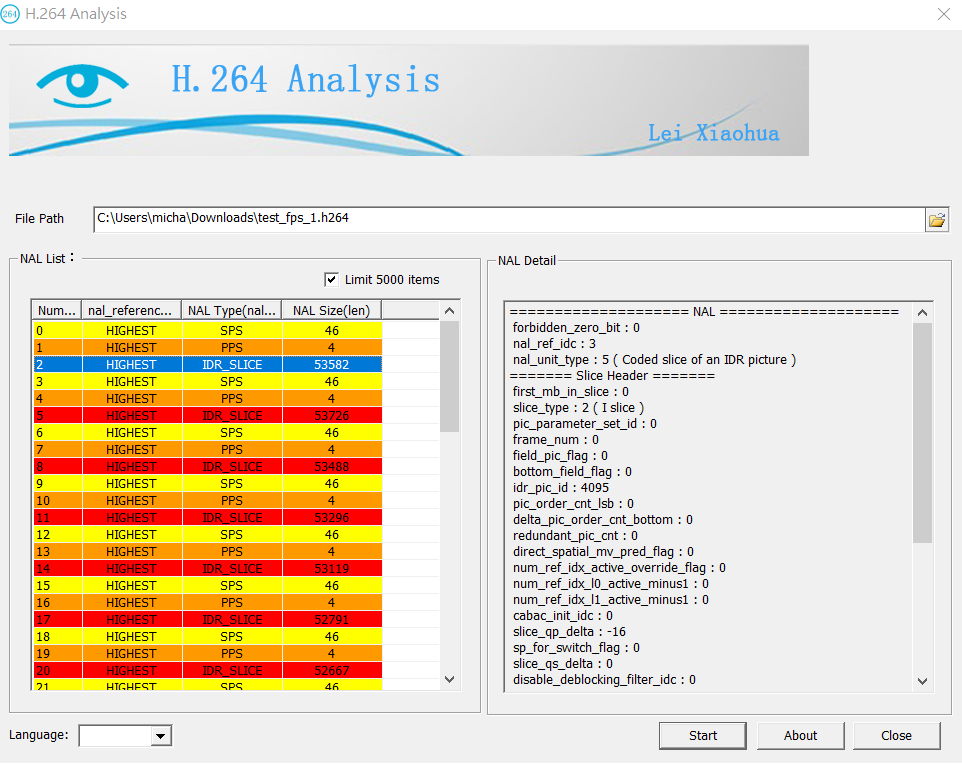

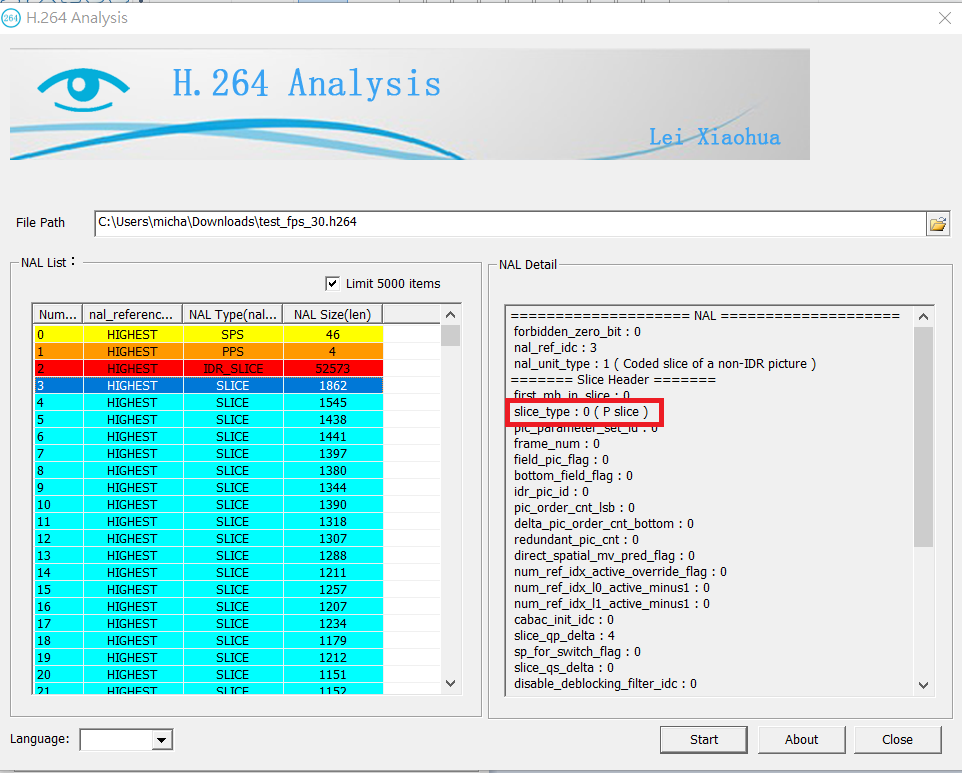

We reconfigured the IP camera to fps = 30, GOP = 30 and to fps = 1, GOP = 1. In both cases, MSDK decode and playback always queues four frames before playback starts. We want each input frame to be displayed without any delay.

With FPS = 1, GOP = 1, we receive one I-frame per second, with no P-frames or B-frames, so we hope MSDK does not need to queue any frames before playback.

With FPS = 30, GOP = 30, we receive a first I-frame followed by P-frames, and we hope MSDK does not need to queue any frames before playback in this case either.

Attached are our test video streams and log files (MSDK and ffplay):

20210104.tar.gz/

fps_1_ffplay_20210104.log

fps_1_msdk_20210104.log

fps_30_ffplay_20210104.log

fps_30_msdk_20210104.log

test_fps_1.h264

test_fps_30.h264

Thank you for your help and reply.

Michael Wu

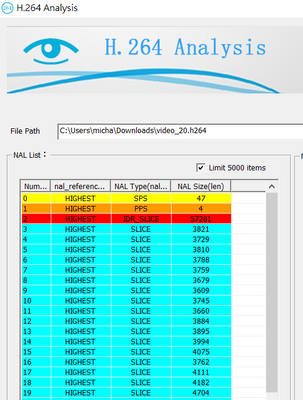

Added an analysis of the two H.264 streams:

fps = 1, GOP = 1

fps = 30, GOP = 30

Hi Michael,

Thanks so much for the new videos; I understand your goal now.

test_fps_30 has P slices and test_fps_1 has I slices only. I think your goal is to remove the delay during live streaming.

I tried your command on test_fps_30, and here is what I found:

- With the original command line, the latency starts at 31 ms and accumulates to 30757 ms by the end of 920 frames.

- When I removed the "-r" argument, the latency started at 26 ms and accumulated to 1000 ms by the end of 920 frames.

- When I removed "-rgb4", the latency stayed at an average of 8 ms.

- If I keep "-r" but remove "-gpucopy::on -f 30 -rgb4", the stream runs at 60 fps but keeps a latency of 67 ms (1 frame).

As you can see, "-r" rendering causes a latency of 1 frame. This is understandable, since Media SDK has to copy the frame to the rendering buffer.

"-rgb4" uses VPP, which also introduces a copy and adds another frame of latency.

So could you remove "-r" and "-rgb4" and see if you still have the latency?

I am not sure why the calculated latency accumulated during my test, but I believe this is related to the measurement algorithm.

So the latency was introduced not by the decoder but by the copying. If you can avoid color format conversion and rendering, you should get the lowest latency.

You can also remove "-f" to let the decoder run as fast as possible; this should match the live streaming use case.

Mark

Hello Mark,

Thank you for your suggestion and support.

We tried removing "-gpucopy::on", "-f 30", and "-rgb4", and got a small improvement.

(PS: we cannot remove "-r", because we need live view through the X11 renderer.)

The resulting latency is about 132.853 ms, i.e. four frames.

The log file is fps_30_msdk_20210105.log.

We need decoding without latency: if the RTSP stream runs at fps = 1, a latency of four frames means a delay of 4 seconds.

The attached liveVideo.zip shows live view (fps = 30 and fps = 1) from RTSP streams through MSDK + X11 rendering; you will see that the two video captures (fps = 30, fps = 1) are out of sync.

Thanks

Michael Wu
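The arithmetic behind the figures in this post can be written down as a short sketch. It assumes a constant frame rate and that the delay comes purely from holding a whole number of frames; the function names are hypothetical and only serve the illustration.

```cpp
#include <cassert>

// Delay in milliseconds caused by holding `frames` frames
// at a constant rate of `fps` frames per second.
double BufferingDelayMs(int frames, double fps) {
    assert(fps > 0.0);
    return frames * 1000.0 / fps;
}

// Inverse view: how many frame periods a measured latency corresponds to.
double LatencyInFrames(double latencyMs, double fps) {
    assert(fps > 0.0);
    return latencyMs * fps / 1000.0;
}
```

With these definitions, `BufferingDelayMs(4, 30.0)` is about 133.3 ms and `BufferingDelayMs(4, 1.0)` is 4000 ms, while `LatencyInFrames(132.853, 30.0)` is just under 4. So the same four-frame hold is barely noticeable at 30 fps but becomes a 4-second delay at 1 fps.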

Hi Michael,

Can you share your platform info, such as the processor ID and Media SDK version?

I rebuilt the latest MSDK release, and I think I can reproduce your report, as shown in the attachment. My latency number is half of yours, but I think this is related to the hardware.

But this doesn't change my conclusion: the latency is caused by copying the frame buffer from the MSDK output to the graphics rendering buffer. In my log file, I also wrote the output to a file, and you can see that the latency decreased a lot. This is further proof of my conclusion.

Let me repeat my conclusion: Media SDK uses the hardware codec in the integrated GPU of the Intel processor, which has its own working frame buffer. If you want to render a frame to the display, the copy from the decoder output buffer to the rendering buffer cannot be avoided, and this should be the latency you observed. To decrease this latency, you can disable rendering or output to a file.

But let me do a final check with the dev team. According to the following document, "-calc_latency" seems to have some limitations:

https://github.com/Intel-Media-SDK/MediaSDK/blob/master/doc/samples/readme-decode_linux.md

"-low_latency and -calc_latency options should be used with H.264 streams having exactly 1 slice per frame. Preferable streams for an adequate latency estimate are generated by Conferencing Sample."

Mark

Hello Mark,

From your comment,

"The latency was caused by frame buffer copying from MSDK output to graphic rendering buffer."

We thought GPU copy might solve this latency issue, but with -gpucopy::on set, the latency did not improve. Is there any way to use GPU copy or OpenCL to fix the latency of "copy MSDK output to graphic rendering buffer"?

BTW, our platform information is as follows:

Processor ID: Intel(R) Atom(TM) Processor E3950 @ 1.60GHz

MediaSDK version 1.34

libva info: VA-API version 1.8.0

Thanks for your support,

Michael Wu

Hi Michael,

I think "GPU copy" here means moving the bits within GPU memory. This is outside the scope of Media SDK; we might consider it for a future product, but not for this one, so you could look into the Linux graphics stack for a method. The "gpucopy::on" option in the sample has a different meaning; sorry for the confusion.

Since you are using Apollo Lake, you can also try our Media SDK for Embedded Linux. Although it officially supports Yocto, it can also be used on Ubuntu. Its rendering path is different, AFAIK.

I am still waiting for the dev team's response; I will keep you updated.

Mark

Hi Michael,

Just an update on the dev team's response: they confirmed my conclusion, and they are looking into some improvements in the coming weeks.

The point is, "-vaapi" should be good enough to enable GPU copying.

Since the dev team is on vacation, we expect a 2-week delay on this; I will keep you updated.

In the meantime, I also encourage you to try Media SDK for Embedded Linux, which uses Wayland instead of X11 as the graphics engine and is also optimized for Apollo Lake.

Mark

Hi Michael,

Just a quick question: which OS were you using when you ran the previous tests?

Mark

Hello Mark,

Our test OS is as follows:

Linux 5.4.0-56-generic x86_64 GNU/Linux

18.04.1-Ubuntu

Thanks

Michael Wu

Thanks for the info,

I have passed this information to the dev team and will keep you updated on their progress.

Mark

Hello Mark,

Is there any update on this issue?

Thanks

Michael Wu

Hi Michael,

The dev team has started investigating; let me ask them again.

Mark Liu

Hi @duncanchou ,

The stream you shared has a DPB size of 4, which is why you observe a latency of 4 frames.

Please read this post, where we explained the reason for the latency and a way to work around it: https://community.intel.com/t5/Media-Intel-oneAPI-Video/h-264-decoder-gives-two-frames-latency-while-decoding-a-stream/m-p/1099706#M10087

Regards,

Dmitry
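To make the link between DPB size and latency concrete, here is a deliberately simplified model of decoder output, not the actual Media SDK or H.264 bumping logic. It assumes the decoder holds up to `dpbSize` decoded pictures and releases the earliest one in display order only when a new picture would overflow the buffer. `SimulateOutput` and `InputsBeforeFirstOutput` are hypothetical names used only for this illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <vector>

constexpr int kNoOutput = -1;  // marks an input that produced no output picture

// For each picture fed in decode order (identified by its display-order index),
// returns the display-order index released at that step, or kNoOutput.
std::vector<int> SimulateOutput(const std::vector<int>& decodeOrder,
                                std::size_t dpbSize) {
    std::set<int> waiting;  // pictures held back, sorted by display order
    std::vector<int> released;
    released.reserve(decodeOrder.size());
    for (int id : decodeOrder) {
        assert(id >= 0);  // -1 is reserved for "no output"
        waiting.insert(id);
        if (waiting.size() > dpbSize) {
            // Buffer overflowed: release the earliest picture in display order.
            released.push_back(*waiting.begin());
            waiting.erase(waiting.begin());
        } else {
            released.push_back(kNoOutput);
        }
    }
    return released;
}

// Number of inputs consumed before the first picture is released.
std::size_t InputsBeforeFirstOutput(const std::vector<int>& decodeOrder,
                                    std::size_t dpbSize) {
    std::size_t count = 0;
    for (int out : SimulateOutput(decodeOrder, dpbSize)) {
        if (out != kNoOutput) break;
        ++count;
    }
    return count;
}
```

Under this model, an I-frame-only stream fed in display order with `dpbSize = 4` produces nothing for the first four inputs and releases frame 0 on the fifth, which matches the four MFX_ERR_MORE_DATA results in the original log. With `dpbSize = 0`, every input is released immediately.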

Hi Michael,

Sorry for the late response to your issue.

Dmitry made a good catch about the Decoded Picture Buffer: if it is greater than 1, the camera will send the frame in several packets, which causes the delay. This setting should be in the bitstream from your camera. If you can't change it, you can set the DataFlag of mfxBitstream to MFX_BITSTREAM_COMPLETE_FRAME.

Let me know if you are still working on this issue; if not, I will close the case.

Mark
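As a rough sketch of the DataFlag suggestion, the code below marks a buffer as holding one complete frame before it is handed to the decoder. It assumes each RTSP access unit has already been assembled into a single buffer. The struct and constant are stand-ins that mirror the relevant mfxBitstream fields and the value of MFX_BITSTREAM_COMPLETE_FRAME so the example compiles without the SDK headers, and `MarkCompleteFrame` is a hypothetical helper. In real code, use mfxBitstream and the MFX_* constant directly.

```cpp
#include <cassert>
#include <cstdint>

// Stand-in for the value of MFX_BITSTREAM_COMPLETE_FRAME.
constexpr std::uint16_t kBitstreamCompleteFrame = 0x0001;

// Stand-in for the mfxBitstream fields used here.
struct BitstreamStandIn {
    std::uint8_t* Data = nullptr;
    std::uint32_t DataOffset = 0;
    std::uint32_t DataLength = 0;
    std::uint32_t MaxLength = 0;
    std::uint16_t DataFlag = 0;
};

// Points the bitstream at one fully assembled frame and tells the decoder
// that it does not need to wait for more data to find the frame boundary.
void MarkCompleteFrame(BitstreamStandIn& bs, std::uint8_t* frame,
                       std::uint32_t size) {
    assert(frame != nullptr && size > 0);
    bs.Data = frame;
    bs.DataOffset = 0;
    bs.DataLength = size;
    bs.MaxLength = size;
    bs.DataFlag |= kBitstreamCompleteFrame;
}
```

The flag is only appropriate when the buffer really contains exactly one whole frame; setting it on partial packets would mislead the decoder.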
