I don't have Broadwell hardware in front of me yet so can you tell me which fine-grain SVM capabilities are supported in the latest driver on Gen8 devices? Just FINE_GRAIN_BUFFER?

If FINE_GRAIN_SYSTEM is supported then can an 8-16GB host address space be shared?

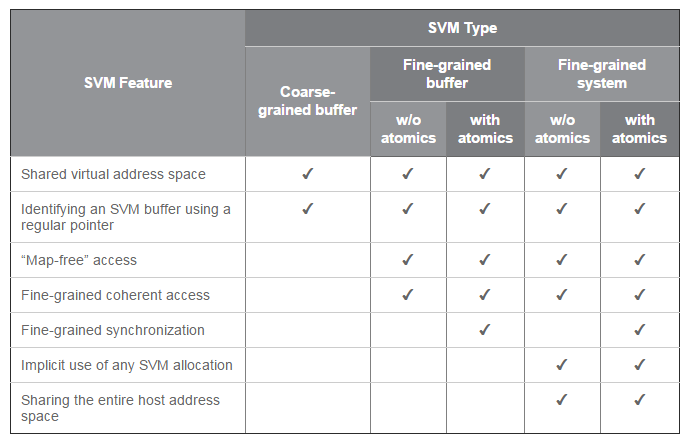

The OpenCL 2.0 SVM article does a nice job summarizing the capability bits. Can you list which are supported in the .4080 driver and which might eventually be supported?

- CL_DEVICE_SVM_COARSE_GRAIN_BUFFER for coarse-grained buffer SVM
- CL_DEVICE_SVM_FINE_GRAIN_BUFFER for fine-grained buffer SVM
- CL_DEVICE_SVM_FINE_GRAIN_SYSTEM for fine-grained system SVM
- CL_DEVICE_SVM_ATOMICS for atomics support
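For anyone checking their own device: these four capabilities come back as one bitfield when you query CL_DEVICE_SVM_CAPABILITIES with clGetDeviceInfo. Below is a minimal sketch of decoding that bitfield. The bit values are mirrored from the OpenCL 2.0 header, while `decode_svm_caps` and the sample values are hypothetical and only for illustration; they are not output from any driver.

```python
# Bit values mirrored from the OpenCL 2.0 cl.h header
# (type cl_device_svm_capabilities).
CL_DEVICE_SVM_COARSE_GRAIN_BUFFER = 1 << 0
CL_DEVICE_SVM_FINE_GRAIN_BUFFER = 1 << 1
CL_DEVICE_SVM_FINE_GRAIN_SYSTEM = 1 << 2
CL_DEVICE_SVM_ATOMICS = 1 << 3

SVM_FLAGS = [
    ("CL_DEVICE_SVM_COARSE_GRAIN_BUFFER", CL_DEVICE_SVM_COARSE_GRAIN_BUFFER),
    ("CL_DEVICE_SVM_FINE_GRAIN_BUFFER", CL_DEVICE_SVM_FINE_GRAIN_BUFFER),
    ("CL_DEVICE_SVM_FINE_GRAIN_SYSTEM", CL_DEVICE_SVM_FINE_GRAIN_SYSTEM),
    ("CL_DEVICE_SVM_ATOMICS", CL_DEVICE_SVM_ATOMICS),
]


def decode_svm_caps(bits):
    """Return the names of the SVM capability flags set in `bits`.

    Hypothetical helper: `bits` stands in for the value that
    clGetDeviceInfo(..., CL_DEVICE_SVM_CAPABILITIES, ...) would return.
    """
    return [name for name, flag in SVM_FLAGS if bits & flag]


# Illustrative inputs only (assumed values, not measured on hardware).
coarse_only = CL_DEVICE_SVM_COARSE_GRAIN_BUFFER
fine_buffer_with_atomics = (
    CL_DEVICE_SVM_COARSE_GRAIN_BUFFER
    | CL_DEVICE_SVM_FINE_GRAIN_BUFFER
    | CL_DEVICE_SVM_ATOMICS
)

for label, bits in [("coarse only", coarse_only),
                    ("fine-grain buffer + atomics", fine_buffer_with_atomics)]:
    print(label, "->", decode_svm_caps(bits))
```

A device without FINE_GRAIN_SYSTEM set cannot share arbitrary host allocations, so that bit is the one to check before planning to share a large host address space.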

Hi Allan,

It depends on the Broadwell hardware you will have:

- If it has HD 5300 graphics, only coarse-grain buffer SVM is supported.
- If it has HD 5500 graphics or above, both coarse-grain buffer SVM and fine-grain buffer SVM are supported, with or without atomics.

I can't comment on fine-grain system SVM capabilities, since I haven't seen any production systems that support it yet.

Thanks, that's helpful.

My follow-up Broadwell question: what are the latest memory allocation limits (maximum single allocation and total) for clCreateBuffer() and clSVMAlloc()?

I'm planning out an IGP demo that could potentially benefit from a large heap.

Allan,

I got hold of a colleague with a Broadwell system, and it reports the following. The global memory size is about 1.6 GB, but individual buffer allocations max out at about 400 MB (I believe this is on a system with 4 GB of RAM):

Using platform: Intel(R) Corporation and device: Intel(R) HD Graphics 5500.

OpenCL Device info:

CL_DEVICE_VENDOR :Intel(R) Corporation

CL_DEVICE_NAME :Intel(R) HD Graphics 5500

CL_DRIVER_VERSION :10.18.15.9999

CL_DEVICE_PROFILE :FULL_PROFILE

CL_DEVICE_VERSION :OpenCL 2.0

CL_DEVICE_OPENCL_C_VERSION :OpenCL C 2.0 ( using USC )

CL_DEVICE_MAX_COMPUTE_UNITS : 24

CL_DEVICE_MAX_WORK_ITEM_DIMENSIONS : 3

CL_DEVICE_MAX_WORK_ITEM_SIZES : ( 256, 256, 256)

CL_DEVICE_MAX_WORK_GROUP_SIZE : 256

CL_DEVICE_MEM_BASE_ADDR_ALIGN : 1024

CL_DEVICE_MIN_DATA_TYPE_ALIGN_SIZE : 128

CL_DEVICE_MAX_CLOCK_FREQUENCY : 900

CL_DEVICE_IMAGE2D_MAX_WIDTH : 16384

CL_DEVICE_GLOBAL_MEM_SIZE : 1669927680.0

CL_DEVICE_LOCAL_MEM_SIZE : 65536.0

CL_DEVICE_MAX_MEM_ALLOC_SIZE : 417481920.0
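To put the two memory numbers above in perspective, here is a small sketch of the arithmetic. The byte counts are copied from the device info dump; `plan_chunks` is a hypothetical helper showing one way a large heap could be split into buffers that each stay under the per-allocation limit. It is a planning aid under those assumptions, not a description of how the driver manages memory.

```python
# Values copied from the device info reported above (bytes).
GLOBAL_MEM_SIZE = 1669927680
MAX_MEM_ALLOC_SIZE = 417481920


def to_gib(n_bytes):
    """Convert bytes to binary gigabytes (GiB)."""
    return n_bytes / 2**30


def to_mib(n_bytes):
    """Convert bytes to binary megabytes (MiB)."""
    return n_bytes / 2**20


def plan_chunks(total_bytes, max_alloc):
    """Split `total_bytes` into chunk sizes no larger than `max_alloc`.

    Hypothetical helper: each returned size could be passed to a separate
    clCreateBuffer() or clSVMAlloc() call.
    """
    if total_bytes < 0 or max_alloc <= 0:
        raise ValueError("total_bytes must be >= 0 and max_alloc must be > 0")
    full, rest = divmod(total_bytes, max_alloc)
    return [max_alloc] * full + ([rest] if rest else [])


print(f"global memory : {to_gib(GLOBAL_MEM_SIZE):.2f} GiB")
print(f"max allocation: {to_mib(MAX_MEM_ALLOC_SIZE):.1f} MiB")

# On this report the per-allocation limit is exactly a quarter of global memory.
print("ratio:", GLOBAL_MEM_SIZE // MAX_MEM_ALLOC_SIZE,
      "remainder:", GLOBAL_MEM_SIZE % MAX_MEM_ALLOC_SIZE)

# Example: a 1 GB (10**9 byte) heap needs three buffers on this device.
print(plan_chunks(10**9, MAX_MEM_ALLOC_SIZE))
```

So a heap larger than roughly 400 MB has to be spread across several allocations on this configuration, and the total still has to fit within the reported global memory size.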

OK, thanks for providing such detailed answers. I'll try it out on my HD 6000 NUC when it arrives.

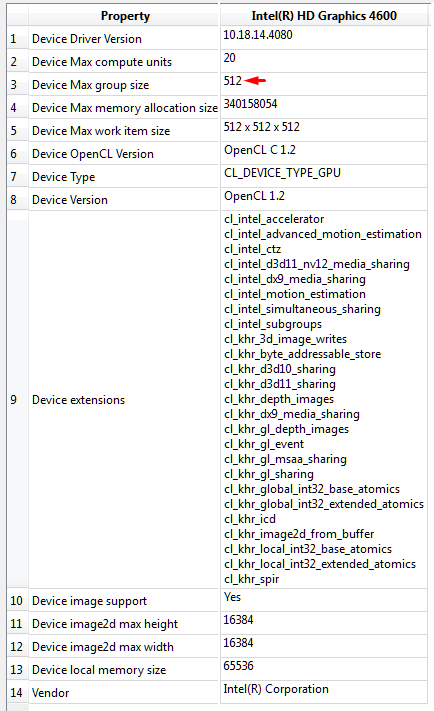

I'm surprised to see that the max workgroup size has been reduced to 256 from Haswell's 512.

Is this a permanent limit going forward?

Haswell reported 512.

Allan,

Here is the answer from our compute architect:

You can compute the workgroup size from the number of hardware threads in a subslice. BDW dropped the number of EUs per subslice from 10 to 8.

HSW: 10 EUs/subslice * 7 threads * SIMD8 = 560, rounded down to 512.

BDW: 8 EUs/subslice * 7 threads * SIMD8 = 448, rounded down to 256.

Not sure about the permanency of this change though.
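The arithmetic above can be reproduced in a few lines. This is only a sketch: the EU, thread, and SIMD figures come from the architect's answer, while the rule "round down to the largest power of two" is an assumption inferred from the two results (560 to 512, 448 to 256), not something the answer states. The function names are hypothetical.

```python
def largest_pow2_le(n):
    """Return the largest power of two that is <= n (n must be >= 1)."""
    if n < 1:
        raise ValueError("n must be >= 1")
    return 1 << (n.bit_length() - 1)


def max_workgroup_size(eus_per_subslice, threads_per_eu=7, simd_width=8):
    """Work-items resident in one subslice, and the assumed reported limit.

    Returns (raw_count, rounded), where rounded applies the assumed
    power-of-two rounding to the raw work-item count.
    """
    raw = eus_per_subslice * threads_per_eu * simd_width
    return raw, largest_pow2_le(raw)


for name, eus in [("HSW", 10), ("BDW", 8)]:
    raw, rounded = max_workgroup_size(eus)
    print(f"{name}: {eus} EUs * 7 threads * SIMD8 = {raw} -> {rounded}")
```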

I asked because, for some kernels, launching one workgroup of 448 items (vs. 2 x 224) can avoid an extra round of off-slice communication while taking advantage of faster intra-subslice / intra-workgroup communication through SLM. Not a huge deal.

OK, thanks for answering my long stream of questions. Pumped to get the HD 6000 in my paws on Monday. :)
