I invested quite a sum in a computer with top specs and bought four 14 TB Toshiba MG07ACA14TE drives to build a RAID 5 array with a total of around 40 TB.

Now the initialization process takes 4 hours per percent. That's right: a whopping 400 hours to complete. This can't be right. That's almost 17 days!!
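The progress rate can be turned into an implied per-drive throughput. The sketch below assumes that initialization touches every sector of each 14 TB member drive exactly once and that progress is linear. Both are simplifying assumptions, not documented Intel RST behavior. Under them, 4 hours per percent works out to under 10 MB/s per drive, far below the sequential speed of a modern 7200 rpm drive.

```python
def implied_drive_throughput_mb_s(drive_tb: float, hours_per_percent: float) -> float:
    """Per-drive throughput (MB/s) implied by an initialization progress rate.

    Simplifying assumptions: the whole drive surface is processed exactly once
    and progress is linear over time.
    """
    total_seconds = hours_per_percent * 100 * 3600  # time for 100 % at the observed rate
    drive_bytes = drive_tb * 1e12                    # drive vendors use decimal TB
    return drive_bytes / total_seconds / 1e6         # bytes/s -> MB/s


hours_per_percent = 4  # rate observed by the original poster
total_hours = hours_per_percent * 100
print(f"Total: {total_hours} h ({total_hours / 24:.1f} days)")
print(f"Implied rate: {implied_drive_throughput_mb_s(14, hours_per_percent):.1f} MB/s per drive")
```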

I installed the latest drivers yesterday and have the latest OS and the latest versions of everything else.

The computer has an i9-14900F, 192 GB of RAM, and fast M.2 drives for the system.

Everything is supposed to be very fast, but the RAID is so slow that even my 2017 computer running the same Intel RAID is much faster.

What could be the issue here?

Hello Backtrader,

Thank you for posting on the Intel® communities. I can imagine how frustrated you must feel; I will do my best to clarify any doubts you have.

The initialization time depends on disk performance, which differs between Gen3/Gen4 NVMe drives, SATA SSDs, and 5400 rpm and 7200 rpm SATA HDDs. This behavior could also be related to the drives' cache design: we have previously observed a performance issue caused by SATA HDD cache design, for example with the Seagate ST500LX025.

If you have any questions, just let me know.

Regards,

Deivid A.

Intel Customer Support Technician

I use four Toshiba MG07ACA14TE series hard disks (14 TB, 3.5").

Now, after running for 42 hours, it's at 10%.

Hello Backtrader,

Thanks for your response. I will check internally whether there is more information about this behavior with Intel® Rapid Storage Technology (Intel® RST). I will get back to you as soon as possible.

Best regards,

Deivid A.

Intel Customer Support Technician

I recently read an article from Western Digital.

It said that a RAID-5 rebuild runs at a maximum of 10 MB/s, and it's true that it takes a lot of time.

On their high-capacity models, they had added a special tweak to the HDD firmware.

Sorry if this isn't much help, but it was news even to me.

Hi

These are Toshiba drives, not Western Digital, and it's not a rebuild but an initialization.

Thanks,

Hello Backtrader,

Thanks for your time. Since you are creating a 40 TB RAID volume, this behavior is expected. It could also be related to your hardware, so I recommend checking with ASUS to confirm that you are using the latest driver.

Regards,

Deivid A.

Intel Customer Support Technician

Hi,

I use the latest driver as of 27.12.2023.

It has now passed 56% after nearly 10 days, so my estimate wasn't far off. For some reason it seems to be going slightly faster now, though I don't really know what goes on during this process anyway.
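The two progress reports in this thread (10% after 42 hours, and 56% after nearly 10 days) can be extrapolated to a total duration. This minimal sketch assumes progress is linear in time, which is only approximately true since the poster saw it speed up slightly, and treats "nearly 10 days" as 240 hours. Both checkpoints point to a total of roughly 420 to 430 hours.

```python
def extrapolate(elapsed_hours: float, percent_done: float) -> tuple[float, float]:
    """Return (estimated total hours, estimated remaining hours), assuming linear progress."""
    if not 0 < percent_done <= 100:
        raise ValueError("percent_done must be in (0, 100]")
    total = elapsed_hours * 100 / percent_done
    return total, total - elapsed_hours


# Checkpoints reported in the thread; 240 h approximates "nearly 10 days".
for elapsed, pct in [(42, 10), (240, 56)]:
    total, remaining = extrapolate(elapsed, pct)
    print(f"{pct:>3}% after {elapsed} h -> total ~{total:.0f} h, "
          f"remaining ~{remaining:.0f} h ({remaining / 24:.1f} days)")
```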

Yes, it's a large array, but I still don't understand why such an operation should take this long on drives capable of much faster reads and writes.

But if you say this is expected, what can I do other than wait for it to finish?

Thanks,

Hello Backtrader,

After checking your thread, I would like to know if you need further assistance.

If so, please let me know.

Regards,

Deivid A.

Intel Customer Support Technician

It finally finished initializing, so I started transferring data from various sources.

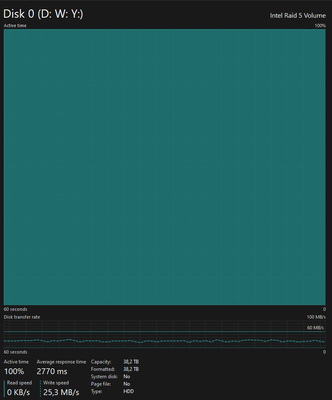

The write speed is very slow. I can't believe this is normal behaviour. It's the same from network, SSD, memory or other disk.

I have transferred about 1 TB til now, all with speeds going from zero to peaks of 60-70 MB/s for a few seconds, and then back to zero. Normally it's like in the picture below.

Still having problems?

You can try disabling write-cache buffer flushing and then enabling write-back cache in the Intel Optane GUI. The write-back cache option is greyed out until you disable buffer flushing.

Then test the array with CrystalDiskMark.

Also, which block size did you format the array with?

I think that with a 128k stripe size, you should choose a 128k block (allocation unit) size when formatting. I tested a three-drive array formatted with the default block size and got somewhat worse write speeds. I'm not sure about this, though.
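Whether a write is cheap on RAID 5 depends on how it lines up with a full stripe. Assuming the 128k setting is the per-drive chunk (strip) size, a four-drive RAID 5 holds 3 × 128 KiB = 384 KiB of data per stripe. A write covering whole stripes lets the controller compute parity from the new data alone, while a smaller write forces it to read existing data or parity first. The helper names below are illustrative, not part of any Intel tool.

```python
KIB = 1024


def full_stripe_bytes(strip_kib: int, drives: int) -> int:
    """Data capacity of one RAID 5 stripe: one strip per drive, minus one strip of parity."""
    if drives < 3:
        raise ValueError("RAID 5 needs at least 3 drives")
    return strip_kib * KIB * (drives - 1)


def is_full_stripe_write(offset: int, length: int, strip_kib: int, drives: int) -> bool:
    """True if the write starts on a stripe boundary and covers whole stripes only."""
    width = full_stripe_bytes(strip_kib, drives)
    return length > 0 and offset % width == 0 and length % width == 0


width = full_stripe_bytes(128, 4)
print(f"Full stripe on 4 drives with 128 KiB strips: {width // KIB} KiB")
# A single 128 KiB cluster is only a third of a stripe, so parity needs extra reads.
print(is_full_stripe_write(0, 128 * KIB, 128, 4))
print(is_full_stripe_write(0, 3 * 128 * KIB, 128, 4))
```

In this simplified view, large sequential copies have a much better chance of producing full-stripe writes than small scattered ones, which may be part of why a write-back cache that can gather writes helps.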

Which motherboard are you using?

I tried your suggestion. Can't really notice any difference.

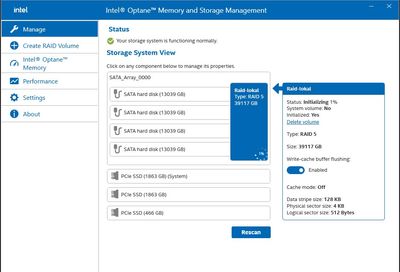

It is 128k stripe size, physical sector size 4KB and logical sector size 512 bytes.
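A 4 KB physical / 512-byte logical sector layout (often called 512e) means the drive emulates 512-byte sectors on top of 4 KiB ones, so I/O that doesn't start on a 4 KiB boundary makes the drive read and rewrite whole physical sectors internally. One way to reason about it is to check whether the partition's starting byte offset is a multiple of both the physical sector size and the stripe size. The offsets below are only examples: 1 MiB is the default partition alignment in Windows since Vista, and 63 × 512 bytes is the legacy offset used by old MBR partitioning tools. Read the actual offset from your own system.

```python
PHYSICAL_SECTOR = 4096  # reported physical sector size (bytes)
STRIPE = 128 * 1024     # 128k stripe size from the array settings (bytes)


def check_alignment(offset_bytes: int) -> dict[str, bool]:
    """Report whether a partition start offset is aligned to the sector and stripe sizes."""
    return {
        "4k_sector": offset_bytes % PHYSICAL_SECTOR == 0,
        "stripe": offset_bytes % STRIPE == 0,
    }


examples = {
    "1 MiB (modern Windows default)": 1024 * 1024,
    "63 sectors x 512 B (legacy MBR)": 63 * 512,
}
for label, offset in examples.items():
    print(label, check_alignment(offset))
```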

I have an Asus Z790f.

Same problem here: i9-14900K, Z790 chipset. Write speed seems to be capped at 60-70 MB/s.

And that is the CrystalDiskMark benchmark speed. Real-world performance is even slower, 18-20 MB/s.

I guess I will move all the data to a new drive (two, of course) and then create a new RAID with mirroring only. I'll lose about 8 TB, but this is so slow I can't stand it.
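For a rough sense of the capacity trade-off, usable space is (n - 1) × drive size for RAID 5, n/2 × drive size for RAID 10, and one drive's worth for a two-drive RAID 1 mirror. The sketch below applies those formulas to the original four 14 TB drives. The poster's "about 8 TB" figure depends on which drives go into the new mirror, which isn't stated, so this doesn't try to reproduce it. It also shows why a 42 TB RAID 5 appears as about 38 TB in Windows, which reports binary units (TiB) labelled as TB.

```python
def usable_tb(level: str, drives: int, drive_tb: float) -> float:
    """Usable capacity in decimal TB for a few common RAID levels (ignores metadata overhead)."""
    if level == "raid5":
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * drive_tb
    if level == "raid10":
        if drives < 4 or drives % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        return drives // 2 * drive_tb
    if level == "raid1":
        if drives != 2:
            raise ValueError("this sketch only models a two-drive mirror")
        return drive_tb
    raise ValueError(f"unsupported level: {level}")


for level, n in [("raid5", 4), ("raid10", 4), ("raid1", 2)]:
    tb = usable_tb(level, n, 14)
    tib = tb * 1e12 / 2**40  # the figure Windows shows, labelled "TB"
    print(f"{level} with {n} x 14 TB: {tb:g} TB ({tib:.1f} TiB)")
```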

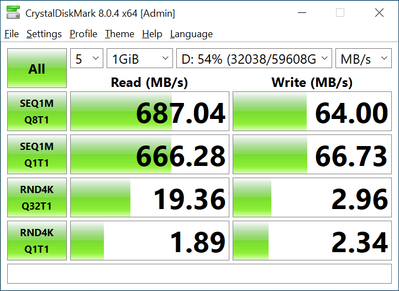

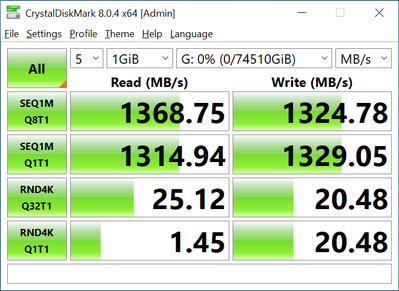

Could you do a CrystalDiskMark benchmark (ALL) on your array?

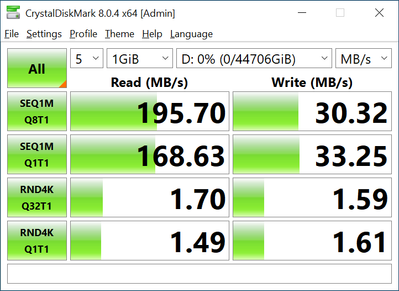

These are the figures I was getting on my 5x16 TB Seagate IronWolf Pro RAID-5 array (after expanding from 4 to 5 drives):

I decided to backup and disassemble my 5x16 TB array and test with different setups.

Here's the benchmark results for 4x16 TB RAID-5, not initialized:

Stripe size 128k, block size 128k, Write cache flushing OFF, Writeback cache ON, and init NOT started.

I then pressed "Initialize" and benchmarked again, with initialization running at 0%

Tested with three and five drives too. With five drives, initialization is forced to start when creating the array, so I was not able to benchmark it without initialization. (It will probably take three weeks to finish init..)

Could it be that our RAID setups got stuck in init mode, even if the GUI says init is finished?

Did you restart your computer while initing the array? I restarted mine a couple of times while expanding my array from 4 to 5 drives.

Just for fun, I did a RAID-0 test as well, with all five drives:

Single drives are performing around 280 MB/sec, both read and write.
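Using the ~280 MB/s single-drive figure, a rough upper bound for large sequential transfers can be sketched by assuming perfect scaling: RAID 0 spreads data over all n drives, while a RAID 5 full-stripe write carries data on n - 1 drives and parity on the remaining one. Real arrays land below these numbers and slow down further on inner tracks, so treat them as ceilings rather than predictions. Note that 280 MB/s was measured on the IronWolf Pro drives, not the Toshiba MG07ACA14TE.

```python
def raid0_ceiling(single_mb_s: float, drives: int) -> float:
    """Ideal sequential throughput for RAID 0: every drive carries data."""
    return single_mb_s * drives


def raid5_write_ceiling(single_mb_s: float, drives: int) -> float:
    """Ideal full-stripe sequential write for RAID 5: one drive's worth of bandwidth goes to parity."""
    if drives < 3:
        raise ValueError("RAID 5 needs at least 3 drives")
    return single_mb_s * (drives - 1)


SINGLE = 280  # MB/s, single-drive figure reported in this thread
print(f"RAID 0, 5 drives: {raid0_ceiling(SINGLE, 5):.0f} MB/s")
for n in (3, 4, 5):
    print(f"RAID 5, {n} drives (write): {raid5_write_ceiling(SINGLE, n):.0f} MB/s")
```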

Update:

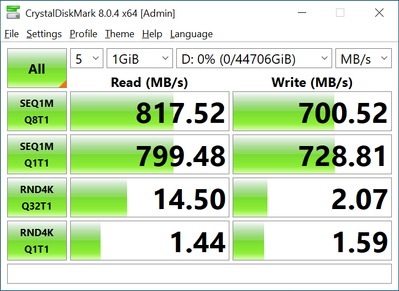

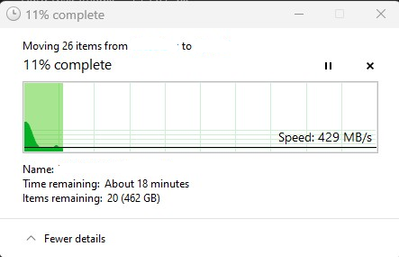

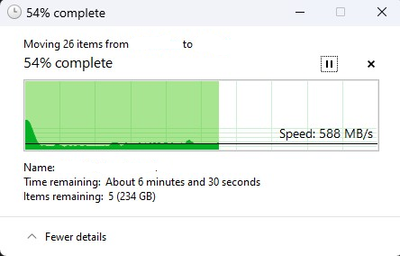

Yesterday I only transferred small files. Now I am moving 0.6 TB of large VHD files, and as you can see, the new settings make a tremendous difference.

For some reason, the drives seemed capable of moving a few gigabytes in a matter of seconds before, but as far as I can tell that was only buffering, because the drives stayed busy at 100% for quite some time after the copy process seemed to be over.

Now it peaks at nearly 900 MB/s, with sustained speeds of around 400-600 MB/s. This is how it should have been all along. If this continues, I think you have solved my frustrating problem. THANK YOU!!

That's more like the speeds you would expect from a 4x14 TB array! Woohoo!

Which settings did you change?

As you point out, the first few seconds are the write cache filling up, so the real drive speed is the flatter, sustained transfer rate you're getting. 600 MB/s on big files is in line with what I got on my 4-drive array before expanding it to 5 drives.
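The burst-then-plateau pattern can be illustrated with a simple model: the source feeds data into a write cache faster than the array drains it, the cache fills, and from then on the copy runs at the array's sustained speed; after the copy dialog closes, the array keeps writing until the cache is empty. This is a deliberately simplified sketch (fixed rates, a single cache of known size), not a description of how Intel RST or Windows actually manages its caches, and the numbers in the example are hypothetical.

```python
def copy_timeline(total_mb: float, source_mb_s: float,
                  disk_mb_s: float, cache_mb: float) -> tuple[float, float]:
    """Return (seconds until the copy *looks* finished, seconds until the disks are done).

    Simplified model: data enters a write-back cache at the source rate and
    leaves it at the disk rate; once the cache is full, intake is throttled
    to the disk rate.
    """
    if source_mb_s <= disk_mb_s:
        # The disks keep up, so nothing piles up in the cache.
        t = total_mb / source_mb_s
        return t, t
    fill_time = cache_mb / (source_mb_s - disk_mb_s)
    if total_mb <= source_mb_s * fill_time:
        apparent = total_mb / source_mb_s        # whole copy accepted before the cache is full
    else:
        accepted = source_mb_s * fill_time       # data taken in before the cache filled
        apparent = fill_time + (total_mb - accepted) / disk_mb_s
    disk_done = total_mb / disk_mb_s              # disks write continuously from t = 0
    return apparent, disk_done


# Hypothetical numbers: a 20 GB copy from a fast SSD into an array sustaining 500 MB/s.
apparent, done = copy_timeline(total_mb=20_000, source_mb_s=2_000, disk_mb_s=500, cache_mb=8_000)
print(f"Copy dialog finishes after ~{apparent:.0f} s; disks stay busy until ~{done:.0f} s")
```

In this model, the cache size only changes how long the opening burst lasts; the plateau and the time the disks actually finish are set by the array's sustained speed.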

One thing to note: as the array fills up, speeds will drop, to roughly 50% when it is 100% full.
