
Hi All,

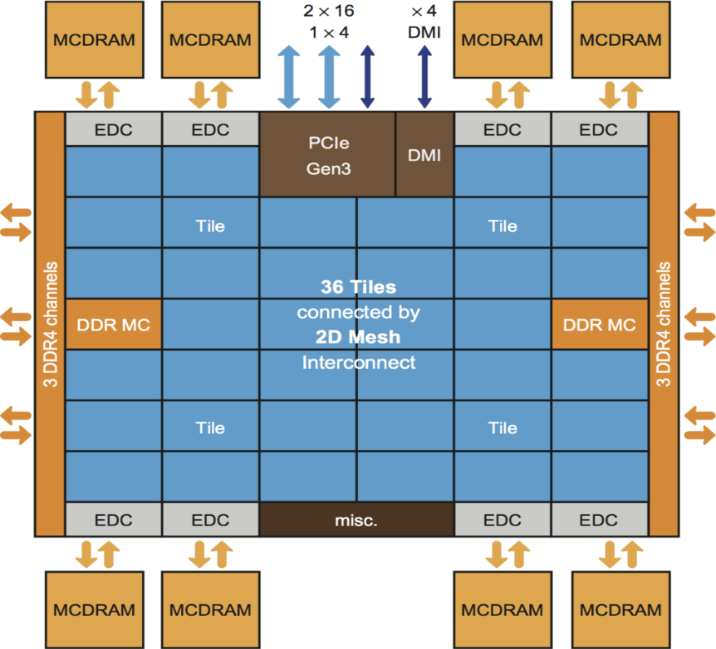

The image above shows the 7290 version of the Xeon Phi x200, which has the largest number of active tiles of all Xeon Phi versions, i.e., 36 tiles / 72 cores (+ 2 tiles / 4 cores for yield recovery). The image shows 38 tiles, which is consistent with the paper on this architecture stating that 2 tiles are for yield recovery.

Based on this information, and assuming that every x200 version physically has 38 tiles including the yield-recovery tiles, the tile and core counts for the x200 versions should be:

- Xeon Phi 7290: 36 tiles / 72 cores (+ 2 tiles / 4 cores for yield recovery)

- Xeon Phi 7250: 34 tiles / 68 cores (+ 4 tiles / 8 cores for yield recovery)

- Xeon Phi 7210: 32 tiles / 64 cores (+ 6 tiles / 12 cores for yield recovery)
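The counts above follow from a single subtraction: with 38 physical tiles and 2 cores per tile, the disabled tiles are 38 minus half the active core count. A minimal Python sketch, assuming the 38-tile die and 2 cores per tile stated in this post (the helper name `spare_tiles` is hypothetical):

```python
from __future__ import annotations

PHYSICAL_TILES = 38  # tiles on every x200 die, as assumed in the post
CORES_PER_TILE = 2   # each tile holds two cores


def spare_tiles(active_cores: int) -> tuple[int, int]:
    """Return (disabled tiles, disabled cores) for a SKU with `active_cores` active cores."""
    active_tiles = active_cores // CORES_PER_TILE
    disabled = PHYSICAL_TILES - active_tiles
    return disabled, disabled * CORES_PER_TILE


for model, cores in [("7290", 72), ("7250", 68), ("7210", 64)]:
    tiles_off, cores_off = spare_tiles(cores)
    print(f"Xeon Phi {model}: {cores // CORES_PER_TILE} tiles / {cores} cores "
          f"(+ {tiles_off} tiles / {cores_off} cores for yield recovery)")
```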

Questions:

- Which of the tiles in the image above does Intel consider to be the yield-recovery tiles?

- If core 0 is at the bottom left, then I would guess:

  - the last 6 tiles from the top right are for yield recovery on the 7210

  - the last 4 tiles from the top right are for yield recovery on the 7250

  - the last 2 tiles from the top right are for yield recovery on the 7290

- Is it correct to assume that core 0 is at the bottom left and that cores 1, 2, ..., N follow row by row toward the top?

- If not, how can I work out where core 0 is and how the cores inside the tiles in the image are numbered?

Such information can help programmers map threads for better speedup. Please share any information you have on this.


I believe the extra tiles "for yield recovery" can be any tiles on the die. They are deactivated, i.e., not detectable by the OS or the BIOS, and not connected to power.

The idea is to manufacture more tiles than you need, then detect defective tiles in the batch of CPUs, and deactivate those tiles. Depending on how many tiles they had to deactivate and on what clock speed a particular die can reliably sustain, Intel sells the chip as a 64-core, 68-core or 72-core model. Building in the extra tiles thus allows them to increase the yield, i.e., the fraction of processors that make it to the shelf rather than being rejected due to defects in a part of the die. This is how they did it with Xeon Phi x100 as well: all chips were built with 62 cores, but sold with 57, 60 or 61 active cores.
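The binning idea can be illustrated with a toy model. This is a hypothetical sketch, not Intel's actual process: it looks only at how many tiles are defect-free, ignores the clock-speed binning mentioned above, and ignores any constraint on which tiles may be disabled. It returns the largest x200 core count a die could be sold as.

```python
from __future__ import annotations

PHYSICAL_TILES = 38
# Active-tile counts of the three x200 core counts discussed in this thread.
SKU_ACTIVE_TILES = {72: 36, 68: 34, 64: 32}


def sellable_core_count(defective_tiles: set[int]) -> int | None:
    """Toy binning: largest core count whose active-tile count the die can supply.

    Hypothetical model: frequency binning and tile position are ignored.
    Returns None if too many tiles are defective for any of the three models.
    """
    good_tiles = PHYSICAL_TILES - len(defective_tiles)
    # Try the largest core count first (dict items sorted by key, descending).
    for cores, needed in sorted(SKU_ACTIVE_TILES.items(), reverse=True):
        if good_tiles >= needed:
            return cores
    return None


print(sellable_core_count(set()))          # 72
print(sellable_core_count({5, 17, 30}))    # 68
print(sellable_core_count(set(range(7))))  # None
```

Note that a perfect die still ships with 2 good tiles switched off in this model, since no x200 model in the thread uses all 38 tiles.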


Hi Andrey,

Thank you.

Does this also mean that the number of tiles for yield recovery can be any number, not just a power of 2?

Thanks.


Intel has not disclosed the details of how various processor and/or tile identification schemes map to the physical layout of the chip.

It is possible to use performance counters and microbenchmarks to derive the layout for any particular chip, but it is fairly labor intensive.

I don't know if this can be overridden by the BIOS, but on my first cluster of Xeon Phi 7250 systems, the x2APIC ID of each logical processor appears to have a fixed relationship with the location of the core on the chip. Using the CPUID instruction with eax=0x0b, the x2APIC ID is returned in edx. Reviewing the results for 452 nodes, the x2APIC IDs cover the full range expected for 38 tiles (= 76 cores = 304 threads), with each of these 68-core processors missing the x2APIC IDs corresponding to 4 pairs of cores. This suggests that the mapping of x2APIC IDs to physical position on the chip may only need to be done once.
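Given the x2APIC IDs collected on one node (for example, by running CPUID with eax=0x0b on each logical processor and reading edx), the missing core pairs can be found with a few lines of Python. This sketch rests on two assumptions that are not from Intel documentation: the low 2 bits of the x2APIC ID select the hardware thread (KNL has 4 threads per core), and the two cores of a tile have adjacent core indices. The input below is simulated, not measured data.

```python
from __future__ import annotations

from collections.abc import Iterable


def missing_core_pairs(x2apic_ids: Iterable[int], physical_tiles: int = 38,
                       smt_bits: int = 2, cores_per_tile: int = 2) -> list[int]:
    """Return core-pair indices (0..physical_tiles-1) with no x2APIC ID present.

    Assumes x2APIC ID = (core_index << smt_bits) | thread and that the two cores
    of a tile have adjacent core indices; neither is documented by Intel.
    """
    present = {(apic_id >> smt_bits) // cores_per_tile for apic_id in x2apic_ids}
    return [pair for pair in range(physical_tiles) if pair not in present]


# Simulated 68-core Xeon Phi 7250 with four core pairs fused off.
fused_off = {3, 10, 21, 37}
ids = [(core << 2) | thread
       for core in range(76) if core // 2 not in fused_off
       for thread in range(4)]
print(len(ids))                 # 272 (68 cores x 4 threads)
print(missing_core_pairs(ids))  # [3, 10, 21, 37]
```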

Unfortunately, the "tile numbers" (used in the CHA indexing, for example) are not directly tied to the co-located core numbers. The uncore performance monitoring guide lists MSRs for all 38 tiles, but my experiments suggest that the disabled tiles have their CHA numbers shifted to the end of the list. That is, the 68-core Xeon Phi 7250 may have many different patterns of disabled cores, but all of them map the active CHAs to the range 0-33, with the CHAs on the disabled tiles mapped to tile numbers 34-37. It is not clear whether this remapping is guaranteed to be done in the same way every time, so I gave up on trying to reverse engineer the layout. (The layout of the tiles is more interesting to me than the layout of the cores, since I am most interested in the mesh traffic counters.)
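The renumbering described above (active CHAs packed into 0-33, disabled tiles pushed to 34-37) can be modeled as a compaction of physical tile indices. This is a hypothetical sketch: it additionally assumes that active tiles keep their relative physical order, which the observation above does not establish.

```python
from __future__ import annotations


def cha_numbers(enabled: list[bool]) -> dict[int, int]:
    """Map physical tile index -> CHA number under an assumed compaction.

    Active tiles get CHA numbers 0..n_active-1 in physical order; disabled tiles
    get the remaining numbers. The ordering is an assumption, not Intel-documented.
    """
    active = [tile for tile, on in enumerate(enabled) if on]
    disabled = [tile for tile, on in enumerate(enabled) if not on]
    return {tile: cha for cha, tile in enumerate(active + disabled)}


enabled = [tile not in {3, 10, 21, 37} for tile in range(38)]
mapping = cha_numbers(enabled)
print(mapping[4])                             # 3: later active tiles shift down
print([mapping[t] for t in (3, 10, 21, 37)])  # [34, 35, 36, 37]
```

Even if this model held, recovering physical positions from CHA numbers would still require knowing which tiles are disabled on each chip.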


>>all of them map the active CHAs to the range 0-33, with the CHAs on the disabled tiles mapped to tile numbers 34-37.

That seems to be the reasonable thing to do, and it is a side effect of the (x2)APIC IDs being compressed by encapsulating scope (HT within core, core within tile, tile within die, die within package, package within/upon motherboard, motherboard amongst motherboards).
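The scope nesting described above corresponds to the CPUID leaf 0x0B decomposition: each topology level reports in EAX[4:0] how many low bits of the x2APIC ID to shift away to reach the next level's ID. A minimal two-level sketch (SMT and core, with everything above treated as the package ID), assuming subleaf 0 reports the SMT level and subleaf 1 the core level (the level type in ECX[15:8] should be checked on real hardware). The shift values in the example are made up for illustration.

```python
from typing import NamedTuple


class X2ApicFields(NamedTuple):
    package: int
    core: int
    thread: int


def decode_x2apic(x2apic_id: int, smt_shift: int, core_shift: int) -> X2ApicFields:
    """Split an x2APIC ID into package/core/thread fields.

    smt_shift:  EAX[4:0] from CPUID leaf 0x0B, SMT-level subleaf
    core_shift: EAX[4:0] from CPUID leaf 0x0B, core-level subleaf
    """
    thread = x2apic_id & ((1 << smt_shift) - 1)
    core = (x2apic_id >> smt_shift) & ((1 << (core_shift - smt_shift)) - 1)
    package = x2apic_id >> core_shift
    return X2ApicFields(package, core, thread)


# Illustrative shift values only: 2 thread bits, 7 core bits.
print(decode_x2apic((1 << 9) | (5 << 2) | 3, smt_shift=2, core_shift=9))
# X2ApicFields(package=1, core=5, thread=3)
```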

>> It is not clear whether this remapping is guaranteed to be done in the same way every time, so I gave up on trying to reverse engineer the layout.

My suspicion is that the (x2)APIC IDs generated are deterministic for any given set of physically enabled hardware threads, but this is just a suspicion. The thread placement routine that I wrote and use does not distinguish between tiles within a die, except for KNL in SNC2 or SNC4 mode, where subsets of tiles reside within a NUMA node. In other words, I do not (cannot) determine the placement (north, south, distance) within the node.

Jim Dempsey
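For the SNC case mentioned above, Linux lists the logical CPUs of each NUMA node in `/sys/devices/system/node/nodeN/cpulist`, using a range format such as `0-3,8-11`. A small sketch that parses that format and builds a node-to-CPUs map; memory-only nodes (such as MCDRAM in flat mode) have an empty list. The helper names are hypothetical.

```python
from __future__ import annotations

import glob
import os


def parse_cpulist(text: str) -> list[int]:
    """Parse a Linux cpulist string such as '0-3,8-11' into [0, 1, 2, 3, 8, ...]."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # memory-only nodes have an empty cpulist
        lo, _, hi = part.partition("-")
        cpus.extend(range(int(lo), int(hi or lo) + 1))
    return cpus


def cpus_per_node(root: str = "/sys/devices/system/node") -> dict[int, list[int]]:
    """Return {node id: logical CPUs} from sysfs (empty dict if the path is absent)."""
    nodes: dict[int, list[int]] = {}
    for path in glob.glob(os.path.join(root, "node[0-9]*", "cpulist")):
        node_dir = os.path.basename(os.path.dirname(path))  # e.g. "node0"
        with open(path) as f:
            nodes[int(node_dir[len("node"):])] = parse_cpulist(f.read())
    return nodes


print(parse_cpulist("0-3,8-11"))  # [0, 1, 2, 3, 8, 9, 10, 11]
print(cpus_per_node())
```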


The x2APIC IDs appear to have a 1:1 mapping to physical location on KNL, but not on other Intel processors. On both Xeon E5 v4 (Broadwell) and Xeon Scalable Platinum, I see the same set of x2APIC IDs on every chip, even when the bit masks in CAPID6 show a different pattern of enabled and disabled cores.
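A core-enable mask such as the one in CAPID6 can be expanded into the list of enabled core slots. This sketch treats the mask as a plain integer with one bit per core slot (bit i = slot i, an assumption); how to read CAPID6 itself is not covered here, and the mask values below are invented.

```python
def enabled_positions(mask: int, width: int) -> list[int]:
    """Return the bit positions set in `mask`, i.e. the enabled core slots (assumed layout)."""
    return [i for i in range(width) if (mask >> i) & 1]


# Two hypothetical 4-of-6 chips: different physical patterns, same core count.
chip_a = enabled_positions(0b101101, 6)
chip_b = enabled_positions(0b011110, 6)
print(chip_a, chip_b)  # [0, 2, 3, 5] [1, 2, 3, 4]
```

Since the same x2APIC IDs appear on every such chip, those IDs alone cannot tell chip_a from chip_b; only the mask carries the physical pattern.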


Respected all,

Sir, can you advise me whether an Intel i7 or an Intel Xeon is the better platform for 3ds Max rendering work?

We are building a new PC for 3ds Max work, but we can't decide which platform will be better for the future.

Please suggest a full configuration for a new PC build.

Thanks & regards,

Jagdish, Mumbai, India

jagdish.vishwakarma.46@gmail.com
