- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

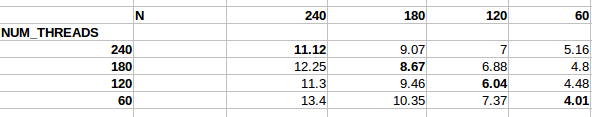

For 240 iterations on Xeon Phi, 240 threads give the best time, for 180 iterations, 180 threads give the best time, and similarly for 120 and 60. Shouldn't we expect that for even 120 and 180 iterations, 240 threads would've given the best times? If not, then why, ie, please provide some explanation or pointers to some document. (please note that iterations take the same time, programming language is fortran)

Thanks in advance.

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I can't tell whether you're falling for the "complete divisibility of work" fallacy, or are asking about resource contention. So, let's try a few questions...

Q1: If it takes one woman nine months to gestate a baby, how long does it take nine women?

A: Nine months, with eight of the women uninvolved in the baby-production.

Q2: If it takes one person one minute to drill a hole on the wall, how long does it take ten people to drill the same hole?

A: (As above) One minute, more people don't help.

Q3: If it takes one person one minute to drill a hole in the wall, how long does it take ten people to drill ten holes?

A: If they have 10 drills, then one minute, but if there's only one drill it'll take ten minutes, or more if they spend time fighting over the drill.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If we've 180 units of work and 180 people, where 1 person takes 1 unit time to finish the work, then 180 people will take 1 unit time to finish 180 units of work (assuming complete divisibility). If we have 240 people, and 180 units of work, then they should ideally take 1 unit to finish. But they're taking more. Why is it so, ie, resource contention? How to know what's the contention?

Also, what is the "complete divisibility of work" fallacy?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Take a look at my blog, How to Plot OpenMP* Scaling Results which discusses one of the issues you may be having (not all threads are equal).

The "complete divisibility of work fallacy" is the assumption in Amdahl's law that you can divide some finite amount of work into an arbitrarily small number of chunks to allow you to use an arbitrarily large number of hardware threads to execute it.

How to know what's the contention?

Use VTune. (But, more generally, it's unlikely that you can tune your problem size to exactly match the number of hardware threads that are optimal, so even on the simple bin-packing theory, you 'd expect a lot of inefficiency, which is why a good rule of thumb is to have ten-times as many iterations as threads [which guarantees you ~90% efficiency]).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It is not clear what is meant by "iterations" in the initial posting -- a simple example (with wall-clock timings) would be helpful...

The unexpected timings that you are seeing could easily be due to incorrect thread placement:

- For example, with 180 units of work and 240 threads, it is rather easy to assign 4 units of work to each of the first 45 physical cores and 0 units of work to each of the last 15. This would certainly be slower than assigning 3 units of work to each of the first 60 physical cores. The same issue applies to the cases of 120 units of work and 60 units of work.

If the time required per unit of work is small, then unexpected timings could be due to synchronization overhead. For example, an OpenMP parallel DO has more overhead for 240 threads than for 180 threads. Even if 180 units of work are distributed uniformly, this additional synchronization overhead will make the 240-thread case more expensive:

- Using the EPCC OpenMP Benchmarks in C, my most recent measurements on the first generation Xeon Phi (Knights Corner) show that the OpenMP PARALLEL FOR construct has an overhead of about 20 microseconds for 60 threads on 60 cores, increasing to about 27 microseconds for 240 threads on 60 cores.

- The overhead on the second generation Xeon Phi is much lower -- just under 6 microseconds for 68 threads on 68 cores, increasing to about 12 microseconds for 272 threads on 68 cores.

If the thread count is changed within the program, additional overheads apply, and these overheads are fairly large on Xeon Phi.

If the threads are not bound properly, almost nothing useful can be deduced from timing measurements.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Other factors are if your "iteration", do work code, performs a library call that contains a critical section, or other means for serialization, then only one thread at a time can execute the critical section (other means for serialization). A common problem new users to parallel programming encounter is writing a dummy do work piece of code that calls a (serializing) random number generator. Or is performing file I/O.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For Intel(r) Xeon Phi KNC, a typical situation for efficiently parallel compiled code is a 60% speedup from 1 to 2 threads per core, and a further 10% speedup to 3 threads per core, if cache capacity considerations don't limit speedup. This is connected with the ability of the single VPU per core to take input from 1 thread every 2 cycles. In early versions of Intel compilers for KNC, the option to optimize code for a specific number of threads per core was needed.

For the KNL, it is quite possible to get full performance at 1 thread per core, with threads pinned properly to account for pairs of cores sharing cache tiles. Ideally, the choice of number of threads per core may not be as critical as it was for KNC. It is possible for each of 2 VPUs per core to take input even from a single thread.

Evidently, no one is sure what question is being asked in this thread. I'm guessing the question is predicated on an openmp parallel loop with the specified number of iterations, with enough work balanced among iterations for effective static scheduled performance.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, Sorry for being sloppy. (Please tell if the details below are also incomplete) -

The machine is Xeon Phi 7120P (KNC). Compiler is icc 15.0.2. The application is CAM5 (by NCAR). Following is the pseudocode (very small part of CAM5 physics routines) -

begin = omp_get_wtime()

!dir$ offload begin

!$omp parallel do default (shared) num_threads(NUM_THREADS)

do i = 1,N

call "function_name" with the arguments as the ith input array index

end do

!dir$ offload end

end = omp_get_wtime()

total = total + (end - begin)

The above "function_name" also contains call to a random number generator, and there is no IO being done (commented out). The thread affinity used is scatter (this document says - Specifying scatter distributes the threads as evenly as possible across the entire system. scatter is the opposite of compact; so the leaves of the node are most significant when sorting through the machine topology map.)

Following is the timing result with different values of N and NUM_THREADS. Please note that these timings (which are in seconds) are for 49 offloads (or above code executed 49 times), and includes the copy time, computation time, and the initial overheads, ie, the value of "total" in the code above after executing the above for 49 times.

Thanks in advance.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

>>The above "function_name" also contains call to a random number generator

If the random number generator is called heavily, then:

Depending on random number generator, it may use a critical section. meaning code within critical section is serialized for duration of the critical section. Either move the generation of the random numbers out of the parallel section .OR. use multi-threaded, non-blocking, random number generator.

If the random number generator is called lightly, then:

Then the above chart is indicative of the function (by all threads) has reached a memory bandwidth limitation.

If the above does not fit your situation, can you show your function.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It should become obvious that if the function performs serializing procedures (e.g. I/O, DB queries, ...) that this will cause the observed behavior.

Jim Dempsey

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page