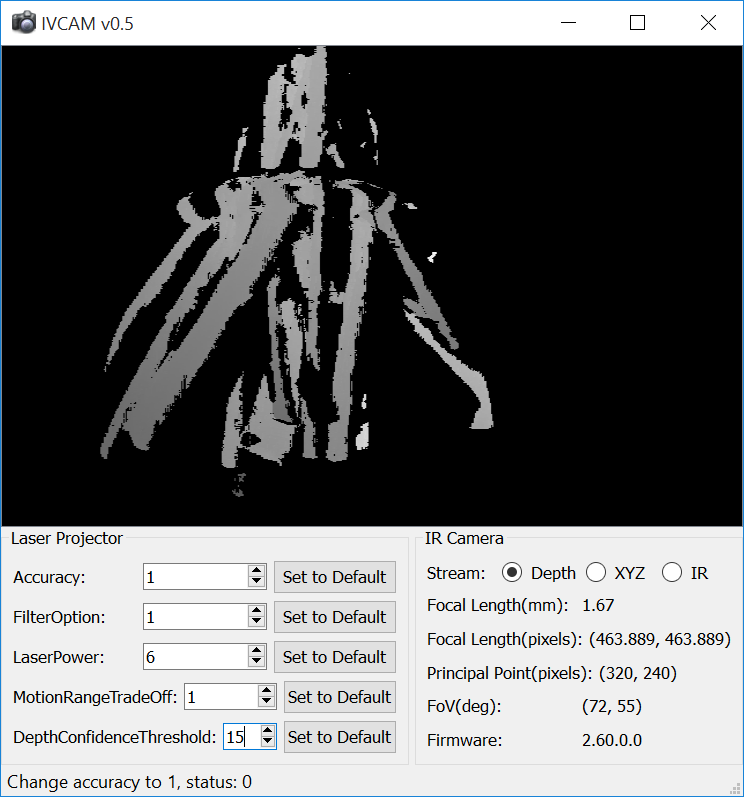

IVCAM v0.5 is a free utility that I made that allows you to do the following with your Intel RealSense F200 camera:

- Read and write camera and laser projector settings.

- Display depth, XYZ (color coded), or IR streams in real time while you are changing those settings.

Requirements are either:

a) Laptop with a built-in RealSense F200 camera

or

b) Computer with the following:

- 32 or 64 bit Windows 8.1 or Windows 10.

- DCM installed (to use the USB version of the F200 camera).

This is how it currently looks:

You can download it from here:

http://www.samontab.com/web/files/ivcam.zip

Thank you Sebastian! Great add to the community!

(In a few days I'll move it up to a post note to stay near the top).

The RealSense camera has a laser in it? I thought the depth sense data stream was infrared using the time-of-flight algorithm?

Robert

OK, here is how this camera works:

The Intel RealSense camera has an IR laser projector that emits IR light into the scene, and an IR camera that reads the emitted pattern. Based on the displacement of the pattern caused by objects in the scene, it can calculate the distance of those objects from the camera. This general method of calculating depth is known as structured light, and it is how other 3D cameras, like the original Kinect, work.

In the following image you can see the IR light being emitted by the camera onto a wall that was a few cm away. (I expected a pincushion distortion in both axes, but it seems that only the vertical axis is distorted).
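To make the "displacement of the pattern" idea concrete, here is a minimal sketch of the usual triangulation relation for a projector and camera pair, where depth is inversely proportional to the observed shift. This is the textbook pinhole model, not necessarily what the F200 firmware does, and the focal length and baseline values are made-up numbers for illustration only.

```python
def depth_from_disparity(focal_length_px: float, baseline_mm: float,
                         disparity_px: float) -> float:
    """Return the depth in millimetres of one pattern feature.

    Textbook triangulation: depth = focal_length * baseline / disparity.
    A larger shift of the pattern means the object is closer.
    """
    if disparity_px <= 0:
        raise ValueError("disparity_px must be positive")
    return focal_length_px * baseline_mm / disparity_px


# Hypothetical values, NOT the real F200 calibration.
FOCAL_LENGTH_PX = 480.0
BASELINE_MM = 25.0

for shift in (60.0, 30.0, 15.0):
    depth = depth_from_disparity(FOCAL_LENGTH_PX, BASELINE_MM, shift)
    print(f"pattern shift {shift:5.1f} px -> depth {depth:7.1f} mm")
```

Halving the shift doubles the estimated distance, which is why far objects need a cleaner pattern to be measured well.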

Now, about the IVCAM settings:

The LaserPower setting controls the intensity of this laser projection, from 0 (powered off) up to 16 (maximum power). Usually you will leave this setting at 16.

MotionRangeTradeOff: The difference here is that the pattern used in this camera is not a fixed set of points, as is the case with the Kinect, but a moving pattern that sweeps from top to bottom. It seems that the rate at which the pattern is projected is controlled by this setting. The larger the number, the longer the vertical pattern gets stretched, so fewer patterns per second are emitted and detected. This lets the camera gather more light, and therefore detect objects farther away, but when those objects move the pattern is not detected correctly, so you get no depth info for fast-moving objects far away. The reverse is true for smaller numbers. You can think of this parameter as exposure time in normal camera terms.

Here is an image of the pattern. Because the image was exposed for too long (normal camera rates, such as 30 fps), the pattern looks blurred in the vertical direction, but some information can be seen along the horizontal axis:

If you decrease the exposure time, you can actually see the pattern that is being projected onto the wall:
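The exposure-time analogy can be put into numbers with a toy model. The sketch below assumes you know how long one pattern sweep takes; how a MotionRangeTradeOff value maps to an actual sweep time is not something I know, so the function takes the period directly, and all names and numbers are hypothetical.

```python
def sweep_tradeoff(period_ms: float, object_speed_mm_s: float) -> dict:
    """Toy model of the range vs. motion trade-off for one pattern sweep.

    period_ms is the (assumed) time one full pattern takes to project.
    """
    if period_ms <= 0:
        raise ValueError("period_ms must be positive")
    return {
        # Longer sweeps mean fewer patterns are emitted each second.
        "patterns_per_second": 1000.0 / period_ms,
        # Light gathered is taken as proportional to the sweep time.
        "relative_light": period_ms,
        # How far the object travels while one pattern is being projected.
        "movement_mm": object_speed_mm_s * period_ms / 1000.0,
    }


for period in (5.0, 10.0, 20.0):
    print(period, sweep_tradeoff(period, object_speed_mm_s=500.0))
```

Doubling the sweep time doubles both the light gathered and the distance a moving object travels during one pattern, which is the trade-off described above.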

Note that the pattern is projected so that it is seen straight, even though the illuminated area is not.

Accuracy: This parameter switches between 3 types of projected patterns, from coarse to fine grained.

FilterOption: I think this parameter only affects the post-processing and creation of the depth map. Given the initial points, this option selects the method used to interpolate the rest of the frame. There are a few different options here. Look at the SDK manual for more details.
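If you want to keep a group of these settings together in your own code, a small container like the one below could work. This is only a sketch: the class and field names are hypothetical and are not the SDK's API, the name of the middle accuracy level is my guess, and only the LaserPower range (0 to 16) is checked, because that is the only range given above.

```python
from dataclasses import dataclass
from enum import Enum


class Accuracy(Enum):
    """Three pattern types, coarse to fine (the middle name is assumed)."""
    COARSE = 0
    MEDIUM = 1
    FINE = 2


LASER_POWER_MIN = 0   # projector powered off
LASER_POWER_MAX = 16  # maximum power


@dataclass(frozen=True)
class IvcamSettings:
    """Hypothetical container for the four settings discussed above."""
    motion_range_tradeoff: int
    accuracy: Accuracy
    filter_option: int
    laser_power: int = LASER_POWER_MAX  # usually left at maximum

    def __post_init__(self) -> None:
        if not LASER_POWER_MIN <= self.laser_power <= LASER_POWER_MAX:
            raise ValueError(
                f"laser_power must be in "
                f"{LASER_POWER_MIN}..{LASER_POWER_MAX}, got {self.laser_power}"
            )


settings = IvcamSettings(motion_range_tradeoff=10,
                         accuracy=Accuracy.FINE,
                         filter_option=1)
print(settings)
```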

I hope these parameters make a bit more sense now.

Samontab,

My understanding is that the RealSense camera uses time-of-flight, not structured light. Here is a description of the camera that www.eetasia.com posted on January 9th:

"It comprises a conventional HD RGB sensor, a laser that emits 16 different modulated signals and an IR sensor to detect the time-of-flight of the transmitted laser signals and compute distance."

This article also mentions that ASUS and Lenovo are marketing laptops with the camera in the Netherlands.

However, a user comment on an article at PC Perspective (www.pcper.com) in October 2014 stated that RealSense uses structured light depth sensing, as did Kinect v1, whereas the first version used time-of-flight. Perhaps this is the source of the confusion.

By the way, Kinect v2 now uses time-of-flight.

Maybe someone from Intel can clear up the debate.

Regards,

Jack

Thank you Sebastian, this has helped me a lot.

Regards,

Sam

The previous "Perceptual" camera was a rebranded SoftKinetic DepthSense device, which was a time-of-flight camera.

I'd be really surprised if they had totally changed the device between the beta and the gold release. The resolutions, range, etc. are mostly the same, so I'm 90% sure it's still time-of-flight.

Looking at Samontab's excellent pictures of patterned (structured) light would seem to answer the question.

The Perceptual camera was totally different from the RealSense F200. (Similarly, future RealSense cameras may use different technology than the F200.)

@samontab and others. Thank you for the information!

Robert

Very useful tool and topic.

Thanks!

Hi guys,

I just updated this tool:

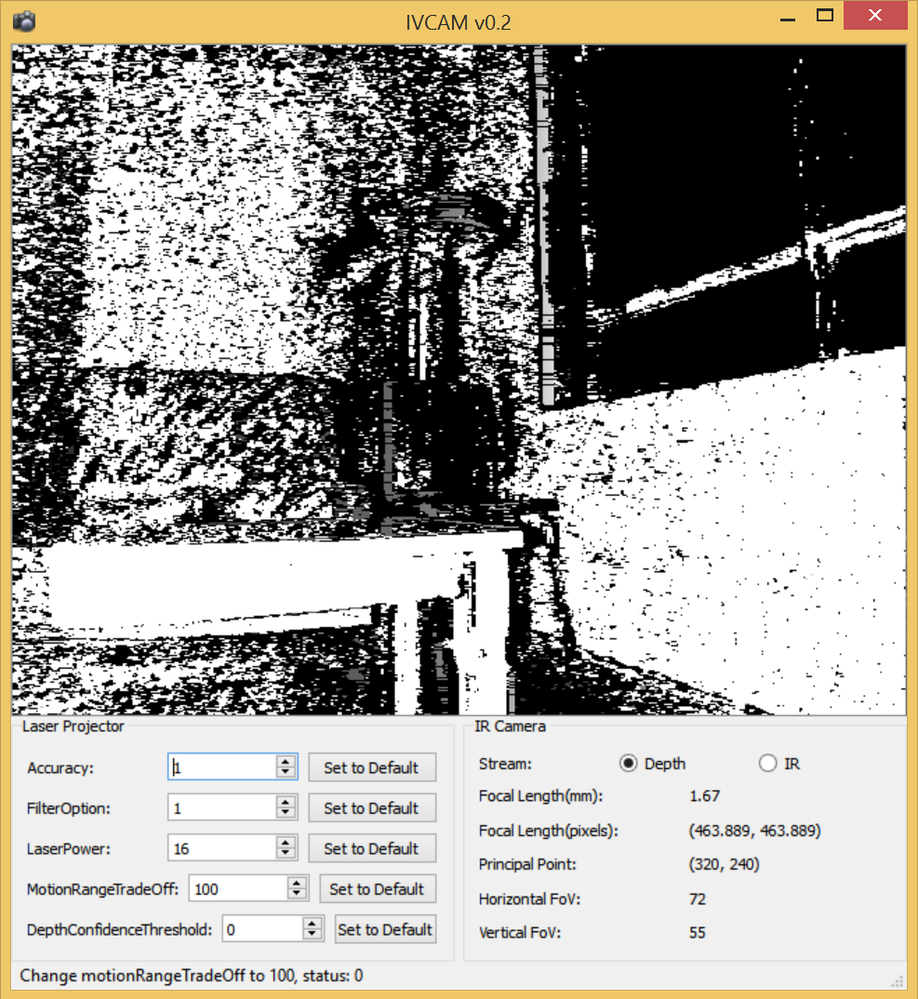

IVCAM v0.2

- Updated camera frame access for better performance.

- Added DepthConfidenceThreshold to the controls.

- Added individual buttons to set a feature to its default value.

- Added tool tips explaining the feature values.

- Added IR Camera information details.

Here is a screenshot of it, showing a longer range capability by tweaking the parameters:

This version should work better in general than the previous one. So if you had any issues before, give it a try again.

You can still get it at the same place:

The longer range is definitely something I'm interested in, thanks!

Regards,

Sam

Nice post. Thank you all!

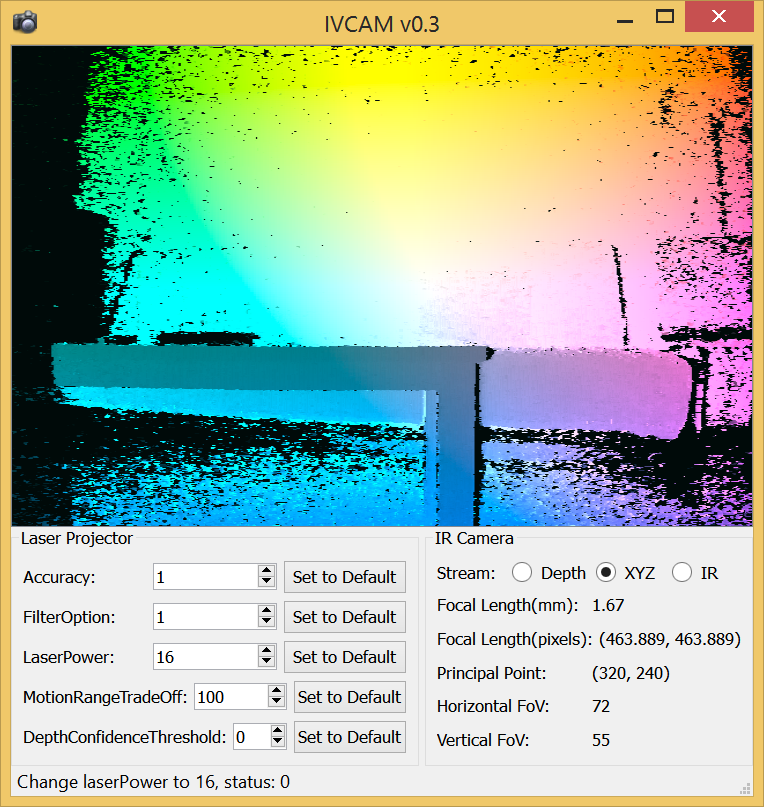

Another update to this tool:

IVCAM v0.3

- Added XYZ camera stream (Lab color space coded image based on XYZ position of each pixel, X and Y for color, Z for lightness)

- Updated depth stream view to display the entire depth range correctly (You should now see more gray scale tones, not mostly just black and white as it was before)

- Minor bug fixes
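For anyone wondering what "display the entire depth range" means in practice, one common approach is to stretch the valid depth values of each frame over the full 0 to 255 gray range. The sketch below shows that idea on a flat list of depth values; it is an illustration under my own assumptions (min/max stretching, 0 used as the invalid marker), not the exact code used in the tool.

```python
def depth_to_gray(depth_mm: list[int], invalid: int = 0) -> list[int]:
    """Map depth values to 8-bit gray levels using a min/max stretch.

    Pixels equal to `invalid` are drawn black and are ignored when
    the depth range of the frame is measured.
    """
    valid = [d for d in depth_mm if d != invalid]
    if not valid:
        return [0] * len(depth_mm)
    nearest, farthest = min(valid), max(valid)
    span = (farthest - nearest) or 1  # avoid dividing by zero on flat frames
    return [
        0 if d == invalid else round(255 * (d - nearest) / span)
        for d in depth_mm
    ]


# One invalid pixel followed by three valid depths in millimetres.
print(depth_to_gray([0, 200, 600, 1200]))
```

Without the stretch, 16-bit depth values squeezed straight into 8 bits tend to collapse into near-black and near-white, which matches the old behaviour described in the list above.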

You can still get it at the same place:

I wrote similar software for Linux, and recorded a YouTube demo of adjusting the controls. https://www.youtube.com/watch?v=Ht9PzVjWOgI

Nice to hear that Daniel!

I'm also developing a Linux version of the SDK for the Intel RealSense camera, as I usually prefer to use Linux. It looks promising because as you could see, the OS detects the camera without an issue. Actually the app that I published here is cross platform, so once I finish the Linux access to the camera, this app will be able to run in Linux as well.

Regarding your depth pixel format mystery, the camera actually has 6 depth formats, as seen in the documentation:

Here are the 6 depth formats explained:

PIXEL_FORMAT_Y8

The 8-bit gray format. Also used for the 8-bit IR data. See fourcc.org for the description and memory layout.

PIXEL_FORMAT_Y8_IR_RELATIVE

The IVCAM specific 8-bit IR data containing the I1-I0 IR image. I1 is the IR image with the coded pattern on and I0 is the IR image with the coded pattern off.

PIXEL_FORMAT_Y16

The 16-bit gray format. Also used for the 16-bit IR data. See fourcc.org for the description and memory layout.

PIXEL_FORMAT_DEPTH

The depth map data in 16-bit unsigned integer. The values indicate the distance from an object to the camera's XY plane or the Cartesian depth. The value precision is in millimeters.

A special depth value is used to indicate low-confidence pixels. The application can get the value via the QueryDepthLowConfidenceValue function.

PIXEL_FORMAT_DEPTH_RAW

The depth map data in 16-bit unsigned integer. The value precision is device specific. The application can get the device precision via the QueryDepthUnit function.

PIXEL_FORMAT_DEPTH_F32

The depth map data in 32-bit floating point. The value precision is in millimeters.

A special depth value is used to indicate low-confidence pixels. The application can get the value via the QueryDepthLowConfidenceValue function.
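As a rough illustration of how the PIXEL_FORMAT_DEPTH and PIXEL_FORMAT_DEPTH_F32 layouts described above could be read from a raw buffer, here is a minimal sketch. It assumes little-endian data and that you already obtained the low-confidence marker from QueryDepthLowConfidenceValue; the function names are hypothetical and this is not SDK code.

```python
import struct


def decode_depth_u16(buffer: bytes, low_confidence_value: int) -> list:
    """Decode 16-bit unsigned depth values (millimetres).

    Low-confidence pixels are returned as None.
    """
    if len(buffer) % 2:
        raise ValueError("buffer length must be a multiple of 2 bytes")
    count = len(buffer) // 2
    values = struct.unpack(f"<{count}H", buffer)  # assumed little-endian
    return [None if v == low_confidence_value else v for v in values]


def decode_depth_f32(buffer: bytes, low_confidence_value: float) -> list:
    """Decode 32-bit float depth values (millimetres)."""
    if len(buffer) % 4:
        raise ValueError("buffer length must be a multiple of 4 bytes")
    count = len(buffer) // 4
    values = struct.unpack(f"<{count}f", buffer)  # assumed little-endian
    return [None if v == low_confidence_value else v for v in values]


# Fake frame data for illustration; 0 stands in for the low-confidence marker.
frame = struct.pack("<4H", 500, 0, 1200, 65535)
print(decode_depth_u16(frame, low_confidence_value=0))
```

For PIXEL_FORMAT_DEPTH_RAW the same 16-bit decoding would apply, but each value would still need scaling by whatever QueryDepthUnit reports.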

I guess the mystery would be what the YUV stream looks like, as it seems that it does not belong there.

Best of luck, and keep up the good work!

PS: I wish Intel could have some of those awesome hacking events here in Sydney, Australia...

Hi Daniel, great work! Thanks for sharing it here.

I am also working on a Linux version of the SDK for the camera, as I prefer to use Linux. It seems promising because, as you saw, the camera seems to be working fine out of the box. Once I'm finished with that, this app would be cross platform.

Here is some help with your format mystery. The camera actually has 6 documented depth pixel formats:

PIXEL_FORMAT_Y8

The 8-bit gray format. Also used for the 8-bit IR data. See fourcc.org for the description and memory layout.

PIXEL_FORMAT_Y8_IR_RELATIVE

The IVCAM specific 8-bit IR data containing the I1-I0 IR image. I1 is the IR image with the coded pattern on and I0 is the IR image with the coded pattern off.

PIXEL_FORMAT_Y16

The 16-bit gray format. Also used for the 16-bit IR data. See fourcc.org for the description and memory layout.

PIXEL_FORMAT_DEPTH

The depth map data in 16-bit unsigned integer. The values indicate the distance from an object to the camera's XY plane or the Cartesian depth. The value precision is in millimeters.

A special depth value is used to indicate low-confidence pixels. The application can get the value via the QueryDepthLowConfidenceValue function.

PIXEL_FORMAT_DEPTH_RAW

The depth map data in 16-bit unsigned integer. The value precision is device specific. The application can get the device precision via the QueryDepthUnit function.

PIXEL_FORMAT_DEPTH_F32

The depth map data in 32-bit floating point. The value precision is in millimeters.

A special depth value is used to indicate low-confidence pixels. The application can get the value via the QueryDepthLowConfidenceValue function.
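The I1-I0 description of PIXEL_FORMAT_Y8_IR_RELATIVE can be illustrated with a per-pixel subtraction. The sketch below works on two flat lists of 8-bit IR values and clamps negative differences to zero; the clamping is my assumption, since the documentation quoted above does not say how negative values are handled.

```python
def ir_relative(pattern_on: list[int], pattern_off: list[int]) -> list[int]:
    """Return the I1 - I0 image: IR with the pattern on minus pattern off.

    Subtracting the pattern-off frame removes ambient IR, leaving mostly
    the light contributed by the projected pattern.
    """
    if len(pattern_on) != len(pattern_off):
        raise ValueError("both frames must have the same number of pixels")
    # Clamp at 0 so the result still fits in an unsigned 8-bit pixel.
    return [max(0, on - off) for on, off in zip(pattern_on, pattern_off)]


print(ir_relative([120, 40, 200], [100, 50, 20]))
```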

The only mystery that I see here is the YUV pixel format. It doesn't really make much sense for it to be there. I wonder what that image looks like...

I hope it helps!

PS: I wish Intel would have those awesome hacking events here in Sydney as well!

I saw the pixel formats in both the documentation and the USB descriptors, but whatever I select, I get 16 bit per pixel data. I looked for interleaved frames and multiplanar formats, and didn't find anything there either. The YUV format effectively shows up as 16 bit little endian depth values too. I'm going to try to figure out how to get the IR stream tomorrow.
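A quick way to check what a selected format really delivers is to divide the size of one frame by the number of pixels. This is only a sketch of that sanity check; the 640x480 resolution below is an example value, and the function name is made up.

```python
def bits_per_pixel(frame_size_bytes: int, width: int, height: int) -> float:
    """Infer the bits per pixel from the size of one captured frame."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    return 8 * frame_size_bytes / (width * height)


# Example: a 640x480 frame that arrives as 614400 bytes.
print(bits_per_pixel(614400, 640, 480))
```

A true 8-bit format at the same resolution would come out at 8.0, and a non-integer result would hint at padding or a packed or multiplanar layout.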

Hmm, that sounds like the stream selection may not be working. I am currently requesting the pixels with PIXEL_FORMAT_Y8, and using only 8 bits per pixel to create the depth image, and it is working fine. So I am not seeing the 16 bit per pixel data that you receive regardless of the format you select.

New SDK breaks this utility

Yes Vlad, this was expected.

I was reading the details in the Release Notes of the updated library and there are a few changes to the API, so probably one of the methods I'm using is not supported in this release.

Thanks for testing it!

When I upgrade the library, I will port the application to the new API. For the moment, just the R1 version is supported.
