- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi All,

I am running Intel Caffe for AlexNet (512 iterations, 256 batch size) on Xeon Phi. First with Scatter 128 threads and then with Balanced 128 threads. I observed following

Run Time:

1) Scatter: 7.52 minutes

2) Balanced: 11.28 minutes

Number of Instructions (user space):

1) Scatter: 4.18671E+13

2) Balanced: 5.10185E+13

Questions:

1) Why is Scatter beating Balanced? With 128 threads, in Balanced we have sequential threads (0-1, 1-2 etc) running on same core compared to Scatter. This way Balanced should out perform Scatter?

2) Even the number of instruction executed by Scatter is less. Why? The input (training image) and output (weights) are identical, then why do I see instruction difference?

OpenMP environment variable used:

1) Scatter: export OMP_NUM_THREADS=128 export KMP_AFFINITY=scatter,granularity=fine

2) Balanced: export OMP_NUM_THREADS=128 export KMP_AFFINITY=balanced,granularity=fine

Please share your thoughts on this.

Thanks.

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As discussed in your other thread, it's easier to do scaling experiments and understand what's going on if you use KMP_HW_SUBSET and either scatter or compact affinities. However since you do have a number of threads which is exactly divisible by the number of cores here, balanced is identical to compact.

The real questions, then, are

- Why is compact so much worse than scatter?

- Why does the number of instructions change?

Why is compact worse?

Whether compact or scatter affinity works better is a property of the application. Consider, for instance, what happens if you have a statically scheduled triangular loop like this running on 5 cores with 2T/C (i.e. 10 threads)

#pragma omp parallel for

for (int i=0; i<10; i++)

for (int j=0; j<i; j++)

... do something that takes constant time ...

If you use a compact schedule, then the distribution of threads to cores will be (0,1), (2,3), ... so the number of iterations executed by each core will be (0+1), (2+3)... (8+9), however with a scatter affinity the distribution of threads to cores is (0,5),(1,6) , and the similarly the work will be (0+5), (1+6), ... (4+9) in either case there remains imbalance, but in the compact case that is between 1 and 17 iterations per core whereas in the second (scatter) case it's between 5 and 13.

Of course, that's a naive view, though :-). What really matters is whether the core can re-allocate the out-of-order resources between the two threads when one of them has no work. If we consider that and use a model in which the work takes 1 unit of time when run with 1T/C but 2 when run with 2T/C, we can see that the critical aspect is the last core. With the compact schedule it will take (8*2)+1 = 17 units of time, whereas in scatter (4*2)+5 = 13 units of time.

It is also possible that compact is better than scatter; that frequently happens if neighbouring threads are accessing the same(or nearby) data; then data which is brought into the cache by one thread can be used by the other, avoiding a costly memory access.

Thus, as ever, the answer as to which is better is "It depends..." with the corollary that you have to measure it and understand your code.

Why different numbers of instructions?

That should be obvious by now. Threads which are waiting may be executing polling loops

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi James,

This is getting really confusing for me. You said for my case above, balanced is equal to compact. I don't think that is correct.

Number of cores for each of the thread affinity scheme:

1) Scatter: 128 threads = 64 cores with 0-63 allocated to first thread id of each core, then 64-127 allocated to second thread id of each core.

2) Compact: 128 threads = 32 cores with 0-127 allocated from core 0 to core 31 with all fours threads each core can handle filled up before moving to next one

3) Balanced: 128 threads = 64 cores with 0-64, 1-65 and so on allocated first and by the time 63rd core allocation arrive, all 128 thread is allocated.

Then how can (3) be same as (2)?

Also, if Intel OpenMP has an option to use "KMP_AFFINITY=balanced" (unless you say above use of balanced with this flag is incorrect) then why should I rely on "KMP_HW_SUBSET".

Sorry if this is getting confusing from my side.

Thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Chetan,

What James was trying to point out it the loop he has sketched is a static scheduled loop where the outer loop is that which is partitioned. The outer loop iteration range is evenly partitioned amongst workers, however each worker's inner loop iteration range, per iteration of the outer loop, is dependent upon the specific index value acquired from the outer loop. IOW the workload of each thread is not equivalent. The thread processing the lowest indexes of the outer loop has much less work to do than the threads processing the highest indexes of the outer loop. As to if this is how the the test program you run (Caffe) I cannot say as I haven't seen your code.

To evenly distribute the work, you may have to restructure your loops:

int N = 1000; // whatever outer count is (e.g. N as in N-Body)

int iMax = N*N / 2;

int DispatchTable[iMax];

int iSize = 0;

// once only

for (int i=0; i<N; i++)

for (int j=0; j<i; j++)

DispatchTable[iSize++] = i*N + j;

...

#pragma omp parallel for

for (int index=0; index<iSize; index++)

{

int i = DispatchTable[index] / N;

int j = DispatchTable[index] % N;

... do something that takes constant time ...

...

}

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi All,

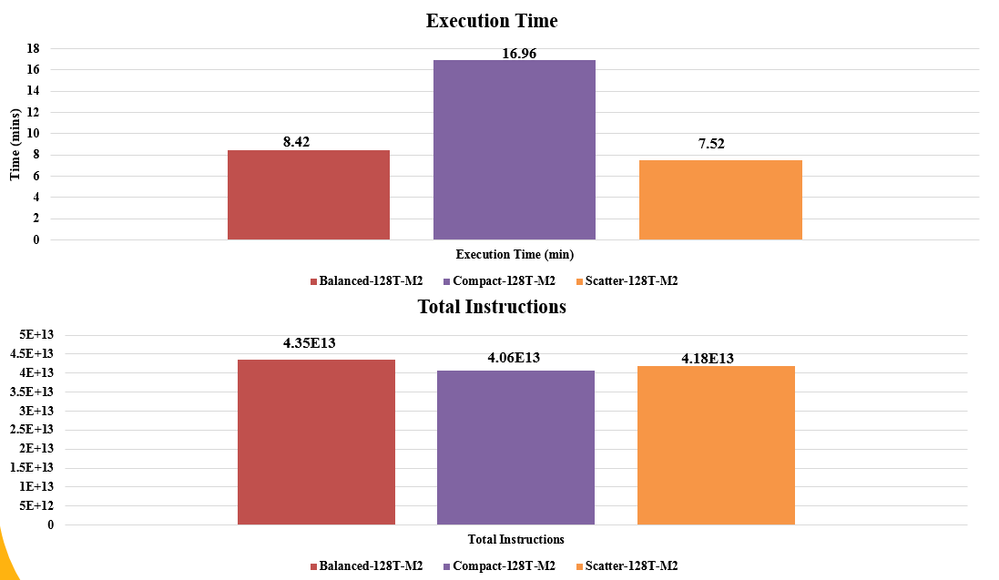

I run Balanced, Compact and Scatter for Intel Caffe for AlexNet with input and output being same.

Experiment 1

I have following environment variables set for 128 threads:

Compact: export KMP_HW_SUBSET=32c,4t export KMP_AFFINITY=compact,granularity=fine

Scatter: export KMP_HW_SUBSET=64c,2t export KMP_AFFINITY=scatter,granularity=fine

Balanced: export KMP_HW_SUBSET=64c,2t export KMP_AFFINITY=compact,granularity=fine

Below graph shows the execution time and instruction retired (userspace) for same application with different affinity.

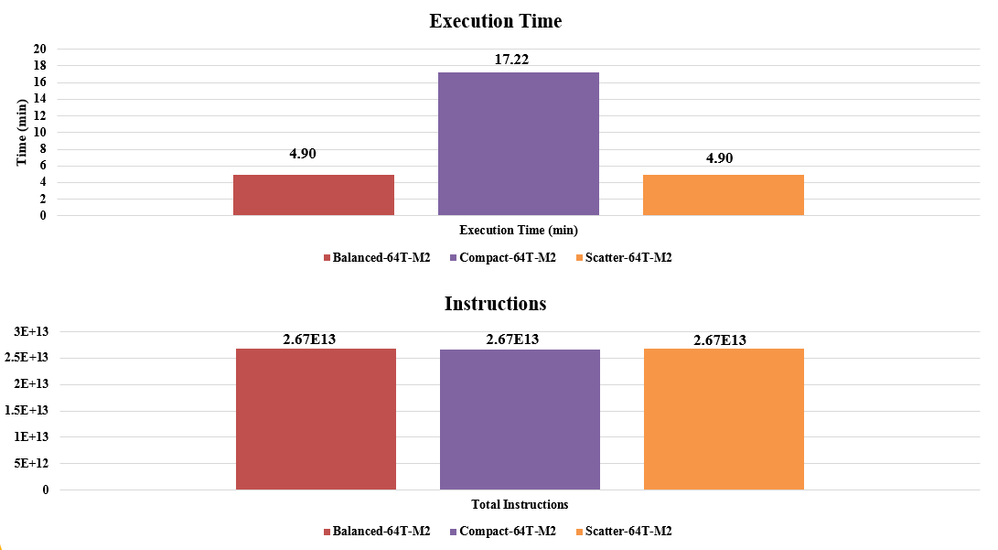

Experiment 2

I have following environment variables set for 64 threads:

Compact: export KMP_HW_SUBSET=16c,4t export KMP_AFFINITY=compact,granularity=fine

Scatter: export KMP_HW_SUBSET=64c,1t export KMP_AFFINITY=scatter,granularity=fine (for 64 threads, Scatter == Balanced)

Balanced: export KMP_HW_SUBSET=64c,1t export KMP_AFFINITY=compact,granularity=fine (for 64 threads, Scatter == Balanced)

Below graph shows the execution time and instruction retired (userspace) for same application with different affinity.

Questions:

1) If compact is taking more time to finish, then the number of instruction should be higher? As there are 4 threads which are fighting to issue instructions. If any of the thread goes ideal (looping), then is should retire instructions (not be related to application) to keep it self alive?

2) I have thread to system logs for each affinity. I have verified everything correctly and mapping happens as per what settings I provide for both 128 and 64 thread. For 128 thread, I expect balanced to perform better as sequential thread are within same core, which isn't the case for Scatter. Is this correct? If not, then too I don't expect number of instructions to be higher compared to Scatter.

I understand, any conclusion is difficult until proper understanding of code (specifically loop and matrix) structure. However, at least the number of instruction shouldn't not be inconsistent. If pooling loops are the major reason, then Compact should be retiring many more instructions than other two affinity.

Thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Number of cores for each of the thread affinity scheme:

1) Scatter: 128 threads = 64 cores with 0-63 allocated to first thread id of each core, then 64-127 allocated to second thread id of each core.

2) Compact: 128 threads = 32 cores with 0-127 allocated from core 0 to core 31 with all fours threads each core can handle filled up before moving to next one

3) Balanced: 128 threads = 64 cores with 0-64, 1-65 and so on allocated first and by the time 63rd core allocation arrive, all 128 thread is allocated.Then how can (3) be same as (2)?

I was discussing in the context of your other thread, where I have already said (in effect) "Never use balanced because it's horribly confusing and you can't run scaling tests. Instead use KMP_HW_SUBSET and either compact or scatter affinities".

What I was trying to say above was that your 128T balanced case is the same as KMP_HW_SUBSET=64C,2T KMP_AFFFINITY=compact ; I wasn't trying to say anything about using compact on its own, which, as you say, only uses half the hardware resources.

Also, if Intel OpenMP has an option to use "KMP_AFFINITY=balanced" (unless you say above use of balanced with this flag is incorrect) then why should I rely on "KMP_HW_SUBSET".

Because "balanced" doesn't let you do any scaling experiments. With KMP_HW_SUBSET I can look at any number of cores and any number of HW threads/core with scatter or compact affinity. With balanced I can't. Suppose I want to check the performance at 30Cx4T with KMP_HW_SUBSET=32C,4T I can then look at compact or scatter affinities. If I try to use KMP_AFFINITY=balanced, OMP_NUM_THREADS=120, I get some horrible distribution over 64C in which 56C have 2T and 8C have 1T which is utterly imbalanced (:-)), and nothing like what I wanted.

For sensible investigation of the effect of affinity you must ensure that you're running on the same amount of hardware in each case.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Answering your latest.

Please, please, please, go and read my blog on "How to Plot OpenMP Scaling Results". I pointed you at it in your other thread.

Above you are committing the cardinal sin of comparing results that are using completely different amounts of hardware and expecting them to be similar; two of your testcases use 64C but one uses 32C. Unsurprisingly the 32C case is ~1/2 the performance of the 64C one.

As to instruction counts, consider these two trivial cases

- I have a single thread that executes with performance 2MIPs which executes a problem in 1s. How many instructions are executed?

- I have two threads each of which executes at 1MIPs, they have to execute the same problem, which we assume is perfectly parallelizable, so each executes half the problem. How long does this take? How many instructions are executed in total in this case?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

>>1) If compact is taking more time to finish, then the number of instruction should be higher?

That is not normally true. The time for a single instruction to execute is not necessarily fixed. In most applications, large portions of the instruction execution time vary depending on operand(s) placement. Register, L1 cache, L2 cache, L3/LLC cache, same memory node, different node, TLB entry in cache, page resident.... Therefor mere instruction count is not (necessarily) a good performance metric. Also, for some most CPUs floating point instructions compete for resources amongst threads within a core.

A secondary issue with instruction counts is that threads within a parallel region tend to complete the parallel region at different times (as well as reach barriers at different times). Simple instruction counting will include instructions executed in the spin-wait loop. You can extract this time, but this time is important in aiding you in balancing the workload.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In part for the reasons Jim gave, increasing number of threads might be expected to increase total number of instructions. Under Vtune, some simple statistics like clock ticks per instruction may become useless unless you can isolate the critical path.

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page