Hi All,

I have been able to measure Xeon Phi memory bandwidth using STREAM.

I would like to do the same with Intel Caffe. I can see the following counters in "perf". Is it advisable to trust them? I am also not sure why "ScaleUnit" is used.

    [
      {
        "BriefDescription": "ddr bandwidth read (CPU traffic only) (MB/sec). ",
        "Counter": "0,1,2,3",
        "EventCode": "0x03",
        "EventName": "UNC_M_CAS_COUNT.RD",
        "PerPkg": "1",
        "ScaleUnit": "6.4e-05MiB",
        "UMask": "0x01",
        "Unit": "imc"
      },
      {
        "BriefDescription": "ddr bandwidth write (CPU traffic only) (MB/sec). ",
        "Counter": "0,1,2,3",
        "EventCode": "0x03",
        "EventName": "UNC_M_CAS_COUNT.WR",
        "PerPkg": "1",
        "ScaleUnit": "6.4e-05MiB",
        "UMask": "0x02",
        "Unit": "imc"
      },
      {
        "BriefDescription": "mcdram bandwidth read (CPU traffic only) (MB/sec). ",
        "Counter": "0,1,2,3",
        "EventCode": "0x01",
        "EventName": "UNC_E_RPQ_INSERTS",
        "PerPkg": "1",
        "ScaleUnit": "6.4e-05MiB",
        "UMask": "0x01",
        "Unit": "edc_eclk"
      },
      {
        "BriefDescription": "mcdram bandwidth write (CPU traffic only) (MB/sec). ",
        "Counter": "0,1,2,3",
        "EventCode": "0x02",
        "EventName": "UNC_E_WPQ_INSERTS",
        "PerPkg": "1",
        "ScaleUnit": "6.4e-05MiB",
        "UMask": "0x01",
        "Unit": "edc_eclk"
      }
    ]

Thanks.

The "ScaleUnit" value simply indicates that each increment of the counter corresponds to 64 Bytes. The units are incorrect in the example above-- 64 Bytes is 6.4e-05 MB, which is not the same as 6.4e-05 MiB -- but I don't know whether the actual usage matches this incorrect definition.
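To make the unit point concrete, here is a small sketch of the arithmetic. It assumes only what is stated above (one counter increment corresponds to 64 bytes); the variable names are illustrative and do not come from perf.

```python
# One counter increment corresponds to one 64-byte transfer.
BYTES_PER_INCREMENT = 64

MB = 10**6    # decimal megabyte
MIB = 2**20   # binary mebibyte

scale_mb = BYTES_PER_INCREMENT / MB     # the value that appears in "ScaleUnit"
scale_mib = BYTES_PER_INCREMENT / MIB   # what the scale would be if the unit really were MiB

# If 6.4e-05 were applied as MiB, each increment would be treated as this many bytes.
bytes_if_read_as_mib = scale_mb * MIB

# Relative overstatement from reading the scale as MiB instead of MB.
overstatement = bytes_if_read_as_mib / BYTES_PER_INCREMENT - 1.0

print(f"64 B in MB:  {scale_mb:.4e}")
print(f"64 B in MiB: {scale_mib:.4e}")
print(f"Overstatement if read as MiB: {overstatement:.2%}")
```

The difference is just under 5%, so it cannot by itself account for a discrepancy of several times between two measurements.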

The four events above appear to count correctly, but making sense of the numbers requires some post-processing (and some additional events) if you are running in Cache mode. This is described in Section 3.1 of Volume 2 of the Xeon Phi Performance Monitoring Reference Manual (document 334480).

Hi John,

The values I get from perf and from STREAM differ by 5-10x (in GB/s), so I am trying to understand how to make sure that what I get from "perf" is correct, including any post-processing.

If I understand correctly, the post-processing applies only to Cache mode, not to Flat or Hybrid mode?

Thanks.

The post-processing formulas appear to be correct for cache mode (with the caveat about streaming stores). I don't have any machines running in Hybrid mode, so I have not looked at that configuration.

I don't think I have tried "perf" for memory traffic on KNL -- the command lines get ridiculously long since you have to specify each event for each of the 6 DDR4 channels, and then you have to manually combine the results.
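As a rough illustration of why the command lines get long, the per-unit event list can be generated rather than typed. This is only a sketch: the event specs follow the `pmu/event=...,umask=.../` form used elsewhere in this thread, the `uncore_imc` PMU prefix is an assumption that should be checked against `/sys/bus/event_source/devices/` on the target kernel, and the helper names are hypothetical.

```python
def uncore_event_specs(pmu_prefix, unit_count, event, umask):
    """Return one perf event spec per uncore unit, numbered from 0."""
    return [
        f"{pmu_prefix}_{unit}/event={event:#x},umask={umask:#x}/"
        for unit in range(unit_count)
    ]


def perf_stat_command(event_specs, workload):
    """Build a system-wide 'perf stat' argument list with one -e per event."""
    cmd = ["perf", "stat", "-a"]
    for spec in event_specs:
        cmd += ["-e", spec]
    return cmd + ["--"] + list(workload)


# DDR CAS counts on the 6 DDR4 channels, using the event codes quoted in this thread.
# ASSUMPTION: the PMU is exposed as uncore_imc_0..5; verify on your system.
ddr_reads = uncore_event_specs("uncore_imc", 6, event=0x03, umask=0x01)
ddr_writes = uncore_event_specs("uncore_imc", 6, event=0x03, umask=0x02)

# "sleep 10" is a placeholder workload.
command = perf_stat_command(ddr_reads + ddr_writes, ["sleep", "10"])
print(" ".join(command))
```

The per-channel counts reported by perf still have to be summed by hand (or by a script) before converting them to traffic.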

Hi John,

I do have such data. The following are the total request counts across the EDC and DDR controllers. Could you suggest how to get the final bandwidth out of these?

EDC (8): 51111276532

MC (6): 2175389216

The above is for AlexNet running on Intel Caffe with 64 threads in A2A Flat mode.

Thanks.

The traffic is simply 64 Bytes times the corresponding counts. To compute a bandwidth, you simply divide by elapsed time.
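A minimal sketch of that calculation, using the two totals posted above. The elapsed time was not given in the thread, so `elapsed_s` below is a made-up placeholder and the printed bandwidths are not real results; the function names are illustrative.

```python
CACHE_LINE_BYTES = 64  # each counted request moves one 64-byte line


def traffic_bytes(request_count):
    """Total bytes moved for a given number of counted requests."""
    return request_count * CACHE_LINE_BYTES


def bandwidth_gb_per_s(request_count, elapsed_s):
    """Average bandwidth in GB/s (decimal) over the measured interval."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    return traffic_bytes(request_count) / elapsed_s / 1e9


# Totals from the post above (summed over 8 EDCs and 6 DDR channels).
edc_total = 51_111_276_532
mc_total = 2_175_389_216

# HYPOTHETICAL elapsed time; substitute the wall-clock time of the measured run.
elapsed_s = 100.0

print(f"MCDRAM traffic: {traffic_bytes(edc_total) / 1e9:.1f} GB")
print(f"DDR traffic:    {traffic_bytes(mc_total) / 1e9:.1f} GB")
print(f"MCDRAM bandwidth (assumed {elapsed_s} s): {bandwidth_gb_per_s(edc_total, elapsed_s):.2f} GB/s")
print(f"DDR bandwidth    (assumed {elapsed_s} s): {bandwidth_gb_per_s(mc_total, elapsed_s):.2f} GB/s")
```

The elapsed time must cover exactly the interval over which the counters were collected; otherwise the average is skewed.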

Hi John,

Have you done memory bandwidth analysis on Xeon Phi for non-STREAM benchmarks?

My calculations for other benchmarks show about 1/8th bandwidth utilization. Is this consistent with your analysis?

Thanks.

I don't use "perf" very often because the implementation is undocumented and even the location of the source code that defines various events moves around from one kernel revision to the next. In some revisions of the kernel, "perf" does not understand that uncore counters have socket-level scope, so it reports the sum of the counts measured on all cores. In newer revisions of the kernel, this particular problem is fixed, but it is certainly possible that other problems could have been introduced -- particularly on a processor like KNL that does not have an extremely wide user base (by Linux kernel writer standards).

I have certainly tested the DDR and EDC counters on KNL with a variety of codes for which I can compute the expected memory traffic. In "flat" mode, the counters look quite accurate, including reasonable levels of overcounting of streaming stores due to eviction of incompletely filled write-combining buffers. In "cache" mode, I have done fewer tests, but the values look reasonable, and the formulae in Volume 2 of the KNL Performance Monitoring Reference Manual give reasonable matches to expected values for benchmarks that have a mix of MCDRAM hits and misses (provided that streaming stores are not used).

Hi John,

For Hybrid mode, does the bandwidth calculation stay the same as in Flat mode in terms of performance counters (using EDC and MC for MCDRAM and DDR4, respectively)? I don't think it should, given that 50% (or 25%) of MCDRAM becomes L3 in Hybrid mode.

For Cache mode (as you suggested above), the Xeon Phi PMU document emphasizes the need to log different counters for the bandwidth calculation, but it does not say the same for Hybrid mode.

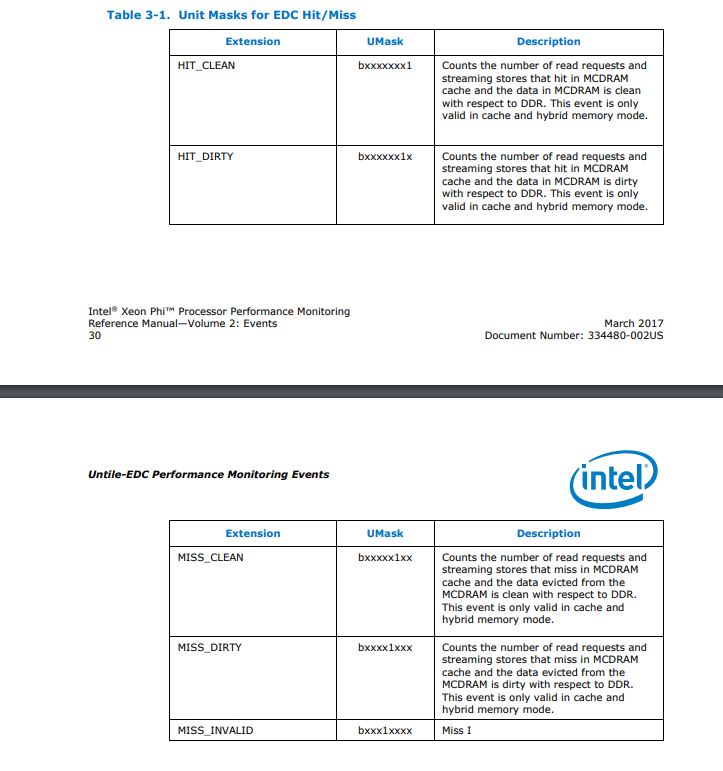

Can you please confirm whether the following event and "umask" encodings are correct for the counters required for Cache mode bandwidth? I am getting very large values (> 1000 GB/sec) or negative values.

    EDC0_MISS_CLEAN="-e uncore_edc_uclk_0/event=0x2,umask=0x4/"
    EDC1_MISS_CLEAN="-e uncore_edc_uclk_1/event=0x2,umask=0x4/"
    EDC2_MISS_CLEAN="-e uncore_edc_uclk_2/event=0x2,umask=0x4/"
    EDC3_MISS_CLEAN="-e uncore_edc_uclk_3/event=0x2,umask=0x4/"
    EDC4_MISS_CLEAN="-e uncore_edc_uclk_4/event=0x2,umask=0x4/"
    EDC5_MISS_CLEAN="-e uncore_edc_uclk_5/event=0x2,umask=0x4/"
    EDC6_MISS_CLEAN="-e uncore_edc_uclk_6/event=0x2,umask=0x4/"
    EDC7_MISS_CLEAN="-e uncore_edc_uclk_7/event=0x2,umask=0x4/"
    EDC0_MISS_DIRTY="-e uncore_edc_uclk_0/event=0x2,umask=0x8/"
    EDC1_MISS_DIRTY="-e uncore_edc_uclk_1/event=0x2,umask=0x8/"
    EDC2_MISS_DIRTY="-e uncore_edc_uclk_2/event=0x2,umask=0x8/"
    EDC3_MISS_DIRTY="-e uncore_edc_uclk_3/event=0x2,umask=0x8/"
    EDC4_MISS_DIRTY="-e uncore_edc_uclk_4/event=0x2,umask=0x8/"
    EDC5_MISS_DIRTY="-e uncore_edc_uclk_5/event=0x2,umask=0x8/"
    EDC6_MISS_DIRTY="-e uncore_edc_uclk_6/event=0x2,umask=0x8/"
    EDC7_MISS_DIRTY="-e uncore_edc_uclk_7/event=0x2,umask=0x8/"

Following is the snapshot from PMU documentation:

Thanks.

Section 3.1 of Volume 2 of the KNL Performance Monitoring Guide uses some very confusing syntax, and adds to the confusion by listing seven events, but only using five of them....

It has been a while since I looked at this, but I think the formulas in the guide are correct. For reads, MCDRAM is accessed on all L2 misses, but you only want to count the accesses that are hits. Intel's recommended formula is all accesses (RPQ.INSERTS) less the clean misses and the dirty misses. The Event and Umask values defined above look correct. (Event 0x02, Umask 0x04 and Event 0x02, Umask 0x08).

It is not clear to me that it is possible to make sense of the results in Hybrid mode, since you don't know which of the RPQ.INSERTS are related to accessing MCDRAM as cache and which are related to accessing MCDRAM as memory.
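For Cache mode, the read-hit formula described above (all RPQ inserts, less clean misses and dirty misses) can be sketched as follows. This covers only the read side as summarized in this post, not the full set of formulas in the manual. The per-EDC counts and the elapsed time are invented placeholders, and the function names are illustrative.

```python
CACHE_LINE_BYTES = 64


def mcdram_read_hits(rpq_inserts, miss_clean, miss_dirty):
    """MCDRAM cache read hits: all read accesses minus clean and dirty misses.

    Each argument is a list of per-EDC counts; the lists are summed first.
    A negative result cannot be a real hit count, so it indicates that the
    inputs are inconsistent with this formula.
    """
    return sum(rpq_inserts) - sum(miss_clean) - sum(miss_dirty)


def read_hit_bandwidth_gb_per_s(hits, elapsed_s):
    """Average MCDRAM read-hit bandwidth in GB/s (decimal)."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    return hits * CACHE_LINE_BYTES / elapsed_s / 1e9


# HYPOTHETICAL per-EDC counts for 8 EDCs, all taken over the same interval.
rpq_inserts = [1_000_000_000] * 8   # UNC_E_RPQ_INSERTS (edc_eclk)
miss_clean = [200_000_000] * 8      # event 0x02, umask 0x04 (edc_uclk)
miss_dirty = [50_000_000] * 8       # event 0x02, umask 0x08 (edc_uclk)
elapsed_s = 10.0                    # HYPOTHETICAL elapsed time

hits = mcdram_read_hits(rpq_inserts, miss_clean, miss_dirty)
print(f"MCDRAM read hits: {hits}")
print(f"Read-hit bandwidth: {read_hit_bandwidth_gb_per_s(hits, elapsed_s):.2f} GB/s")
```

All three inputs need to be collected over the same run and summed across all eight EDCs before subtracting.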
