- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all,

on Page "15-23" in 15.8 "4K Aliasing" in Intel 64 and IA-32 Architectures Optimization Reference Manual (Order Number: 248966-044b June 2021) I find:

"Align data to 32 Bytes."

However, this may not be very useful to avoid 4K aliasing. Therefore I have tried

_mm_malloc(some_size, 4096);

Nevertheless there are still some weird points in my benchmark of L1D cache bandwidth.

The CPU is Intel Xeon Gold 6148. Only one CPU core is used in the benchmark.

- when the number of elements is 608, the measured bandwidth is 280.1 GB/s.

- when the number of elements is 576 (= 608 - 32), the measured bandwidth is reduced to 273.7 GB/s.

By the microarchitecture analysis using Intel VTune I find the cause is due to "4K aliasing":

4K Aliasing: 11.7% of Clockticks

The following are my C code, commands for compilation and tests.

Could you please help me to solve this problem? Thank you!

- C code

#include <stdio.h>

#include <stdlib.h>

#include <omp.h>

#define FOURK (4096) /* 4K Bytes */

void schoenauer(const double * restrict b,

const double * restrict c,

const double * restrict d,

double * restrict a,

const size_t n)

{

#pragma omp simd aligned(b, c, d, a : 64)

for (size_t i = 0; i < n; ++i)

a[i] = b[i] + c[i] * d[i];

}

int main(int argc, char *argv[])

{

const int nr = 1 << 25;

size_t n;

double *a, *b, *c, *d;

double start, stopp, wtsec;

n = (size_t) atoi(argv[1]);

a = _mm_malloc(sizeof(*a) * n, FOURK);

b = _mm_malloc(sizeof(*b) * n, FOURK);

c = _mm_malloc(sizeof(*c) * n, FOURK);

d = _mm_malloc(sizeof(*d) * n, FOURK);

#pragma omp simd aligned(b, c, d, a : 64)

for (size_t i = 0; i < n; ++i) a[i] = b[i] = c[i] = d[i] = i % 16 / 16.0;

/* performance measurement */

wtsec = 1.0e6;

for (int k = 0; k < 8; ++k) { // measure 8 times

start = omp_get_wtime();

for (int i = 0; i < nr; ++i)

schoenauer(b, c, d, a, n);

stopp = omp_get_wtime();

wtsec = wtsec > (stopp - start) ? (stopp - start) : wtsec;

}

/* report */

printf("n: %12zu minimum walltime %8.3f sec, memory bandwidth %8.1f GB/sec\n",

n, wtsec, sizeof(*a) * 4.0 * (double) n * (double) nr / (1.0e9 * wtsec));

_mm_free(a);

_mm_free(b);

_mm_free(c);

_mm_free(d);

return 0;

}- commands for compilation and tests

icc -Ofast -Wall -std=c11 -fno-alias -restrict \

-qopt-streaming-stores=never \

-xCORE-AVX512 -qopt-zmm-usage=high \

-qopenmp -qopenmp-simd -g \

-qopt-report-phase=all -qopt-report=5 \

-o schoenauer.x schoenauer.c

./schoenauer.x 608 # OK!

./schoenauer.x 576 # 4K aliasing- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am not 100% sure I understand your diagrams.....

double *a_base, *b_base, *c_base, *d_base;

int padding = 3*64/sizeof(*a); // three cache lines padding total

a_base = _mm_malloc(sizeof(*a) * n, FOURK+padding);

b_base = _mm_malloc(sizeof(*b) * n, FOURK+padding);

c_base = _mm_malloc(sizeof(*c) * n, FOURK+padding);

d_base = _mm_malloc(sizeof(*d) * n, FOURK+padding);

Then

- make "a" a pointer to &a_base[0*64/sizeof(*a)]

- make "b" a pointer to &b_base[1*64/sizeof(*b)]

- make "c" a pointer to &c_base[2*64/sizeof(*c)]

- make "d" a pointer to &d_base[3*64/sizeof(*d)]

This will make &a[0] point to set 0 of the L1 Data Cache, &b[0] will point to set 1, &c[0] will point to set 2, &d[0] will point to set 3.

There are lots of other ways to do this, but this approach is moderately readable and relatively easy to modify for arbitrary relative alignments....

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Section 15.8 of the optimization manual is not as clear as it might be....

The recommendation to align data to 32 Bytes is intended to avoid a specific problem:

- There is at least one store and at least one load in progress at the same time.

- At least one load-store pair are operating on addresses that differ by a multiple of 4KiB.

- It is assumed that the compiler is generating 256-bit AVX loads and stores.

In this case, the compiler will almost always ensure that the stores are 32-Byte-aligned (because the penalty for crossing cache line boundaries and especially 4KiB page boundaries can be severe depending on the specific processor generation). This means that the concurrent loads will have to take whatever alignment is left over. If that alignment is not 32 Bytes, the 256-bit loads will cross cache line boundaries and cause the 4KiB aliasing problem to get worse.

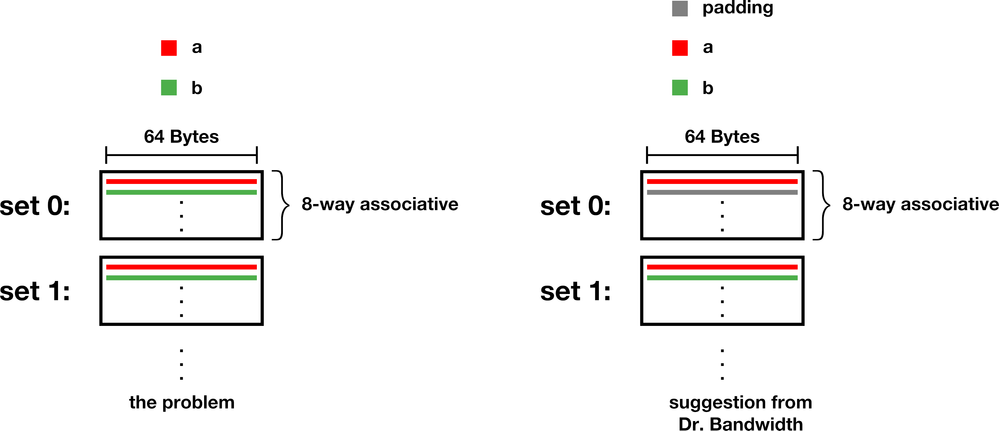

What you are seeing is different -- it is a 4KiB aliasing problem, but you have requested that the compiler generate 512-bit (64 Byte) operations. In this case, neither loads nor stores will cross cache line boundaries. The problem in this case is that the hardware can be certain that load and store operations are to different addresses if they are accessing different sets in the L1 Data Cache, but not if they are to the same set. There is no issue of alignment within cache lines because all memory operations are requested to be full-line loads and stores -- the problem is that these full-line loads and stores are to the same set. This is not a terrible aliasing problem, but it does prevent the disambiguation mechanism of looking at the set numbers. So the HW has to wait until the L1 Data Cache tags have been read in order to make a full comparison of the addresses.

This problem has been around as long as caches and is almost always dealt with by padding the arrays to make the offsets between corresponding elements at least one cache line away from any multiple of 4KiB. This applies to offsets between the bases of 1-dimensional arrays and to the offsets between rows/columns of 2-dimensional arrays. Your approach of aligning everything to 4KiB boundaries maximizes the problem -- good for tutorial purposes, but not so much for performance. In your case there are a couple of choices:

- Pad each of the array allocations by 4 cache lines, then set the [a,b,c,d] pointers to 0,1,2,3 cache lines above the corresponding bases of the allocated regions.

- Make one large allocation and place the pointers with the desired offsets inside that larger allocation. (This has the disadvantage of appearing to break the implied usage of the "-restrict" keyword, so the compiler might back off on optimization. If you have computed the pointer locations and offsets correctly, it does not actually break the restrict model, so full performance is still possible if you can get the compiler to trust you....)

Sometimes the padding needs to be more than one cache line. This is often the case when working with arrays that will be loaded from the L2 or L3 caches, or from memory. In those cases the operations happening "concurrently" include not only the direct load and store operations, but also the hardware prefetch operations. It is not unusual to see cases that require separating addresses by 10-20 cache lines in order to prevent analogous conflicts further out in the memory hierarchy.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you, Dr. Bandwidth!

I need to do some experiments to fully understand your reply and suggestions. I think the most important info is to pad the array allocation so that a, b, c, and d start from different sets in the L1 data cache. right? Below is a simplified depiction. Is my understanding correct?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am not 100% sure I understand your diagrams.....

double *a_base, *b_base, *c_base, *d_base;

int padding = 3*64/sizeof(*a); // three cache lines padding total

a_base = _mm_malloc(sizeof(*a) * n, FOURK+padding);

b_base = _mm_malloc(sizeof(*b) * n, FOURK+padding);

c_base = _mm_malloc(sizeof(*c) * n, FOURK+padding);

d_base = _mm_malloc(sizeof(*d) * n, FOURK+padding);

Then

- make "a" a pointer to &a_base[0*64/sizeof(*a)]

- make "b" a pointer to &b_base[1*64/sizeof(*b)]

- make "c" a pointer to &c_base[2*64/sizeof(*c)]

- make "d" a pointer to &d_base[3*64/sizeof(*d)]

This will make &a[0] point to set 0 of the L1 Data Cache, &b[0] will point to set 1, &c[0] will point to set 2, &d[0] will point to set 3.

There are lots of other ways to do this, but this approach is moderately readable and relatively easy to modify for arbitrary relative alignments....

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page