Hello,

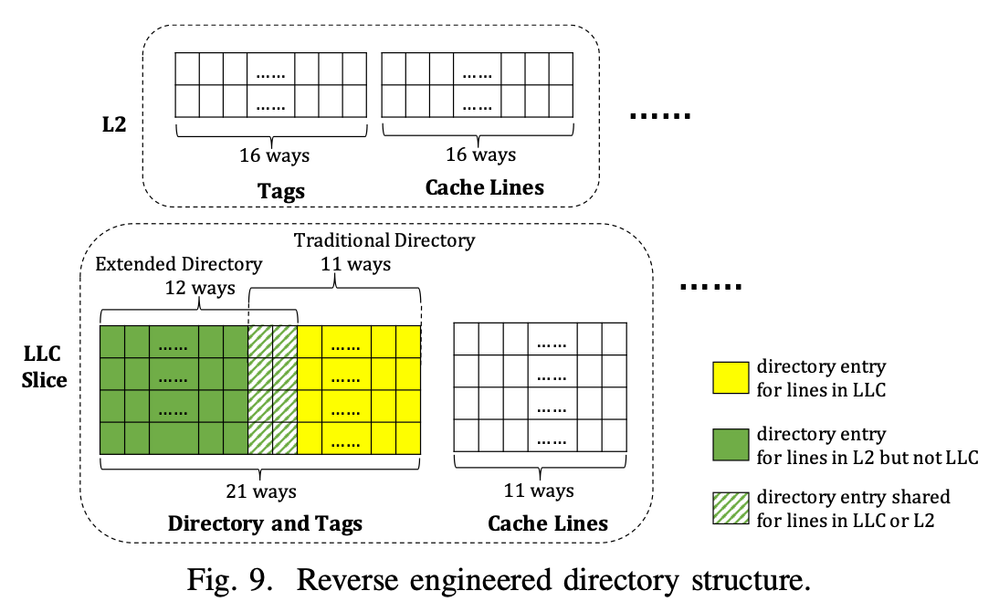

I have been working on Skylake Server CPUs (Xeon Platinum 8180). Together with the switch to a mesh topology, the cache architecture changed to a directory-based protocol. I am trying to understand how it works, but I cannot find much official documentation. Some descriptions of directory-based cache coherence protocols say that the directory structure is kept in memory and that the caching agent caches it. That would mean there might be two memory accesses if the requested data is not in the caches and the corresponding directory information is not in the directory cache. However, I am not sure whether the directory is implemented this way.

WikiChip mentions that an NCID (non-inclusive cache, inclusive directory) architecture might be used in the Skylake Server CPUs, but there is not much information about it. If NCID is used, is there a directory entry for every set and way of the L2 and LLC of each core? If the inclusive directory entries are not directly mapped to the cache lines, then I might not be able to use all the cache lines: reading data into the cache could evict other cache lines, not because I am out of cache lines, but because I am out of directory storage. Is that right? I am asking because a recent paper (http://iacoma.cs.uiuc.edu/iacoma-papers/ssp19.pdf) argues that such a problem can occur, since some directory entries are shared between L2 and LLC lines. The figure at the top of this post shows the reverse-engineered directory structure.

May I kindly ask whether their reverse engineering is accurate? Can I use all cache lines, or am I limited by the number of directory entries?

Kind Regards,


I have not checked all the details of that paper, but the general picture is correct. The specific hypothesis that the reported 12-way Snoop Filter plus 11-way L3 are crammed into a 21-way combined tag is a scary one to consider, and I have not tried to figure out whether the paper's argument for this (horrifying) "feature" is convincing. Rather than dive into the details of that specific hypothesis, I would like to review the issues at a higher level.

It is important to note that "directory" can mean several different things, not necessarily consistent with the way Intel uses the term.

In Xeon Scalable Processors, it is clear that the L3 is no longer "inclusive", but it is harder to determine whether it is "non-inclusive" or "(fully) exclusive".

Nomenclature is fairly inconsistent across the industry (and literature), so I will try to be more precise. I am using the term "non-inclusive" here to mean that the L3 is *sometimes* allowed to cache lines that are also cached in one or more private (L1+L2) caches. AMD calls this "mostly-exclusive", and uses this as the L3 policy in AMD Family 10h processors -- the L3 can keep a shared copy of a line if it detects that the line is in use by more than one L2. In contrast, the term "exclusive" (more clearly, "fully exclusive") absolutely prohibits a line from being cached in both the shared L3 and any private (L1/L2) cache at the same time. This is the policy of the L1D and L2 caches in AMD Family 10h processors -- the L2 is purely a victim of the L1, and lines are "moved" from the L2 to the L1, rather than "copied". (AMD reference https://doi.org/10.1109/MM.2010.31)
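To make the three definitions concrete, here is a minimal Python sketch that labels a single snapshot of cache contents. The function name and the snapshot format (sets of line addresses) are hypothetical, and a single snapshot can only rule a policy out; it cannot prove that a processor implements one.

```python
def classify_snapshot(l3_lines, private_caches):
    """Label one snapshot of cache contents using the definitions above.

    l3_lines: set of line addresses held in the shared L3.
    private_caches: list of sets, one per core's combined L1+L2 contents.
    """
    private_union = set().union(*private_caches)
    if private_union <= l3_lines:
        return "inclusive"      # every privately cached line is also in L3
    if not (private_union & l3_lines):
        return "exclusive"      # no line is in both L3 and a private cache
    return "non-inclusive"      # some, but not all, private lines are in L3


# Hypothetical "mostly-exclusive" case: the L3 keeps a copy of line 0x40,
# which two cores share, but not line 0x80, which only core 0 uses.
print(classify_snapshot({0x40}, [{0x40, 0x80}, {0x40}]))  # non-inclusive
```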

In the Intel Optimization Reference Manual (document 248966-042b, September 2019), section 2.2.1 states:

> Because of the non-inclusive nature of the last level cache, blocks that are present in the mid-level cache of one of the cores may not have a copy resident in a bank of last level cache.

This makes it sound like Intel is using the term "non-inclusive" to mean "fully exclusive". The wording in the HotChips 2017 presentation is slightly more ambiguous:

> Non-inclusive (Skylake architecture) --> lines in L2 may not exist in L3. [slide 9, emphasis in original]

If one interprets "may not" as "are not allowed to", then this matches my definition of "fully exclusive", while if one interprets "may not" as "might not", this would indicate something less than full inclusion (or full exclusion).

Slide 10 of the HotChips presentation did not clarify the issue in my mind, but now I interpret all of this together to mean that the L3 in Xeon Scalable Processors is fully exclusive.

In the absence of an inclusive LLC, a mechanism must exist to maintain coherence for lines that are cached in L1/L2 caches. A broadcast protocol could be used, but that scales poorly past 2-4 cores, and would be hopeless here. So some kind of directory is required, and that directory needs to be scalable in the same way that the shared, inclusive L3 was scalable in previous generations of Intel processors. What Intel implemented is a distributed shared "Snoop Filter", using the same address hash function as the L3 to distribute accesses quasi-uniformly over the active L3 "slices" on the chip. This "Snoop Filter" plays almost exactly the same role as the directory tags in an inclusive L3, but does not include the data array -- it only tracks the location and state of lines held in L1/L2 caches. Because the Snoop Filter is inclusive, before a Snoop Filter entry can be evicted, the cache line that the entry tracks must be invalidated in all L1/L2 caches in the system. If that cache line was still valid in an L1/L2 cache, it is typically victimized to the L3. The impact of systematic Snoop Filter conflicts on application performance is discussed in my SC18 paper (https://doi.org/10.1109/SC.2018.00021) and presentation (https://sites.utexas.edu/jdm4372/2019/01/07/sc18-paper-hpl-and-dgemm-performance-variability-on-intel-xeon-platinum-8160-processors/).
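A minimal Python sketch of one set of an inclusive Snoop Filter, showing why an SF eviction forces a back-invalidation of the tracked line from every L1/L2 that holds it, with valid copies victimized to the L3. The class and its names are hypothetical, and LRU replacement is an assumption; Intel does not document the Snoop Filter replacement policy.

```python
from collections import OrderedDict


class SnoopFilterSet:
    """One set of an inclusive snoop filter (hypothetical model)."""

    def __init__(self, ways, l2_caches, l3_victims):
        self.ways = ways
        self.entries = OrderedDict()   # line -> set of core ids, oldest first
        self.l2_caches = l2_caches     # core id -> set of lines in that L1/L2
        self.l3_victims = l3_victims   # lines victimized into the L3

    def track(self, line, core):
        """Record that `core` now caches `line` in its private L1/L2."""
        if line in self.entries:
            self.entries.move_to_end(line)      # refresh LRU position
        elif len(self.entries) == self.ways:
            self._evict_lru()                   # set full: make room first
        self.entries.setdefault(line, set()).add(core)
        self.l2_caches[core].add(line)

    def _evict_lru(self):
        victim, holders = self.entries.popitem(last=False)
        for core in holders:
            if victim in self.l2_caches[core]:
                self.l2_caches[core].discard(victim)   # back-invalidate
                if victim not in self.l3_victims:
                    self.l3_victims.append(victim)     # victimize to L3


l2 = {0: set(), 1: set()}
victims = []
sf = SnoopFilterSet(ways=2, l2_caches=l2, l3_victims=victims)
for line, core in [(0xA00, 0), (0xB00, 1), (0xC00, 0)]:
    sf.track(line, core)
print(sorted(l2[0]), victims)   # [3072] [2560]
```

The third line did not conflict with anything in core 0's L2; it was evicted purely because the (tiny, 2-way) filter set was full.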

So the Snoop Filter is a directory, but not really of a different nature than the tags of an inclusive L3.

For Intel server processors, the term "directory" is used to refer to aspects of the *cross-socket* cache coherence implementation, as presented in https://software.intel.com/content/www/us/en/develop/articles/intel-xeon-processor-scalable-family-technical-overview.html. One feature that is poorly described in that presentation is the "memory directory", which uses one or more bits in the DRAM ECC to indicate whether another socket *might* have a modified copy of the cache line. This is discussed on slides 7-8 of https://www.ixpug.org/documents/1524216121knl_skx_topology_coherence_2018-03-23.pptx, which also includes more words on inclusivity, snoop filters, and topology of the chips.

So getting back to your question about the number of "directory" entries relative to the number of L2 entries....

The size and associativity of the Snoop Filter was documented by Intel in their presentation at ISSCC in 2018 (https://doi.org/10.1109/ISSCC.2018.8310170), reporting 2048 sets per active "tile" and 12-way associativity. There is at least one active CHA/SF/LLC tile per core, so the aggregate Snoop Filter has at least 1.5 entries for every L2 entry in the system. (L2 = N cores * 1024 sets * 16 ways, SF = P (>=N) tiles * 2048 sets * 12 ways). At a ratio of 1.5, there are enough Snoop Filter entries to use all of the L2 (and L1) cache in the system, but there is very little headroom for conflicts.
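The arithmetic behind the 1.5 ratio, as a short Python sketch using the set and way counts quoted above (the function name is illustrative):

```python
L2_SETS, L2_WAYS = 1024, 16   # per-core L2: 1 MiB with 64-byte lines
SF_SETS, SF_WAYS = 2048, 12   # per-tile Snoop Filter (ISSCC 2018 figures)


def sf_to_l2_ratio(cores, tiles):
    """Aggregate Snoop Filter entries divided by aggregate L2 lines."""
    if tiles < cores:
        raise ValueError("expected at least one active CHA/SF/LLC tile per core")
    sf_entries = tiles * SF_SETS * SF_WAYS
    l2_lines = cores * L2_SETS * L2_WAYS
    return sf_entries / l2_lines


print(sf_to_l2_ratio(cores=28, tiles=28))  # 1.5
```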

Why would there be conflicts? The potential problem is that the L2 caches are (intended to be) independent, with a 28-core chip able to simultaneously cache up to 28*16 = 448 addresses that map to the same cache index (assuming they are equally distributed across the cores). The structure that needs to track this is only 12-way associative, so it is critically important that the address hash always do a near-perfect job of mapping addresses with the same L2 cache index to different [slice, set]. Spoiler -- it does not... My SC18 paper (cited above) reports on a severe conflict in the SKX processors with 24 active CHA/SF/LLC tiles, but significant conflicts exist in all Xeon Scalable Processors with >16 tiles, as I reported in https://www.ixpug.org/components/com_solutionlibrary/assets/documents/1538092216-IXPUG_Fall_Conf_2018_paper_20%20-%20John%20McCalpin.pdf.
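A rough Python sketch of the conflict problem, by pigeonhole counting. The bin assignments below are hypothetical stand-ins, not Intel's (undocumented) hash; they only show how the outcome depends on how evenly the 448 same-index lines are spread over [slice, set] bins.

```python
from collections import Counter
from math import ceil

SF_WAYS = 12


def sf_overflow(mapping):
    """Lines that cannot be tracked without a Snoop Filter eviction.

    mapping: one (slice, sf_set) pair per cached line, for lines that all
    share the same L2 set index. Any bin holding more than SF_WAYS lines
    forces the excess to be back-invalidated from the L2 caches.
    """
    per_bin = Counter(mapping)
    return sum(max(0, count - SF_WAYS) for count in per_bin.values())


LINES = 28 * 16  # 448 lines with one L2 index across 28 cores
print(ceil(LINES / SF_WAYS))  # 38 distinct [slice, set] bins needed at minimum

# Two hypothetical hashes over 28 slices:
spread = [(i % 28, (i // 28) % 2) for i in range(LINES)]   # 56 bins, 8 lines each
clumped = [(i % 28, 0) for i in range(LINES)]              # 28 bins, 16 lines each
print(sf_overflow(spread), sf_overflow(clumped))           # 0 112
```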

Dear Mr. John McCalpin,

Thank you very much for your helpful answer. I have to admit that you are the reason I posted my question in this forum, because I find your replies to other questions very thorough. Once again, you provided a very detailed explanation.

If you don’t mind, I would like to ask two further questions on the “memory directory” topic you mentioned.

I don’t understand when “memory directory” information is created for a cache line. Does it happen when an entry is evicted from the snoop filter (the directory)? You described that snoop filter evictions invalidate the corresponding line in the L1/L2 of all cores (probably by broadcasting an invalidate signal). If so, there would be no need to create memory directory information for snoop filter evictions. Am I right?

Alternatively, if a cache line is evicted from L3, the data will be written back to memory. Could that cause memory directory information to be created? I guess it could, because if a cache line is in L3 (in such a non-inclusive cache architecture), multiple “cores” must have been sharing the corresponding line. Thus, there may still be a copy of it in the L1/L2 of one of these cores. Hence, it would be safe to create memory directory information indicating that the line might still be somewhere in the caches. Do you think my interpretation is right? However, my interpretation (in contrast to your explanation) says that another “core”, not another “socket”, might have a modified copy of the cache line. So I guess there might be a mistake in my understanding. Can you please guide me on that?

A follow-up question is about slides 7-8 of https://www.ixpug.org/documents/1524216121knl_skx_topology_coherence_2018-03-23.pptx, which provide a simple explanation of the memory directory. However, the last bullet in its list of disadvantages is a bit confusing to me. It says that “Remote reads can update the directory, forcing the entire cache line to be written back to DRAM”. I could not imagine a scenario in which this would happen. Would it be possible for you to elaborate on this a bit?

Kind Regards,

Furkan

Intel does not provide much in the way of documentation for memory directories (except perhaps in patents, which are too confusing for mere mortals to understand), so these comments are inferences based on various controlled and uncontrolled observations (and ~35 years experience in the field).

In the most common configuration(s), cache coherence in Intel processors is based on an "on-chip" protocol plus a "cross-chip" protocol. (In some cases the contents of a single "package" can include more than one coherence domain -- either multiple chips or a single chip split into multiple coherence domains. There are other horrible corner cases, but equating coherence domains with packages is by far the most common configuration for Intel processors in the last decade.)

The "on-chip" coherence protocol is managed by the shared, distributed CHA/SF/L3 structure. This tracks all copies of any cache line held in any cache on the chip, whether the address maps to one of the local memory controllers or to a memory controller in another package/socket/chip. The most common transactions involve processors executing loads or stores that miss in their private L1/L2 caches. (I will limit this discussion to loads just to avoid too many detours.) On an L1/L2 load miss, the L2 cache controller submits a read request to the coherence fabric. Each physical cache line address maps to exactly one CHA/SF/L3 "slice" on the mesh, so based on the address the request is routed across the mesh to the CHA/SF/L3 "slice" that owns the address. The L3 slice checks to see if the address is cached there and the Snoop Filter is checked to see if the address is cached in any L1/L2 cache on the chip. If both of those return "miss", the cache line might be in a modified state in another coherence domain (chip/package) or the value in memory may be current. Those possible sources for the data can be checked concurrently, consecutively, or with partial overlap. This is (finally) where "memory directories" come into play.
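The request path described above, as a simplified Python sketch. `home_slice` is a hypothetical modulo placeholder for Intel's undocumented address hash, and the action on a Snoop Filter hit (snooping the cores that hold the line) is assumed rather than taken from the description above.

```python
from dataclasses import dataclass, field

LINE_BYTES = 64


def home_slice(addr, n_slices):
    """Hypothetical stand-in for Intel's undocumented address hash."""
    return (addr // LINE_BYTES) % n_slices


@dataclass
class Slice:
    l3: set = field(default_factory=set)              # lines in this L3 slice
    snoop_filter: dict = field(default_factory=dict)  # line -> cores holding it


def handle_l2_read_miss(addr, slices):
    """Route an L1/L2 read miss to its home CHA/SF/L3 slice and classify it."""
    line = addr // LINE_BYTES * LINE_BYTES
    home = slices[home_slice(addr, len(slices))]
    if line in home.l3:
        return "hit in L3"
    if line in home.snoop_filter:
        return f"snoop cores {sorted(home.snoop_filter[line])}"
    # Missed everywhere on-chip: the line may be modified in another
    # coherence domain, or the copy in memory may be current.
    return "check memory and/or other sockets"


slices = [Slice() for _ in range(4)]
slices[0].l3.add(0x1000)
slices[1].snoop_filter[0x1040] = {3}
print(handle_l2_read_miss(0x1008, slices))  # hit in L3
```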

Here I assume that the address being loaded is mapped to one of the "local" memory controllers. On an idle system, the lowest latency will come from issuing a snoop request to the other chip and a read request to the appropriate local memory controller at the same time. The time required to get responses for the requests will vary based on technology and load, but as chips get more and more cores and caches, the responses from remote chips are getting slower, while the response time from local memory is mostly the same. So consider the scenarios:

- Local memory returns data first. There are two sub-cases:

  - Data is "clean" in the other socket(s): That means data from memory is valid, but we don't know that yet.

  - Data is "dirty" in the other socket(s): Data from memory is "stale" and must be discarded.

- The remote socket returns the snoop response first. Again there are two sub-cases:

  - If the data is "clean" in the other socket, the snoop response will indicate that, but will not contain the data. The CHA/SF/L3 unit will note the clean response and wait for local memory to return the data.

  - If the data is "dirty" in the other socket, the snoop response will contain the data. (Some architectures split the response and return the status before the data, but here I assume that they are together in a single transaction.) The CHA/SF/L3 unit can forward the data to the requesting core. It continues monitoring the address until the local memory returns the "stale" copy, which it discards.
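The four scenarios above can be summarized as a small decision function. This is a hedged Python sketch that ignores split status/data responses and any memory directory; the action strings are descriptive labels, not real protocol messages.

```python
def resolve_remote_miss(first, remote_state):
    """What the home CHA does when it snoops the other socket and reads
    local memory at the same time (no memory directory in this sketch).

    first: "memory" or "snoop", whichever response arrives first.
    remote_state: "clean" or "dirty" in the other socket.
    Returns (source of the data sent to the core, ordered list of actions).
    """
    if first not in ("memory", "snoop") or remote_state not in ("clean", "dirty"):
        raise ValueError("unexpected arguments")
    actions = []
    if first == "memory":
        # Memory data may be stale; it cannot be used until the snoop returns.
        actions.append("hold memory data until snoop response")
    if remote_state == "dirty":
        # The snoop response carries the modified data; memory's copy is stale.
        actions += ["forward snoop data", "discard stale memory data"]
        return "snoop", actions
    if first == "snoop":
        actions += ["note clean snoop response", "wait for memory data"]
    actions.append("forward memory data")
    return "memory", actions


for first in ("memory", "snoop"):
    for state in ("clean", "dirty"):
        print(first, state, resolve_remote_miss(first, state))
```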

The "memory directory" is one or more bits located with the cache line data in DRAM that indicate whether another coherence domain might have a modified copy of the cache line. This bit is set and cleared by the memory controller, based on the particular transaction and state of the returned data.

- For loads from local cores/caches, there is no need to set the bit because the distributed CHA/SF/L3 will be tracking the cache line state.

- For writeback-invalidate or writeback-downgrade of Modified-state cache lines (from either local or remote caches), the memory directory bit can be cleared because only one cache in the system is allowed to have an M-state line, and that cache just gave up write permission and sent the most current copy of the data to memory.

- For loads from remote caches, the decision depends on the state that is granted when the cache line is sent to the requestor. By default, a load request that does not hit in any caches will be returned in "Exclusive" state.

  - Exclusive state grants write permission without additional traffic.

  - For snooped architectures, granting E state by default is a very good optimization because

    - Most memory references are to "private" memory addresses (stack, heap, etc), that are only accessed by one core

    - A significant fraction of those private addresses will become store targets (but they are first accessed by a load, not a store)

    - So this optimization eliminates the "upgrades" that would be required for the lines that are later store targets (if the initial state was "Shared"), and eliminates writebacks of data that is not modified (if the initial state was "Modified")

  - But, since E state lines can be modified, the memory controller has to take the conservative route and set the "possibly modified in another coherence domain" bit in the memory directory.

    - If that bit was not set initially, the entire cache line has to be written back to memory to update the bit.

    - If the bit *was* set initially, then there are too many special cases to consider here....

  - This scenario is one that caches very poorly.

    - If you could track the directory bit for *all* lines of local memory on-chip, then the problem would go away -- but that is too many bits. We have relatively small memories on our Xeon Platinum 8280 systems, but they still have 1.5 billion cache lines per socket, and a full memory configuration is over 12 billion cache lines per socket.

    - If you try to cache the most recently-used memory directory bits, you will end up adding significant complexity to the memory controller, significant opportunities for deadlock/livelock when evicting cached memory-directory entries, and could easily end up generating *more* memory traffic than the baseline.

      - Note that the effective minimum size of a memory write is 512 bits -- one memory directory bit for each of 512 cache lines.

      - With the default 4KiB virtual memory page size, cache line accesses naturally clump into 64-cacheline blocks -- making it hard to use more than 1/8 of the bits per memory directory entry.
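A simplified Python sketch of the memory-directory rules in the list above, plus the storage arithmetic behind "too many bits". Transaction names are hypothetical labels, and the case where the bit was already set on a remote read is deliberately left unmodelled, since its rules are not given here. The line counts are the approximate figures quoted above.

```python
def directory_update(transaction, bit_before):
    """Return (new bit, extra DRAM write needed just to store the bit).

    Simplified sketch; transaction names are hypothetical labels.
    """
    if transaction == "local_read":
        # The on-chip CHA/SF/L3 tracks this line; the bit is left alone.
        return bit_before, False
    if transaction == "modified_writeback":
        # The only M-state copy just came back; clear the bit with the data write.
        return False, False
    if transaction == "remote_read_exclusive":
        if bit_before:
            raise NotImplementedError("bit already set: too many special cases")
        # E state can become M silently, so set the bit conservatively,
        # which costs a full cache-line write back to DRAM.
        return True, True
    raise ValueError(f"unknown transaction: {transaction}")


def full_tracking_bytes(cache_lines):
    """On-chip storage for one directory bit per line of local memory."""
    return cache_lines // 8


BITS_PER_ENTRY = 512          # one 64-byte write: a bit for each of 512 lines
LINES_PER_PAGE = 4096 // 64   # 64 cache lines per 4 KiB page

print(full_tracking_bytes(1_500_000_000))   # 187500000 (about 188 MB)
print(full_tracking_bytes(12_000_000_000))  # 1500000000 (1.5 GB)
print(LINES_PER_PAGE / BITS_PER_ENTRY)      # 0.125
```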

That was probably way more than anyone wanted here.....

Hello again Dear Mr. John McCalpin,

It is a very detailed, very elaborate description. Thank you very much. I will consider these when dealing with the cache coherence of these Skylake-Server processors.

I am planning to run some experiments soon to verify some of these points, and may contact you again if I find anything interesting.

Thanks again.

Hi,

I would like to ask a simple question: can you confirm that the directory structure, i.e., the distributed shared "Snoop Filter" (or part of it), is actually stored in the memory DIMMs? In that case, if the requested data is not in the caches and the corresponding directory information is not in the directory cache, would accessing the data incur two memory accesses, one for the directory lookup/modification and the other for fetching the data itself?

I am asking this as I find different descriptions regarding the placement of this so-called directory.

In the paper https://iacoma.cs.uiuc.edu/iacoma-papers/isca19_2.pdf, section 2.1 says that "the directory is partitioned and physically distributed into as many slices as cores. Each directory slice can store directory entries for a fixed set of physical addresses.".

In another paper, https://multics69.github.io/pages/pubs/pactree-kim-sosp21.pdf, section 3.1.1 says that the directory information is actually stored in the memory media (the description is about Optane DC DIMMs, but I assume it would be the same for normal DRAM DIMMs).

These descriptions make it hard to understand where this so-called directory structure is actually located.

Thank you.

Intel processors have several different kinds of "directories" to help with cache coherence.

- The "Snoop Filter" is a distributed directory (distributed in exactly the same way as the L3 cache) that tracks *all* cache lines that are held in L1 and/or L2 caches (for the same set of cores that share the L3 cache). The Snoop Filter is essentially the same as the "tags" of the inclusive L3 cache used in generations before Skylake Xeon -- it tracks the location and state of locally cached lines, but not the data in those lines.

- The "Memory Directory" is located in DRAM inside the ECC bits of each cache line. This directory tracks whether a cache line *might* be in a dirty state in a cache in a *different socket*. I wrote about the "Memory Directory" and included some references at https://sites.utexas.edu/jdm4372/2023/08/28/memory-directories-in-intel-processors/. The primary benefit of the "Memory Directory" is a large reduction in the snoop traffic between sockets -- leaving the UPI bandwidth available for data. The Memory Directory reduces the local memory latency slightly, but increases the remote memory latency substantially. This is usually the right tradeoff.
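A hedged Python sketch of how the Memory Directory reduces cross-socket snoop traffic for reads of locally homed lines that miss on-chip. It assumes the simplest policy, in which the home agent waits for the DRAM read before deciding whether to snoop; real implementations may snoop earlier, and the function names are hypothetical.

```python
def home_read(directory_bit, remote_state="clean"):
    """Read of a locally homed line that missed in all on-chip caches.

    Simplified policy: read DRAM first, then snoop the other socket only if
    the line's memory-directory bit says it might be modified there.
    Returns (data source, cross-socket snoops sent).
    """
    if not directory_bit:
        if remote_state == "dirty":
            raise ValueError("bit clear, so no remote modified copy can exist")
        return "memory", 0
    # Bit set: snoop; the other socket returns data only if it modified the line.
    return ("snoop" if remote_state == "dirty" else "memory"), 1


def snoop_counts(directory_bits):
    """Snoops sent for a sequence of misses: (without directory, with it)."""
    return len(directory_bits), sum(1 for bit in directory_bits if bit)


print(snoop_counts([False, False, True, False]))  # (4, 1)
```

In this sketch, a set bit serializes the snoop behind the DRAM read, which is the latency price paid for skipping snoops entirely when the bit is clear.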

Both of these are "directories", but the details are strongly influenced by the differences in latency and bandwidth between cores in the same socket and cores in different sockets.

Hi John,

Thank you for your detailed reply. Now, I have a clear understanding of the placement regarding this directory.

Regards,

Wetnao
