Hi,

I have a Skylake server.

As the title suggests, I am trying to find out which CHA a physical address is mapped to. To do this, I proceed as follows:

1) I allocate cache-line-aligned memory (the cache line size is 64 bytes on my system) via posix_memalign(). This memory holds 8 long long variables, 64 bytes in total.

2) I then program the uncore performance counters of each CHA to count the LLC_LOOKUP event, with the umask set so that only LOCAL lookups are measured. (Note that my system is single-socket, so I guess counting REMOTE lookups would not make sense. I am saying this based on my assumption that REMOTE lookups are cross-socket.)

3) I pin my main (and only) thread to a core.

4) I read the LLC_LOOKUP counter of each CHA and record it. Let's call the value recorded for each CHA CHAx_RECORD_START, where x is the CHA number.

5) I increment the first long long variable in the allocated space 100 million times in a for loop. In each iteration, I flush the cache line with _mm_clflush right after incrementing the variable, so that in the next iteration it has to be fetched from DRAM again.

6) I read the LLC_LOOKUP counter of each CHA again and record it. Let's call the value recorded for each CHA CHAx_RECORD_END, where x is the CHA number.

7) I compare the differences CHAx_RECORD_END - CHAx_RECORD_START for each CHA and record them.
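Steps 4, 6, and 7 come down to a per-CHA subtraction of two counter snapshots. The following is only a minimal sketch of that arithmetic: the function names are hypothetical, the snapshots are assumed to have been read elsewhere, and the 48-bit counter width is an assumption that should be checked against the uncore manual for the processor in use.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumption: the uncore counters are 48 bits wide, so a counter
 * that wraps between the two reads still yields the right delta
 * once the subtraction is reduced modulo 2^48. */
#define PMON_COUNTER_BITS 48

/* Difference between two raw counter reads, tolerant of one wrap. */
uint64_t counter_delta(uint64_t start, uint64_t end)
{
    const uint64_t mask = (UINT64_C(1) << PMON_COUNTER_BITS) - 1;
    return (end - start) & mask;
}

/* Compute CHAx_RECORD_END - CHAx_RECORD_START for every CHA. */
void cha_deltas(const uint64_t *start, const uint64_t *end,
                uint64_t *delta, size_t n_cha)
{
    assert(start != NULL && end != NULL && delta != NULL);
    for (size_t x = 0; x < n_cha; x++)
        delta[x] = counter_delta(start[x], end[x]);
}
```

The CHA that owns the address should then stand out as the entry of `delta` that is close to the iteration count, while the others stay near their background level.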

My reasoning was that in each iteration, while incrementing the variable, the core will see that the address is not present in its cache (because we flushed it in the previous iteration). So the core will query the distributed directory (I believe it will query ONLY THE CHA that is responsible for managing coherency of the allocated address, which it finds by running a hash function on the physical address of the allocated space). Is my thinking correct here? Since the managing CHA does not have the address in its cache either (again, because we flushed it in the previous iteration), I expect that CHA to emit an LLC_LOOKUP event.

Is my flow correct? Am I using the correct event (LLC_LOOKUP) for this purpose? Are there any other events I can make use of, such as SF_EVICTION, DIR_LOOKUP, or an event related to LLC misses?

Thanks


(It looks like my reply initially ended up in a forum on "Business Client Software Development" -- this is a much more sensible place....)

This looks like the right approach. I have found that the code is much more reliable if MFENCE instructions are added before and after the CLFLUSH instruction. I typically use 1000 iterations of load/mfence/clflush/mfence for each address, but I have some additional "sanity checks" on the results, with the code re-trying the address if the data looks funny. The technical report linked below describes this in more detail.
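As a rough illustration of the load/mfence/clflush/mfence sequence described above, here is a minimal sketch for an x86-64 system. The function names are hypothetical, the counter reads and the sanity checks mentioned above are left out, and this is not the code from the technical report.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <emmintrin.h>  /* _mm_clflush, _mm_mfence (SSE2) */
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Allocate one zero-filled 64-byte cache line, aligned to 64 bytes.
 * Returns NULL on failure; release with free(). */
volatile uint64_t *alloc_cache_line(void)
{
    void *p = NULL;
    if (posix_memalign(&p, 64, 64) != 0)
        return NULL;
    memset(p, 0, 64);
    return (volatile uint64_t *)p;
}

/* Repeat load / mfence / clflush / mfence on one cache line.
 * Returns the sum of the loaded values so that the loads
 * cannot be optimized away. */
uint64_t probe_line(volatile uint64_t *line, long iterations)
{
    assert(line != NULL && iterations > 0);
    uint64_t sum = 0;
    for (long i = 0; i < iterations; i++) {
        sum += *line;                     /* load the line */
        _mm_mfence();                     /* load completes before the flush */
        _mm_clflush((const void *)line);  /* evict the line from all caches */
        _mm_mfence();                     /* flush completes before next load */
    }
    return sum;
}
```

A measurement would read the per-CHA counters, call `probe_line(line, 1000)`, and read the counters again; that surrounding code is not shown here.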

You can find the address to LLC mapping equations for SKX/CLX processors with 14, 16, 18, 20, 22, 24, 26, and 28 LLC slices at https://repositories.lib.utexas.edu/handle/2152/87595 -- the technical report is the second entry in the list of links on the left side of the page -- the other links provide the "base sequence" and "permutation selector masks" for the various processor configurations. The equations are valid for any address below 256 GiB, except for the 18-slice SKX/CLX processors, where the masks are only good for the first 32 GiB. (I have updated the masks for the 18-slice processors to be valid for the first 256 GiB, but have not pushed them out to a public place yet.)

The report also includes results for the Xeon Phi 7250 (KNL, 68-core, with all 38 CHAs active), and for a 28-slice Ice Lake Xeon (Xeon Gold 6330). Unlike previous product transitions which have left the mapping unchanged for fixed slice counts, the Ice Lake Xeon 28-slice processor uses a different hash than the SKX/CLX 28-slice processors. I am still gathering data on the ICX 40-slice Xeon Platinum 8380 processor.


With the method I described above (modified so that I issue an mfence instruction just before and just after each flush, and so that I monitor LLC_LOOKUP.DATA_READ instead of LOCAL), I found one CHA that accounts for more than 90% of the LLC_LOOKUP.DATA_READ events observed across all CHAs. I concluded that this CHA is responsible for managing coherency of the memory address I allocated for my benchmark. In other words, I found the CHA mapping for my allocated address.

I have the CHA-to-core mapping and I know how the CHAs are laid out on an SKX server, thanks to @McCalpinJohn 's work on using the CAPID6 register and performance counters programmed to measure traffic across the mesh. Hence, I know the full mapping information. Also, with the help of the paper https://dl.acm.org/doi/pdf/10.1145/3316781.3317808 (page 2), I know that when a core accesses an address that is not in its local cache, it queries the distributed directory (so, as I understand it, an access to the address I allocated above will query the CHA I found above).
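The "more than 90% of the events" criterion can be written down as a small check over the per-CHA deltas. This is only a sketch with hypothetical names; the threshold is a parameter, and returning -1 when no CHA dominates is one possible way to trigger a retry of the measurement.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Return the index of the CHA whose share of all counted events is
 * at least min_share (for example 0.9), or -1 if no CHA dominates
 * or if nothing was counted at all. */
int dominant_cha(const uint64_t *delta, size_t n_cha, double min_share)
{
    assert(delta != NULL && min_share > 0.0 && min_share <= 1.0);
    uint64_t total = 0;
    size_t best = 0;
    for (size_t x = 0; x < n_cha; x++) {
        total += delta[x];
        if (delta[x] > delta[best])
            best = x;
    }
    if (n_cha == 0 || total == 0)
        return -1;
    double share = (double)delta[best] / (double)total;
    return share >= min_share ? (int)best : -1;
}
```

With deltas of 5, 950, 20, and 25, the second CHA holds 95% of the events and is reported; with an even spread the function returns -1.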

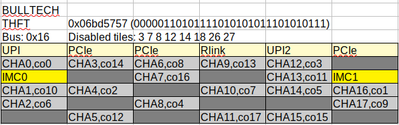

Below is a picture exposing topology information of my server's only socket.

As a next step, I wanted a sanity check to make sure that my conclusion is right, so I set up a small experiment. I created two threads and bound them to cores 2 and 5. I used a condition variable to let the threads take turns modifying a variable 100 million times (the variable at the address mentioned above, for which I have the CHA mapping; suppose that CHA is 6 in my example). By making the threads take turns, I aimed to evict the variable from the waiting core's cache, so that when its turn comes it has to query the distributed directory to find out whether the variable is stored somewhere on die. Meanwhile, I set up uncore performance counters to monitor traffic on the mesh.

Here is what I observed: the CHAs between core 2 and core 5 (including the CHAs co-located with cores 2 and 5) reported high counts when the uncore performance counters were programmed to measure the VERT/HORZ_RING_BL_IN_USE events. However, I expected CHA 6 to report high counts as well because of the MESIF protocol (since it is supposed to be queried), but that was not the case. Then I figured that BL stands for block/data and that I might need to measure a different kind of traffic. Reading through the uncore performance monitoring manual, I also found AK (Acknowledge), IV (Invalidate), and AD (Address) events for traffic measurement. I found explanations for these in https://www.intel.com/content/dam/www/public/us/en/documents/design-guides/xeon-e5-2600-uncore-guide.pdf and I hope the same holds for SKX. I tried all of them, but CHA 6 (I am assuming CHA 6 is mapped to my address in each run, so take that for granted for the sake of the example) reported low counts in all of them.

What could be wrong here? Am I measuring the wrong events? I just want to observe the flow described on page 2 of the paper I linked, using uncore performance counters programmed to measure traffic.
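The turn-taking scheme described above could look roughly like the sketch below. All names are hypothetical, thread pinning and the uncore counter programming are omitted, and the actual test code is not shown in this thread.

```c
#include <assert.h>
#include <pthread.h>
#include <stdint.h>
#include <stdlib.h>

typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  cond;
    int             turn;    /* id of the thread allowed to write next */
    long            rounds;  /* number of writes per thread */
    uint64_t       *value;   /* the variable that bounces between cores */
} pingpong_t;

typedef struct { pingpong_t *pp; int id; } worker_arg_t;

/* Each worker waits for its turn, increments the shared variable,
 * then hands the turn to the other worker. */
static void *worker(void *p)
{
    worker_arg_t *a = p;
    pingpong_t *pp = a->pp;
    for (long i = 0; i < pp->rounds; i++) {
        pthread_mutex_lock(&pp->lock);
        while (pp->turn != a->id)
            pthread_cond_wait(&pp->cond, &pp->lock);
        (*pp->value)++;
        pp->turn = 1 - a->id;
        pthread_cond_signal(&pp->cond);
        pthread_mutex_unlock(&pp->lock);
    }
    return NULL;
}

/* Run two workers; returns the final value, which is 2 * rounds. */
uint64_t run_pingpong(long rounds)
{
    assert(rounds > 0);
    _Alignas(64) uint64_t shared = 0;  /* start of its own cache line */
    pingpong_t pp = { .turn = 0, .rounds = rounds, .value = &shared };
    worker_arg_t args[2] = { { &pp, 0 }, { &pp, 1 } };
    pthread_t th[2];

    pthread_mutex_init(&pp.lock, NULL);
    pthread_cond_init(&pp.cond, NULL);
    for (int i = 0; i < 2; i++)
        if (pthread_create(&th[i], NULL, worker, &args[i]) != 0)
            abort();
    for (int i = 0; i < 2; i++)
        pthread_join(th[i], NULL);
    pthread_cond_destroy(&pp.cond);
    pthread_mutex_destroy(&pp.lock);
    return shared;
}
```

One thing to keep in mind with this design is that the mutex and the condition variable live in cache lines of their own, and those lines also move between the two cores, so the mesh counters see that traffic as well as the traffic for the variable under test.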

Best regards


The uncore performance monitoring reference manual for the SKX/CLX processors includes a large number of changes from the Xeon E5-2600 processors, so you will definitely want to review your performance counter programming.

The place to start looking is: https://www.intel.com/content/www/us/en/developer/articles/technical/intel-sdm.html

The uncore reference manuals are listed about 70% of the way down the page -- the one you want is Intel® Xeon® Processor Scalable Memory Family Uncore Performance Monitoring Reference Manual

Your experiment seems reasonable but the particular memory access pattern you are using might be activating different performance counter events than you expect. Since Xeon E5 v3 (Haswell), Intel server processors have included a feature called the "HitMe cache" that is used to improve the performance of some classes of cache-to-cache transfers. There are a few words at https://www.intel.com/content/www/us/en/developer/articles/technical/xeon-processor-scalable-family-technical-overview.html and a few words in the SKX Uncore performance monitoring guide. Unfortunately to give a reasonable interpretation of those few words requires a lot of experience and a lot of work to reverse-engineer what the transactions mean. I don't know exactly which transactions the HitMe cache handles, but somewhere I got the impression that it is intended to handle the specific case you are using -- migrating dirty data back and forth between two cores efficiently. (Many implementations will require two transactions per direction -- one to read the data from the previous owner and a second to "upgrade" the cache state to give the new core exclusive access and invalidate the line from other cores. Transferring the dirty data in the Modified state is the wrong answer most of the time, but the right answer for this case, so a history-based mechanism that monitors traffic patterns and switches from one transaction pattern to the other can be a benefit.)

Once you have the correct documentation for the uncore events, I would look at the sub-events of HITME_LOOKUP and LLC_LOOKUP at CHA 6.

Any strategy for learning about how this works will have to pay careful attention to the different types of traffic on the different meshes. For your test configuration, I would expect to see VERT_RING_AD_IN_USE.UP at CHA 3 (for requests coming from core 2 to CHA 6) and also at CHAs 13 and 12 (for requests coming from core 5 to CHA 6). These requests should also show up on HORZ_RING_AD_IN_USE at CHA 9 (probably counted as going right since this is an odd-numbered column, but you should check both directions) and will show up on HORZ_RING_AD_IN_USE at CHA 6 in both the left and right directions.

It is less clear which path the data will take. For core 2 reading data that is dirty in core 5's cache, a slow implementation would be for core 5 to send the data along VERT_RING_BL_IN_USE.UP at CHAs 13 and 12, then HORZ_RING_BL_IN_USE at CHA 9 and CHA 6. Then CHA 6 would forward the data to core 2 using HORZ_RING_BL_IN_USE at CHA 3 and VERT_RING_BL_IN_USE at CHA 4.

I would not be surprised if the repeated dirty transfer activated a more direct data route, using HORZ_RING_BL_IN_USE at CHAs 10 and 2, with only commands, responses, and possibly invalidates going to CHA 6 on the AD, AK, and IV rings.

If you take enough data and stare at it long enough, it is possible that things may start to make sense....


Hi again,

First of all, thanks for your valuable input. In my experiments above, I observed that one of the CHAs produced most of the LLC_LOOKUPs and HITME_LOOKUPs, and I concluded that it is the CHA the allocated memory is mapped to. The traffic counters were also helpful to monitor, but the *AD* ring did not always produce the results I expected for coherency traffic (along the path to the mapped CHA). There may be things I am not considering (for example, I did not disable turbo boost during the benchmarks, and there may have been other activity on my server that affected the results), but for now I will take the *LOOKUP events for granted.

As a next step, I want to observe the following (please check the topology of the die on my server in my previous post):

Suppose that the allocated space is mapped to CHA 17. Again, I have 2 threads bound to cores, ping-ponging a cache line between each other 50 million times (each thread modifies a variable in the cache line, taking turns, without flushing, so the data is always on die). In the first case, the threads are bound to core 0 and core 14 (took ~425 seconds). In the second case, the threads are bound to core 7 and core 5 (took ~441 seconds). I repeated each case 10 times and averaged the results. I expected the second case to be much faster, but it was not. I thought the round-trip time between the two cores would be much lower, since the cores are close to each other and, on top of that, the memory they are ping-ponging is managed by a nearby CHA. How should I interpret this? I will double-check that my topology information is correct. I would be grateful for an answer from @McCalpinJohn
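To put the two timings on a per-transfer scale, the elapsed time can be divided by the number of hand-offs. The sketch below assumes that each of the 50 million ping-pongs counts as one hand-off; the helper name is hypothetical.

```c
#include <assert.h>

/* Average time per hand-off in microseconds, given the total
 * elapsed time in seconds and the number of hand-offs. */
double us_per_handoff(double elapsed_s, double handoffs)
{
    assert(handoffs > 0.0);
    return elapsed_s * 1e6 / handoffs;
}
```

Under that assumption, `us_per_handoff(425.0, 50e6)` is 8.5 microseconds and `us_per_handoff(441.0, 50e6)` is about 8.82 microseconds. Both are much larger than what a single on-die cache-to-cache transfer would normally be expected to cost, which suggests (as an inference, not something measured in this thread) that thread wake-up through the condition variable may dominate the run time and hide the effect of mesh distance.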

Thanks and best regards.


I would have expected your first case to be slower than the second, but I have not done much ping-pong latency testing on these systems.

It should be easy to tell if your topology is correct by reading a large block of memory from a single core and measuring all the vertical and horizontal data traffic on the BL rings.

Have you tried a case where your memory address is mapped to the CHA at the same tile location as one of the cores?


I verified my topology again using the BL rings, and it is most certainly correct. What caught my attention is that most of the data flow came from IMC0 and not IMC1 in all the cases I observed. Is that expected behavior? Is IMC0 the primary memory controller?

And yes, I have tried a case where my memory address is mapped to the CHA at the same tile location as one of the cores, and it was certainly not the fastest case I observed.

Now, I suspect 2 things:

1) Maybe the address-to-CHA mapping I found is wrong (I will double-check that again).

2) Otherwise, there may be some factors that I am not considering in the ping-pong case.

Thanks, I will update the post with my findings if there are any.


Unless the processor is booted in SNC (Sub-NUMA-Cluster) mode, IMC0 and IMC1 each service 1/2 of the addresses. This should be very easy to see when doing large reads using a single core -- the BL ring links from each of the IMCs to the requesting core should be carrying equal traffic.

If you are looking at single addresses, it is possible to accidentally make biased choices. One of my systems has memory alternating between IMC0 and IMC1 with a 256 Byte granularity. It is easy to accidentally pick addresses that are biased in this case (e.g., 4KiB-aligned addresses are always on IMC0). Most systems have a more complex interleave.
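The 256-byte alternation mentioned above is simple enough to write out, and doing so shows why 4 KiB-aligned addresses all land on the same controller. The sketch models only that one simple interleave (the function name is hypothetical); as noted, most systems use something more complex.

```c
#include <stdint.h>

/* Simple model: memory alternates between IMC0 and IMC1 every
 * 256 bytes, so the controller is selected by physical address
 * bit 8. */
unsigned imc_for_address(uint64_t paddr)
{
    return (unsigned)((paddr >> 8) & 1u);
}
```

Any 4 KiB-aligned address has bits 0 through 11 equal to zero, so bit 8 is zero and `imc_for_address` returns 0 for every such address, while an address at offset 256 within a page returns 1.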

It might be interesting to compare your address-to-CHA mapping with the values computed from the base sequence and permutation selectors that I derived: http://dx.doi.org/10.26153/tsw/14539 . The Permutation Selector masks that are provided with that report are only valid for addresses in the range of 0-32GiB, but the set of masks in the attached file extends the validity to 0-256GiB. (The base sequence is unchanged.)


Hi,

@McCalpinJohn I am using the PermSelectMasks.txt that you provided to find CHA of a memory address. I followed your algorithm in your technical paper. In the attachments, you can find my code to figure out CHAs using hash function. Base sequence file is also attached.

The CHA I find via performance counters and the CHA I compute with the hashing function are not the same. Do you have any pointers as to where my code goes wrong?

Thanks and best regards.


You forgot to check the return code of the virtual to physical translation step. Recent versions of Linux block users from accessing /proc/self/pagemap -- only root is allowed to look up those translations.
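A translation step with the missing error checks might look like the sketch below. The function name and return codes are hypothetical. The entry layout used here (page frame number in bits 0 to 54, "present" flag in bit 63) follows the Linux pagemap documentation; a zero page frame number is treated as "hidden from this user", on the assumption that the kernel zeroes that field for unprivileged readers.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stdint.h>
#include <unistd.h>

/* Translate a virtual address of the calling process to a physical
 * address via /proc/self/pagemap.
 * Returns 0 on success, -1 if the file cannot be opened or read,
 * -2 if the page is not present in RAM, -3 if the page frame number
 * is zero (typically: not running with sufficient privilege). */
int virt_to_phys(const void *vaddr, uint64_t *paddr)
{
    assert(paddr != NULL);
    long page_size = sysconf(_SC_PAGESIZE);
    if (page_size <= 0)
        return -1;

    int fd = open("/proc/self/pagemap", O_RDONLY);
    if (fd < 0)
        return -1;

    uintptr_t va = (uintptr_t)vaddr;
    uint64_t entry = 0;
    /* One 64-bit entry per virtual page. */
    off_t offset = (off_t)(va / (uintptr_t)page_size) * (off_t)sizeof(entry);
    ssize_t n = pread(fd, &entry, sizeof(entry), offset);
    close(fd);
    if (n != (ssize_t)sizeof(entry))
        return -1;

    if ((entry >> 63) == 0)
        return -2;                                 /* page not present */
    uint64_t pfn = entry & ((UINT64_C(1) << 55) - 1);
    if (pfn == 0)
        return -3;                                 /* PFN hidden */

    *paddr = pfn * (uint64_t)page_size + (uint64_t)(va % (uintptr_t)page_size);
    return 0;
}
```

A caller should refuse to go on to the hash computation unless the return value is 0; silently hashing a zero physical address would give a valid-looking but meaningless CHA number.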

You really ought to be computing CHA numbers for 4096 consecutive cache line addresses (aligned to a 4Ki cache-line boundary). That way you would be able to see if the numbers vary, and you should be able to confirm that the output is a binary permutation of the original sequence.
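One way to run that check is sketched below, under a loudly stated assumption: "binary permutation" is read here as "the observed sequence equals the base sequence with every index XORed by one constant". That reading, and the function name, are assumptions made for illustration and are not taken from the report's text.

```c
#include <assert.h>
#include <stddef.h>

/* Search for a constant p such that observed[i] == base[i ^ p] for
 * every i in [0, n). n must be a power of two (4096 for one
 * 4Ki-cache-line block). Returns the first such p, or -1 if the
 * observed sequence is not an XOR permutation of the base sequence. */
long find_xor_permutation(const int *base, const int *observed, size_t n)
{
    assert(base != NULL && observed != NULL);
    assert(n > 0 && (n & (n - 1)) == 0);
    for (size_t p = 0; p < n; p++) {
        size_t i = 0;
        while (i < n && observed[i] == base[i ^ p])
            i++;
        if (i == n)
            return (long)p;
    }
    return -1;
}
```

Here `observed` would hold the CHA numbers computed (or measured) for the 4096 consecutive cache lines, and `base` the published base sequence. A return value of -1 points to a bug in the address handling or in the hash code rather than to an unusual address.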


It turns out I had to use uintptr_t as the input parameter type for the functions getIndex, findCHAByHashing and compute_perm. With this change, the CHAs I compute are 100% consistent with the CHAs I found via performance counters. I tried this with 100'000 different memory addresses.
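The post does not say which type was used before the fix, so the following is only an illustration of how a narrower parameter type can change the result of a hash built from address bits. It assumes that the hash takes the parity of selected address bits and that the earlier type was a 32-bit integer; both are assumptions, and the function names are hypothetical.

```c
#include <stdint.h>

_Static_assert(sizeof(uintptr_t) >= 8, "sketch assumes 64-bit addresses");

/* Parity (XOR of all bits) of the address bits selected by mask,
 * computed on the full-width address. */
int masked_parity(uintptr_t addr, uintptr_t mask)
{
    uintptr_t v = addr & mask;
    int parity = 0;
    while (v != 0) {
        parity ^= 1;
        v &= v - 1;  /* clear the lowest set bit */
    }
    return parity;
}

/* Same computation with 32-bit parameters: any address or mask bit
 * at position 32 or above is lost before the function runs. */
int masked_parity_narrow(uint32_t addr, uint32_t mask)
{
    return masked_parity((uintptr_t)addr, (uintptr_t)mask);
}
```

For an address and mask that both have bits 0 and 33 set, the full-width version sees two selected bits and returns 0, while the narrow version sees only bit 0 and returns 1.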
