Hi,

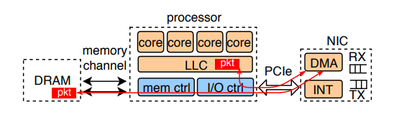

We have a setup in which an FPGA sends network packets to the CPU via its own DMA engine over a PCIe I/O port. As far as I know, in this scenario the packets are written to the LLC and to DRAM at the same time. Here is a paper depicting the scenario:

https://par.nsf.gov/servlets/purl/10173128

This is the picture that shows how packets travel.

So, here are my questions:

1) Which LLC slice will a received packet be stored in? In what ways are the CHAs involved in this?

2) How can we measure this flow? Are there any performance counter configurations that measure it? I want to make sure that packets really travel as depicted here, and also find out where a packet ends up.

Thanks and best regards.


DMA to/from system memory uses the same address hash that every agent uses for system memory addresses. Each 64B block is mapped to one of the L3/CHA slices for processing. For DMA traffic from IO to system memory, the default behavior is for the L3 to cache the incoming data. This is made completely explicit in Section 2.2.4 of the Intel Xeon Processor Scalable Memory Family Uncore Performance Monitoring Reference Manual (document 336274-001, July 2017) (emphasis mine):

> All core and IIO DMA transactions that access the LLC are directed from their source to a CHA via the mesh interconnect. The CHA is responsible for managing data delivery from the LLC to the requestor and maintaining coherence between the all the cores and IIO devices within the socket that share the LLC.
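Because the mapping is done per 64B block, a single packet is spread over many cache lines, and each of those lines can hash to a different L3/CHA slice. The slice hash itself is not shown here; the sketch below only enumerates the 64B lines that a DMA write of a given length would touch. The function name, the buffer address, and the 1500-byte packet size are illustrative assumptions, not values from this thread.

```python
from typing import List

CACHE_LINE_BYTES = 64  # block size handled by one L3/CHA slice, as stated above


def cache_lines_touched(phys_addr: int, length: int) -> List[int]:
    """Return the base address of every 64B line overlapped by
    the byte range [phys_addr, phys_addr + length)."""
    if length <= 0:
        return []
    first = phys_addr // CACHE_LINE_BYTES
    last = (phys_addr + length - 1) // CACHE_LINE_BYTES
    return [line * CACHE_LINE_BYTES for line in range(first, last + 1)]


if __name__ == "__main__":
    # Hypothetical example: a 1500-byte packet written to a buffer
    # that starts 32 bytes into a cache line.
    lines = cache_lines_touched(0x1000_0020, 1500)
    print(len(lines), hex(lines[0]), hex(lines[-1]))
```

Each returned line address is hashed to a slice independently, so there is generally no single "slice where the packet is stored". A buffer that does not start or end on a 64B boundary also produces partial cache line writes at its edges.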

Tracing the traffic flow can be done in the performance monitoring units of the IIO blocks, the IRP block, the CHAs, and (for cross-socket traffic) the UPI blocks.

- At the IIO level, once you find the specific PCIe interface in use, you can use the mask/match filter to measure traffic that is specific to your IO device.

- The IRP block manages IIO traffic to coherent memory, so its counters can monitor coherent memory operations that originate from IIO.

- The CHAs have sophisticated filtering capability by opcode and LLC state. Several of the opcodes are described as being specific to IIO functionality. Section 2.2.9 of the uncore performance monitoring manual provides examples of filter configurations for measuring:

  - LLC traffic due to DMA writes to system memory that miss in the LLC

  - number of PCIe full cache line and partial cache line writes

  - number of PCIe read requests

- The M2M block manages the interface between the mesh and the memory controllers. Filters enable matching on both opcode and address range!

- The UPI Link Layer performance monitors allow matching by opcode. I have not tested these to see which opcodes are used by DMA traffic.

- The UBOX is the intermediary for interrupt traffic, including interrupts from IO devices.
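As a starting point for using the blocks listed above, it helps to see which uncore PMU instances the system exposes. The sketch below assumes a Linux system whose kernel uncore driver registers PMUs under `/sys/bus/event_source/devices` with names of the form `uncore_<block>_<n>` (for example `uncore_cha_0`); the exact names can differ between kernels and processors, so treat the pattern as an assumption. The helper `group_uncore_pmus` is a hypothetical name introduced for illustration.

```python
import os
import re
from collections import defaultdict
from typing import Dict, Iterable, List

# Standard Linux location where perf PMUs are registered.
SYSFS_PMU_DIR = "/sys/bus/event_source/devices"

# Uncore blocks mentioned in the reply above.
BLOCKS = ("iio", "irp", "cha", "m2m", "upi", "ubox")

# Assumed naming scheme: uncore_<block> or uncore_<block>_<index>.
_PMU_RE = re.compile(r"^uncore_(" + "|".join(BLOCKS) + r")(?:_(\d+))?$")


def group_uncore_pmus(names: Iterable[str]) -> Dict[str, List[str]]:
    """Group PMU device names by uncore block, sorted by instance index."""
    grouped = defaultdict(list)
    for name in names:
        match = _PMU_RE.match(name)
        if match:
            index = int(match.group(2)) if match.group(2) else -1
            grouped[match.group(1)].append((index, name))
    return {block: [n for _, n in sorted(items)]
            for block, items in grouped.items()}


if __name__ == "__main__":
    if os.path.isdir(SYSFS_PMU_DIR):
        for block, pmus in group_uncore_pmus(os.listdir(SYSFS_PMU_DIR)).items():
            print(block, len(pmus), pmus)
    else:
        print("no perf PMU directory found; is this a Linux system?")
```

The number of `cha` instances reported this way is the number of L3/CHA slices that can receive DMA lines, so per-slice counts need one counter programmed on each of them. Which events and filters to program on each PMU still has to come from the uncore performance monitoring manual.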
