- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I have a tree-like datastructure. Each node of the tree is four `float`*4 vectors (i.e., 64 bytes, or one cache line). Semantically, during traversal, some pseudo-C++ would look like:

//Four loads. // Note that `node` is a 64-byte aligned type. __m128 v0 = node->v0; __m128 v1 = node->v1; __m128 v2 = node->v2; __m128 v3 = node->v3; // Do stuff with `v0`, `v1`, `v2`, and `v3`. // ...

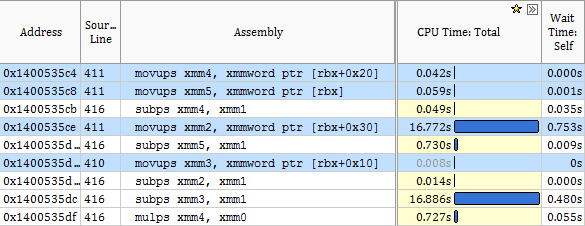

On an Intel 990X (Westmere Gulftown), I've gotten the following VTune trace from a traversal roughly corresponding to the above:

I've highlighted the loads from `node`. As you can see, the compiler has chosen to load them in the order `v2`, `v0`, `v3`, `v1`, with a few instructions from the later processing interleaved in. The CPU time is most of the traversal cost. The column that varies with it is "Wait Time: Self", which seems strange.

Question 1: Why does "Wait Time: Self" correlate with CPU time? The docs say it's for synchronization primitives, but here it's (presumably) for a cache miss?

Question 2: Why don't the first two loads stall?

Question 3: Why does the third load stall? The first two loads are precede it on the same cache line, so if they're resident in the cache, then the third should be as well!

Thanks,

Ian

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Ian,

The CPU Time column doesn't point precisely to instructions but with some skid (usually a few instructions but in some cases it could be dozens of instructions). This is due to how sampling mechanism working. So you should take this skid into account when analyzing data on assembly level.

Also note that processors executes instructions out of order, plus we have hardware prefetcher, plus we can have some other non-trivial factors which could lead to such uneven distribution of clockticks samples between loads.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Additional to Dmitry's response, and more like what you are observing, the IA32 and Intel64 architectures permit you to perform a move from memory to register .AND. continue executing instructions prior to move completion as long as you do not use the contents of the register being moved to. Should you attempt to use the targeted register of the move prior to the arrival of the data, then that instruction stalls.

In your above screenshot, the stalls are likely occurring at the subps instructions (due to them immediately referencing the targeted register(s)), and the instruction skew that Dmitry refers to results in the instruction following the subps to get billed for the operation. Like he said "take this skid into account when analyzing data on assembly level".

Note that the source code snip you showed omitted the "Do stuff..." and the assembly showed not only the loads but a subtraction operations of "Do stuff..." relating to v0:3.

If there is "other stuff" that can be done before, "Do stuff..." that uses the registers (used by local copies of v0:3), .AND. that is not too register intensive, then place (some of) "other stuff" in front of the operations on v0:3. IOW you assist the compiler in interleaving instructions.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Ian,

Answering your first question about the wait time: Since your hotspots have high CPU Time (over 16 seconds), your program is very likely to be preempted by the OS while executing those hotspots, hence you see the correlation between the Wait Time and the CPU Time.

I would personally expect to see the correlation with Inactive Time, instead of Wait Time, since there's no explicit synchronization in the code, but the OS might have used some synchronization mechanisms, while scheduling your thread off the CPU, which enabled the VTune driver to classify the inactivity time as Wait Time.

In essence, both Wait Time and Inactive Time are inactivity times, when your thread is not executing, and their duration depends more on the activity of other threads and overall system load.

Thanks,

Stas

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all,

Thanks for the replies; very helpful. Just to be sure I've got it:

The first two loads would stall, but because their results are not yet required and possibly due to superscalar or out-of-order issuing, execution continues until the subtraction which, since `xmm4` is the destination of the first load and is required to execute it, stalls. However, due to instruction skid (an artifact of the profiler), we see the load come up on the following instruction, which happens to be the third move. During this delay, the OS is likely to page the process out, resulting in an increase in the "wait time" metric.

I tried various rearrangings of the instructions to try to interleave some more computation after the loads, but unfortunately most of the computation for a node depends on the node. I also tried even more things to optimize it (that load is 3/4 the runtime of my program!) but couldn't really decrease it. I'd appreciate some more suggestions for what to try if this problem seems interesting.

Here's a few versions of pseudo-C++ (simplified to various degrees for maximal convenience):

Recursive traversal (algorithm-level pseudo-code):

void traverse(const Vec8f& query, const Node* pnode, Result* result) {

//Try to convince compiler to load 64-byte node (this line is the pseudocode in my OP).

Node node = *pnode;

if (node.type.is_interior) {

//We can easily compute our child pointers (simple unpacking operations).

const Node* child0 = node.calculate_child0();

const Node* child1 = node.calculate_child1();

//[this line marked for reference below]

//Calculate whether we need to traverse our children. Functions are math-heavy.

// Note that they do not depend on dereferencing `child0` or `child1`.

calculate_should_traverse0(node,query,result);

calculate_should_traverse1(node,query,result);

//Recurse. The tests involve very simple logic.

if (should_traverse_0_only(result)) {

traverse(query, child0, result);

} else if (should_traverse_1_only(result)) {

traverse(query, child1, result);

} else if (should_traverse_0_and_1(result)) {

if (should_traverse_0_first(result)) {

traverse(query, child0, result);

traverse(query, child1, result);

} else {

traverse(query, child1, result);

traverse(query, child0, result);

}

}

} else { //Is leaf

calculate_math_and_memory_intensive_functions(node,result);

}

}

void driver(const Vec8f& query, const BinaryTree& tree, Result* result) {

traverse( query, tree.root, result );

}

More-accurate traversal (I actually use a programmer-managed stack to unroll the recursion. Also, some calculations have temporaries):

https://pastebin.com/2cgnEXAq

The actual code (only minor formatting and cleanup):

https://pastebin.com/2V24nFFh

The behavior of the traversal is that very few leaf nodes get processed. Most of the traversal touches only internal nodes. To this end, I have arranged the nodes to be flat in-memory. Also, the child that is most likely to be chosen in the traversal (one can tell in-advance) immediately follows its parent, to make maximal use of continuous-access hardware prefetching.

One thing I tried was to add calls to `_mm_prefetch(...)` for both `child0` and `child1`, at the marked line. My thinking was that if we issue loads for both nodes, then calculate whether we need those nodes, they will both be ready in-cache by the time we have to recurse to one of them. That is, most of the computation for a node is to decide which children to traverse to, so speculatively loading both regardless should be a win—and on the next loop iteration, we won't have a problem doing the computation because that node was one of the children that got loaded. Unfortunately, manual prefetching seemed to have no impact on performance (either positive or negative).

Now I'm thinking that perhaps the first (root) node or recursing to nodes-popped-from-the-stack could be causing most of the delay? This maybe wouldn't be picked up by the hardware prefetcher either? Thoughts? Ideas?

Thanks again!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

>>During this delay, the OS is likely to page the process out, resulting in an increase in the "wait time" metric

No, the instruction stalls until the memory finished being fetched (from L1, or L2 or L3 or RAM, or cache/RAM on other socked in event of multi-CPU system). Should the O/S coincidently decide to preempt the stalled thread, the preemption would not occur until after the memory/cache stall completes. Only a fault would backup the instruction pointer.

Copying the node...

Please show the declaration of the class/struct Node (TypeNode).

What is the value of MCRT_MAX_BVH_TRAVERSAL_FRAMES?

What is the worst case number of nodes?

I suggest if you intend to copy the node you experiment with making the following changes:

a) Have your stack_nodes array contain the copy of the nodes as opposed to the pointer to the nodes. This may reduce the load on the limited number of TLB's the core has available. (if you modify a copy then you must copy it back when you unstack).

b) align stack_nodes to 64 byte address (I assume your TypeNode nodes are aligned).

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

`TypeNode` is a fairly complex structure. The code is here (https://pastebin.com/fkkBYLuS), but describing the memory layout is perhaps more informative. Essentially, it is four vectors, each representing three `float`s (in pairs, they semantically represent a 3D box, `Math::AABB`). In the unused fourth channels, I pack a description of the node and children indices (if it's a leaf node, the children indices are to objects, not other nodes):

Bytes [ 0–15]: `__m128` representing a Vec3f

Bytes [16–31]: `__m128` representing a Vec3f

Bytes [32–47]: `__m128` representing a Vec3f

Bytes [48–63]: `__m128` representing a Vec3f

Byte 12: information byte

Bytes [28-31]: upper 4 bytes of 6-byte child 0 index

Bytes [44-45]: lower 2 bytes of 6-byte child 0 index

Bytes [46-47]: upper 2 bytes of 6-byte child 1 index

Bytes [60-63]: lower 4 bytes of 6-byte child 1 index

`MCRT_MAX_BVH_TRAVERSAL_FRAMES` is currently `256u`. Most trees are not close to this deep, but most trees are also unbalanced (on-purpose).

Worst case number of nodes is not well-bounded. My small test has ~1e4, but ~5e9 is a plausible real-world use case (though 1e5–1e8 would be more-common). In the future, I want to support more. Note that with 6 bytes, I can index ~3e14, which should be enough for a while.

I ran a test removing the leaf node calculations (the `calculate_math_and_memory_intensive_functions(...)` in the pseudo code), and the traversal ran in a few milliseconds. I'm not fully sure that the compiler didn't optimize something important away, but the assembly seemed to be okay. From this, I think perhaps the main problem is that the leaf node calculations cause a cache miss the following loop. I thought perhaps manually keeping the top two nodes on the stack in registers might help with this, but maybe something similar can be attained by storing copies of all nodes on the traversal path in the stack as you suggest. Perhaps both.

Anyway, I like the idea of making aligned copies on the stack. The idea is to make the TLB lookup only for the node residing on the stack, not two lookups (one for the pointer on the stack, the other for the node in memory the pointer points to), right? (It's a bit nontrivial to try straight off, since the structure `reinterpret_cast`s and offsets itself to get the pointer, so a stack copy is bogus, but this could be fixed.)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

One thing I would suggest to do is run VTune General Exploration analysis on your code if you haven't done this yet. This will show true performance bottlenecks from micro-architecture point of view. Most likely this will be memory latency but still would be great to confirm.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The question you have to resolve is:

Is the cost of copying the nodes to the stack (less the savings in potential TLB loads), less than the cost of the potential TLB loads (and potential extra cache loads). The only way (barring full simulation) is to profile both ways.

You may also want to consider splitting your node into two parts if possible. It may be effective to split the node by the portion used for traversal, and the portion used for computation. (This may work if you traverse more often than compute.)

An additional alternative is to allocate your nodes as an array of these nodes (as a private heap of these nodes) as opposed of obtaining the nodes individually via new. Should you allocate individually, you will have at least one cache line of non-node data interspersing each node. Depending on how you allocate, using individual allocations, the node placements may be all over the Virtual Address space (exasperating TLB loads).

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all,

Dmitry Ryabtsev (Intel) wrote:I didn't even know about this! The summary metrics highlighted end up being CPI Rate (1.114), Retire Stalls (0.625), Instruction Starvation (0.166) and Branch Mispredict (12.1%).

One thing I would suggest to do is run VTune General Exploration analysis on your code if you haven't done this yet.

I took a look at branch mispredict, and the trace records high numbers for instructions that aren't branches or even jumps. E.g., for one very simple virtual method:

Block 1: move byte ptr [r8+0x80], 0x1 ; BR_MISP_EXEC.ANY = 1680000 ret ; BR_MISP_EXEC.ANY = 1440000

Just as for profiling, am I seeing instruction skid? Possibly, is the virtual call making branches that mispredict somehow?

---

jimdempseyatthecove wrote:To investigate this, I implemented different versions of the nodes and profiled all of them. The tree is initially constructed from 80-byte nodes, and can then be converted to 64-byte packed nodes (the version given in my original posts). I also made a version that's 16 bytes and uses tons of nasty fixed point. I've attached these to this post, in-case anyone wants to look at them.

Is the cost of copying the nodes to the stack (less the savings in potential TLB loads), less than the cost of the potential TLB loads (and potential extra cache loads). The only way (barring full simulation) is to profile both ways.

For comparison, I also tested a tweak of the 64-byte node that has 16 padding bytes (making it 80 bytes also). I tested all of these in conjunction with your above suggestion to load on the stack. Here are the results summarized on a small test case:

Pointers, Copy to stack Simple (80 byte nodes): 6.903s, 6.875s Packed (64 byte nodes): 10.275s, 10.272s Packed (64+16 byte nodes): 10.152s, 10.284s FixedPt (16 byte nodes): 7.446s, 7.265s

The fixed-point node type seems to be slower due to its complex (and relatively unoptimized) implementation. Also, due to the fixed-point inaccuracies, the traversal must touch slightly more. However, not me nor anyone in my lab can explain why the 64 and 64+16 byte packed nodes are slowest.

The 64-byte packed node type should be thought of as a very simple modification of the 80-byte node. In the 80-byte node, there are two 8-byte indices. In the 64-byte version, these are instead both 6-byte indices, split into 4-byte and 2-byte pieces and stored into some padding bytes. To unpack an index is just a `<<` and an `|`:

static_cast<size_t>( static_cast<uint64_t>(index_child0_lower) | (static_cast<uint64_t>(index_child0_upper)<<32) );

I compared profiles of both the 80-byte and (64+16)-byte nodes, and the slowdowns were in the same places—just bigger for the (64+16)-byte one.

jimdempseyatthecove wrote:I already do this. C.f.:

An additional alternative is to allocate your nodes as an array of these nodes (as a private heap of these nodes) as opposed of obtaining the nodes individually via new.

ian m. wrote:Might putting nodes consecutively be a bad idea? Cache bank conflicts or something? It would be difficult to interleave the tree's children, but perhaps it could be done, particularly for the 80-byte or 64-byte types.

I have arranged the nodes to be flat in-memory. Also, the child that is most likely to be chosen in the traversal (one can tell in-advance) immediately follows its parent, to make maximal use of continuous-access hardware prefetching.

Thanks,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Bear in mind that I do not fully understand the problem at hand...

Have you considered examining the nature of your "key" data, I guess this might be the bound variables, such that you can replace the tree structure with a hash table?

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yeah, unfortunately that won't work: the tree, a BVH, is effectively a binary search tree. The queries and nodes both have geometric meaning, so hashing saliently becomes difficult. FWIW spatial hashing for this problem domain has been tried by others, but it doesn't end up working very well.

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page